Introducing OpenShift on OpenShift with Hosted Control Planes

The goal of this lab is to familiarize attendees with the benefits and features of hosted virtual Red Hat OpenShift clusters deployed to bare metal OpenShift using hosted control planes (HCP). Hosted control planes (the downstream of the HyperShift project) became generally available and fully supported with OpenShift 4.14. This capability allows a central management cluster to host multiple clusters in bare metal or virtualized form. This greatly increases the efficiency of an OpenShift deployment by reducing physical resource costs, cluster management overhead, and the total time to deploy. In addition, this lab explores the advantages of using a centralized management solution for the hosted clusters, which is provided by Red Hat Advanced Cluster Management for Kubernetes (RHACM).

Terminology

- Hosted cluster: An OpenShift Container Platform cluster with its control plane and API endpoint hosted on a management cluster. The hosted cluster includes the control plane and its corresponding data plane.

- Hosted cluster infrastructure: Network, compute, and storage resources that exist in the tenant or end-user cloud account.

- Hosted control plane: An OpenShift Container Platform control plane that runs on the management cluster, which is exposed by the API endpoint of a hosted cluster. The components of a control plane include etcd, the Kubernetes API server, the Kubernetes controller manager, and a VPN.

- Managed cluster: A cluster that the hub cluster manages. This term is specific to the cluster lifecycle that the multicluster engine for Kubernetes Operator manages in Red Hat Advanced Cluster Management. A managed cluster is not the same thing as a management cluster. For more information, see Managed cluster.

- Management, or hosting, cluster: An OpenShift Container Platform cluster where the HyperShift Operator is deployed and where the control planes for hosted clusters are hosted. The management cluster is synonymous with the hosting cluster.

- Management cluster infrastructure: Network, compute, and storage resources of the management cluster.

- Node pool: A resource that contains the compute nodes. The control plane contains node pools. The compute nodes run applications and workloads.

Why use hosted control planes?

With the introduction of OpenShift Virtualization Engine (OVE), Red Hat now provides an OpenShift experience that is tailored for, and targeted at, virtualization-only administrators. Customers who run virtual OpenShift clusters on other hypervisor platforms can move to OVE without changing the way their hosted clusters are subscribed.

Furthermore, with updates to the bare metal OpenShift Kubernetes Engine (OKE), OpenShift Container Platform (OCP), and OpenShift Platform Plus (OPP) offerings, virtual OpenShift clusters deployed to these bare metal clusters inherit subscriptions of the same product type from the bare metal cluster. For customers with substantial virtual OpenShift deployments, this offers a cost-effective way to move their OpenShift footprint away from existing hypervisors while also simplifying the management paradigm and improving resource efficiency.

Distributed Clusters for Disaster Recovery: A single hosted control plane instance deployed to a management or hub cluster could have a number of node pools assigned to the cluster from remote managed locations in disparate data centers, helping to protect against the loss of a cluster in the event of an unplanned disaster or data center outage. Should the worker nodes in one data center become unavailable, the application pods that are non-responsive could be relaunched on additional worker nodes in the cluster that reside in a different location.

Ease of Cluster Administration: Service providers, or groups acting as service providers, can provision entirely new cluster environments rapidly, without additional overhead. New clusters can be made available to end users in a matter of minutes, and taken down once they are no longer needed. Developers may even be able to request clusters on demand to test a specific application in an isolated environment. The physical separation of control planes and worker nodes also allows cluster administrators to update each control plane individually, without having to update that cluster's worker nodes in tandem.

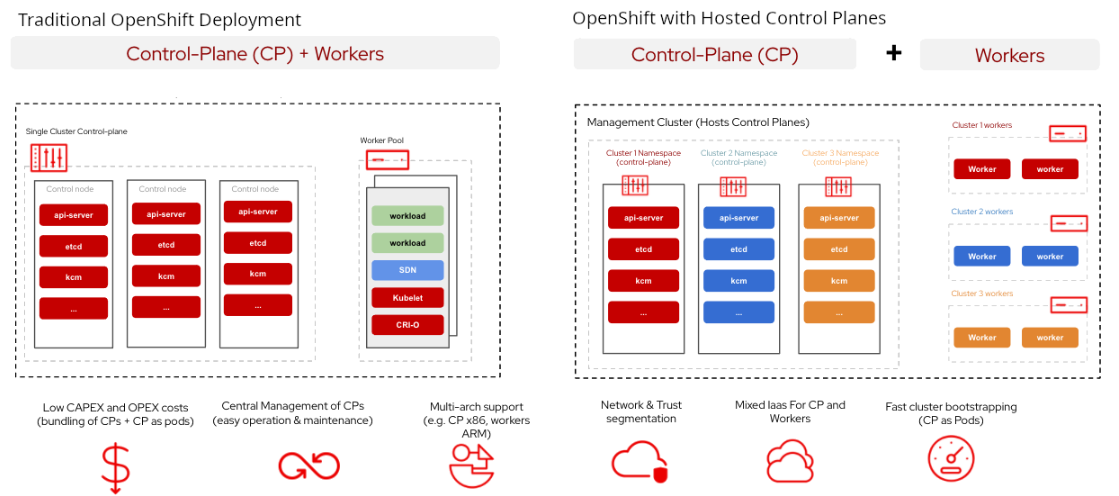

Reduction of Physical Resources: A traditional OpenShift deployment requires a minimum of 3 control plane hosts and 2 data plane hosts in order to be deployed as a highly available and fault-tolerant platform. In an environment where a number of separate clusters are being deployed, it is more cost-effective to deploy only worker nodes for each new cluster and to host each control plane in a centralized data center rather than locally.
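The host-count argument above can be sketched as simple arithmetic. The following is a minimal illustration, not a sizing guide: the only figure taken from this lab is the traditional minimum of 3 control plane hosts and 2 data plane hosts. The function names and the size of the management cluster (5 hosts) are assumptions made for this example, and the sketch ignores the extra capacity the management cluster needs to run the hosted control plane pods.

```python
# Illustrative node-count comparison. Only the "3 control plane + 2 data plane"
# minimum comes from the lab text; every other number is an assumption.

def standalone_hosts(clusters: int, workers_per_cluster: int = 2,
                     control_plane_hosts: int = 3) -> int:
    """Hosts needed when every cluster carries its own control plane."""
    return clusters * (control_plane_hosts + workers_per_cluster)


def hosted_hosts(clusters: int, workers_per_cluster: int = 2,
                 management_cluster_hosts: int = 5) -> int:
    """Hosts needed when control planes run as pods on one management cluster.

    management_cluster_hosts is a hypothetical size for the hosting cluster.
    """
    return management_cluster_hosts + clusters * workers_per_cluster


if __name__ == "__main__":
    for count in (1, 5, 10):
        print(f"{count} clusters: standalone={standalone_hosts(count)}, "
              f"hosted={hosted_hosts(count)}")
```

Under these assumptions a single cluster is cheaper to run standalone, but the hosted model pulls ahead as soon as a few clusters share the same management cluster. When the worker nodes are virtual machines on the management cluster itself, as in this lab, the savings in physical hosts are larger still.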

Secure Multi-Tenancy: In OpenShift, workloads and their responsibilities are often segregated through projects, and access to these projects is governed by user accounts and permissions. While this logical separation of applications exists, the applications still share the same physical system resources as every other application or project. Using hosted control planes to dedicate entire OpenShift clusters, either virtual or physical, to projects or end users makes it possible to separate workloads completely and allows for a truer form of multi-tenancy than is traditionally available in OpenShift.

Secure Network Segregation: Due to the natural decoupling of the infrastructure, hosted control planes allow the control planes themselves to be a part of the network domain of the management cluster, while the worker nodes reside on a different physical network and are a part of that network domain. This arrangement separates management traffic from data plane traffic, and the physical separation keeps deployed applications from accessing the control plane.

Architectural Concepts

A hosted control planes cluster can be deployed to either bare metal nodes or virtual nodes provisioned with OpenShift Virtualization. In this lab we will be focused on deployments of virtual OpenShift clusters provided by OpenShift Virtualization.

A hosted control planes cluster saves on physical resources by reducing the number of nodes that need to be deployed to support the cluster. The hosted cluster control plane services are run in Pods, in a dedicated namespace, on the hosting cluster. This results in only worker nodes being provisioned for the hosted cluster.
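As a rough sketch of how to find those control plane Pods, the helper below derives the dedicated namespace name and builds the matching `oc` command. It assumes the naming pattern commonly seen with HyperShift, where the namespace is the HostedCluster's own namespace joined to its name with a hyphen (for example `clusters-my-hosted-cluster`). That pattern, the function names, and the example cluster name are assumptions for illustration, so verify the actual namespace in your environment.

```python
# Sketch: locate the namespace that holds a hosted cluster's control plane pods.
# The "<namespace>-<name>" pattern is an assumption; confirm it on your cluster.

def control_plane_namespace(hosted_cluster_namespace: str,
                            hosted_cluster_name: str) -> str:
    """Return the assumed namespace of the hosted control plane pods."""
    return f"{hosted_cluster_namespace}-{hosted_cluster_name}"


def list_pods_command(namespace: str) -> list[str]:
    """Build the oc command that lists pods in the given namespace."""
    return ["oc", "get", "pods", "-n", namespace]


if __name__ == "__main__":
    # "clusters" and "my-hosted-cluster" are hypothetical example values.
    ns = control_plane_namespace("clusters", "my-hosted-cluster")
    print(" ".join(list_pods_command(ns)))
```

Running the printed command against the hosting cluster should list Pods such as etcd and the Kubernetes API server for that hosted cluster, if the naming assumption holds.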

The following graphic compares a hosted control planes cluster to a traditional OpenShift cluster:

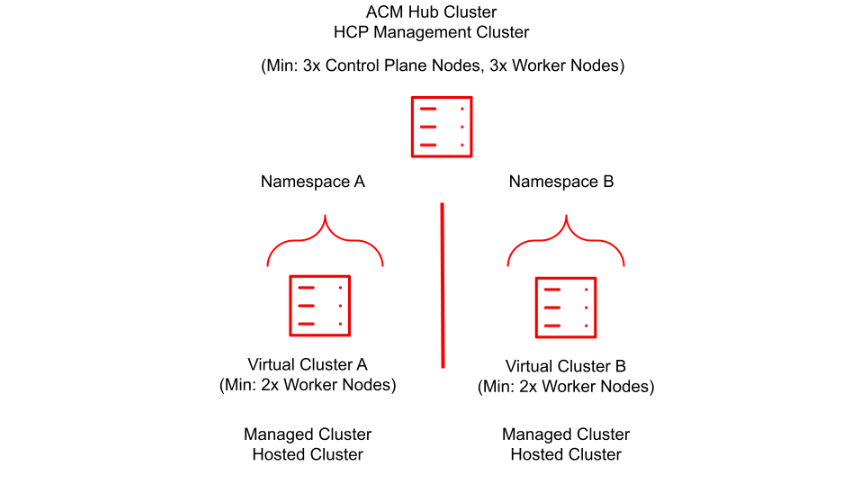

As stated previously, with virtual clusters provided by OpenShift Virtualization, administration teams can use a single centralized cluster with physical nodes to deploy a large number of individual clusters for multi-tenant workloads.

This is an example architecture showing a single hosting cluster, and multiple virtual clusters:

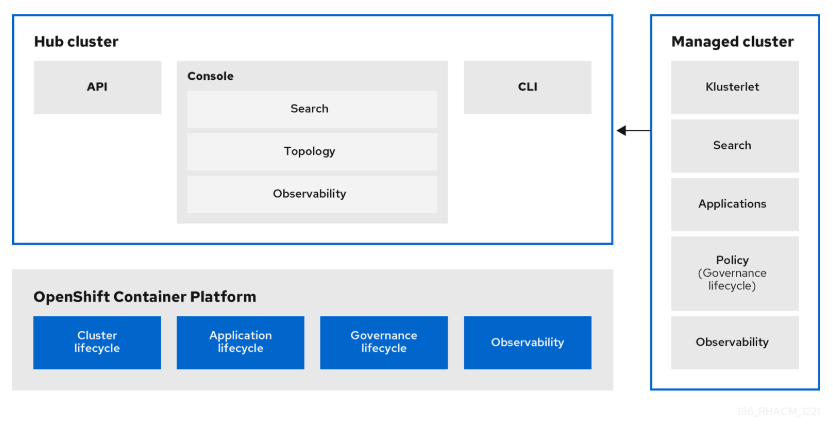

Such fleet management is greatly eased by the deployment of Red Hat Advanced Cluster Management for Kubernetes (RHACM) as a part of the solution.

The following graphic shows how a managed cluster depends on the hub cluster for a number of advanced features:

Lab Summary

These are the four main sections that will be covered in the lab:

Deployment: Using Red Hat Advanced Cluster Management for Kubernetes (RHACM) to deploy a virtual OpenShift on OpenShift cluster using Red Hat OpenShift Virtualization. This section also explores the environment once it’s deployed.

Configuration: Performing operations on the hosting cluster to enact configuration changes on the hosted cluster.

Scaling: NodePools in a hosted control planes environment, like MachineSets in standard clusters, can be configured to autoscale when resources are unavailable to meet application requests. In this module we will deploy an application and scale it up, requiring the cluster to scale up dynamically to meet application needs.

Cluster Upgrades: This module explores centralized fleet management through a walkthrough of the cluster upgrade process using Red Hat Advanced Cluster Management for Kubernetes.
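To make the Scaling module more concrete, the sketch below builds a partial NodePool manifest with autoscaling bounds and prints it as JSON. The field names (`hypershift.openshift.io/v1beta1`, `clusterName`, `autoScaling.min` and `autoScaling.max`, `platform.type`) reflect our understanding of the HyperShift NodePool API and should be checked against the CRD installed on your hosting cluster. The function name, the `-workers` suffix, and the example values are hypothetical. The manifest deliberately omits other fields a real NodePool needs, such as the release image, so it is not meant to be applied as-is.

```python
import json


def nodepool_manifest(cluster_name: str, namespace: str,
                      min_nodes: int, max_nodes: int) -> dict:
    """Build a partial NodePool manifest that autoscales between two bounds.

    Field names are assumptions based on the HyperShift NodePool API;
    verify them against the CRD on your hosting cluster.
    """
    if not 1 <= min_nodes <= max_nodes:
        raise ValueError("expected 1 <= min_nodes <= max_nodes")
    return {
        "apiVersion": "hypershift.openshift.io/v1beta1",
        "kind": "NodePool",
        "metadata": {
            "name": f"{cluster_name}-workers",  # hypothetical naming choice
            "namespace": namespace,
        },
        "spec": {
            "clusterName": cluster_name,
            # Autoscaling bounds are set instead of a fixed replica count.
            "autoScaling": {"min": min_nodes, "max": max_nodes},
            "platform": {"type": "KubeVirt"},
        },
    }


if __name__ == "__main__":
    # "my-hosted-cluster" and "clusters" are example values only.
    manifest = nodepool_manifest("my-hosted-cluster", "clusters", 2, 5)
    print(json.dumps(manifest, indent=2))
```

Setting bounds rather than a fixed replica count is what lets the node pool grow when application requests exceed the available capacity, which is the behavior the Scaling module exercises.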

Requirements for the Lab Environment

- Please read all instructions carefully when carrying out assigned tasks and do not only focus on the images provided.

- If you are stuck and need assistance, please raise your hand and a proctor will see to you as soon as possible.

- Each participant needs to have their own computer with a web browser and internet access.

- A Chromium-based browser is recommended for the best experience.

Credentials for the OpenShift Console

Your OpenShift cluster console is available here: sample_openshift_cluster_console_url

An administrator user has been configured, and login is available with the following credentials:

- User: sample_openshift_cluster_admin_username

- Password: sample_openshift_cluster_admin_password

Bastion Access

A RHEL bastion host is available at sample_bastion_public_hostname, with common utilities pre-installed and OpenShift command line access pre-configured.

For SSH access to the bastion when needed, use the following credentials:

- User: sample_bastion_ssh_user_name

- Password: sample_bastion_ssh_password