Applying RHEL updates to the bootc base images

We looked at how to add new software packages to a bootc host, but what about applying updates to packages in the base image? Using dnf update during a container build is not a good practice, and can lead to inconsistent images based on package visibility at build time.

Red Hat publishes updated base images to the Container Catalog regularly. To understand how to make the best use of them, we need to revisit tagging.

What’s in a name?

Let’s do a quick refresher on what makes up the 'name' of a container image. The official convention for images is <host>/<namespace>/<repository>:<tag>.

| Part | Description |
| --- | --- |
| host | domain name of the registry in use, can include a port number if needed (eg |
| namespace | (optional) usually the username or organization name within the registry, can represent other things depending on the registry service. Not to be confused with Linux namespaces within the kernel. (eg |
| repository | what most folks think of as the 'name' of an image, but technically a space for a collection of images, typically related. These days, this collection is typically just iterations of a single image over time, not totally different but related images (eg |
| tag | (optional) identifier for a specific image in the repository, used to convey some information about the contents of the image, has a default of |
| digest | not part of the naming, but how images and tags in registries are actually tracked via SHA256, can be used to pull specific images directly (eg |

So far, we have been using registry.redhat.io/rhel9/rhel-bootc:9.5 in the FROM line of the Containerfile, so let’s break that down according to our table:

| Part | Value |
| --- | --- |
| host | registry.redhat.io |
| namespace | rhel9 |
| repository | rhel-bootc |
| tag | 9.5 |
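The same breakdown can be done mechanically. The following is a minimal sketch using POSIX parameter expansion; the function name `split_image_ref` is hypothetical, and it assumes a reference that carries all four parts with no registry port and no digest.

```shell
# Split <host>/<namespace>/<repository>:<tag> with POSIX parameter expansion.
# Assumes all four parts are present and the host has no port number.
split_image_ref() {
    ref=$1
    tag=${ref##*:}          # text after the last ':'
    path=${ref%:*}          # everything before the tag
    host=${path%%/*}        # first path component
    rest=${path#*/}         # namespace/repository
    namespace=${rest%%/*}
    repository=${rest#*/}
    printf 'host=%s\nnamespace=%s\nrepository=%s\ntag=%s\n' \
        "$host" "$namespace" "$repository" "$tag"
}

split_image_ref registry.redhat.io/rhel9/rhel-bootc:9.5
```

For the reference used so far, this prints registry.redhat.io, rhel9, rhel-bootc, and 9.5 on four labeled lines.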

The earlier RHEL 9.4 images are also in the same rhel-bootc repository of the rhel9 namespace. This lets us keep the base images organized in a few different ways by using tags.

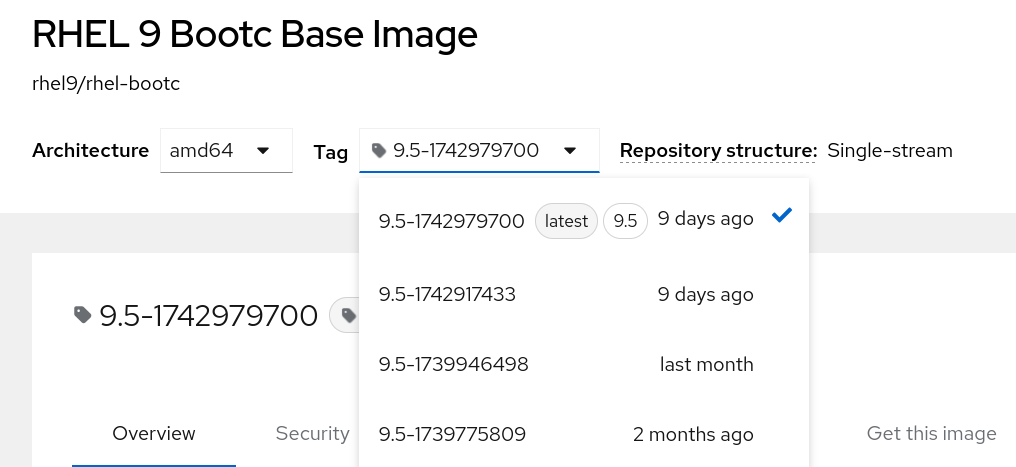

The table below describes the tags associated with the rhel-bootc repository.

| Tag | Meaning |
| --- | --- |
| latest | the most recent minor release build within a major release |
| 9.5 | the most recent build of a particular minor release |
| 9.5-1742979700 | a timestamped build of a minor release |

Those timestamps are in UNIX epoch time, so date -d@1742979700 returns Wed Mar 26 05:01:40 AM EDT 2025.
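To decode the suffix of any timestamped tag, something like the following sketch could be used. The function name `tag_build_date` is hypothetical, and the `-d @SECONDS` form assumes GNU date, as found on RHEL.

```shell
# Convert the epoch suffix of a timestamped tag into a readable UTC date.
# Requires GNU date for the -d @SECONDS form.
tag_build_date() {
    epoch=${1##*-}                      # "9.5-1742979700" -> "1742979700"
    date -u -d "@$epoch" '+%Y-%m-%d %H:%M:%S UTC'
}

tag_build_date 9.5-1742979700
```

This prints 2025-03-26 09:01:40 UTC, which is the same instant as 05:01:40 AM EDT.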

At the time of writing, latest would point to 9.5, the most current minor release of RHEL 9. Both of these also point to 9.5-1742979700, as seen in the screenshot above. This also means that should a new timestamped build be published to the catalog, the 9.5 tag would automatically update to that next new build.

If that new build were a new minor release (eg 9.6), the latest tag would point to that minor.

The updates to base images follow the RHEL lifecycle. Minor releases will receive active updates for 6 months. If you do need to remain on a minor version longer, EUS is available with the standard policies. If required, it would be easiest to begin with an EUS image, rather than change the base image references 6 months later.

Knowing which tags point to which images, and therefore what software is available in your base image, is important.
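As an illustration of how the floating 9.5 tag relates to the timestamped builds, here is a small sketch that picks the newest timestamped build of one minor release from a list of tags. The function name `newest_build` is hypothetical, and the tag list is assumed to arrive on stdin, one tag per line (for example, exported from the catalog by whatever tool you prefer).

```shell
# Pick the newest timestamped build for one minor release from a tag list
# read on stdin (one tag per line). The list itself is assumed input.
newest_build() {
    minor=$1
    awk -F- -v m="$minor" '$1 == m && $2 ~ /^[0-9]+$/' |
        sort -t- -k2,2n |
        tail -n 1
}

printf '%s\n' latest 9.5 9.5-1739946498 9.5-1747115631 9.5-1742979700 |
    newest_build 9.5
```

With the three timestamped tags mentioned in this exercise, the result is 9.5-1747115631.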

Using specific versions of base images

Let’s build a new image using an older tag that still checks out to get an idea of how this works and how you can use it to control updates.

nano Containerfile.base

FROM registry.redhat.io/rhel9/rhel-bootc:9.5-1739946498

RUN dnf install -y httpd

RUN echo "Hello Red Hat" > /var/www/html/index.html

RUN systemctl enable httpd.service

Since we’re using a tag that doesn’t change in the registry, we know that we will always use the same base image no matter the state of the build host. If we had fewer tags or ones that moved more frequently, this is where we could use the SHA256 digest instead of a tag to be specific about image use.
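One way to keep an eye on this is a small check that reports how the FROM line of a Containerfile is pinned. The sketch below is an assumption-laden illustration: the function name `from_pin_kind` and the three labels it prints are hypothetical, and it only looks at the first FROM line.

```shell
# Report whether the first FROM line of a Containerfile uses a digest,
# a timestamped tag (<major>.<minor>-<epoch>), or a floating tag.
# The labels printed here are hypothetical, chosen for this sketch.
from_pin_kind() {
    ref=$(awk 'toupper($1) == "FROM" { print $2; exit }' "$1")
    tag=${ref##*:}
    if printf '%s\n' "$ref" | grep -q '@sha256:[0-9a-f]\{64\}$'; then
        echo digest
    elif printf '%s\n' "$tag" | grep -Eq '^[0-9]+\.[0-9]+-[0-9]+$'; then
        echo timestamped
    else
        echo floating
    fi
}

tmp=$(mktemp)
printf '%s\n' 'FROM registry.redhat.io/rhel9/rhel-bootc:9.5-1739946498' \
    'RUN dnf install -y httpd' > "$tmp"
from_pin_kind "$tmp"
rm -f "$tmp"
```

For the Containerfile.base above this reports timestamped; a reference ending in 9.5 or latest would be reported as floating.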

podman build --file Containerfile.base --tag {registry_hostname}/base-check

You should notice that this build starts by pulling the base image, since it’s different from what we have been using. Since this is just an older version, you will still see shared layers being skipped.

Locally testing builds

In our normal workflow, we’d push this image to the registry and then update a bootc host to see any changes. Since these are, after all, container images, we can inspect them locally. Being able to run certain kinds of tests using a container engine without needing to fully boot an image can be a useful tool, especially when iterating on a build.

Let’s check the version of the kernel that was shipped in this older base image by running a shell in a temporary container.

podman run --rm -i {registry_hostname}/base-check rpm -qa httpd kernel*

The arguments we pass to podman run are:

- --rm → remove the container immediately on exit
- -i → pipe stdin into the container
- {registry_hostname}/base-check → the image we just built to launch the container
- rpm -qa httpd kernel* → the command to read from stdin and run inside the temporary container

kernel-modules-core-5.14.0-503.23.2.el9_5.x86_64
kernel-core-5.14.0-503.23.2.el9_5.x86_64
kernel-modules-5.14.0-503.23.2.el9_5.x86_64
kernel-5.14.0-503.23.2.el9_5.x86_64
httpd-2.4.62-1.el9_5.2.x86_64

You may be tempted to check the kernel version with something like uname -r. Let’s see what happens:

podman run --rm -i {registry_hostname}/base-check uname -r

5.14.0-503.31.1.el9_5.x86_64

Since this is a container, and not a VM, we see the host system’s kernel version, not the one from the RPM list.
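The difference is easy to see when the two values are put side by side. This sketch works on the sample output shown above rather than calling podman; the function name `image_kernel` is hypothetical, and it assumes the package line has the form kernel-<version>-<release>.<arch>.

```shell
# Extract the kernel version-release from `rpm -qa` style output on stdin.
# Assumes a package line of the form kernel-<version>-<release>.<arch>.
image_kernel() {
    sed -n 's/^kernel-\([0-9].*\)$/\1/p' | head -n 1
}

# Sample output copied from the commands above.
rpm_list='kernel-modules-core-5.14.0-503.23.2.el9_5.x86_64
kernel-core-5.14.0-503.23.2.el9_5.x86_64
kernel-modules-5.14.0-503.23.2.el9_5.x86_64
kernel-5.14.0-503.23.2.el9_5.x86_64
httpd-2.4.62-1.el9_5.2.x86_64'

in_image=$(printf '%s\n' "$rpm_list" | image_kernel)
on_host=5.14.0-503.31.1.el9_5.x86_64   # sample `uname -r` output from above

echo "image kernel: $in_image"
echo "host kernel:  $on_host"
[ "$in_image" = "$on_host" ] || echo "uname -r reflects the host, not the image"
```

In a real check, the package list would come from the podman run command above instead of a variable.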

This sort of local testing is often called the 'inner loop' in software development, something not previously available to the design and development of standard operating system builds. For some testing, you need to launch a container fully to get systemd started instead of running it interactively, like checking on the httpd service.

While not every nuance of system behavior can be tested in a container (eg SELinux policies), this can still greatly reduce the amount of time usually needed to test changes. Local VMs, like those used in this lab, can be a very good way to create a full local testing environment for new bootc images.

Updating the base image using tags

To update the base image, we only need to change the tag to the latest timestamp variant (as of the writing of this exercise) and build the image:

nano Containerfile.base

FROM registry.redhat.io/rhel9/rhel-bootc:9.5-1747115631

RUN dnf install -y httpd

RUN echo "Hello Red Hat" > /var/www/html/index.html

RUN systemctl enable httpd.service

podman build --file Containerfile.base --tag {registry_hostname}/base-check

You should notice that this build skips all of the layers when pulling the base image. While it’s a different tag, it’s the same image as the one we’ve used throughout the exercises. Multiple tags can refer to a single image, allowing you to convey meaningful information about an image.

You should also notice that we completely rebuilt the image, since the change in the container definition was at the very first instruction. This means the whole cache is skipped, unlike the previous builds in this lab.

Now let’s check the package versions in this base image using the same commands as before, but with our new image.

podman run --rm -i {registry_hostname}/base-check rpm -qa httpd kernel*

kernel-modules-core-5.14.0-503.40.1.el9_5.x86_64
kernel-core-5.14.0-503.40.1.el9_5.x86_64
kernel-modules-5.14.0-503.40.1.el9_5.x86_64
kernel-5.14.0-503.40.1.el9_5.x86_64
httpd-2.4.62-4.el9.x86_64

There’s a newer kernel provided by the updated base image, but the version of httpd installed is the same. We’re using repositories directly from the Red Hat services, so any packages we add during the container build will be whatever is most recently published. You can also update to a newer minor version by simply changing the tag to match.
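Comparing the two package lists by eye works for five lines, but not for a full image. A minimal sketch of a comparison follows, assuming each list was saved to a file from `rpm -qa`; the function name `changed_packages` is hypothetical, and package names are split from the trailing <version>-<release>.<arch> part of each line.

```shell
# Print packages whose version-release differs between two `rpm -qa` lists.
# Arguments are two files, old list first.
changed_packages() {
    nvr_split='s/^\(.*\)-\([^-]*-[^-]*\)$/\1 \2/'   # "name ver-rel.arch"
    old_split=$(mktemp)
    sed "$nvr_split" "$1" > "$old_split"
    sed "$nvr_split" "$2" | awk '
        NR == FNR { old[$1] = $2; next }
        ($1 in old) && old[$1] != $2 { print $1 ": " old[$1] " -> " $2 }
    ' "$old_split" -
    rm -f "$old_split"
}

old=$(mktemp); new=$(mktemp)
printf '%s\n' kernel-5.14.0-503.23.2.el9_5.x86_64 \
    httpd-2.4.62-1.el9_5.2.x86_64 > "$old"
printf '%s\n' kernel-5.14.0-503.40.1.el9_5.x86_64 \
    httpd-2.4.62-4.el9.x86_64 > "$new"
changed_packages "$old" "$new"
rm -f "$old" "$new"
```

Run against the sample output in this exercise, the sketch reports the kernel moving from 503.23.2 to 503.40.1. It also lists httpd, because the release string in the two captures differs even though the upstream version, 2.4.62, does not.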

Moving to newer versions of RHEL

Applying updates within a single minor version is the image mode analogue to standard patching for package mode hosts. We publish new versions of the base image that include the new packages we’re shipping at the moment. The naming discussion also covered what happens with a new minor release. Being able to move not only between updates to a base image but also to new minor or even major versions makes adopting and certifying new images much easier.

Normally, a new minor or major version of RHEL requires a number of changes along the line to integrate into automation, deliver to developers to test and certify the app, then on to the production deployment workflow. There’s usually a need for parallel systems as well, contributing to sprawl and management burden. Everything you’ve looked at so far with image mode opens a new path: replace the base image in a Containerfile, publish a new image, apply to an existing host, and roll back after testing. Let’s do that now.

Take the Containerfile that has our Apache example and update it to RHEL 9.6.

nano Containerfile

FROM registry.redhat.io/rhel9/rhel-bootc:9.6

RUN dnf install -y httpd

ADD etc/ /etc

RUN <<EORUN

set -euo pipefail

mv /var/www /usr/share/www

sed -i 's-/var/www-/usr/share/www-' /etc/httpd/conf/httpd.conf

EORUN

RUN echo "Hello Red Hat Summit 2025!!" > /usr/share/www/html/index.html

RUN systemctl enable httpd.service

And that’s really it. By changing the base image the FROM line uses, we will now build using the latest minor release. When publishing this for use, we want a new tag that lets everyone know this image has a different version of RHEL inside.

podman build --file Containerfile --tag {registry_hostname}/httpd:9.6

podman push {registry_hostname}/httpd:9.6

Our newly updated image is available for any image mode host now. That’s all it takes to start adopting a new minor RHEL release. From here, we could pick a virtual machine and use the bootc switch command to start testing changes, but the technological changes between 9.5 and 9.6 are fairly small. That’s part of the lifecycle of RHEL. What about something that has a bigger jump?

Testing a new major release

Looking again at the naming convention discussion, you may notice that we use the namespace and repository part of the specification when publishing images. We don’t have users or organizations within the catalog for Red Hat, those are used for partner images. But we can make use of them to separate different major versions of RHEL. You may already be familiar with this from UBI, which has been publishing this way for a while: ubi8/ubi vs ubi9/ubi. The common repository name across namespaces makes it a little easier to understand what’s moving.
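Because only the namespace and the tag change between major versions, the base image reference can be derived from the RHEL version alone. A minimal sketch, assuming the rhel<major>/rhel-bootc layout described above; the function name `bootc_base_image` is hypothetical.

```shell
# Build the rhel-bootc base image reference for a RHEL version, deriving
# the namespace (rhel9, rhel10, ...) from the major version number.
bootc_base_image() {
    version=$1
    major=${version%%.*}               # "10.0" -> "10"
    echo "registry.redhat.io/rhel${major}/rhel-bootc:${version}"
}

for v in 9.5 9.6 10.0; do
    bootc_base_image "$v"
done
```

The loop prints one reference per version, ending with registry.redhat.io/rhel10/rhel-bootc:10.0.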

Edit the Containerfile again, but replace both the tag and the namespace.

FROM registry.redhat.io/rhel10/rhel-bootc:10.0

RUN dnf install -y httpd

ADD etc/ /etc

RUN <<EORUN

set -euo pipefail

mv /var/www /usr/share/www

sed -i 's-/var/www-/usr/share/www-' /etc/httpd/conf/httpd.conf

EORUN

RUN echo "Hello Red Hat Summit 2025!!" > /usr/share/www/html/index.html

RUN systemctl enable httpd.service

We now have an image using RHEL 10, with no other modifications to the rest of the Containerfile. After a build and push, we will have published 3 images that differ only in RHEL version: the most recent 9.5, the recently released 9.6, and the brand new 10.0.

podman build --file Containerfile --tag {registry_hostname}/httpd:10.0

podman push {registry_hostname}/httpd:10.0

With something like testing a new major release, having the ability to test locally and in-place on a development host this quickly is key to faster adoption. Now, this isn’t a replacement for in-place upgrades; we are providing a new image. In an environment with more downstream images and live data, this example is only the starting point. We could start local testing, like at the start of this exercise, but having a built-in rollback means we can pick any host and start live tests.

The virtual machine you have created in the previous exercise should still be running. You can check this with:

virsh --connect qemu:///system list

And the output should list the virtual machine called qcow-vm as running.

Now you can ssh into the virtual machine:

ssh core@qcow-vm

To deploy our new RHEL 10 based image for testing, we’ll use a new command, bootc switch, that tells bootc to track the new tag. We’ll talk more about what bootc switch does and how we can use it in the next exercise.

sudo bootc switch {registry_hostname}/httpd:10.0

layers already present: 1; layers needed: 68 (721.0 MB)
Fetching layers ████████████████████ 68/68
└ Fetching ████████████████████ 441 B/441 B (0 B/s) layer 3da3986d7339742f99b81
Fetched layers: 687.60 MiB in 40 seconds (17.11 MiB/s)
⠒ Deploying
Deploying: done (4 seconds)
Pruned images: 0 (layers: 0, objsize: 39.1 kB)
Queued for next boot: node.545jj.gcp.redhatworkshops.io/httpd:10.0
Version: 10.20250116.0
Digest: sha256:19db07016bae2c6693337888474d54aa945599d1f9b991cb9d794e6daf1558e7

And then reboot to activate the new deployment.

After a moment you can log back into the virtual machine:

ssh core@qcow-vm

And check out the version of RHEL running:

cat /etc/os-release

NAME="Red Hat Enterprise Linux"
VERSION="10.20250116.0.0 (Coughlan)"
ID="rhel"
ID_LIKE="centos fedora"
VERSION_ID="10.0"
PLATFORM_ID="platform:el10"
PRETTY_NAME="Red Hat Enterprise Linux 10.20250116.0.0 Beta (Coughlan)"
ANSI_COLOR="0;31"
LOGO="fedora-logo-icon"
CPE_NAME="cpe:/o:redhat:enterprise_linux:10::baseos"
HOME_URL="https://www.redhat.com/"
VENDOR_NAME="Red Hat"
VENDOR_URL="https://www.redhat.com/"
DOCUMENTATION_URL="https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/10"
BUG_REPORT_URL="https://issues.redhat.com/"
REDHAT_BUGZILLA_PRODUCT="Red Hat Enterprise Linux 10"
REDHAT_BUGZILLA_PRODUCT_VERSION=10.0
REDHAT_SUPPORT_PRODUCT="Red Hat Enterprise Linux"
REDHAT_SUPPORT_PRODUCT_VERSION="10.0"
OSTREE_VERSION='10.20250116.0'
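If this check needs to be repeated on several hosts, it can be scripted instead of read by eye. The following is a rough sketch, relying on os-release being written as shell-compatible variable assignments; the function name `os_major_is` is hypothetical, and the optional file argument exists only so the check can be tried against a copy.

```shell
# Check that an os-release file reports the expected major version.
# The file path is a parameter so the check can run against a copy.
os_major_is() {
    expected=$1
    file=${2:-/etc/os-release}
    # Source in a subshell so the os-release variables do not leak out.
    actual=$( . "$file" && echo "${VERSION_ID%%.*}" )
    [ "$actual" = "$expected" ]
}

if os_major_is 10; then
    echo "host reports major version 10"
else
    echo "host does not report major version 10"
fi
```

On the virtual machine after the switch and reboot, VERSION_ID is 10.0, so the first branch would be taken.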

And check that our httpd service is working:

systemctl status httpd.service --no-pager

We’ve confirmed it’s RHEL 10, so now we can start our acceptance testing. Do our services work like we expect? Is the application throwing any errors? Were any deprecated features, like teamed NICs, being used? And if we run into a major error right at the start, we can simply roll back the host.

Before proceeding, make sure you have logged out of the virtual machine:

logout

The prompt should look like `[lab-user@bastion ~]$ ` before continuing.

Making use of the tags in the catalog provides you with more options to control and trace your base images. To do the same with the packages you install on top of the base, you can use something like Satellite to control the visibility of new packages at build time. This combination can eliminate any drift between base images and installed packages from repositories.

You could routinely check the catalog for updates on some set schedule that works for your operational needs. This could be tedious across a large number of standard builds and could be automated. You could also take advantage of a new set of tools, not usually available for standard operating environment build and design. As image mode uses standard container methods, you can use other standard container tools like those that drive current gitops pipelines. This opens the door to entirely different ways of automating the building, deploying, and maintaining of your standard operating environments and the application images that use them. If you’d like to read more about a gitops flow, we have a blog that walks through a simple set up on GitHub with an accompanying template.
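As a small illustration of what such automation might do once it has found a newer timestamped build, the sketch below rewrites the tag on the FROM line of a Containerfile. The function name `set_base_tag` is hypothetical, and it assumes a single FROM line with a tag, no registry port, and GNU sed for the -i option.

```shell
# Rewrite the tag on the FROM line of a Containerfile to a new value.
# Assumes one FROM line with a tag and no registry port; uses GNU sed -i.
set_base_tag() {
    file=$1
    new_tag=$2
    sed -i "s|^\(FROM [^:]*\):.*\$|\1:${new_tag}|" "$file"
}

tmp=$(mktemp)
printf '%s\n' 'FROM registry.redhat.io/rhel9/rhel-bootc:9.5-1739946498' \
    'RUN dnf install -y httpd' > "$tmp"
set_base_tag "$tmp" 9.5-1747115631
head -n 1 "$tmp"
rm -f "$tmp"
```

A pipeline could commit the changed file and let the normal build and push steps from this exercise take over from there.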