Using Red Hat AI Inference Server

In this lab, you will be using Red Hat AI Inference Server, running in a container on RHEL 9.5 and serving the gemma-3-1b-it-quantized.w8a8 model.

In order to get the inference server running you would need to pull its image from the registry and then create and run a container with that image.

To save you time, the image that will be used in the container has been pre-pulled and is already available in your RHEL host. The RHEL server has, by default, an NVIDIA L4 GPU with 24Gb of memory. As we said in the introduction, Red Hat AI Inference Server can use many other accelerators. The RHAIIS container has been created and it is already running on the host.

Monitor GPU utilization

-

Check that the RHAIIS systemd service is running, start by working in the top terminal.

sudo systemctl status rhaiisSample Output● rhaiis.service - RHAIIS Service Loaded: loaded (/etc/systemd/system/rhaiis.service; enabled; preset: disabled) Active: active (running) since Thu 2025-06-26 18:57:43 UTC; 3s ago Main PID: 14418 (podman) Tasks: 11 (limit: 98873) Memory: 35.8M CPU: 92ms CGroup: /system.slice/rhaiis.service ├─14418 /usr/bin/podman run --rm -it --device nvidia.com/gpu=all -p 8000:8000 --ipc=host --env HUGGING_FACE_HUB_TOKEN=hf_random_token> └─14432 catatonit -P -

Query its OpenAPI endpoint for the model list

curl -s http://localhost:8000/v1/models | jqSample Output{ "object": "list", "data": [ { "id": "RedHatAI/gemma-3-1b-it-quantized.w8a8", "object": "model", "created": 1750964567, "owned_by": "vllm", "root": "RedHatAI/gemma-3-1b-it-quantized.w8a8", "parent": null, "max_model_len": 8192, "permission": [ { "id": "modelperm-9ff130086afd4fba87417239e10c36f3", "object": "model_permission", "created": 1750964567, "allow_create_engine": false, "allow_sampling": true, "allow_logprobs": true, "allow_search_indices": false, "allow_view": true, "allow_fine_tuning": false, "organization": "*", "group": null, "is_blocking": false } ] } ] }We can see that the model

RedHatAI/gemma-3-1b-it-quantized.w8a8is available. -

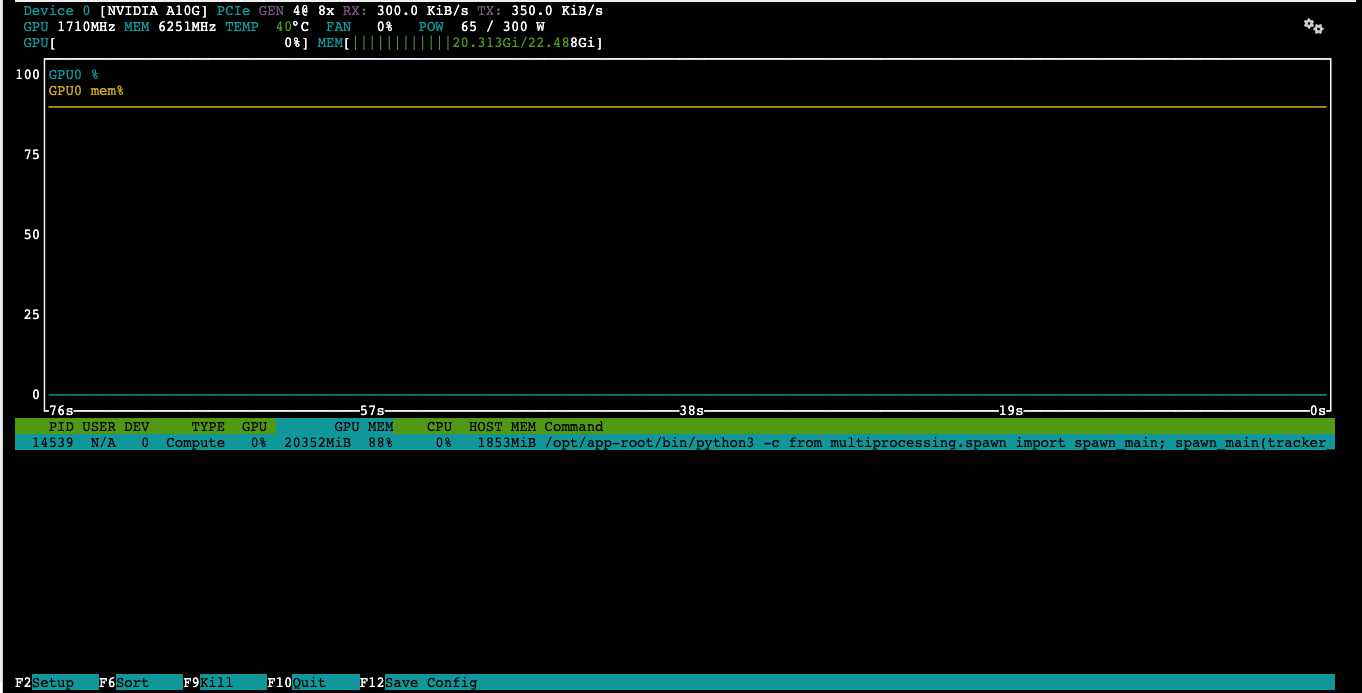

Check the GPU utilization by using the nvtop command in the bottom terminal. You can Exit at anytime by using either the q or kdb:[Esc] key.

nvtop

The ouput may not match the above and will vary depending on the GPU utilization, the GPU type, and the model being used.

Query Red Hat AI Inference Server

-

In the top terminal, submit this query using the

curlcommand. The request is long enough that the GPU will be fully utilized for a short while.curl -s -X POST http://localhost:8000/v1/chat/completions \ -H "Content-Type: application/json" \ -d '{ "model": "RedHatAI/gemma-3-1b-it-quantized.w8a8", "messages": [ { "role": "user", "content": "Write me 5 to 10 paragraphs about RHEL" } ], "temperature": 0.7, "max_tokens": 1500 }' | jqSample Output{ "id": "chatcmpl-91897da3596640b68d5bf581fc9da4c4", "object": "chat.completion", "created": 1750965146, "model": "RedHatAI/gemma-3-1b-it-quantized.w8a8", "choices": [ { "index": 0, "message": { "role": "assistant", "reasoning_content": null, "content": " .... .... .... .... ....", "tool_calls": [] }, "logprobs": null, "finish_reason": "stop", "stop_reason": 106 } ], "usage": { "prompt_tokens": 21, "total_tokens": 732, "completion_tokens": 711, "prompt_tokens_details": null }, "prompt_logprobs": null }Look at the termial where the GPU monitor is running and you will see how its resources are being used until the response is displayed. At the end of the response, you will see the usage data.

Try a few more queries by adjusting the content in the API call above and see how the GPU resources are used.

Using OpenAI API

Now, you will use OpenAI API to query Red Hat AI Inference Server. This is really useful if we want applications to be able to communicate with it. OpenAI API has become a standard as it supports stateful interactions, function calling, and integration with external tools and data, making it a powerful resource for building intelligent applications.

-

We will create a Python script that will submit the query to the Red Hat AI Inference Server. But before we need to install the OpenAI library.

pip install openaiSample OutputDefaulting to user installation because normal site-packages is not writeable Collecting openai Downloading openai-1.92.1-py3-none-any.whl (753 kB) |████████████████████████████████| 753 kB 19.2 MB/s .... <TRUNCATED> .... Installing collected packages: typing-extensions, sniffio, h11, exceptiongroup, typing-inspection, pydantic-core, httpcore, anyio, annotated-types, tqdm, pydantic, jiter, httpx, distro, openai Successfully installed annotated-types-0.7.0 anyio-4.9.0 distro-1.9.0 exceptiongroup-1.3.0 h11-0.16.0 httpcore-1.0.9 httpx-0.28.1 jiter-0.10.0 openai-1.92.1 pydantic-2.11.7 pydantic-core-2.33.2 sniffio-1.3.1 tqdm-4.67.1 typing-extensions-4.14.0 typing-inspection-0.4.1 -

Create a Python script that will submit the query to the Red Hat AI Inference Server.

As you can see in the simple script below, it is creating a client that asks our model why Red Hat AI Inference Server is good for RHEL. In here we already have a dummy api_key configured.

cat << 'EOF' > api.py from openai import OpenAI api_key = "llamastack" model = "RedHatAI/gemma-3-1b-it-quantized.w8a8" base_url = "http://localhost:8000/v1/" client = OpenAI( base_url=base_url, api_key=api_key, ) response = client.chat.completions.create( model=model, messages=[ {"role": "system", "content": "You are a helpful assistant."}, {"role": "user", "content": "Why is Red Hat AI Inference Server a great fit for RHEL?"} ] ) print(response.choices[0].message.content) EOF -

Run the python script using the following command

python api.pyThe output is omitted as there will a degree of non-determinisim in it, however expect a lengthy response on topic.

Once again, you can see in the GPU monitor how its resources are being utilized until the response is returned.

Try a few more queries by adjusing the content in the Python script or the curl command to see how the GPU resources are used and the accuracy of the responses.

Conclusion

In this module, we’ve explored the Red Hat AI Inference Server (RHAIIS) and demonstrated its powerful capabilities as a production-ready LLM runtime. RHAIIS provides a robust, scalable solution for deploying and serving large language models in enterprise environments.

Key highlights of RHAIIS include:

-

Industry Standard OpenAI API Compatibility: RHAIIS implements the OpenAI API specification, making it easy to integrate with existing applications and tools that expect OpenAI-compatible endpoints. This compatibility reduces migration effort and allows for seamless adoption.

-

High Performance: The server efficiently utilizes GPU resources, providing fast inference times for Large Language Models.

-

Enterprise Ready: Built for Red Hat Enterprise Linux (RHEL), Red Hat OpenShift, and 3rd Party Platforms, RHAIIS offers the reliability, security, and support that enterprise environments require.

-

Flexible Model Support: RHAIIS supports various model formats and can serve different types of language models, making it versatile for different use cases.

-

Easy Integration: Developers can consume the RHAIIS API endpoint using any framework and language that supports industry standard OpenAI API.

The combination of OpenAI API compatibility, enterprise-grade reliability, and high performance makes RHAIIS an excellent choice for organizations looking to deploy AI inference capabilities on their RHEL, RHEL AI, OpenShift AI, and 3rd Party Platforms infrastructure. Its ability to serve models efficiently while maintaining compatibility with industry standards positions it as a compelling solution for modern AI workloads.