Module 1: Getting Started

In this Module, we will deploy a Llama-Stack Server, a powerful platform designed to simplify and streamline your development workflow.

We will take you through the process of setting up our first Llama-Stack Server instance, leveraging the Ollama Local LLM server for seamless local development. By the end of this segment, you will gain hands-on experience with the basics of Llama-Stack Server and be equipped to tackle more complex tasks in subsequent lab segments.

Throughout this Module, we will cover essential topics such as:

- Setting up the Ollama Local LLM server
- Configuring the Llama-Stack Server instance
- Exploring core features and functionality

Let’s dive into the world of Llama-Stack Server and get started with our first instance!

Local LLM Development with Ollama

In this lab, you will be setting up your own local Large Language Model (LLM) server using Ollama. This approach allows you to experiment with AI coding assistance without relying on external cloud services, giving you full control over your AI environment.

Ollama provides a simple way to run various open-source large language models on your local machine. To save you time, we have already installed the Ollama server for you and downloaded a few models.

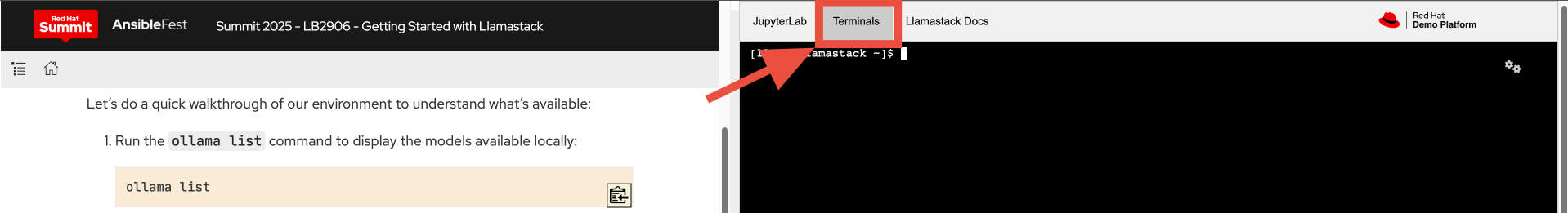

Let’s do a quick walkthrough of our environment to understand what’s available. Switch to the Terminals Tab, selectable on the top right of your Interface.

- Run the ollama list command to display the models available locally:

      ollama list

  You should see a list of models that are already downloaded and available for use.

      [llama@llamastack ~]$ ollama list
      NAME             ID              SIZE      MODIFIED
      granite3.2:8b    9bcb3335083f    4.9 GB    22 hours ago

- Let’s pull down the llama3.2:3b-instruct-fp16 model with the ollama pull command:

      ollama pull llama3.2:3b-instruct-fp16

  You should see the model being pulled down, which might take a moment:

      [llama@llamastack ~]$ ollama pull llama3.2:3b-instruct-fp16
      pulling manifest
      pulling e2f46f5b501c... 100% ▕████████████████▏ 6.4 GB
      pulling 966de95ca8a6... 100% ▕████████████████▏ 1.4 KB
      pulling fcc5a6bec9da... 100% ▕████████████████▏ 7.7 KB
      pulling a70ff7e570d9... 100% ▕████████████████▏ 6.0 KB
      pulling 56bb8bd477a5... 100% ▕████████████████▏  96 B
      pulling 1bc315994ceb... 100% ▕████████████████▏ 558 B
      verifying sha256 digest
      writing manifest
      success

- Running the ollama run llama3.2:3b-instruct-fp16 command lets us interact with our LLM on the command line. The --keepalive -1m option keeps the model loaded indefinitely, which is important because we plan to use this model throughout the lab.

      ollama run llama3.2:3b-instruct-fp16 --keepalive -1m

- Ask the model a question or two, then type /bye or press CTRL+D to exit the chat. Exiting the chat session will not stop your model, and at any time you can confirm which models are running via ollama ps.
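The same checks can be scripted. The sketch below is a minimal example, assuming the Ollama server is reachable at its default address http://localhost:11434 and exposes its REST endpoints /api/tags (local models, like ollama list), /api/ps (loaded models, like ollama ps) and /api/generate with a keep_alive field. The helper names are illustrative and not part of any Ollama library.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default address


def fetch_json(path: str, base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """GET a JSON document from the Ollama REST API (needs a running server)."""
    with urllib.request.urlopen(f"{base_url}{path}", timeout=timeout) as response:
        return json.load(response)


def model_names(payload: dict) -> list[str]:
    """Return the model names from an /api/tags or /api/ps response."""
    return [entry["name"] for entry in payload.get("models", [])]


def keep_loaded_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a request that loads a model without an unload timer."""
    body = {"model": model, "keep_alive": -1}  # negative keep_alive: stay loaded
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def report() -> None:
    """Print local and loaded models; call this only when Ollama is running."""
    print("available:", model_names(fetch_json("/api/tags")))
    print("loaded:   ", model_names(fetch_json("/api/ps")))
```

On the lab machine, calling report() should list the same models that ollama list and ollama ps show. Nothing is sent over the network until report() or urllib.request.urlopen(keep_loaded_request(...)) is called.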

Setting up your first Llama Stack Server

- Let’s create some variables that will make the install easier. You can hard-code these values in your commands, but variables make them easy to remember and change when you create lots of these in the future:

      export LLAMA_STACK_MODEL="meta-llama/Llama-3.2-3B-Instruct"   # (1)
      export LLAMA_STACK_PORT=8321   # (2)
      export LLAMA_STACK_SERVER=http://localhost:$LLAMA_STACK_PORT   # (3)
      export LLAMA_SERVER_CONTAINER_IMAGE="docker.io/llamastack/distribution-ollama:0.2.7"   # (4)
      export CONTAINER_NAME="llamastack-ollama"   # (5)
      export OLLAMA_PORT=11434   # (6)

  - (1) LLAMA_STACK_MODEL: the inference model, that is, the model we will talk to. Note that Meta’s naming is different from Ollama’s (llama3.2:3b-instruct-fp16).
  - (2) LLAMA_STACK_PORT: the TCP port the Llama Stack server listens on.
  - (3) LLAMA_STACK_SERVER: the Llama Stack Server API endpoint, where clients and apps connect.
  - (4) LLAMA_SERVER_CONTAINER_IMAGE: the container image, which identifies the Llama Stack version, including the type of LLM server (ollama).
  - (5) CONTAINER_NAME: the container name. Multiple Llama Stack servers exist for different LLM runtimes (ollama, vllm, etc.).
  - (6) OLLAMA_PORT: the TCP port the Ollama server listens on.

- Run the container using the podman run command (if you are a Docker user, just use docker run):

      podman run -d \
        --name $CONTAINER_NAME \
        --network=host \
        $LLAMA_SERVER_CONTAINER_IMAGE \
        --port $LLAMA_STACK_PORT \
        --env INFERENCE_MODEL="$LLAMA_STACK_MODEL" \
        --env OLLAMA_URL=http://localhost:$OLLAMA_PORT

- Let’s watch the container load and confirm that it is running successfully:

      podman logs -f $CONTAINER_NAME

  Expect to see output similar to the text below once the container is running, which indicates that it is listening on port 8321:

      INFO 2025-04-01 22:13:16,340 llama_stack.providers.remote.inference.ollama.ollama:75 inference: checking connectivity to Ollama at `http://localhost:11434`...
      INFO 2025-04-01 22:13:20,120 llama_stack.providers.remote.inference.ollama.ollama:294 inference: Pulling embedding model `all-minilm:latest` if necessary...
      INFO 2025-04-01 22:13:26,550 __main__:478 server: Listening on ['::', '0.0.0.0']:8321
      INFO: Started server process [1]
      INFO: Waiting for application startup.
      INFO 2025-04-01 22:13:26,567 __main__:148 server: Starting up
      INFO: Application startup complete.
      INFO: Uvicorn running on http://['::', '0.0.0.0']:8321 (Press CTRL+C to quit)
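To see how the exported variables feed into the podman run command above, here is a small sketch that assembles the same argument list from the environment. The function name build_run_command and the DEFAULTS table are illustrative; the default values are copied from the export statements in this module. The script only prints the command and does not start a container.

```python
import os
import shlex
from typing import Mapping

# Defaults copied from the export statements in this module.
DEFAULTS = {
    "LLAMA_STACK_MODEL": "meta-llama/Llama-3.2-3B-Instruct",
    "LLAMA_STACK_PORT": "8321",
    "LLAMA_SERVER_CONTAINER_IMAGE": "docker.io/llamastack/distribution-ollama:0.2.7",
    "CONTAINER_NAME": "llamastack-ollama",
    "OLLAMA_PORT": "11434",
}


def build_run_command(env: Mapping[str, str], runtime: str = "podman") -> list[str]:
    """Assemble the container command, falling back to the lab defaults."""
    cfg = {key: env.get(key, default) for key, default in DEFAULTS.items()}
    return [
        runtime, "run", "-d",
        "--name", cfg["CONTAINER_NAME"],
        "--network=host",
        cfg["LLAMA_SERVER_CONTAINER_IMAGE"],
        # Everything after the image name is passed to the Llama Stack server.
        "--port", cfg["LLAMA_STACK_PORT"],
        "--env", f"INFERENCE_MODEL={cfg['LLAMA_STACK_MODEL']}",
        "--env", f"OLLAMA_URL=http://localhost:{cfg['OLLAMA_PORT']}",
    ]


if __name__ == "__main__":
    # Print the command for review; nothing is executed here.
    print(shlex.join(build_run_command(os.environ)))
```

Keeping the values in one table makes it easy to stand up a second server later, for example with a different LLAMA_STACK_PORT and CONTAINER_NAME, without retyping the whole command.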

Command line fun with Llama-stack Server (OPTIONAL)

If you want to try some example commands on the command line, follow along with the instructions below. If you would rather get straight into the code, you can skip this part, as it is not required for the rest of the lab.

|

Switch to the Terminal window for this section, as any long-running commands will persist via its |

- Let’s start by installing the llama-stack-client:

      source ~/venv/bin/activate
      pip install --upgrade pip
      pip install llama-stack-client==0.2.7

- Point your llama-stack-client at your local Llama Stack server, using --endpoint $LLAMA_STACK_SERVER if LLAMA_STACK_SERVER is defined, or --endpoint http://localhost:8321 if not:

      llama-stack-client configure --endpoint http://localhost:8321

  Hit Return when prompted for an API key.

- List the models that are currently served by the Llama Stack server:

      llama-stack-client models list

  With a single command you can also add new ones, like this:

      llama-stack-client models register --provider-id ollama --provider-model-id llama3.2-vision:11b llama3.2-vision:11b

- You can talk directly to the model to see that things are working:

      llama-stack-client \
        inference chat-completion \
        --message "Write me a small poem why llamas are better than bees without knees"

- You can also list the different services available on the server, such as vector_dbs, toolgroups, shields, datasets, providers, and many others:

      llama-stack-client --help

- Now that the Llama Stack server is running, you can also engage with it through code, API access, simple curl commands, and more:

      curl -sS $LLAMA_STACK_SERVER/v1/inference/chat-completion \
        -H "Content-Type: application/json" \
        -H "Authorization: Bearer $API_KEY" \
        -d "{
          \"model_id\": \"$LLAMA_STACK_MODEL\",
          \"messages\": [{\"role\": \"user\", \"content\": \"Write me a small poem why llamas are better than bees without knees?\"}],
          \"temperature\": 0.0
        }" | jq -r '.completion_message | select(.role == "assistant") | .content'

- You can also pull specific fields out of a structured response:

      curl -sS $LLAMA_STACK_SERVER/v1/models -H "Content-Type: application/json" | jq -r '.data[].identifier'
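The two curl calls above translate fairly directly into Python. The sketch below uses only the standard library and mirrors the request body and the jq filters from the curl examples. The function names are illustrative, and it assumes the server accepts requests without an API key, as in this lab where the key prompt was left empty. It is not the llama-stack-client API, which later modules may use instead.

```python
import json
import os
import urllib.request
from typing import Optional

SERVER = os.environ.get("LLAMA_STACK_SERVER", "http://localhost:8321")
MODEL = os.environ.get("LLAMA_STACK_MODEL", "meta-llama/Llama-3.2-3B-Instruct")


def chat_request(prompt: str, model_id: str = MODEL, server: str = SERVER) -> urllib.request.Request:
    """Build the same POST the curl example sends (the request is not sent yet)."""
    body = {
        "model_id": model_id,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.0,
    }
    return urllib.request.Request(
        f"{server}/v1/inference/chat-completion",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def assistant_text(response: dict) -> Optional[str]:
    """Mirror the jq filter: the content of the assistant's completion message."""
    message = response.get("completion_message", {})
    return message.get("content") if message.get("role") == "assistant" else None


def model_identifiers(response: dict) -> list[str]:
    """Mirror jq -r '.data[].identifier' for a /v1/models response."""
    return [model["identifier"] for model in response.get("data", [])]


def send(request, timeout: float = 120.0) -> dict:
    """Send a request (or open a URL) and decode the JSON reply; needs the running server."""
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return json.load(reply)
```

With the server from this module running, print(assistant_text(send(chat_request("Why are llamas better than bees?")))) should print the model's reply, and model_identifiers(send(f"{SERVER}/v1/models")) should return the registered model identifiers.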

Optional: Llama Stack Playground

A Web UI, Llama Stack Playground, has been included with your lab. It lets you chat with your LLM graphically and, as you go through the lab, interact with both the Llama Stack RAG and Agentic components.

- In one of your terminals, set up the virtual environment (venv) with the Streamlit Python dependencies.

  Streamlit is an open-source Python framework that allows data scientists, AI developers, and others to quickly build and deploy interactive web applications and dashboards with minimal front-end experience.

      pip install -r ~/lab/assets/ui/requirements.txt

  Sample output:

      Collecting llama-stack>=0.2.1 (from -r /home/llama/lab/assets/ui/requirements.txt (line 1))
        Obtaining dependency information for llama-stack>=0.2.1 from https://files.pythonhosted.org/packages/c1/7f/3caf93be63e96602225ef84afaf7d13bdf1758b782e6f58000aab8ff52d9/llama_stack-0.2.7-py3-none-any.whl.metadata
        Downloading llama_stack-0.2.7-py3-none-any.whl.metadata (18 kB)
      Requirement already satisfied: llama-stack-client>=0.2.1 in ./venv/lib64/python3.12/site-packages (from -r /home/llama/lab/assets/ui/requirements.txt (line 2)) (0.2.7)
      ...
      Successfully uninstalled packaging-25.0
      Successfully installed altair-5.5.0 blinker-1.9.0 blobfile-3.0.0 cachetools-5.5.2 durationpy-0.10 filelock-3.18.0 fire-0.7.0 fsspec-2025.3.2 gitdb-4.0.12 gitpython-3.1.44 google-auth-2.40.1 huggingface-hub-0.31.4 jiter-0.10.0 kubernetes-32.0.1 llama-stack-0.2.7 lxml-5.4.0 narwhals-1.40.0 oauthlib-3.2.2 openai-1.79.0 packaging-24.2 pillow-11.2.1 protobuf-6.31.0 pyarrow-20.0.0 pyasn1-0.6.1 pyasn1-modules-0.4.2 pycryptodomex-3.23.0 pydeck-0.9.1 regex-2024.11.6 requests-oauthlib-2.0.0 rsa-4.9.1 smmap-5.0.2 streamlit-1.45.1 streamlit-option-menu-0.4.0 tenacity-9.1.2 tiktoken-0.9.0 toml-0.10.2 watchdog-6.0.0

- Start the Llama Stack Playground via streamlit. We’ll run it in the background:

      nohup streamlit run ~/lab/assets/ui/app.py &

- Now either open the rightmost tab, Llama Stack Playground (after you deploy it), and reload it, or open a new tab in your web browser.

As you progress through the lab, more and more of the Llama Stack Playground will become available to you for exploration. For example, after Module 3 you’ll be able to explore the RAG functionality.
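Because the Playground runs in the background, it can be useful to confirm that it has actually started before reloading the tab. The following is a minimal sketch of a TCP port check. The function name port_is_open is illustrative, and port 8501 in the usage note is an assumption based on Streamlit's default port, since this lab does not state which port the Playground uses.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

For example, port_is_open("localhost", 8501) checks the assumed Streamlit port, and port_is_open("localhost", 8321) checks the Llama Stack server port used in this module. If the check fails, the nohup.out file written by nohup is a good place to look for startup errors.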

Lab Summary: Getting Familiar with Llama Stack Basics

In this initial lab module, you took your first steps with the Llama Stack framework, exploring fundamental concepts for running Large Language Models (LLMs) and setting up Llama Stack.

Through practical exercises, you gained experience in:

- Pulling and running an LLM with Ollama: Working with the Ollama LLM runtime.
- Initializing the Llama Stack Server: Connecting your Llama Stack Server to the LLM runtime.
- Initializing the Llama Stack Client: Connecting your Llama Stack Client to the Llama Stack Server.
- Performing basic chat completion: Sending prompts to an LLM and receiving responses.

This module provided a foundational understanding of interacting with LLMs via Llama Stack: setting up both the Llama Stack Server and Client, and running basic inference.