Exploring the Lab

The lab is designed to be interactive and hands-on, allowing you to explore the capabilities of LlamaStack and OpenShift AI in a practical setting. The lab environment is pre-configured with all necessary components, so you can focus on learning and experimentation without worrying about setup or configuration issues.

OpenShift Console

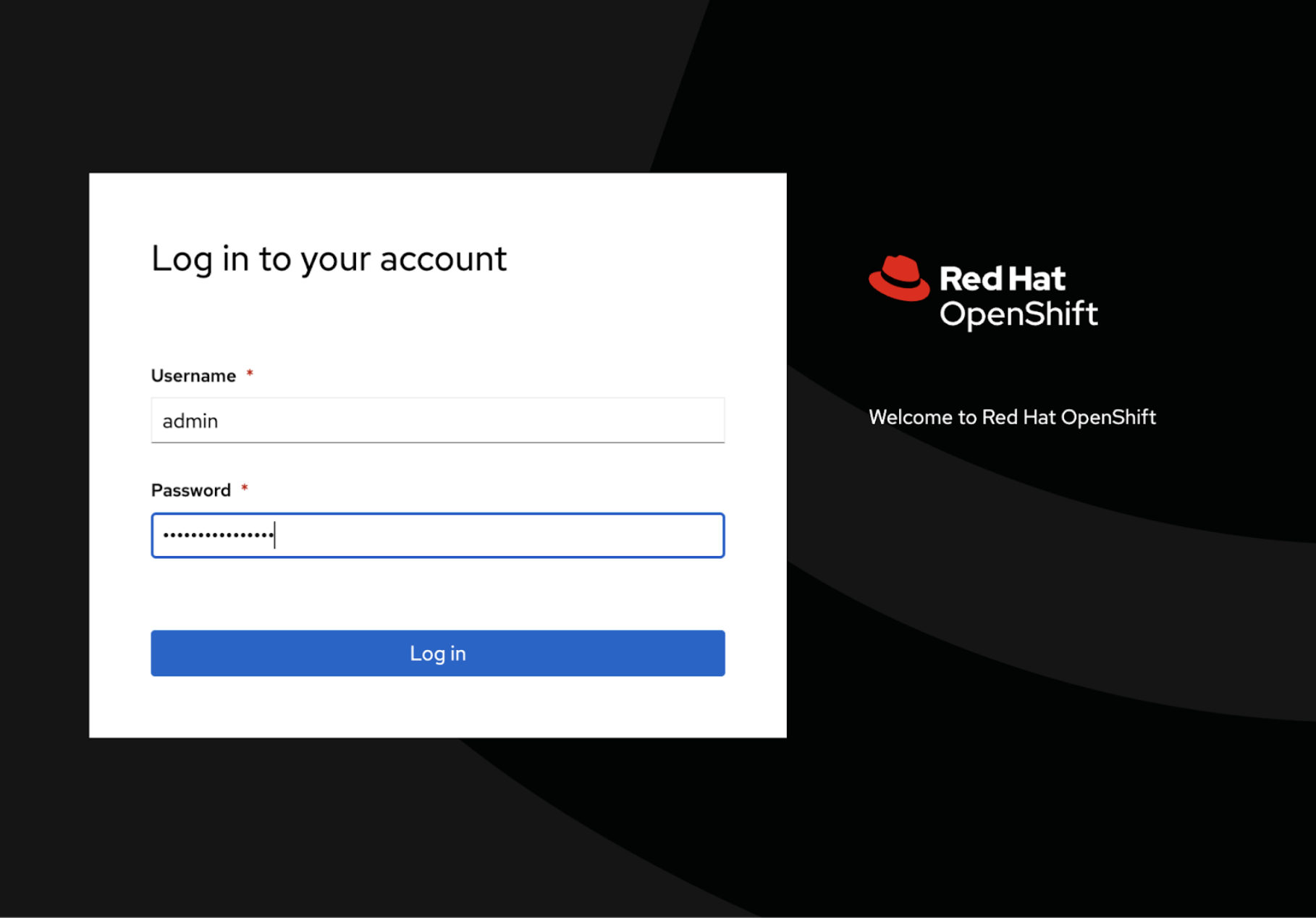

Log in to the cluster: Navigate to the OpenShift cluster and log in with the provided credentials.

The URL for the OpenShift console is: {openshift_console_url}

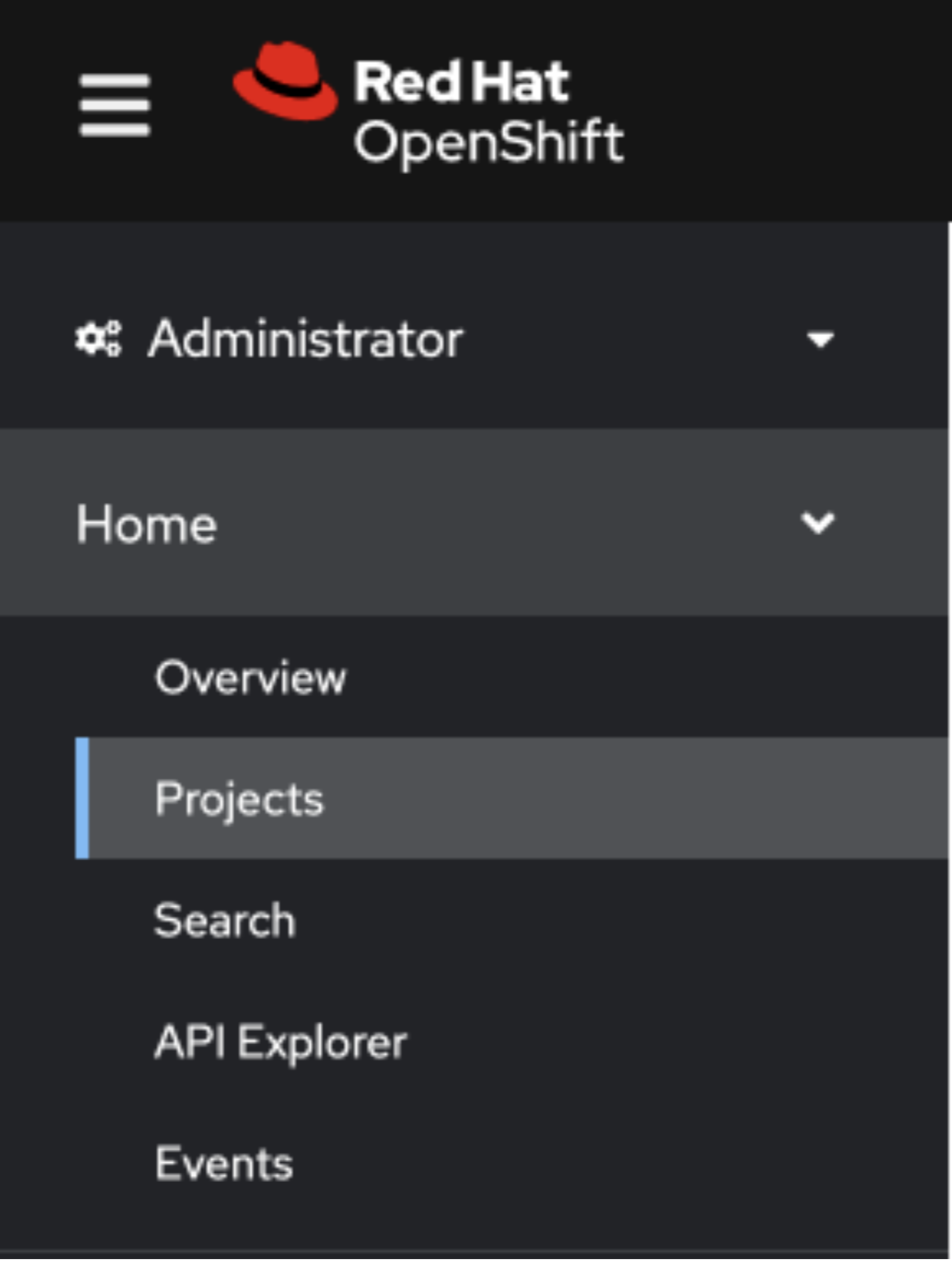

Once you are logged in, click on the Home → Projects tab on the left side of the console to view all the namespaces on the cluster:

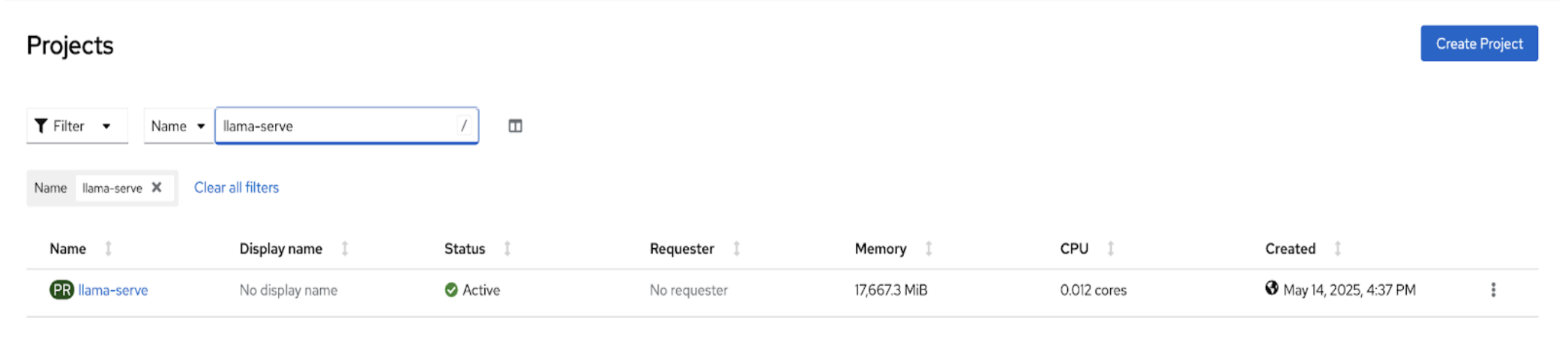

Search for and select the llama-serve namespace. This is the namespace where all the lab components have been deployed.

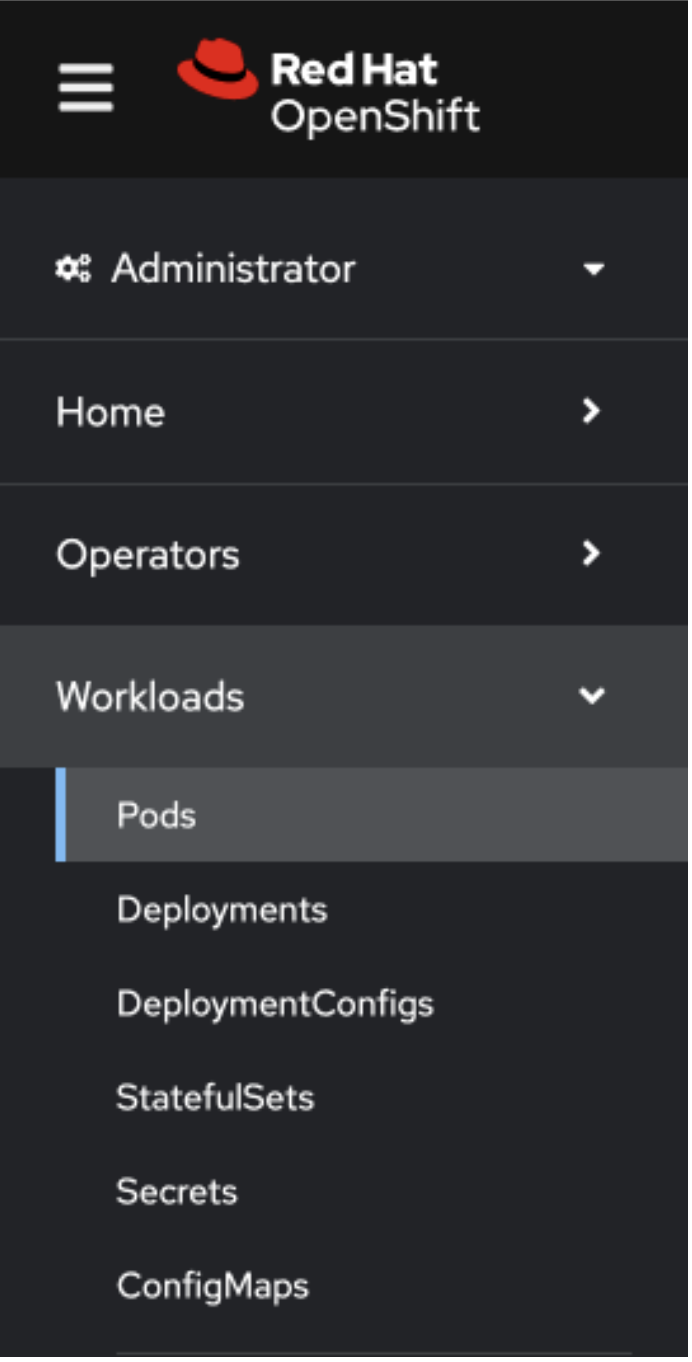

Next, view the pods running in the llama-serve namespace.

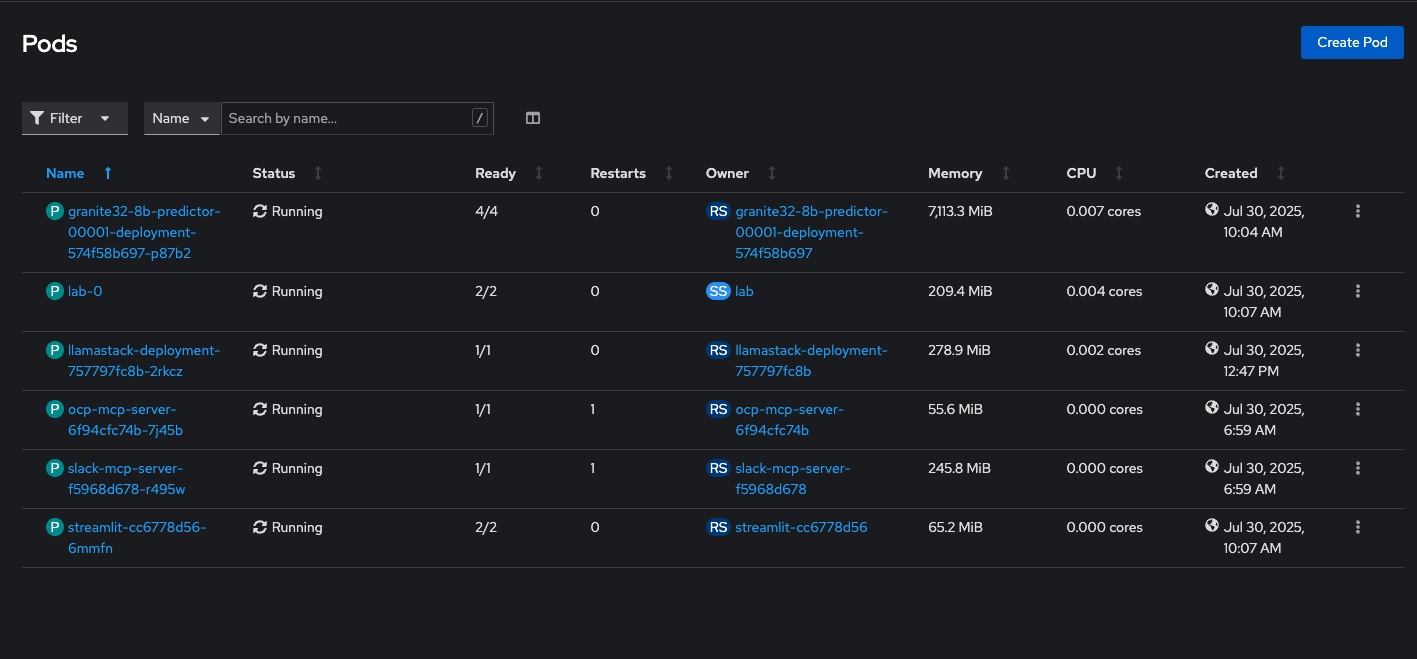

Click on Workloads → Pods to view all the components deployed and running as pods for this lab:

You should see the following pods running, each of which corresponds to a specific component required for the lab:
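The same pod list can also be retrieved from a terminal. The sketch below is not part of the lab material: it assumes the `oc` CLI is installed and that you are already logged in to the cluster, and the helper names `pods_command` and `list_pods` are hypothetical.

```python
import shutil
import subprocess

# Namespace used throughout this lab.
NAMESPACE = "llama-serve"


def pods_command(namespace: str = NAMESPACE) -> list:
    """Build the `oc` invocation that lists the pods in a namespace."""
    return ["oc", "get", "pods", "-n", namespace]


def list_pods(namespace: str = NAMESPACE) -> str:
    """Run the command and return its text output.

    Assumes `oc` is on PATH and an active login session exists.
    """
    if shutil.which("oc") is None:
        raise RuntimeError("the oc CLI was not found on PATH")
    result = subprocess.run(
        pods_command(namespace),
        capture_output=True,
        text=True,
        check=True,  # raise if oc exits with a non-zero status
    )
    return result.stdout
```

Calling `print(list_pods())` from a logged-in terminal session should print the same pods that appear under Workloads → Pods in the console.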

Red Hat OpenShift AI Console

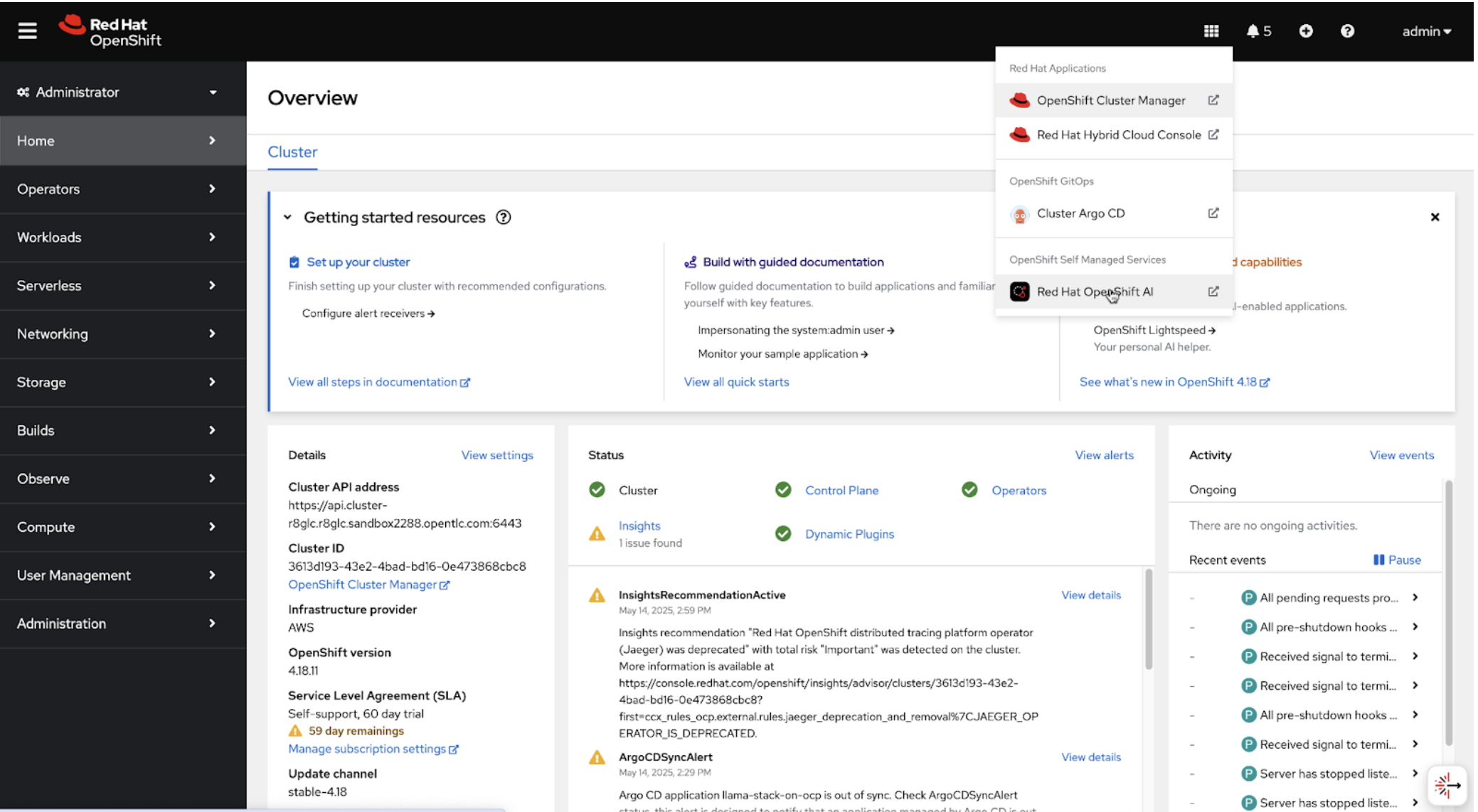

To view the models running on OpenShift AI, open https://rhods-dashboard-redhat-ods-applications.{openshift_cluster_ingress_domain}/ or click on the Red Hat OpenShift AI application as shown below:

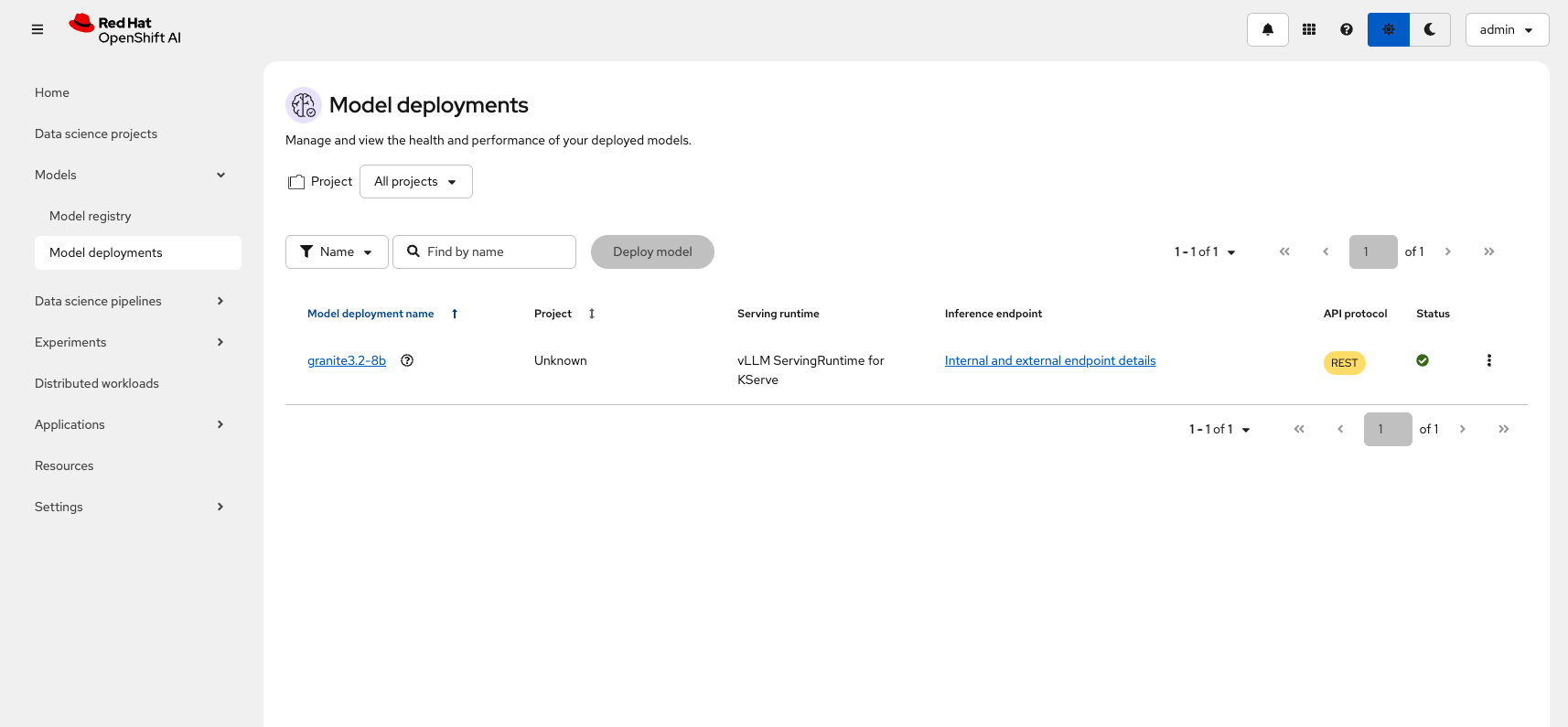

Navigate to the Model deployments tab, located on the left-hand side:

You will see one model running via the RHOAI vLLM ServingRuntime:

- granite3.2-8b

Another model is available in the Llama Stack environment, but it is not running via the RHOAI vLLM ServingRuntime. This demonstrates the flexibility of Llama Stack in supporting inference servers that exist outside of the RHOAI environment. The remote model is:

- llama3.2-3b

Jupyter Notebook Workbench

To launch the Jupyter notebook workbench, use the following link: https://rhods-dashboard-redhat-ods-applications.{openshift_cluster_ingress_domain}/projects/llama-serve?section=workbenches or follow the steps shown below:

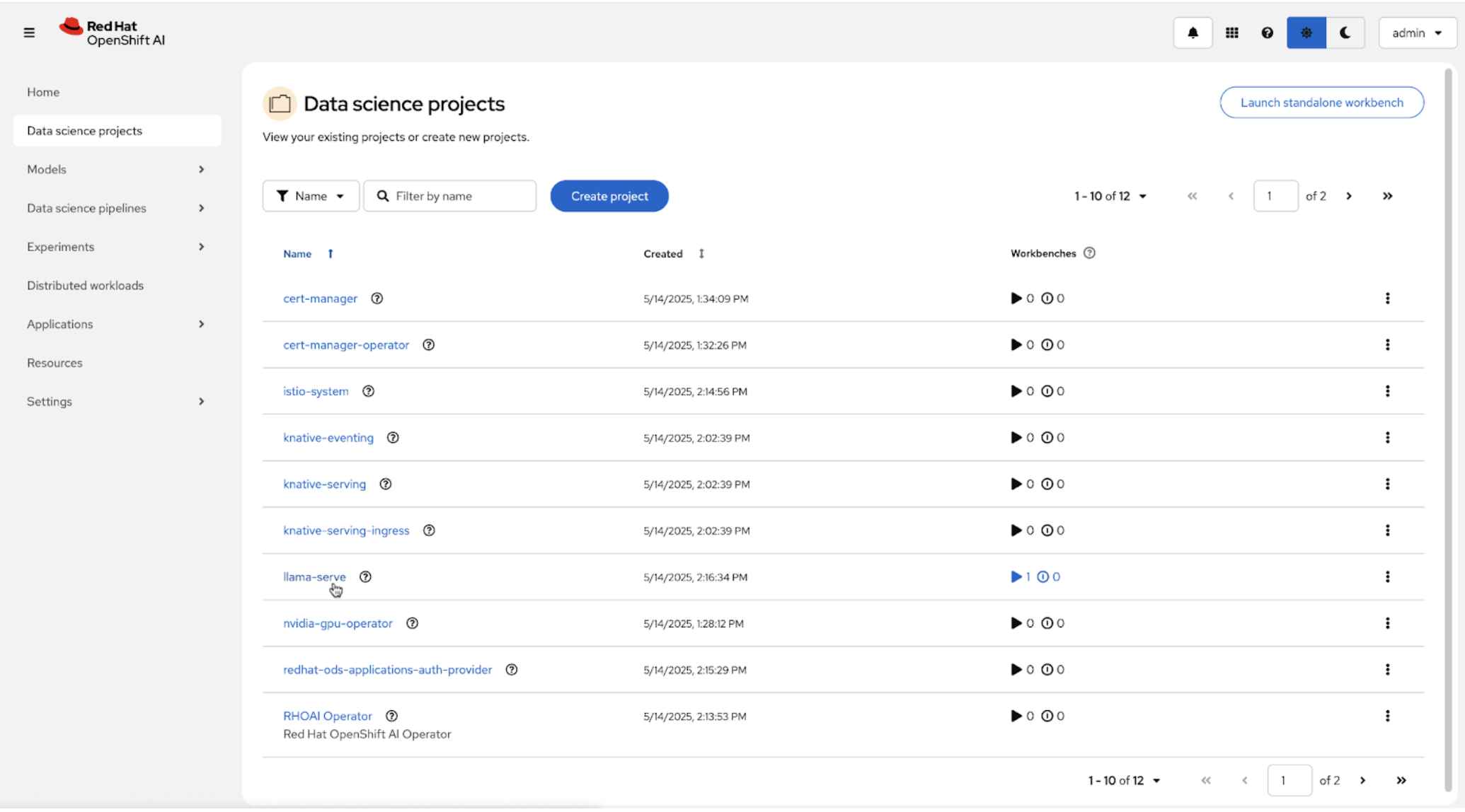
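Both dashboard links in this guide follow the same pattern, with the cluster ingress domain substituted into a fixed hostname prefix. As a minimal sketch, the helpers below build the two URLs from that domain. The function names are hypothetical, and `apps.example.com` in the usage note is a placeholder rather than a real cluster domain.

```python
# Hostname prefix taken from the dashboard links in this guide.
DASHBOARD_HOST_PREFIX = "rhods-dashboard-redhat-ods-applications"


def dashboard_url(ingress_domain: str) -> str:
    """Return the Red Hat OpenShift AI dashboard URL for a cluster."""
    return f"https://{DASHBOARD_HOST_PREFIX}.{ingress_domain}/"


def workbenches_url(ingress_domain: str, project: str = "llama-serve") -> str:
    """Return the direct link to a project's workbenches section."""
    return f"{dashboard_url(ingress_domain)}projects/{project}?section=workbenches"
```

For example, `workbenches_url("apps.example.com")` builds the workbench link for a cluster whose ingress domain is `apps.example.com`. Substitute the ingress domain provided with your lab environment.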

Your Jupyter workbench can be found under Data science projects, located on the left-hand side of the console. Select the project llama-serve:

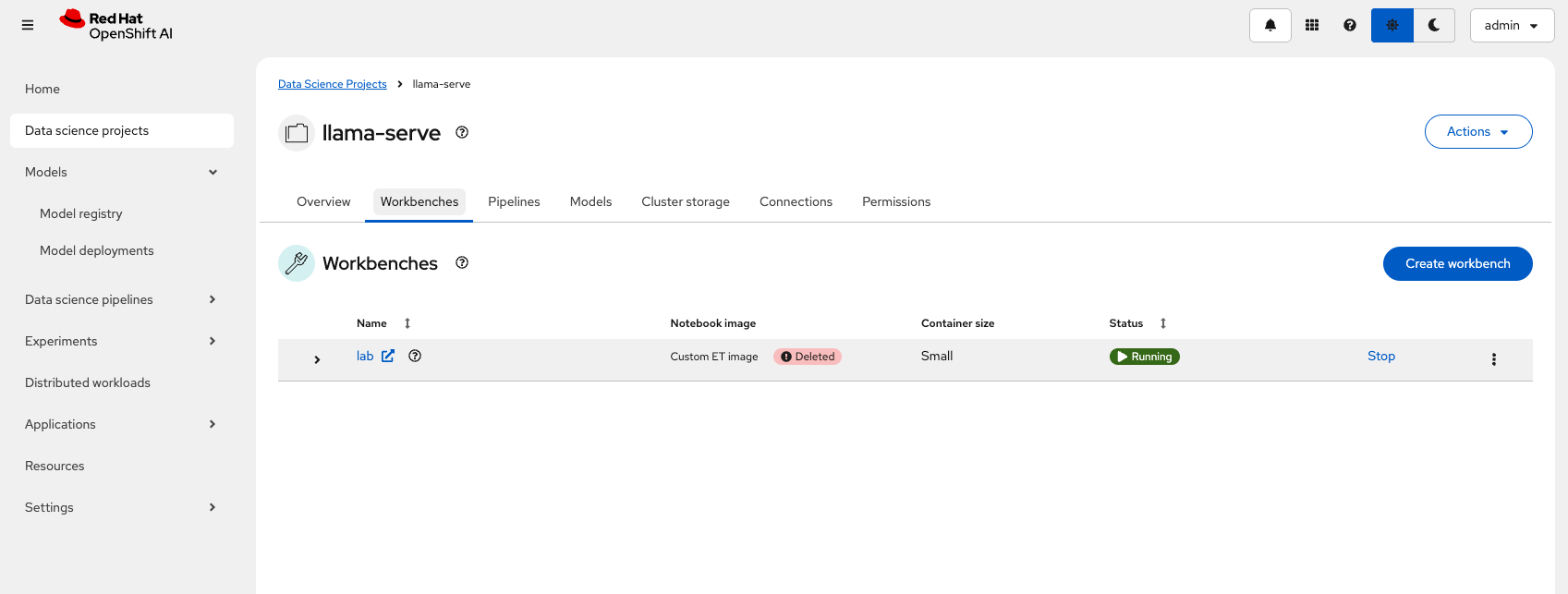

Once you have selected llama-serve as the data science project, you will see a workbenches tab as shown below:

Click on the blue arrow next to "lab" to access the Jupyter workbench. You will need to sign in again with your username and password. Upon opening this workbench, you’ll find a list of interactive notebooks that will walk you through different Llama Stack demos.

Now let’s start running these notebooks!