VMs and GitOps

In this exercise we will create a VM and a pod using OpenShift GitOps. We will also configure network policies to control the traffic between the VM and the pod.

Many of the commands in this module use variable substitutions, which are identified by curly braces.

Clone the GitOps repository

To run this exercise, we need to clone the course Git repository.

The course git repo is located at https://github.com/juliovp01/etx-virt_delivery.

Clone the repository to your bastion VM.

$ git clone https://github.com/juliovp01/etx-virt_delivery

Cloning into 'etx-virt_delivery'...

remote: Enumerating objects: 1300, done.

remote: Counting objects: 100% (169/169), done.

remote: Compressing objects: 100% (119/119), done.

remote: Total 1300 (delta 69), reused 110 (delta 38), pack-reused 1131 (from 1)

Receiving objects: 100% (1300/1300), 50.33 MiB | 31.68 MiB/s, done.

Resolving deltas: 100% (412/412), done.

$ cd etx-virt_delivery

Install the OpenShift GitOps Operator

This OpenShift environment does not have OpenShift GitOps (Argo CD) installed yet, so we have to install it. Run the following commands on your bastion VM.

$ oc adm new-project openshift-gitops

Created project openshift-gitops

$ oc apply -f content/gitops/bootstrap/subscription.yaml

subscription.operators.coreos.com/openshift-gitops-operator created

$ oc apply -f content/gitops/bootstrap/cluster-rolebinding.yaml

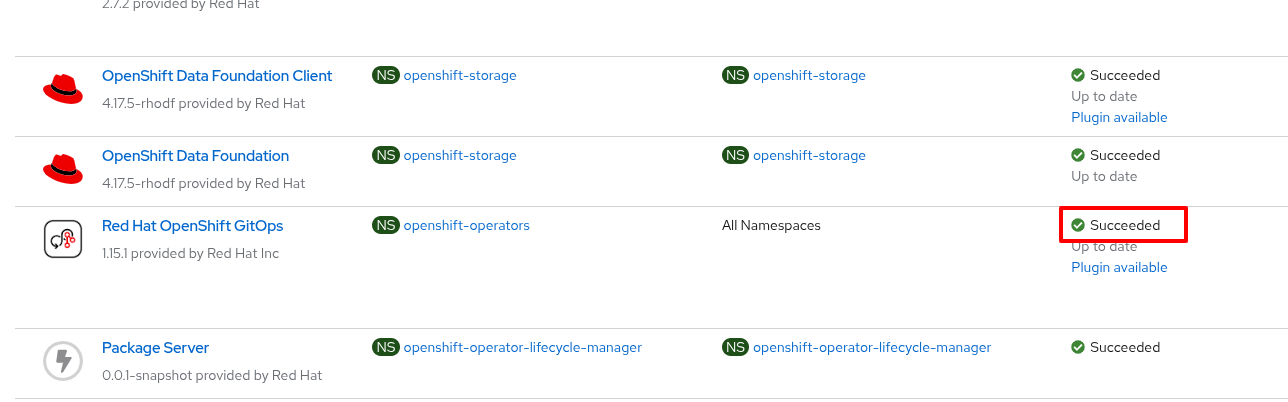

clusterrolebinding.rbac.authorization.k8s.io/cluster-admin-gitops-sc createdCheck the Installed Operators in your OpenShift console to verify that the Red Hat OpenShift GitOps operator was successfully installed.

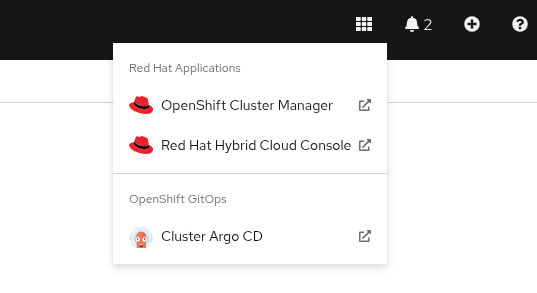
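Operator installation takes a little while, and the argocd.yaml step later in this exercise can only succeed once the operator's ArgoCD custom resource definition exists. The sketch below is a minimal polling helper, assuming you run it in bash on the bastion VM: it retries a command until the command succeeds or a timeout expires. The function name `wait_for` and the 5-second interval are illustrative choices and are not part of the course repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the course repo): retry a command every
# 5 seconds until it exits 0, or give up after <timeout> seconds.
wait_for() {
  local timeout=$1
  shift
  local elapsed=0
  # Output of the polled command is discarded; only its exit code matters.
  until "$@" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep 5
    elapsed=$((elapsed + 5))
  done
  return 0
}

# Example usage on the bastion VM (requires a logged-in oc session):
#   wait_for 300 oc get crd argocds.argoproj.io
```

Polling with `oc get` is used here instead of `oc wait` because `oc wait` may fail immediately when the resource it waits on does not exist yet.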

Open the Argo CD console by clicking the applications menu (the 3x3 grid icon) in the OpenShift console and selecting Cluster Argo CD.

Choose LOG IN VIA OPENSHIFT and enter your OpenShift credentials:

- User: {openshift_cluster_admin_username}
- Password: {openshift_cluster_admin_password}

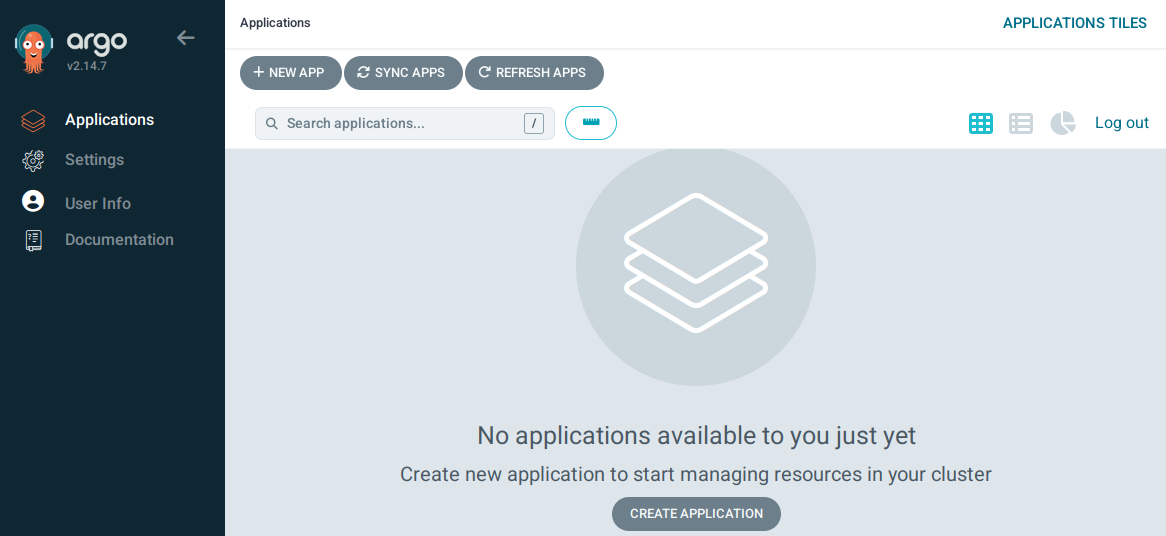

When prompted to authorize access, click Allow selected permissions. The Argo CD console opens.

Deploy the Argo CD Instance

Let’s deploy the Argo CD instance. In this case we configure the default Argo CD instance, openshift-gitops, which the operator created in the openshift-gitops namespace. In a real-world scenario you might have to design for multitenancy, in which case you might need more than one instance. Run the following commands.

$ export gitops_repo=https://github.com/juliovp01/etx-virt_delivery.git

$ export cluster_base_domain=$(oc get ingress.config.openshift.io cluster --template='{{.spec.domain}}' | sed -e 's/^apps\.//')

$ export platform_base_domain=${cluster_base_domain#*.}

$ envsubst < content/gitops/bootstrap/argocd.yaml | oc apply -f -
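The two domain manipulations above are easy to misread. The sketch below replays them on a made-up ingress domain (`apps.cluster-abc12.abc12.sandbox.example.com` is hypothetical; your cluster's value differs) so you can see what ends up in each variable. Note that envsubst only sees exported environment variables, which is why the commands above use `export`.

```shell
#!/usr/bin/env bash
# Hypothetical value of .spec.domain on ingress.config.openshift.io/cluster
ingress_domain="apps.cluster-abc12.abc12.sandbox.example.com"

# Drop the leading "apps." label; the dot is escaped so sed matches it literally
cluster_base_domain=$(printf '%s\n' "$ingress_domain" | sed -e 's/^apps\.//')

# ${var#*.} deletes the shortest prefix ending in ".", i.e. the first label
platform_base_domain=${cluster_base_domain#*.}

echo "cluster_base_domain=${cluster_base_domain}"    # cluster-abc12.abc12.sandbox.example.com
echo "platform_base_domain=${platform_base_domain}"  # abc12.sandbox.example.com
```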

Warning: resource argocds/openshift-gitops is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by oc apply. oc apply should only be used on resources created declaratively by either oc create --save-config or oc apply. The missing annotation will be patched automatically.

argocd.argoproj.io/openshift-gitops configured

configmap/setenv-cmp-plugin created

configmap/environment-variables created

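group.user.openshift.io/cluster-admins created

The warning about the missing kubectl.kubernetes.io/last-applied-configuration annotation is expected: the openshift-gitops instance was created by the operator rather than with oc apply, and oc patches the annotation automatically.

Deploy the GitOps root Application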

We are going to deploy two applications in the same namespace: one VM and one pod. The pod will be able to talk to the VM, but the VM will not be able to talk to the pod.

To do so, we use the app-of-apps pattern, in which a root application deploys other applications.

The root application will look for applications to deploy in the content/gitops/applications directory.

Deploy the root application by running the following command.

$ envsubst < content/gitops/bootstrap/appset.yaml | oc apply -f -

applicationset.argoproj.io/root-applications created

Notice that we have implemented the app-of-apps pattern with an ApplicationSet.

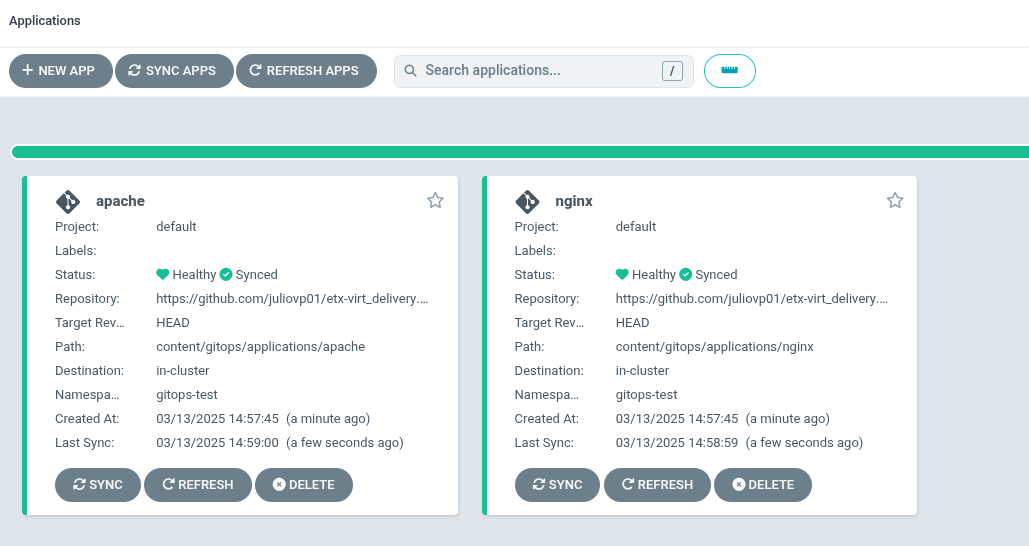

In the Argo CD console, verify that the two child applications have been deployed correctly. It may take a minute or so for both applications to become Healthy and Synced.
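The repository's appset.yaml is not reproduced in this module. As an illustration only, the sketch below writes out a hypothetical ApplicationSet that implements the app-of-apps idea with Argo CD's Git directory generator: it generates one Application for every subdirectory of content/gitops/applications. The resource name `example-root-applications`, the destination namespace `gitops-test`, the output file path, and the automated sync policy are assumptions, so compare it with the real file rather than treating it as the course's manifest.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: write an example ApplicationSet to a local file for review.
# It is NOT the course's appset.yaml; names and sync options are assumptions.
out_file="${TMPDIR:-/tmp}/example-appset.yaml"

# Quoted 'EOF' keeps the shell from touching the {{ }} template expressions
cat > "$out_file" <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: example-root-applications
  namespace: openshift-gitops
spec:
  goTemplate: true
  generators:
    # One Application per subdirectory of content/gitops/applications
    - git:
        repoURL: https://github.com/juliovp01/etx-virt_delivery.git
        revision: HEAD
        directories:
          - path: content/gitops/applications/*
  template:
    metadata:
      name: '{{.path.basename}}'
    spec:
      project: default
      source:
        repoURL: https://github.com/juliovp01/etx-virt_delivery.git
        targetRevision: HEAD
        path: '{{.path.path}}'
      destination:
        server: https://kubernetes.default.svc
        namespace: gitops-test
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
EOF

echo "wrote $out_file"
```

Treat the file as reading material: applying it next to the course's root-applications ApplicationSet could produce Applications that compete for the same resources.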

Verify the deployed applications and traffic policies

Examine the network policies that were created:

$ oc get networkpolicy -n gitops-test

NAME                          POD-SELECTOR   AGE
allow-from-ingress-to-nginx   app=nginx      10m
apache-network-policy         app=apache     10m

- The nginx pod only accepts traffic from the openshift-ingress namespace, where the OpenShift router pods run. This means that we can expose it through a route.
- The apache VM only accepts traffic from pods in the same namespace, including the nginx pod.
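The manifests behind these two policies live in the repository and are not shown here. To make the two rules concrete, the sketch below writes hypothetical equivalents to a local file: the first admits traffic to `app=nginx` pods only from the openshift-ingress namespace, and the second admits traffic to `app=apache` pods (the VM's virt-launcher pod carries the labels from the VM template) only from pods in gitops-test. The `kubernetes.io/metadata.name` namespace selector, the `example-` names, and the file path are assumptions; if your cluster's router runs on the host network, the OpenShift network policy documentation describes a different way to select ingress traffic.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the two policies listed above; the course repo's
# manifests may differ. Written to a file for review, not applied.
out_file="${TMPDIR:-/tmp}/example-networkpolicies.yaml"

cat > "$out_file" <<'EOF'
# nginx: accept ingress only from pods in the openshift-ingress namespace
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: example-allow-from-ingress-to-nginx
  namespace: gitops-test
spec:
  podSelector:
    matchLabels:
      app: nginx
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: openshift-ingress
---
# apache VM (its virt-launcher pod carries app=apache): accept ingress
# only from pods in the same namespace
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: example-apache-network-policy
  namespace: gitops-test
spec:
  podSelector:
    matchLabels:
      app: apache
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector: {}
EOF

echo "wrote $out_file"
```

In this sketch neither policy lists Egress in policyTypes, so outbound traffic is unrestricted; what stops the VM from reaching nginx is the ingress rule on the nginx side.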

In your OpenShift console, open the console of the fedora-apache VM in the gitops-test namespace, log in, and run the following commands:

$ sudo -i # to become root

# dnf install -y httpd

# systemctl enable httpd --now

Now the VM is listening on port 80.

From your bastion host, run the following commands to get the service IPs:

$ export nginx_ip=$(oc get svc nginx -n gitops-test -o jsonpath='{.spec.clusterIP}')

$ export apache_ip=$(oc get svc apache -n gitops-test -o jsonpath='{.spec.clusterIP}')

Verify that the nginx pod can talk to the apache VM:

$ export nginx_pod_name=$(oc get pod -n gitops-test -l app=nginx -o jsonpath='{.items[0].metadata.name}')

$ oc exec -n gitops-test $nginx_pod_name -- curl -s http://${apache_ip}:80

The command should print the HTML of the default test page served by httpd.

To verify that the apache VM cannot talk to the nginx pod, you will need the IP address of the nginx service:

$ echo $nginx_ip

172.31.208.10

The IP address of the nginx service will be different in your environment. Copy the address from the output of the last command to use in the next step.

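Then, in the OpenShift web console, open the console of the fedora-apache VM in the gitops-test namespace again and run the following command, replacing <nginx_ip> with the address you copied:

$ curl -v --connect-timeout 5 http://<nginx_ip>:8080

curl should report only the connection attempt and then fail with a timeout. No response from nginx is returned, because the nginx network policy admits traffic only from the openshift-ingress namespace.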
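Reading curl's verbose output to decide whether a connection was blocked can be ambiguous. The hypothetical `probe` function below, which you could paste into the bash shell of the fedora-apache VM, classifies the result by curl's exit code: 0 means a response arrived, 28 means the operation timed out (what you would typically see when a NetworkPolicy silently drops packets), and 7 means the connection was refused or could not be established. The function name and the 5-second timeout are arbitrary choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: probe a URL and print a verdict based on curl's exit code.
probe() {
  local url=$1 rc=0
  # Discard the body; only the exit code is used for the verdict.
  curl -s -o /dev/null --connect-timeout 5 "$url" || rc=$?
  case $rc in
    0)  echo "reachable" ;;
    7)  echo "connection failed (refused or unreachable)" ;;
    28) echo "timed out (consistent with a NetworkPolicy dropping traffic)" ;;
    *)  echo "curl exited with code $rc" ;;
  esac
  return "$rc"
}

# Example, inside the fedora-apache VM (use your own nginx service IP):
#   probe http://172.31.208.10:8080
```

From the bastion, the check in the allowed direction can report a status code instead of a page body: `oc exec -n gitops-test $nginx_pod_name -- curl -s -o /dev/null -w '%{http_code}\n' --connect-timeout 5 http://${apache_ip}:80`. Any HTTP status, including the 403 that Fedora's default httpd welcome page typically returns, shows that the connection was allowed.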

Using FQDNs of the Kubernetes services

In this example, you can also use the fully qualified domain names (FQDNs) of the Kubernetes services instead of bypassing name resolution with explicit IP addresses.

nginx is a regular container workload, and the VM is attached only to the pod network, so both services are resolvable through cluster DNS and we can use their FQDNs.

A service FQDN follows the naming pattern svc-name.namespace.svc.cluster.local.

Typically, the following search domains are configured in a pod attached to the default pod network:

- <namespace>.svc.cluster.local
- svc.cluster.local
- cluster.local

So, from within the same namespace, you can reach the apache service by calling either of the following endpoints:

- apache → the resolver ends up using the gitops-test.svc.cluster.local search domain
- apache.gitops-test → the resolver ends up using the svc.cluster.local search domain
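The search-domain behavior described above can be made concrete with a small sketch. The hypothetical function below lists, in order, the names a pod's resolver would try for a given name by appending each search domain; the resolver stops at the first name that exists. It is a simplification: it assumes the default cluster.local cluster domain and ignores the ndots option (set to 5 in Kubernetes pods) as well as the attempt to resolve the name exactly as written.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the candidate FQDNs a pod in <namespace> would try,
# in search-list order, for <name>. Assumes the cluster domain is cluster.local.
resolver_candidates() {
  local name=$1 ns=$2 domain=${3:-cluster.local}
  local search
  for search in "${ns}.svc.${domain}" "svc.${domain}" "${domain}"; do
    echo "${name}.${search}"
  done
}

resolver_candidates apache gitops-test
# apache.gitops-test.svc.cluster.local   <- matches the apache service
# apache.svc.cluster.local
# apache.cluster.local

resolver_candidates apache.gitops-test gitops-test
# apache.gitops-test.gitops-test.svc.cluster.local
# apache.gitops-test.svc.cluster.local   <- matches the apache service
# apache.gitops-test.cluster.local
```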

So you can also run the following command, which relies only on names rather than on IP addresses:

$ oc exec -n gitops-test $nginx_pod_name -- curl -s http://apache:80

Earlier we verified that the network policies are in place, so nginx can only be reached through a route.

To create that route, run the following command:

$ oc create route edge --service nginx --port 8080 nginx -n gitops-test

To retrieve the hostname, run:

$ export nginx_route_host=$(oc get route nginx -n gitops-test -o jsonpath='{.spec.host}')

To verify that you can reach nginx through the route, run:

$ curl -s https://${nginx_route_host}
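Because the route was created without an explicit `--hostname`, OpenShift generates one. By default the generated host takes the form `<route-name>-<namespace>.<ingress domain>`, so the value in nginx_route_host can be predicted from the ingress domain read at the start of the Argo CD step. The sketch below shows that derivation with a hypothetical ingress domain; the function name `default_route_host` is illustrative only. If the route serves a certificate your bastion does not trust, `curl -s` fails silently; adding `-k` (and dropping `-s`) lets you check reachability anyway.

```shell
#!/usr/bin/env bash
# Hypothetical helper: predict the host OpenShift generates for a route created
# without --hostname, using the default "<route>-<namespace>.<domain>" form.
default_route_host() {
  local route=$1 ns=$2 ingress_domain=$3
  echo "${route}-${ns}.${ingress_domain}"
}

# ingress_domain stands for the .spec.domain value of the cluster (hypothetical here)
ingress_domain="apps.cluster-abc12.abc12.sandbox.example.com"
default_route_host nginx gitops-test "$ingress_domain"
# nginx-gitops-test.apps.cluster-abc12.abc12.sandbox.example.com
```

Comparing this prediction with `echo $nginx_route_host` is a quick sanity check that the route landed in the expected namespace.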