Introduction and Overview of the Workshop

Infrastructure as a Service can be costly

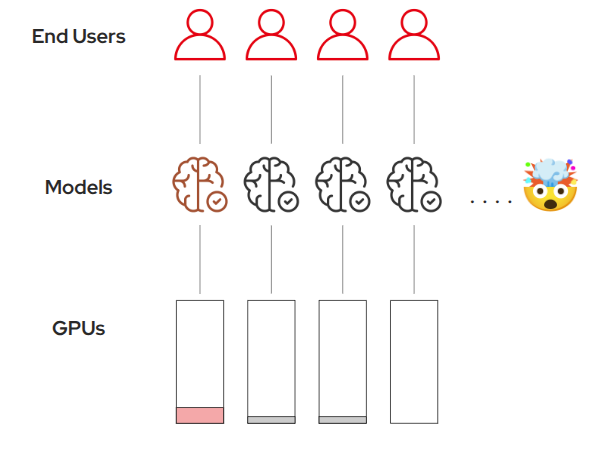

Self-Service is good for plentiful resources & small teams… But:

- Throwing GPUs at the problem is risky
- Few people know how to use them correctly
- Leads to duplication and under-utilization
- Leads to high costs
- Most people want an LLM endpoint, not a GPU

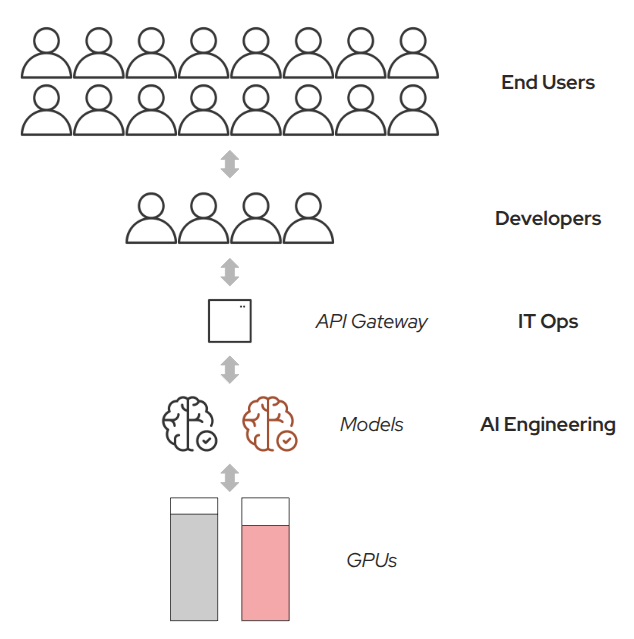

Models as a Service

Offering AI models, especially Large Language Models (LLMs), as a service to a larger audience solves many of these problems:

- IT serves common models centrally
  - Generative AI focus, applicable to any model
  - Centralized pool of hardware
  - Platform Engineering for AI
  - AI management (versioning, regression testing, etc.)
- Models available through API Gateway
- Developers consume models, build AI applications
  - For end users (private assistants, etc.)
  - To improve products or services through AI
- Shared Resources business model keeps costs down
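
To make the "developers consume models" point concrete, here is a minimal sketch of what a client call through the gateway might look like. It assumes the served model exposes an OpenAI-compatible `/v1/chat/completions` route and that the gateway accepts the API key as a bearer token; neither is stated above, and the gateway URL, key, and model name are hypothetical placeholders. The helper names `build_chat_request` and `send` are illustrative only.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request for a model exposed behind an API gateway.

    Assumptions: an OpenAI-compatible chat completions route and bearer-token
    authentication. Adjust both to match the actual gateway configuration.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

The developer only needs a URL and a key, for example `send(build_chat_request("https://gateway.example.com", "my-key", "my-model", "Hello"))`. Where the GPU lives and how the model is served stay hidden behind the gateway.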

Workshop Goals

In this workshop, we will:

- Get an overview of the OpenShift AI platform
- Deploy a Generative AI model on OpenShift AI
- Configure 3Scale API Gateway to expose the model
- Use the published model in a chat application
- Get some usage statistics from 3Scale

By the end of the workshop, you will have learned how to deploy and manage models, especially LLMs, on OpenShift AI and how to make them available to a whole organization in a secure, private, and efficient way.