Connect App to LLM as a Service Model

In this module, we will learn how to connect an application to a large language model (LLM) as a service. We will explore the steps required to set up the connection and how to interact with the LLM. The application we will be using is AnythingLLM, which is a powerful tool for working with LLMs.

Creating a Connection

To ease the process of creating our application, we will use a custom connection type that has already been created in OpenShift AI.

This connection type is called "Anything LLM" and is specifically designed to work with the AnythingLLM application.

-

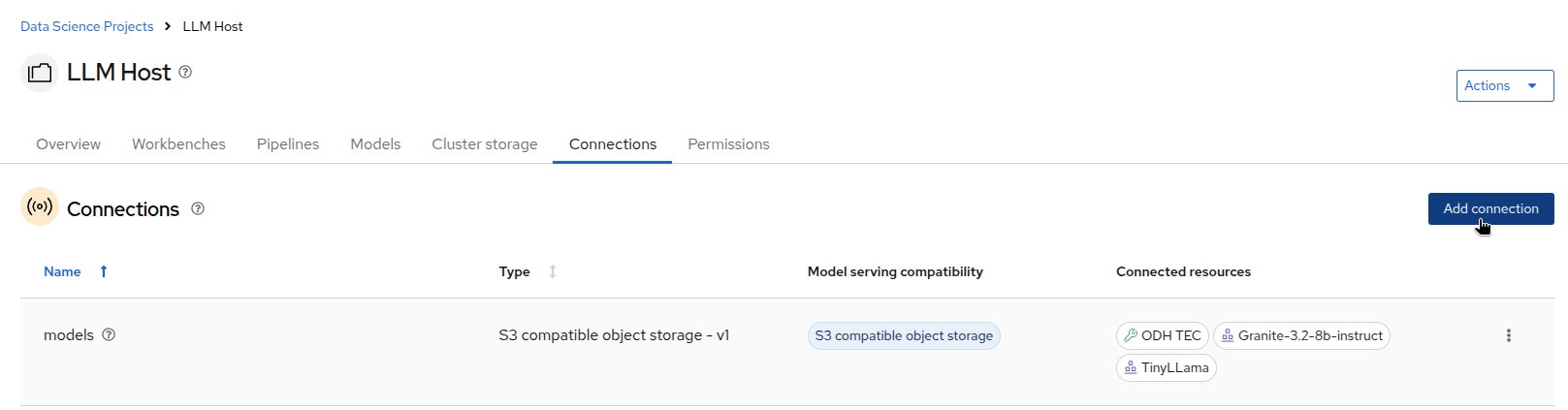

In the OpenShift AI dashboard, navigate to the

Connectionssection and click onAdd connection.

-

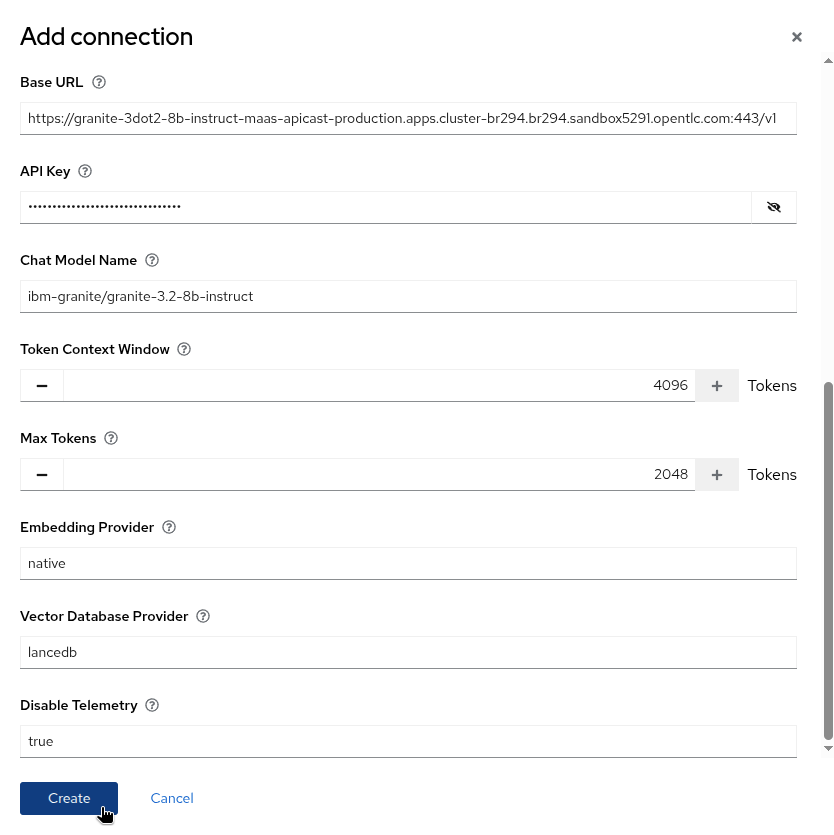

In the

Add connectiondialog, select theAnything LLMconnection type from the list of available connection types.

- Before filling in the required fields, you’ll need to get some information from the developer portal.

-

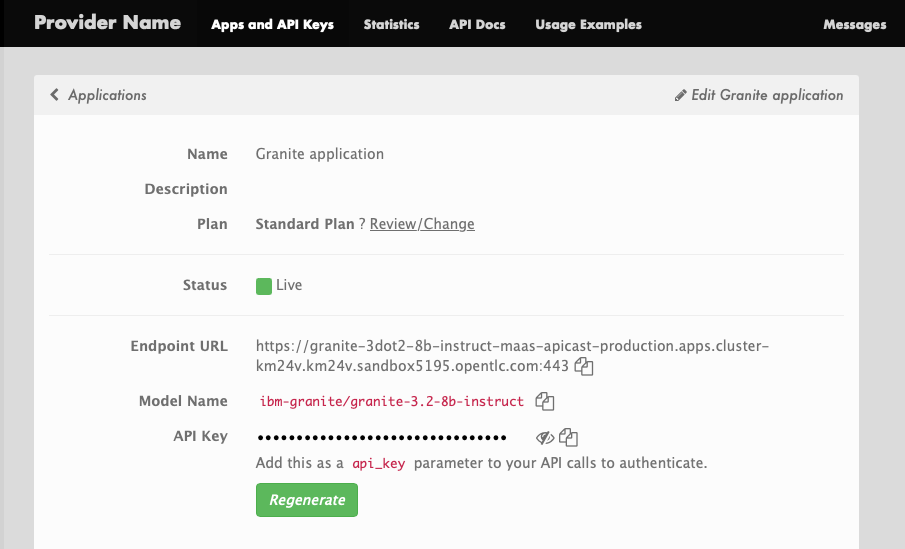

In the developer portal, open your Granite or Tinyllamma application, depening on which model you want to use.

- Back in the Add connection dialog in OpenShift AI, fill in the required fields. You can choose to connect to either the Granite or the Tinyllama model. Use the information you got from the 3Scale developer portal:

  - Connection name: Granite or Tinyllama.

  - LLM Provider Type: generic-openai (don’t change this default value).

  - Base URL: enter the Endpoint URL from 3Scale (in the developer portal), adding /v1 at the end (OpenAI API standard).

  - API Key: enter the API key from 3Scale (in the developer portal).

  - Chat Model Name: enter the model name from 3Scale, for example: ibm-granite/granite-3.2-8b-instruct or tinyllama/tinyllama-1.1b-chat-v1.0.

  - Leave all other fields as default.

- Click Create to create the connection.

- This connection is now ready to be attached to a Workbench, which injects those values as environment variables and configures AnythingLLM.
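To make the role of each connection field concrete, here is a minimal sketch of how the Base URL, API Key, and Chat Model Name could combine into an OpenAI-style chat completion request. It uses only the Python standard library and builds the request without sending it. The host name and key are placeholders, and passing the key as a Bearer token is an assumption based on how OpenAI-compatible clients usually authenticate; your 3Scale product may expect the key elsewhere.

```python
import json
import urllib.request


def build_base_url(endpoint_url: str) -> str:
    """Append /v1 to the endpoint URL unless it is already there."""
    trimmed = endpoint_url.rstrip("/")
    return trimmed if trimmed.endswith("/v1") else trimmed + "/v1"


def build_chat_request(endpoint_url, api_key, model, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=build_base_url(endpoint_url) + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the key is sent as a Bearer token.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values: replace them with the ones from the developer portal.
    request = build_chat_request(
        "https://granite.example.com",
        "YOUR_API_KEY",
        "ibm-granite/granite-3.2-8b-instruct",
        "Hello!",
    )
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request)
```

Keeping the /v1 handling in its own helper means an endpoint pasted with or without the suffix yields the same Base URL.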

Connection with AnythingLLM

We will create a new Workbench based on a custom image and attach the connection we just created.

-

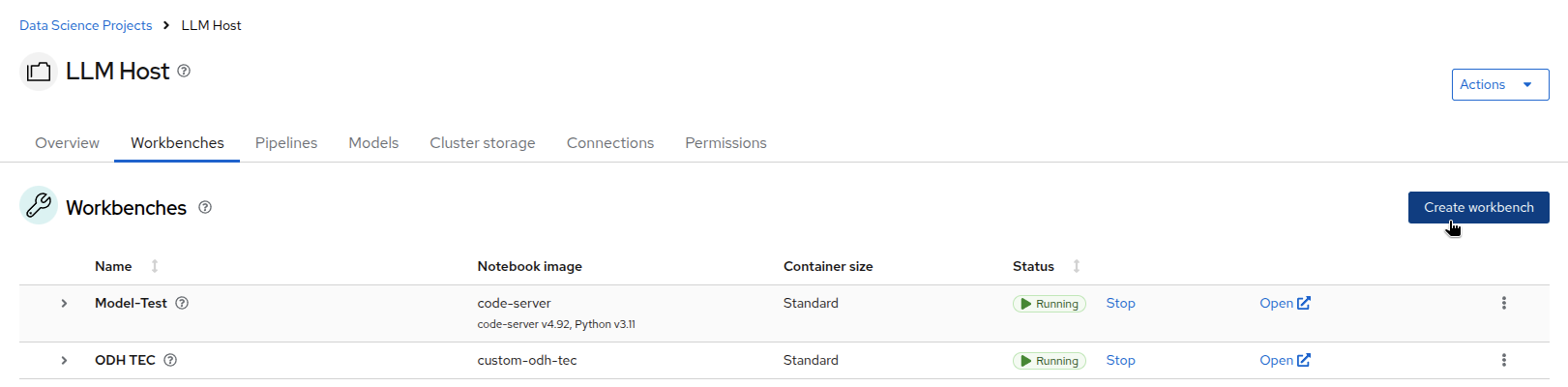

In the OpenShift AI dashboard, navigate to the

Workbenchessection and click onCreate Workbench.

-

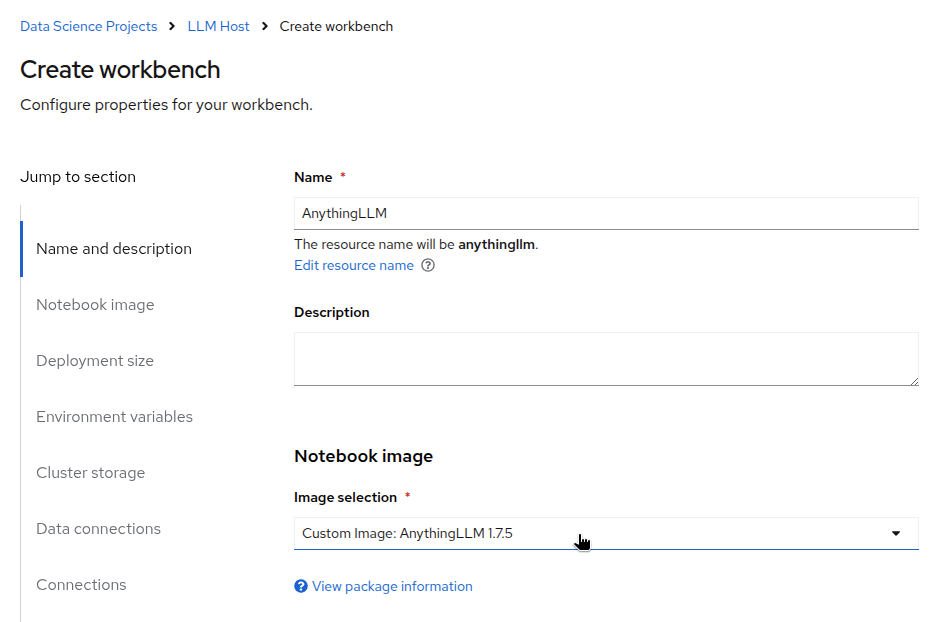

In the

Create Workbenchform, give a name your workbench and select theCustom Image: AnythingLLM 1.7.5image from the list of available images.

-

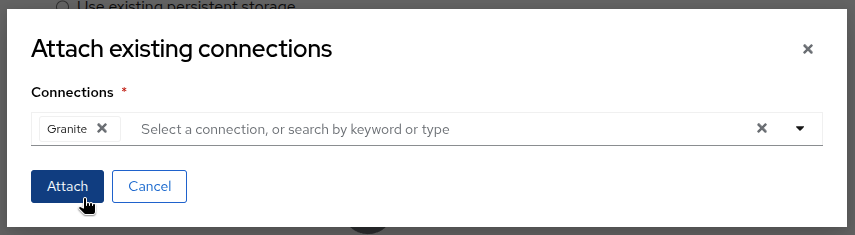

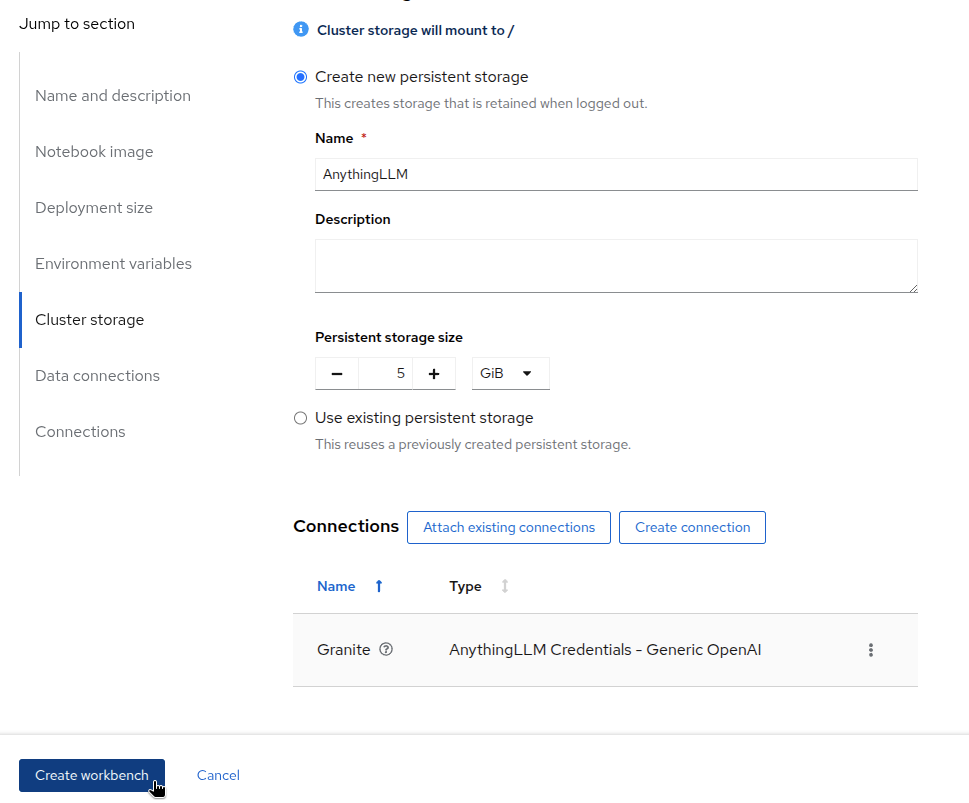

Leave the default values for the other fields up to the Connection section. Here click on

Attach existing connectionand select the connection you created in the previous step.

- Click on Create workbench.

-

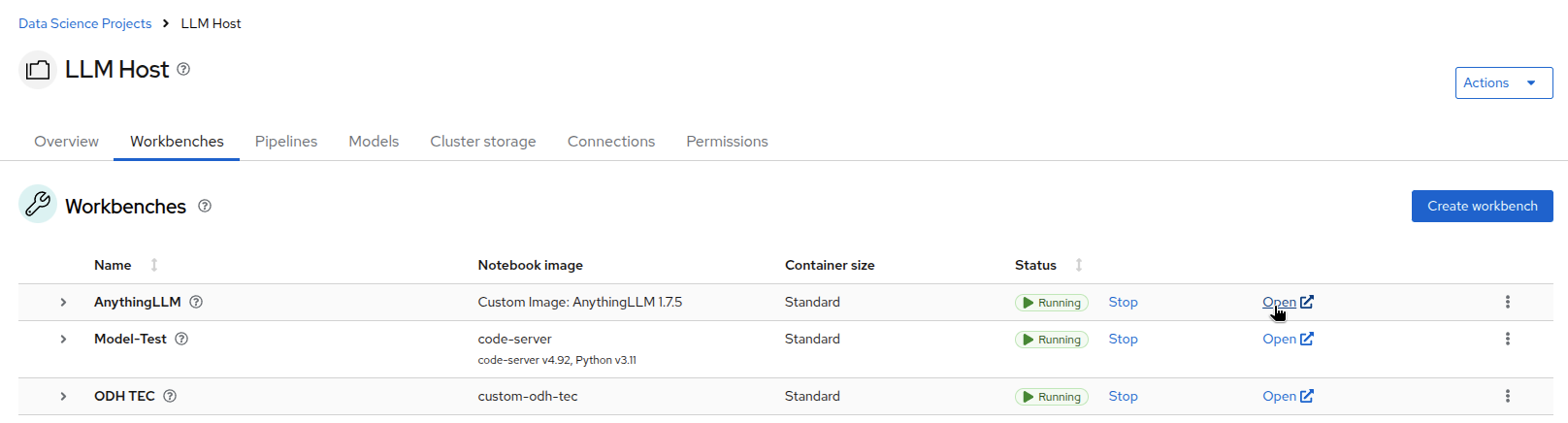

Once the workbench is started (this should take 20 seconds), click on

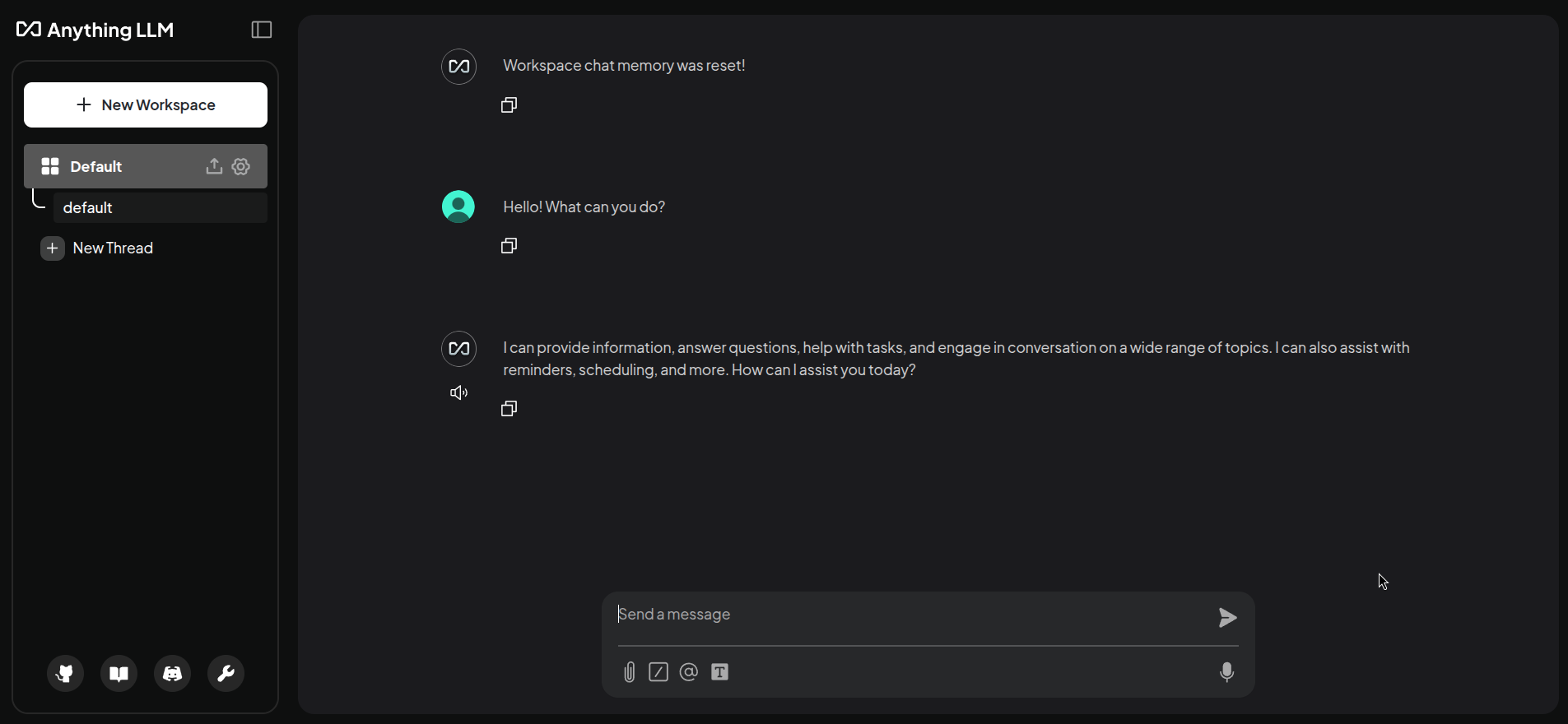

Opento access the AnythingLLM environment.

- In AnythingLLM, click on New Workspace to create a new workspace.

- Give the workspace a name and click on Save. You can now chat with your model!

Well done! You have successfully chatted with your model using the AnythingLLM application. You can now use this application to interact with your LLM and explore its capabilities.
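If the chat does not respond, one thing worth checking is whether the connection values actually reached the workbench as environment variables. The sketch below shows one way to do that. The variable names are an assumption based on AnythingLLM's generic OpenAI provider settings; the connection type in your cluster may inject different names, so adjust the list accordingly.

```python
import os

# Assumed names, based on AnythingLLM's generic OpenAI provider settings.
# The connection type in your cluster may use different ones.
ASSUMED_VARIABLES = (
    "LLM_PROVIDER",
    "GENERIC_OPEN_AI_BASE_PATH",
    "GENERIC_OPEN_AI_API_KEY",
    "GENERIC_OPEN_AI_MODEL_PREF",
)


def missing_variables(environ, names=ASSUMED_VARIABLES):
    """Return the names that are absent or empty in the given mapping."""
    return [name for name in names if not environ.get(name)]


def base_path_looks_valid(environ):
    """Check that the base path ends with /v1, as the Base URL field requires."""
    base_path = environ.get("GENERIC_OPEN_AI_BASE_PATH", "")
    return base_path.rstrip("/").endswith("/v1")


if __name__ == "__main__":
    missing = missing_variables(os.environ)
    print("Missing variables:", missing or "none")
    print("Base path ends with /v1:", base_path_looks_valid(os.environ))
```

Both helpers take the environment as a plain mapping, so they can be tried against a dictionary before being run inside the workbench.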

Analytics

In this section, we will explore the analytics capabilities of the 3Scale API Gateway. We will learn how to monitor the usage of our models.

The Developer View

-

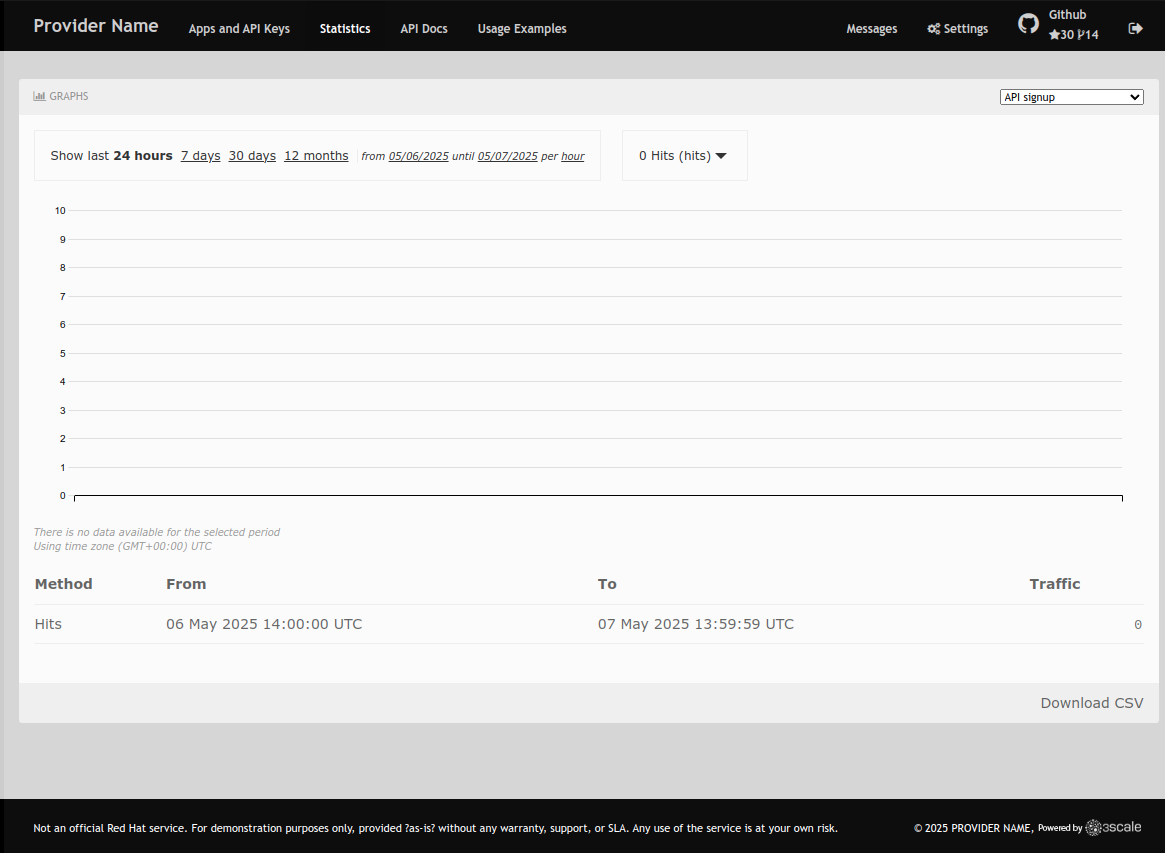

In the 3Scale developer portal, navigate to the

Statisticstab at the top.

- A drop-down lists all the applications you have created. Click on any of them to view its statistics.

As a developer, you can view the statistics of your applications and monitor their usage. You can also view the number of calls made to the API, and the different methods used.

The Admin View

-

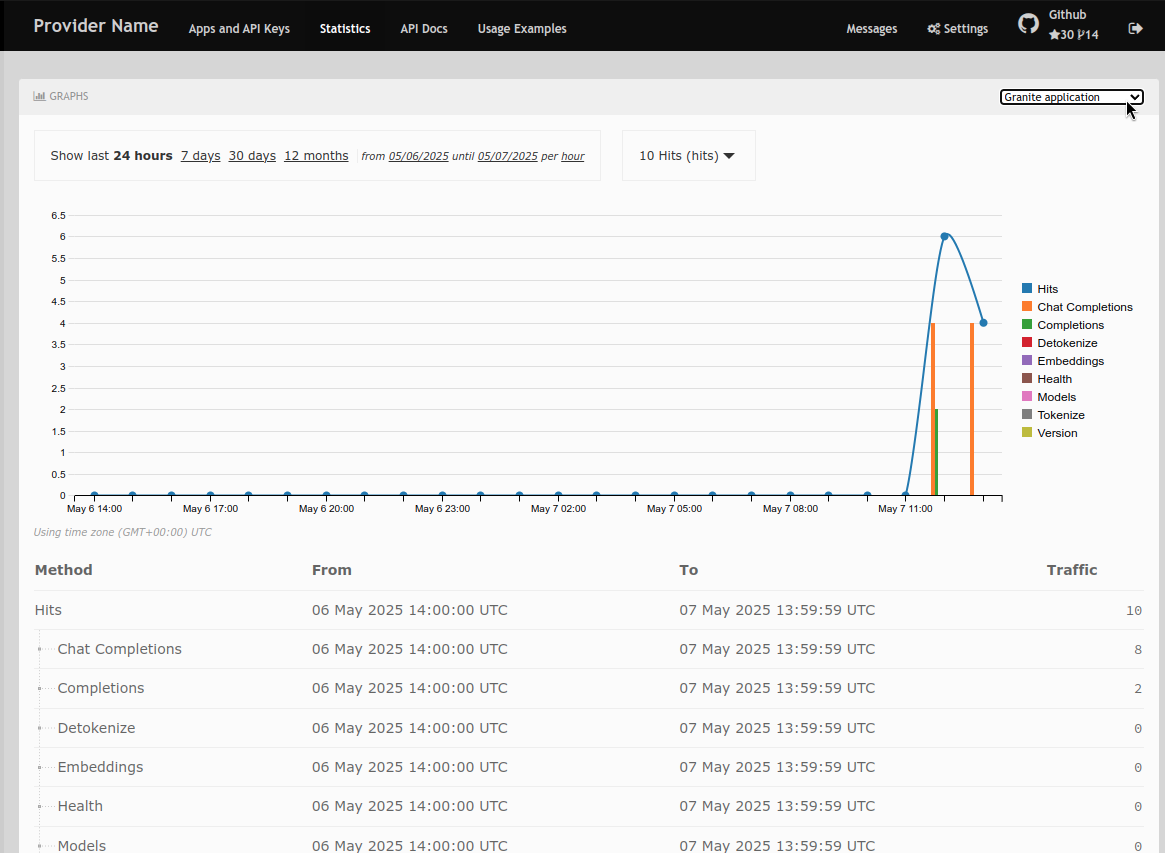

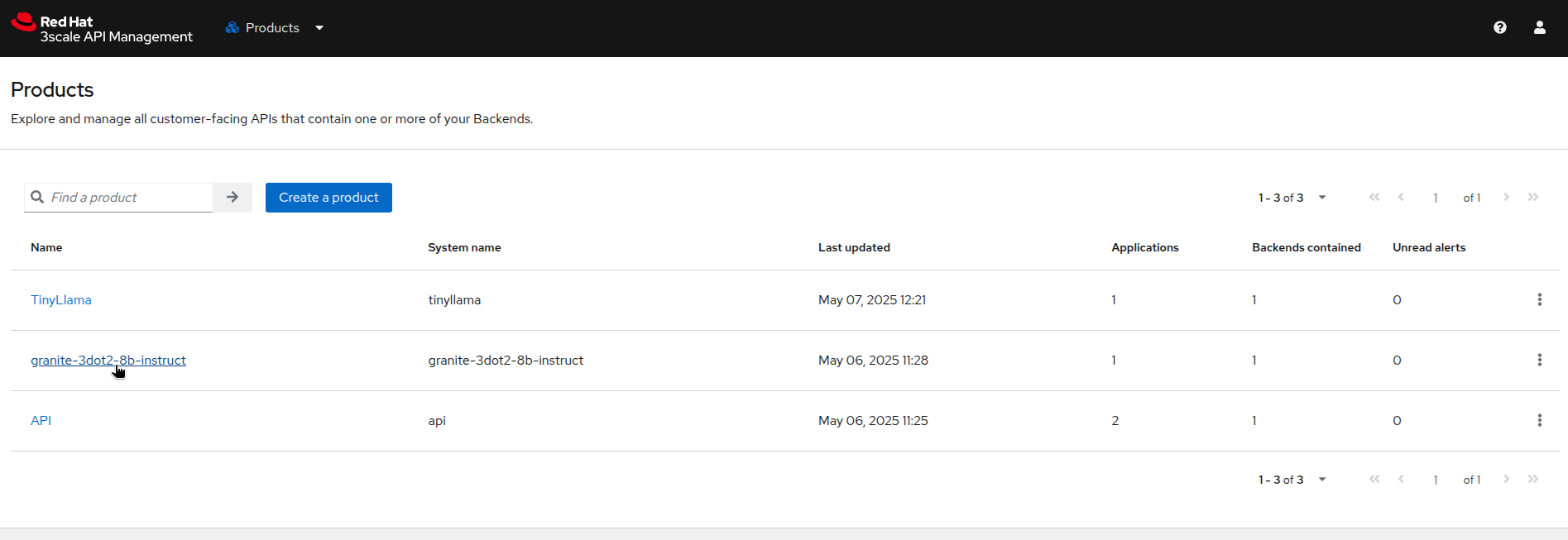

In the 3Scale admin portal, navigate to the

Productsand select one (Granite in this example).

-

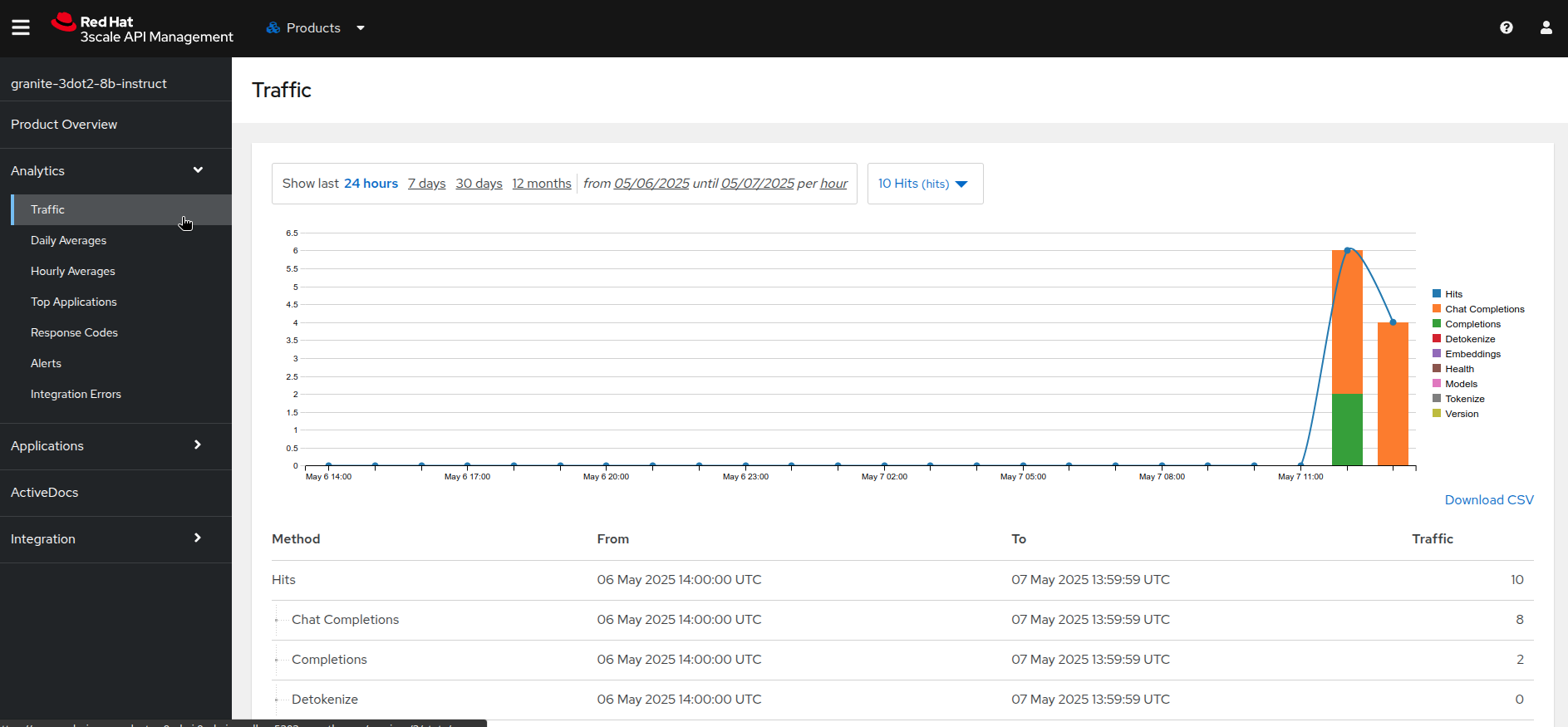

Click on the

Analytics→Trafficmenu to view the statistics for the selected product.

From this admin perspective, you can view the statistics of all the applications that are using the selected product. You can also view the number of calls made to the API, and the different methods used.

You can test the different views to see which are the top applications using the API, and which are the most used methods, when they are mostly used, etc.
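The questions these views answer (top applications, most used methods, busiest hours) amount to counting calls along different dimensions. As a purely illustrative sketch, the snippet below does that counting over a handful of hypothetical call records. The record layout and values are invented for the example and do not come from 3Scale.

```python
from collections import Counter

# Hypothetical call records (application, method, hour of day); not real data.
SAMPLE_CALLS = [
    ("app-a", "chat/completions", 9),
    ("app-a", "chat/completions", 9),
    ("app-b", "chat/completions", 14),
    ("app-a", "models", 9),
    ("app-b", "completions", 15),
]


def summarize(calls):
    """Count calls per application, per method, and per hour of day."""
    return {
        "applications": Counter(app for app, _, _ in calls),
        "methods": Counter(method for _, method, _ in calls),
        "hours": Counter(hour for _, _, hour in calls),
    }


if __name__ == "__main__":
    summary = summarize(SAMPLE_CALLS)
    for view, counts in summary.items():
        # most_common(1) returns the single highest-count entry for each view.
        print(view, counts.most_common(1))
```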

Congratulations! You have successfully connected your application to a large language model (LLM) as a service and explored the analytics capabilities of the 3Scale API Gateway. You can now use this knowledge to build powerful applications that leverage the capabilities of LLMs.