Performance, Scalability, and Troubleshooting

Introduction

Our journey begins with a major challenge: the annual "Mega-Sale Monday" event. Our flagship e-commerce website, now partially migrated to VM-based services on OpenShift Virtualization, is anticipating an unprecedented surge in traffic. Last year, our website crashed due to unexpected traffic spikes, leading to significant lost sales and frustrated customers. This year we are determined to be prepared, but we still need to know how to recognize unexpected issues in our environment and how to address them so that last year's scenario does not repeat itself. In this module you will learn how to discover a high-load scenario on your cluster, how to mitigate and remediate it by scaling resources both vertically and horizontally, and finally how to balance the resources on your cluster for future events.

Credentials for the Red Hat OpenShift Console

Your OpenShift cluster console is available here.

Your local admin login is available with the following credentials:

-

User:

username -

Password:

password

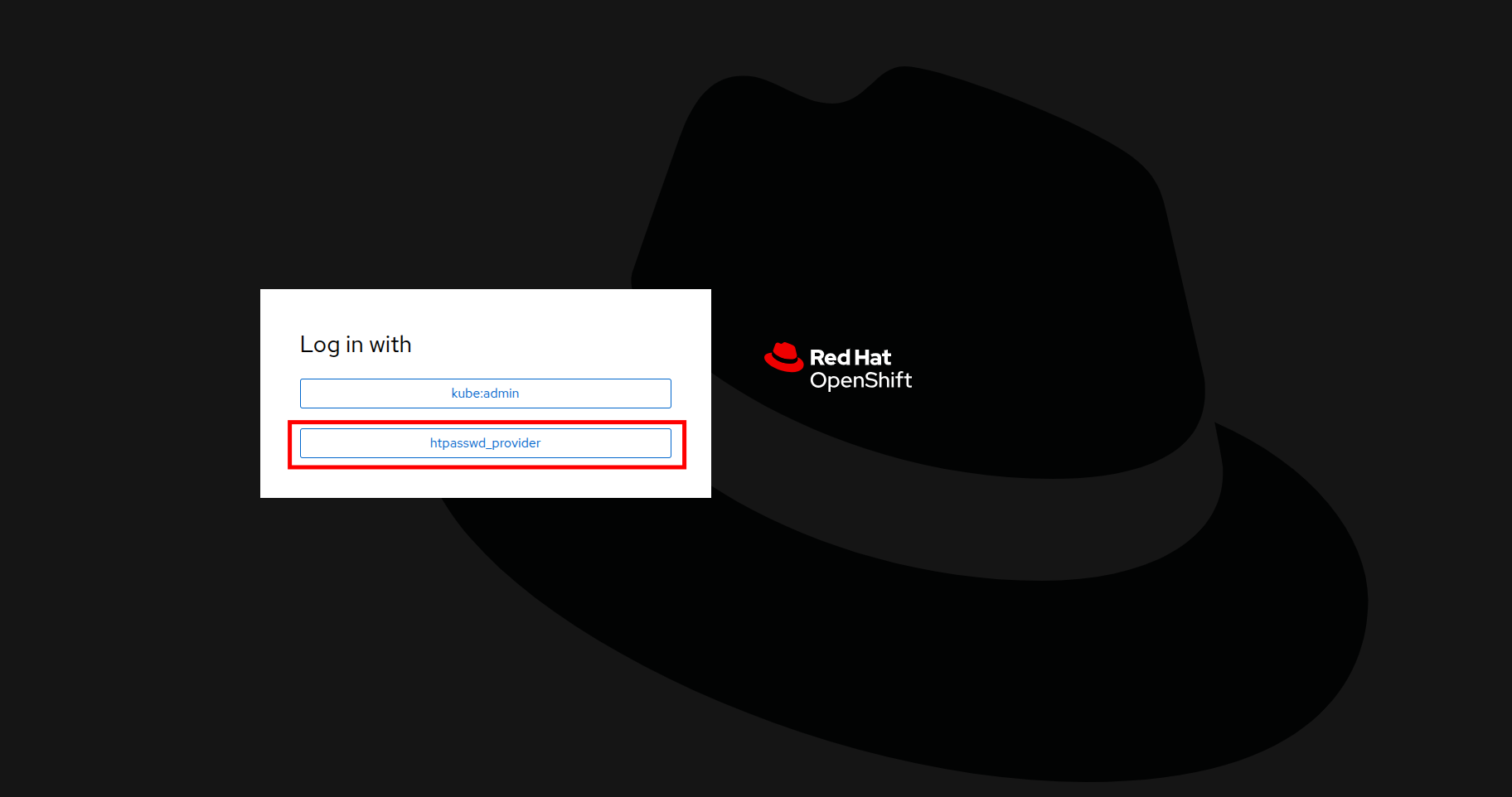

You will first see a page that asks you to choose an authentication provider. Click on htpasswd_provider.

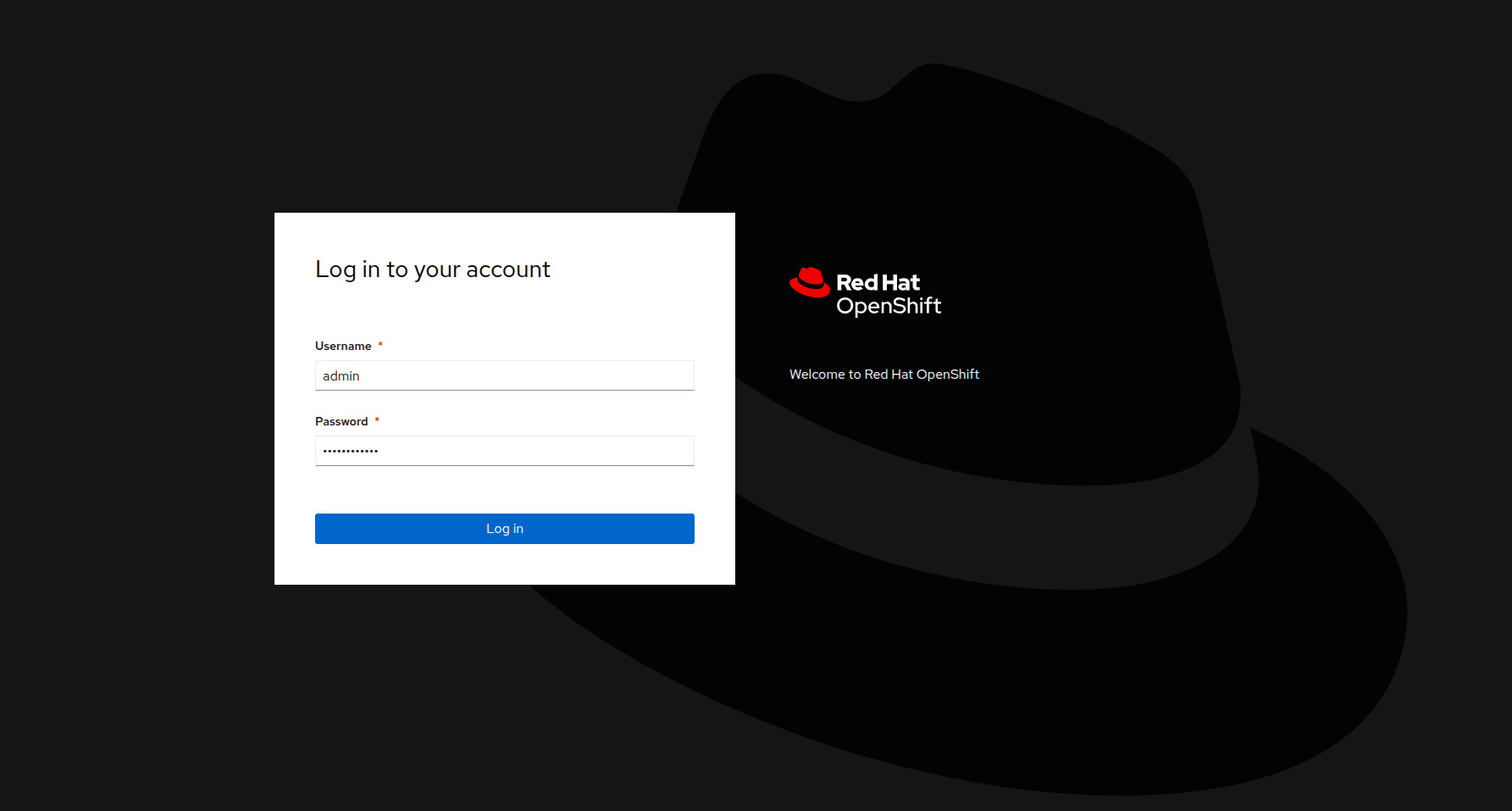

You will then be presented with a login screen where you can copy/paste your credentials.

Module Setup

-

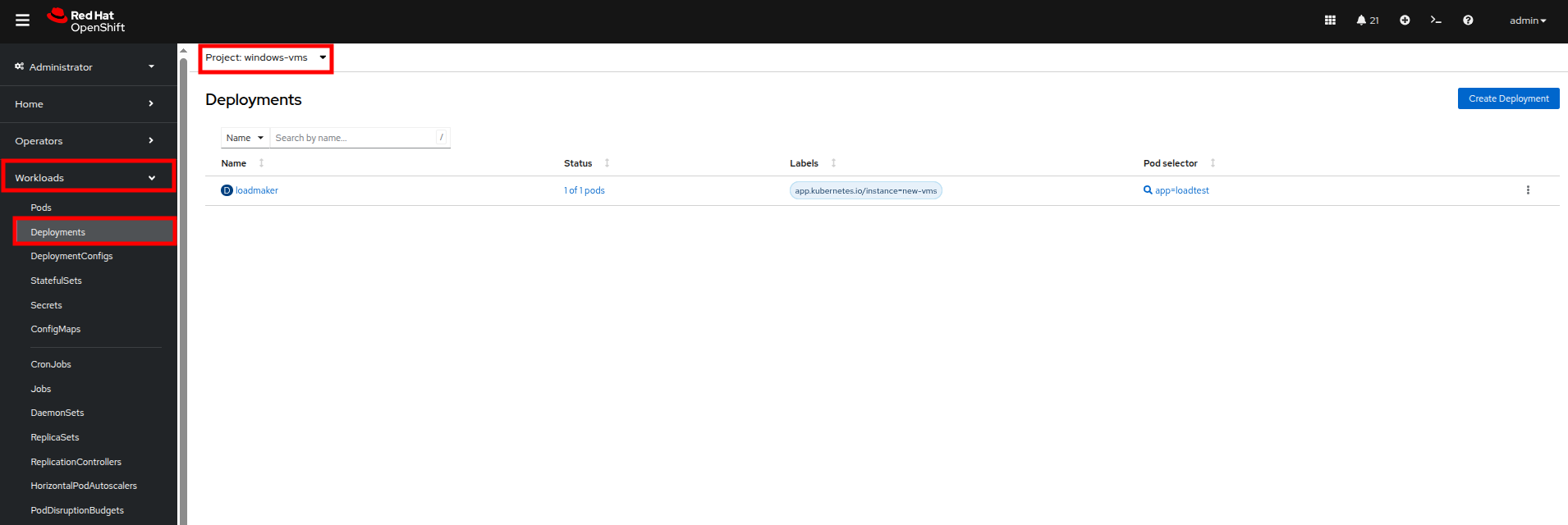

Using the left side navigation menu click on Workloads followed by Deployments.

-

Make sure you are in Project: webapp-vms.

-

You should see one pod deployed here called loadmaker.

-

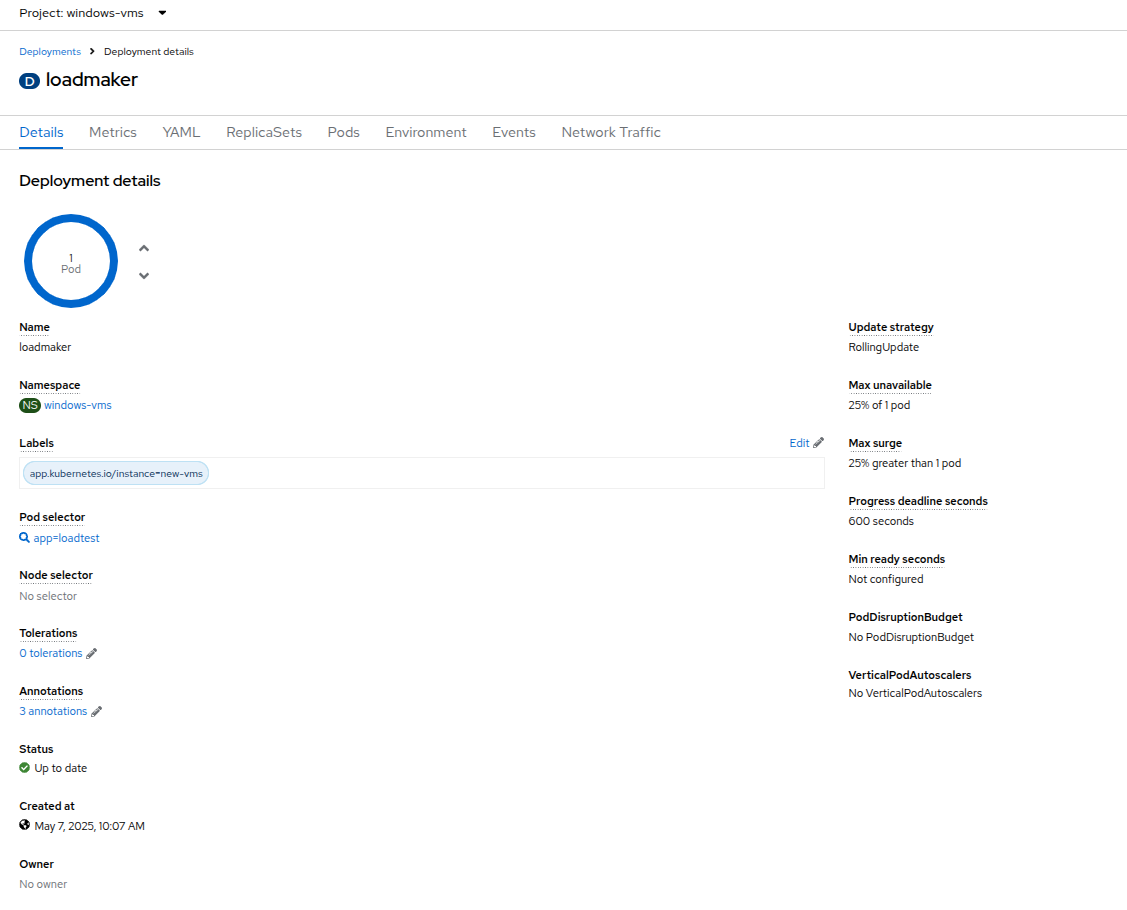

Click on loadmaker and it will bring up the Deployment details page.

-

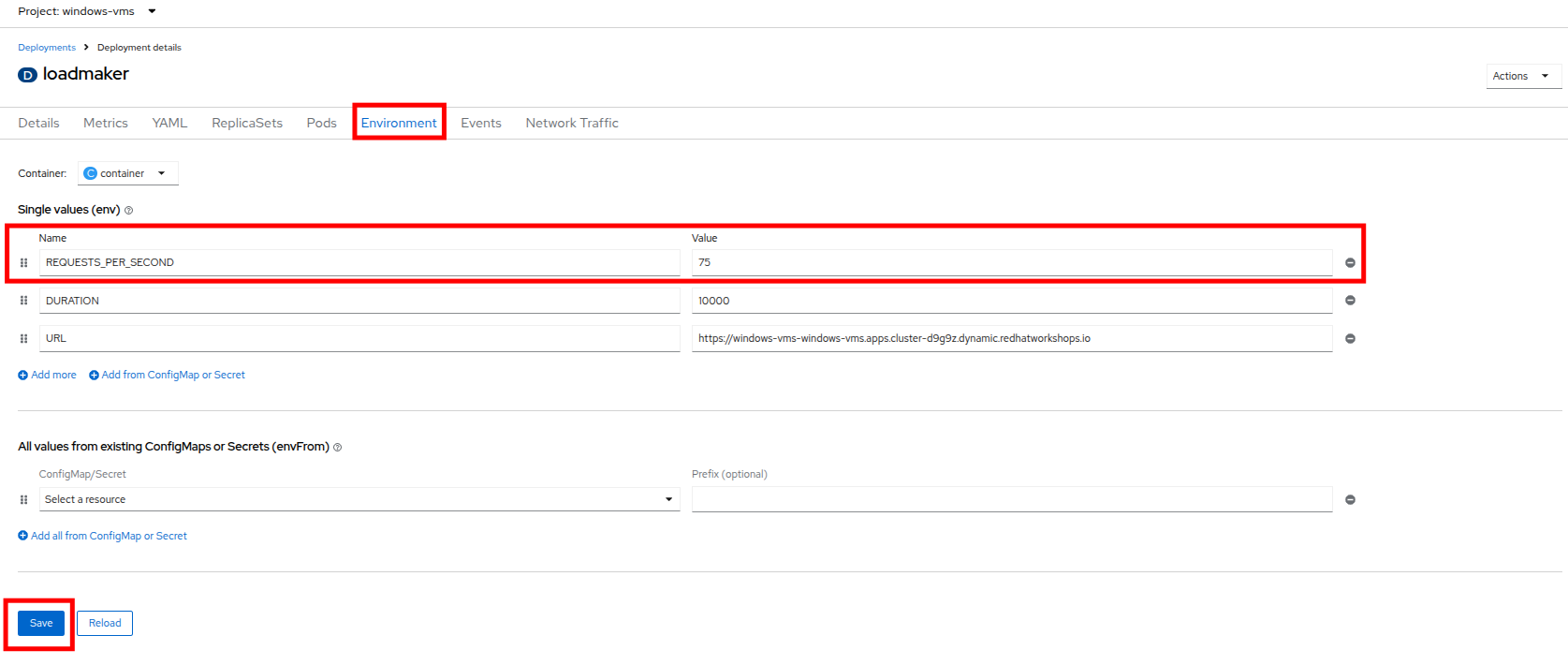

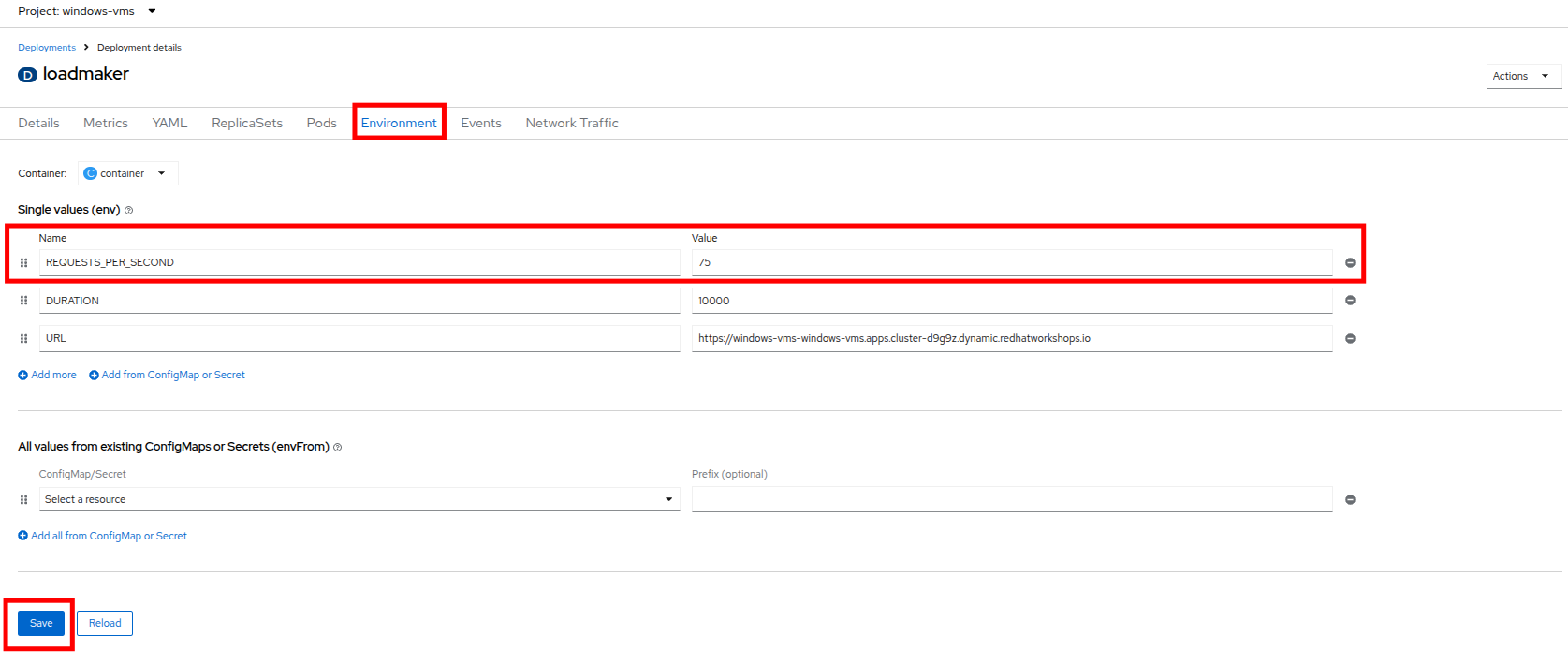

Click on Environment. You will see a field for REQUESTS_PER_SECOND. Change the value in the field to 75 if it is not already set, and click the Save button at the bottom.

-

Now let's go check on the VMs that we are generating load against.

-

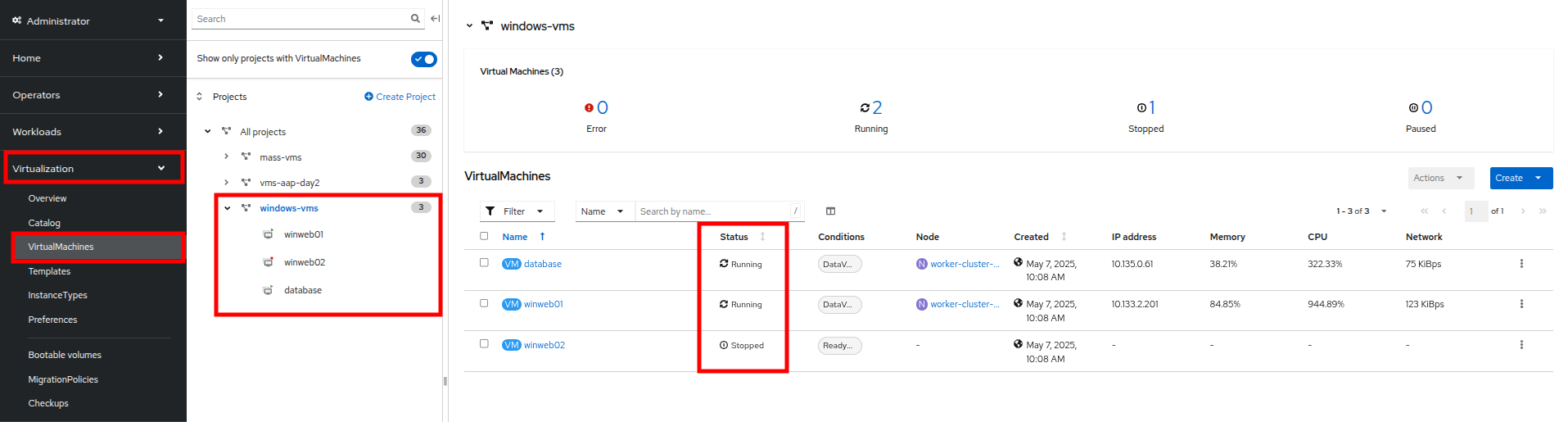

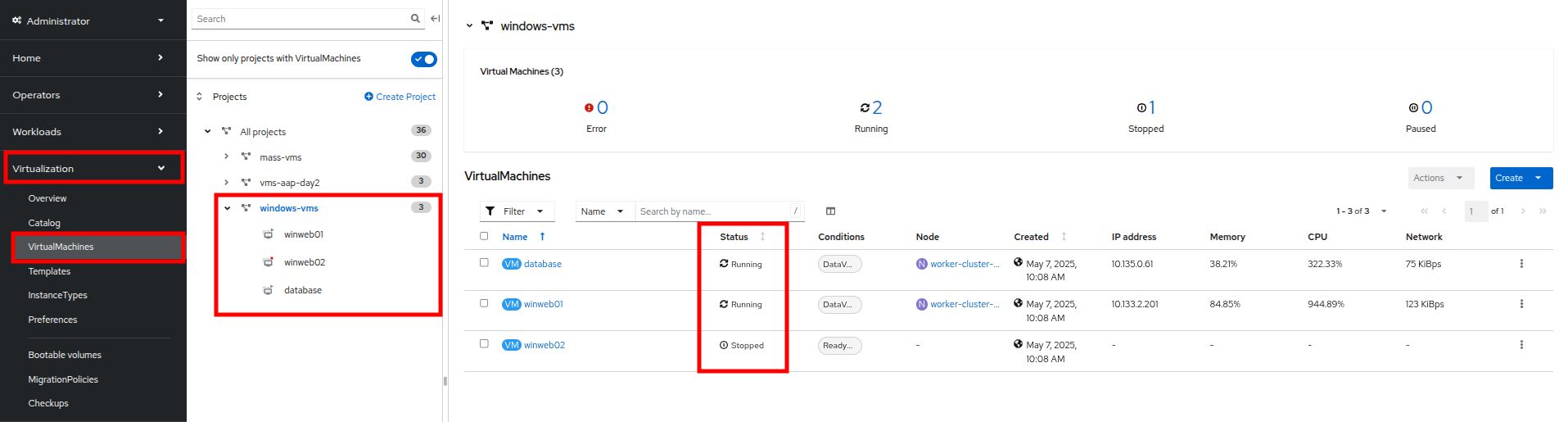

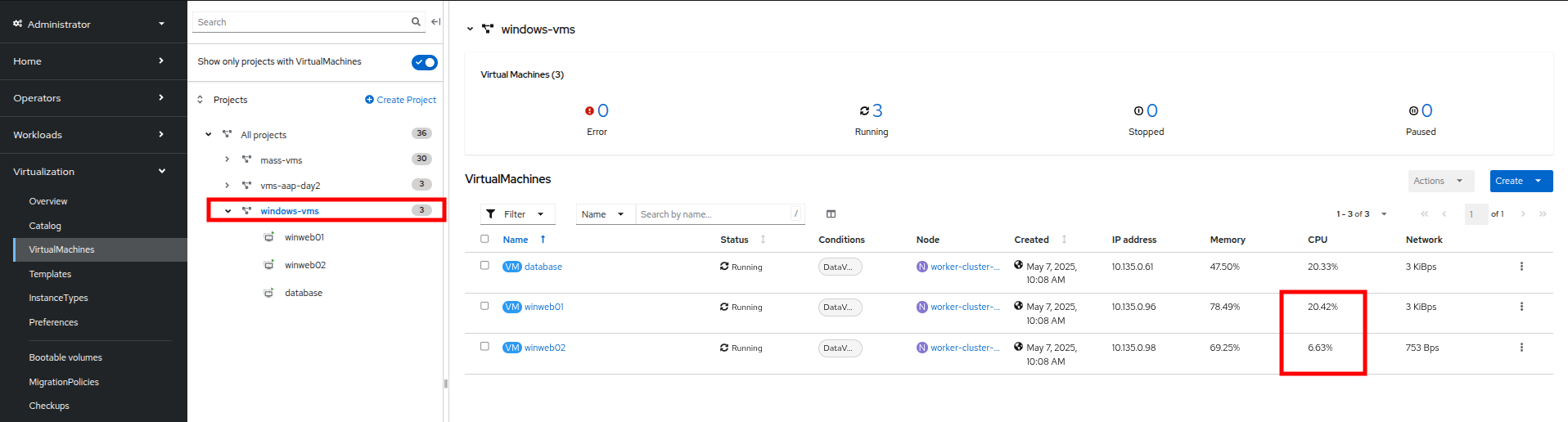

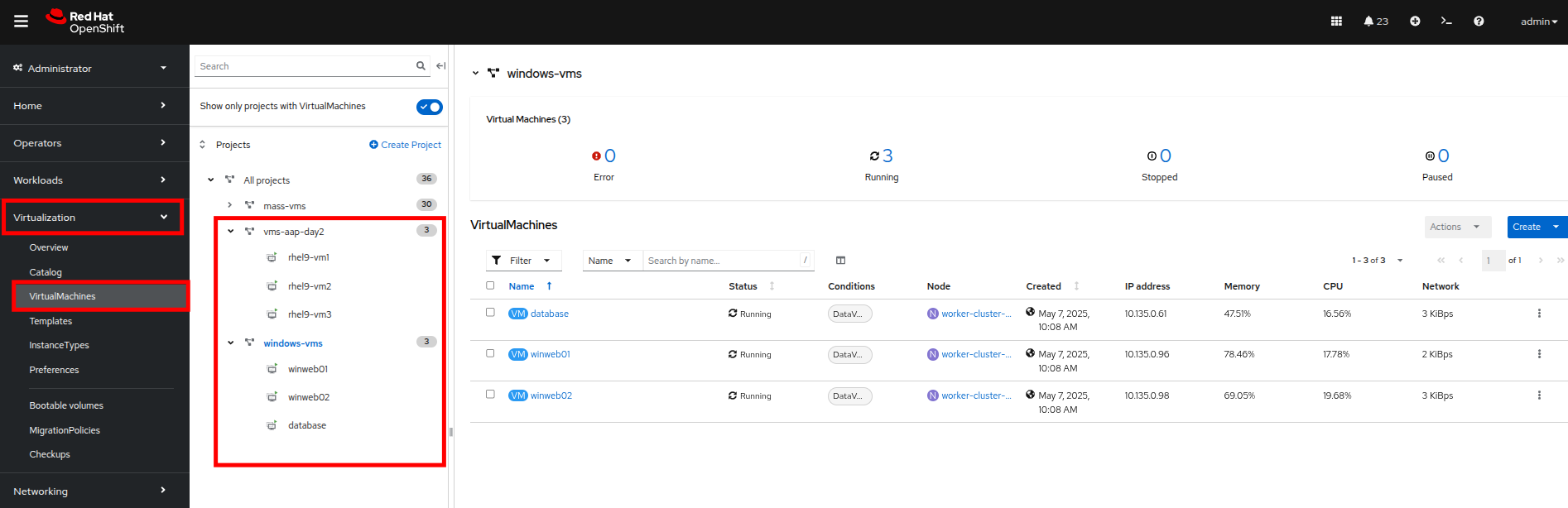

On the left side navigation menu click on Virtualization and then VirtualMachines. Select the windows-vms project in the center column. You should see three virtual machines: winweb01, winweb02, and database.

At this point in the lab only database and winweb01 should be powered on. If they are off, please power them on now. Do not power on winweb02 for the time being.

Enable and Explore Alerts, Graphs, and Logs

Another important task for administrators is assessing cluster performance. Performance metrics can be gathered from the nodes themselves or from the workloads running within the cluster. OpenShift has a number of built-in tools that assist with generating alerts, aggregating logs, and producing graphs that can help an administrator visualize the performance of their cluster.

Node Alerts and Graphs

To begin, let's look at the metrics for the nodes that make up our cluster.

-

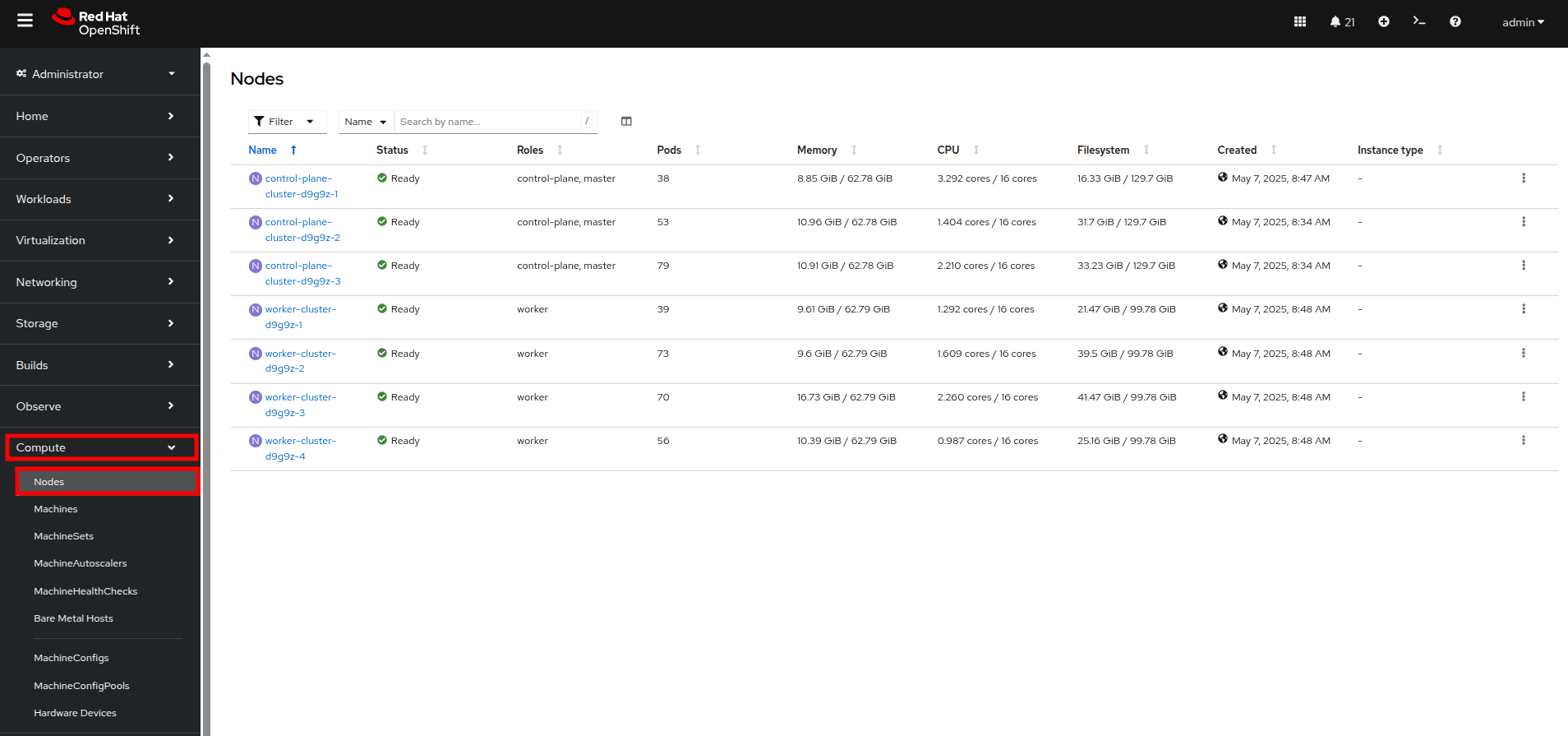

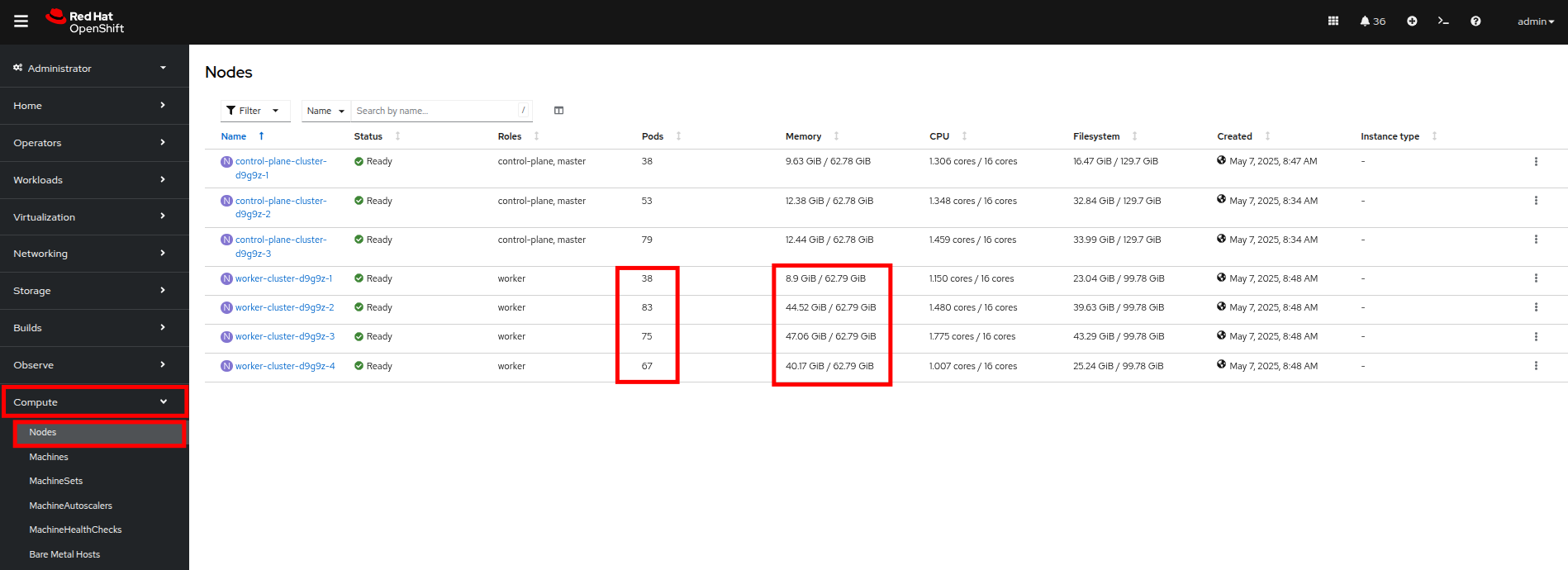

On the left side navigation menu click on Compute, and then click on Nodes.

-

From the Nodes page, you can see each node in your cluster along with its status, role, the number of pods it is currently hosting, and physical attributes like memory and CPU utilization.

-

Click on worker node 4 in your cluster. The Node details page comes up, where you can see more detailed information about the node.

-

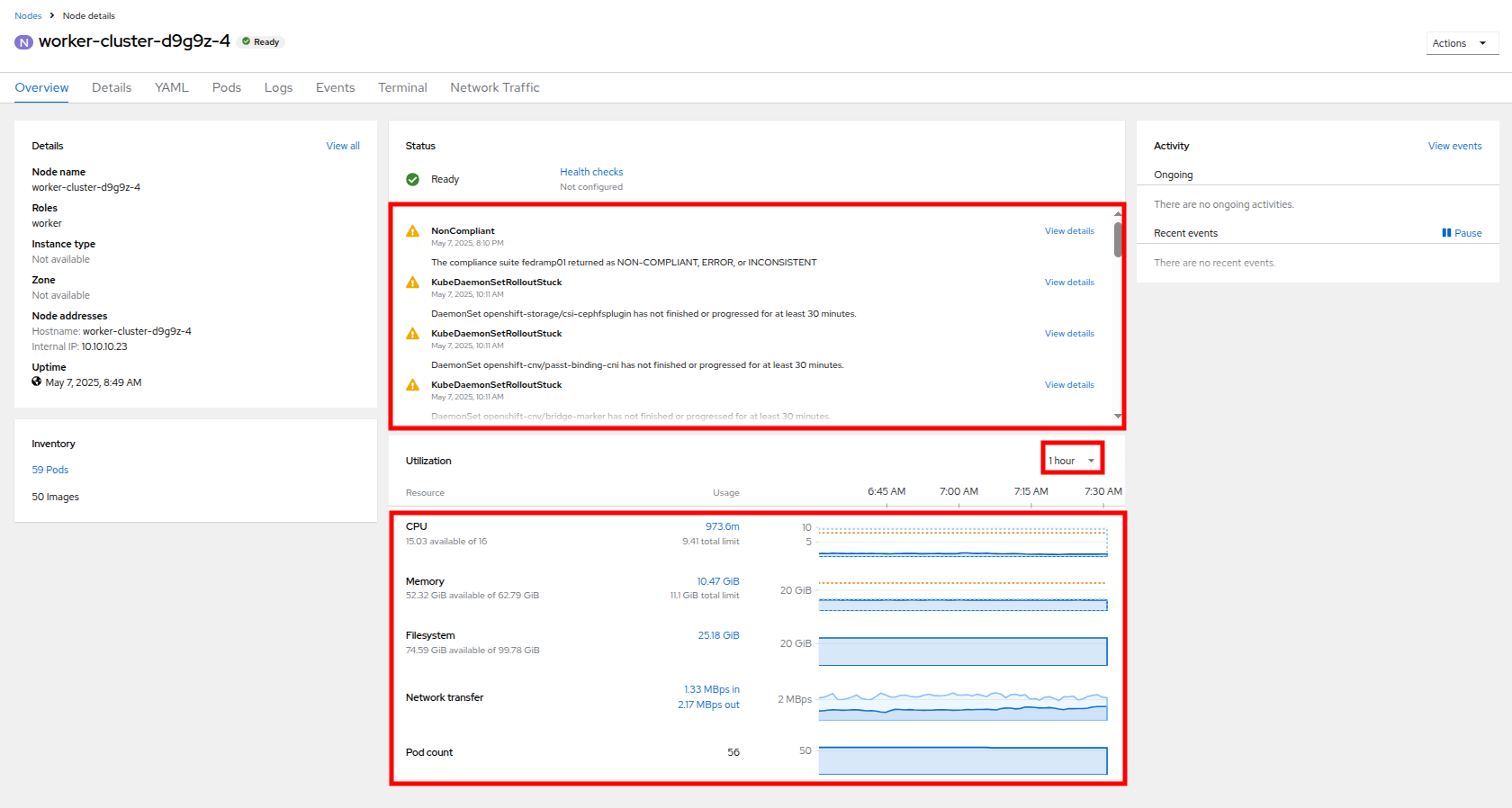

The page shows alerts that are being generated by the node at the top-center of the screen, and provides graphs to help visualize the utilization of the node by displaying CPU, Memory, Storage, and Network Throughput graphs at the bottom-center of the screen.

-

You can change the review period for these graphs to periods of 1, 6, or 24 hours by clicking on the dropdown at the top-right of the utilization panel.

Virtual Machine Graphs

Outside of the physical cluster resources, it’s also very important to be able to visualize what’s going on with our applications and workloads like virtual machines. Let's examine the information we can find out about these.

| For this part of the lab, we are going to use an application to generate additional load on some of our virtual machines so that we can see how graphs are generated. |

-

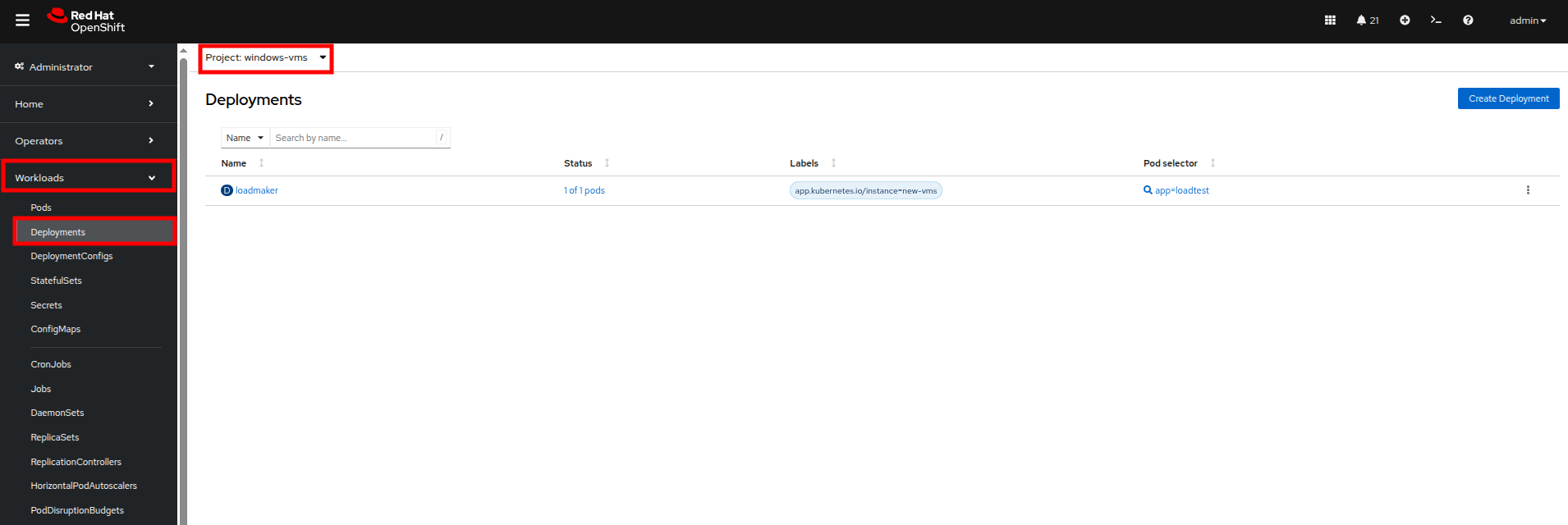

Using the left side navigation menu click on Workloads followed by Deployments.

-

Make sure you are in Project: windows-vms.

-

You should see one pod deployed here called loadmaker.

-

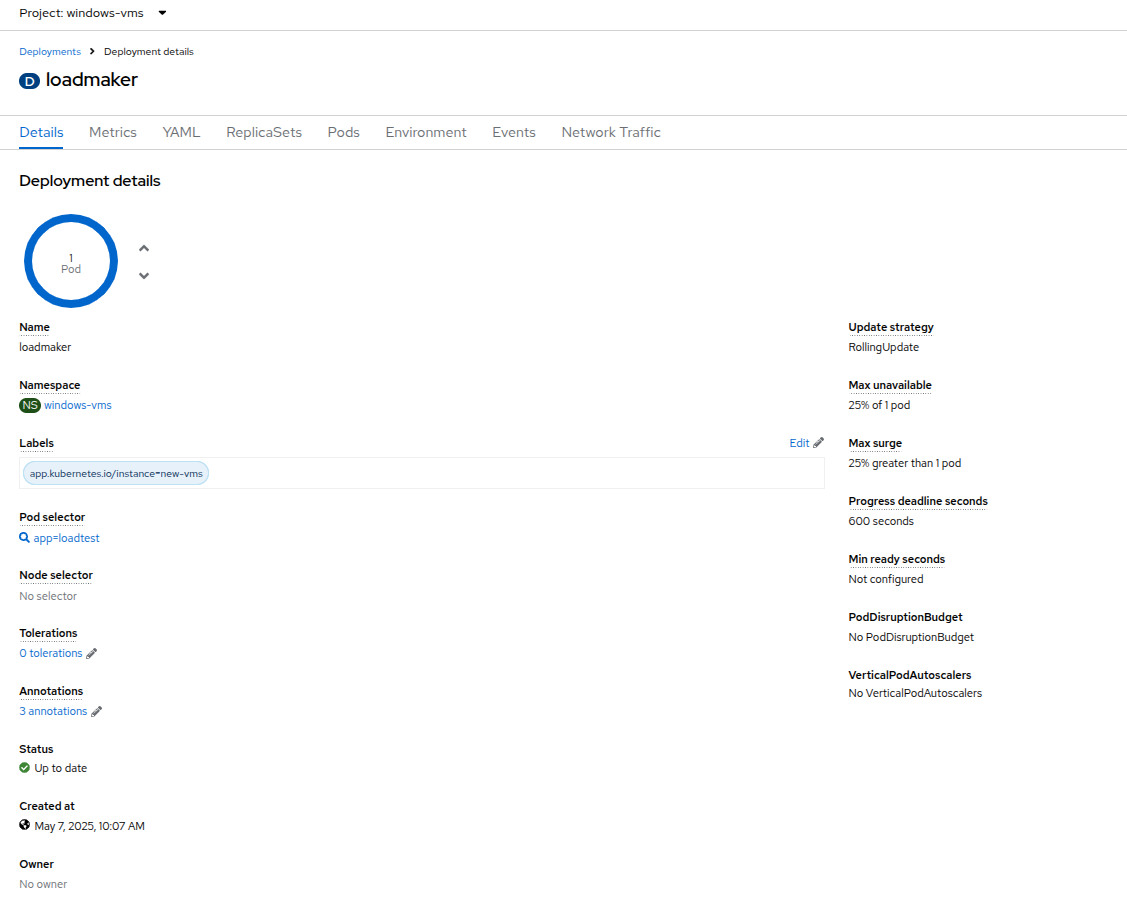

Click on loadmaker and it will bring up the Deployment details page.

-

Click on Environment. You will see a field for REQUESTS_PER_SECOND. Change the value in the field to 75 and click the Save button at the bottom.

-

Now let's go check on the VMs that we are generating load against.

-

On the left side navigation menu click on Virtualization and then VirtualMachines. Select the windows-vms project in the center column. You should see three virtual machines: winweb01, winweb02, and database.

At this point in the lab only database and winweb01 should be powered on. If they are off, please power them on now. Do not power on winweb02 for the time being.

-

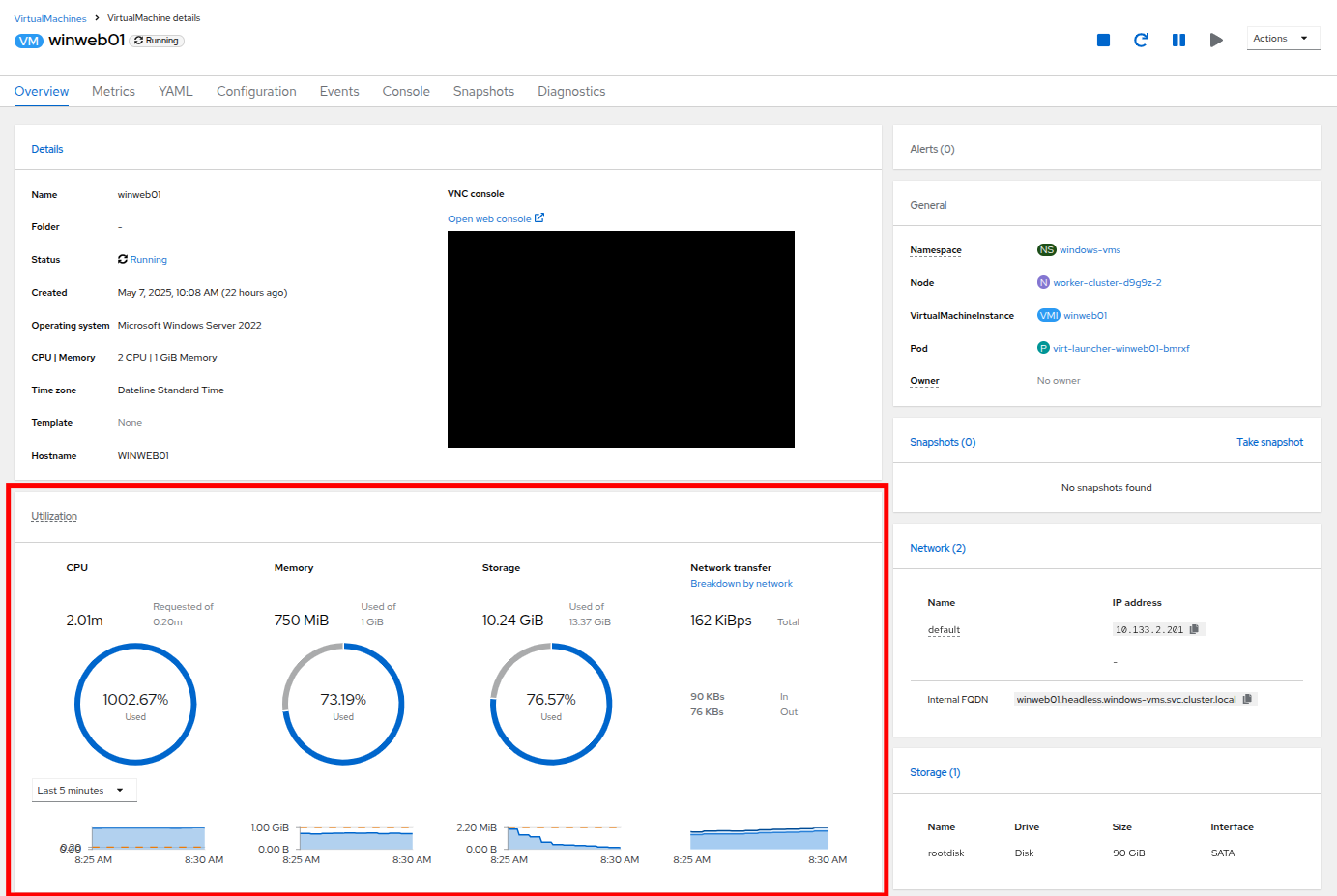

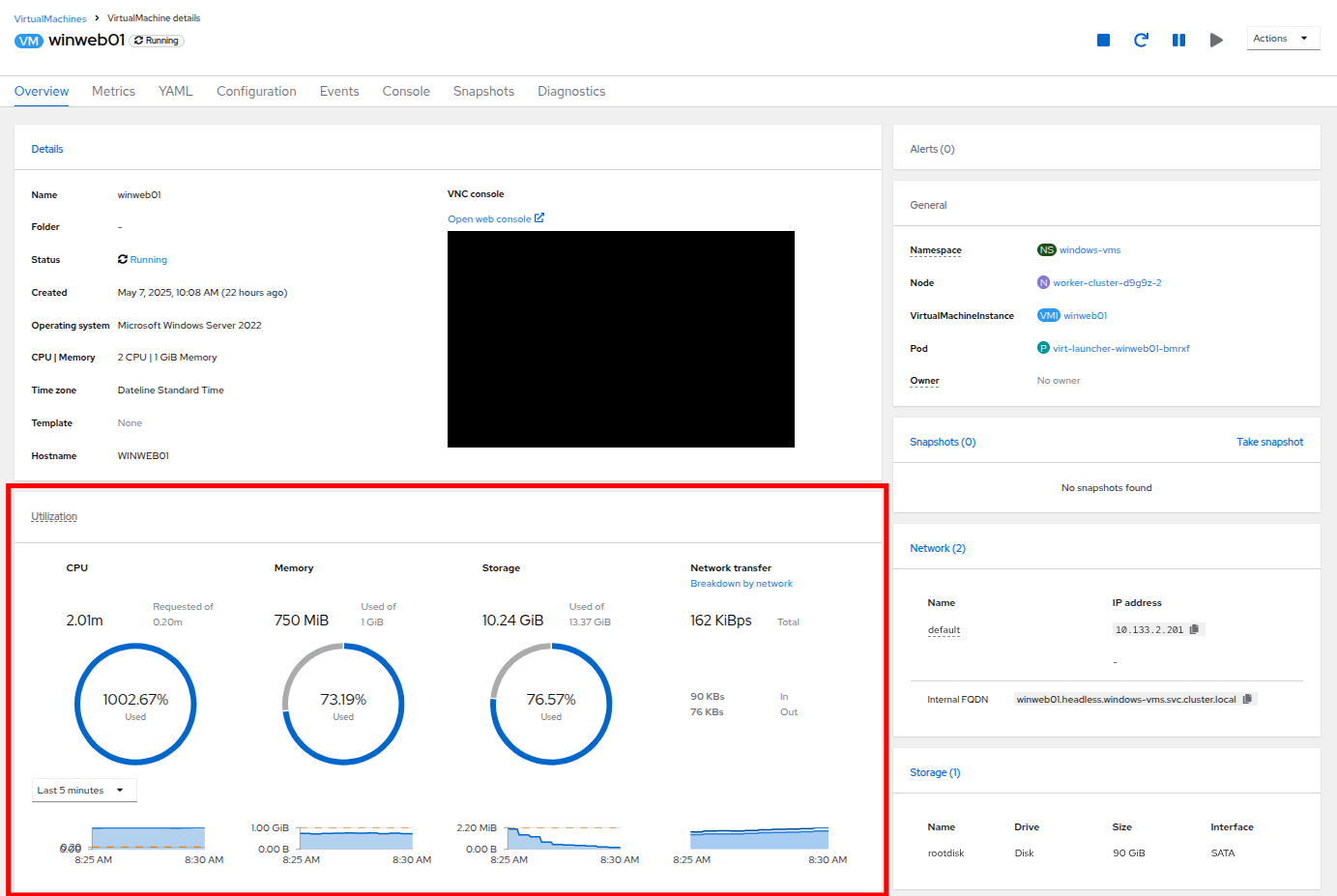

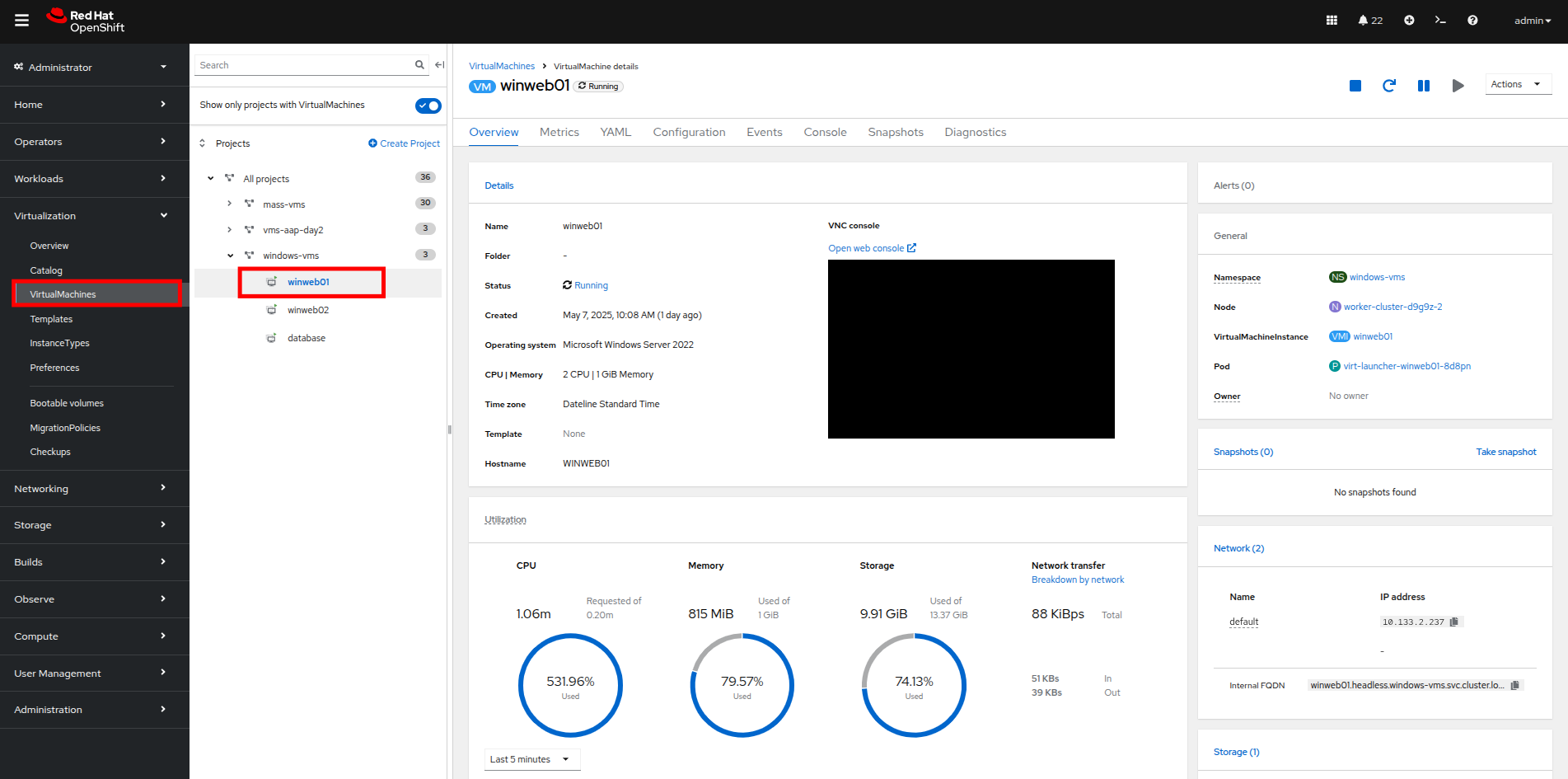

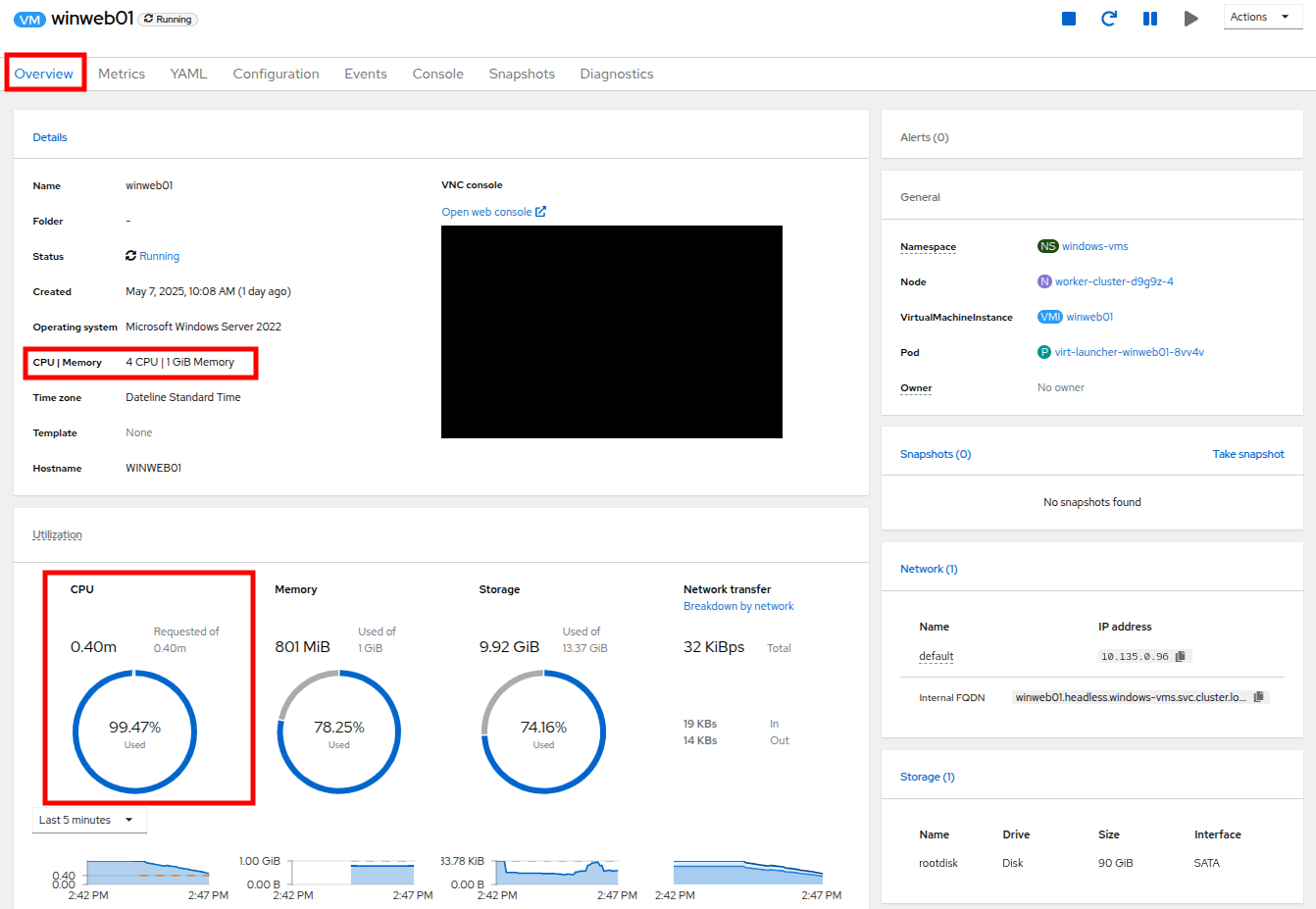

Once the virtual machines are running, click on winweb01. This will bring you to the VirtualMachine details page.

-

On this page there is a Utilization section that shows the following information:

-

The basic status of the VM resources (cpu, memory, storage, and network transfer) which are updated every 15 seconds.

-

A number of small graphs which detail the VM performance over a recent time period. By default this is the last 5 minutes, but we can select a value up to 1 week from the drop down menu.

-

-

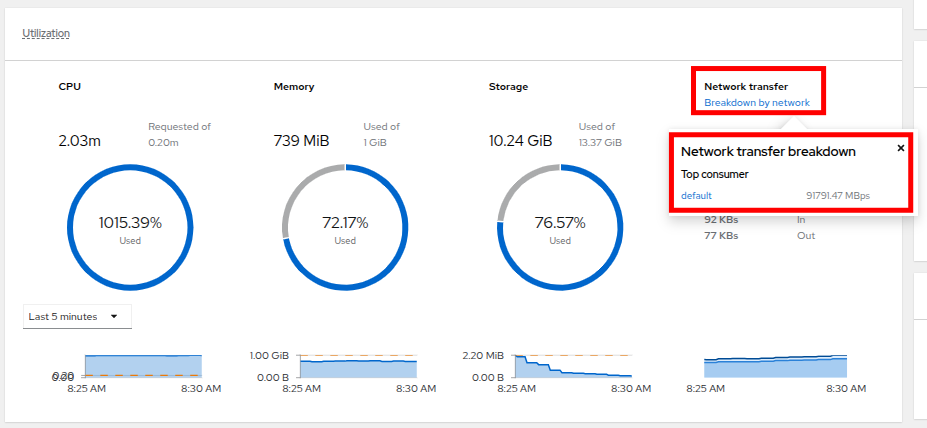

Taking a closer look at Network Transfer by clicking on Breakdown by network, you can see how much network traffic is passing through each network adapter assigned to the virtual machine. In this case, there is only the one default network adapter.

-

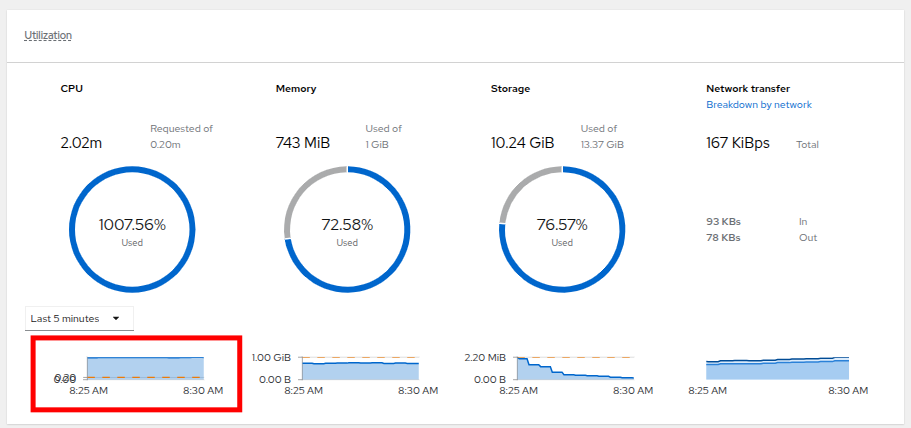

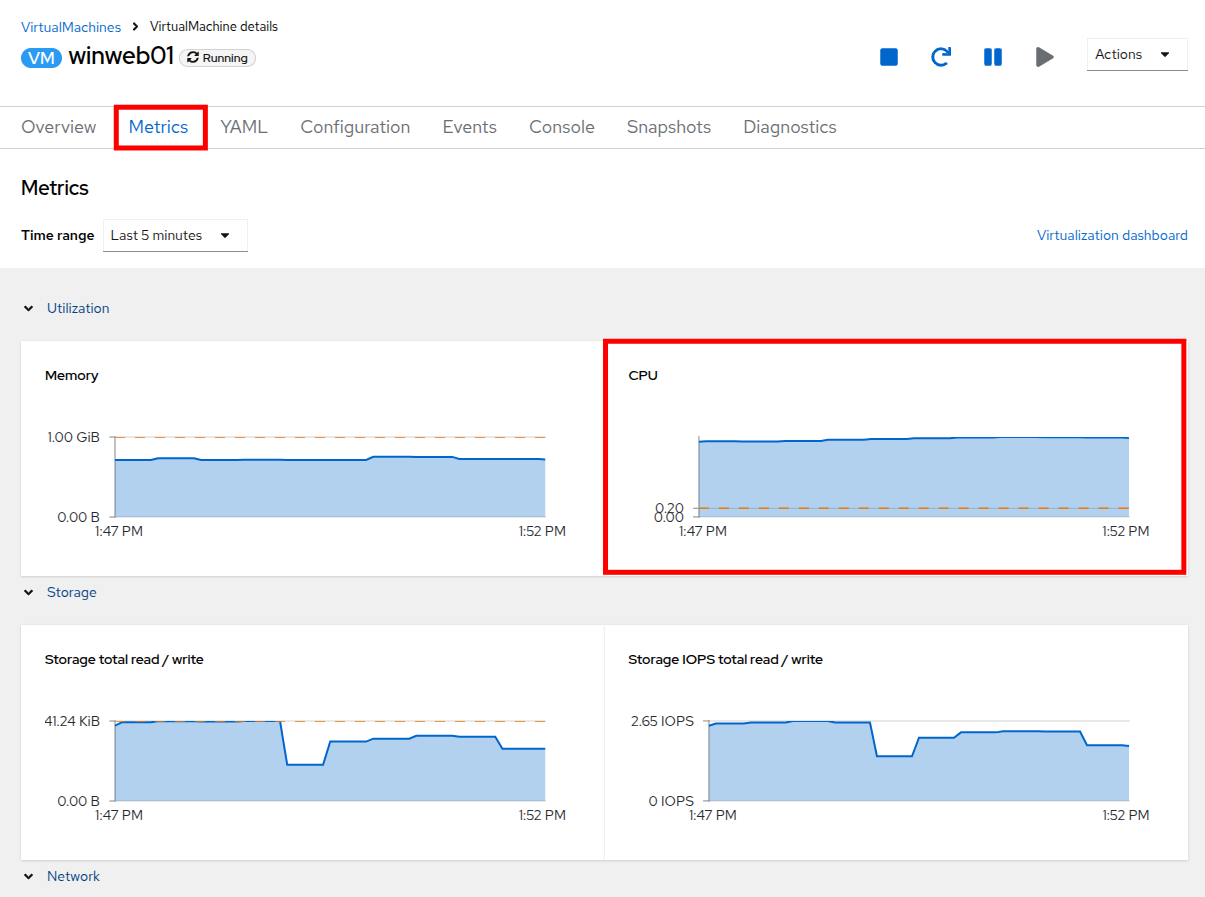

When you are done looking at the network adapter, click on the graph showing CPU utilization.

-

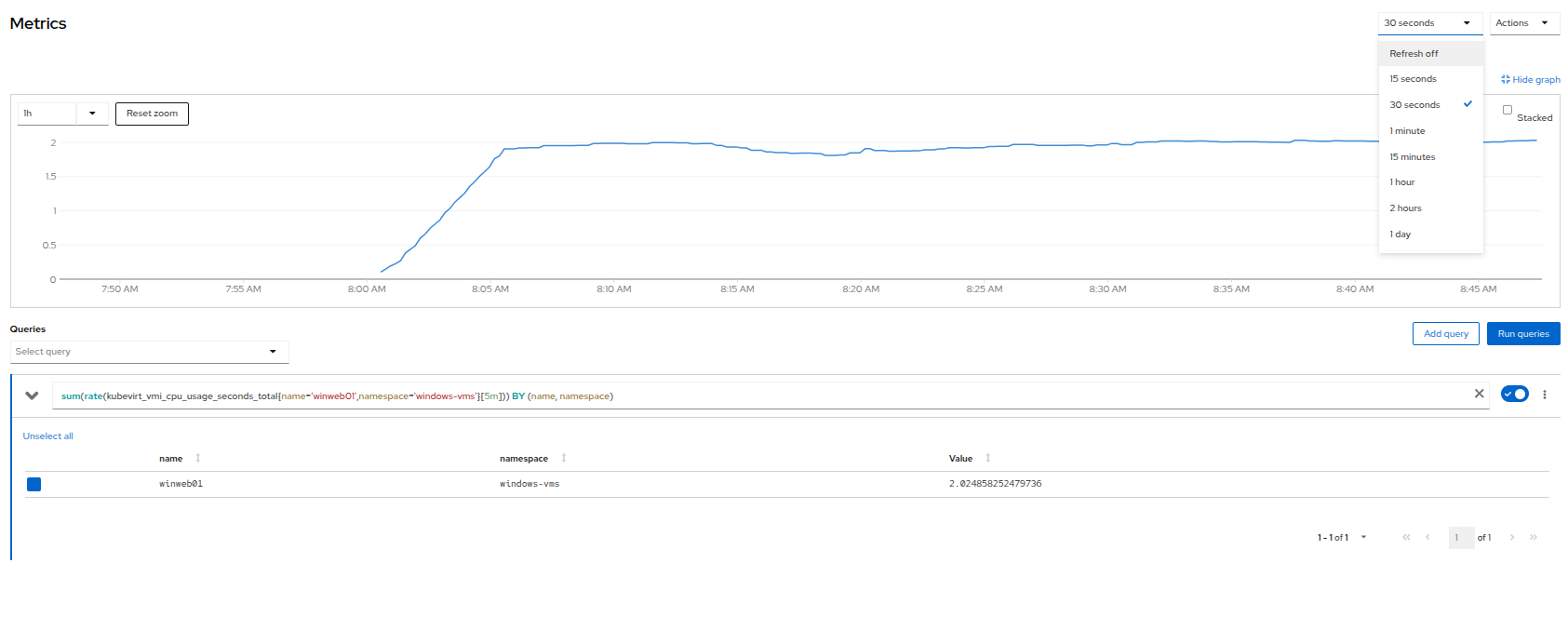

This will launch the Metrics window, which will allow you to see more details about the CPU utilization. By default this is set to 30 minutes, but you can click on the drop down and change that to 1 hour to see the spike in the graph from when we turned on the load generator.

-

You can also modify the refresh timing in the upper right corner.

-

You can also see the query that is being run against the VM in order to generate this graph, and create your own using the Add Query button.

-

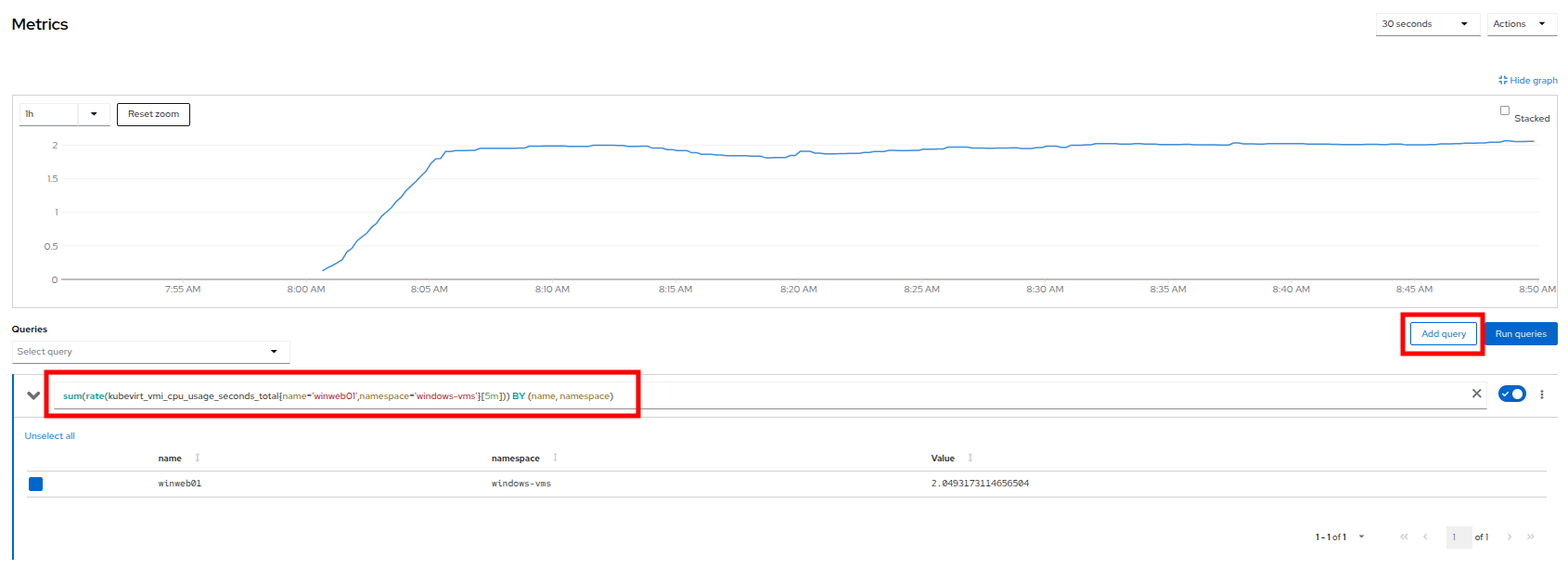

As an exercise, let's add a custom query that will show the amount of vCPU time spent in IO/wait status.

-

Click the Add Query button, and on the new line that appears, paste the following query:

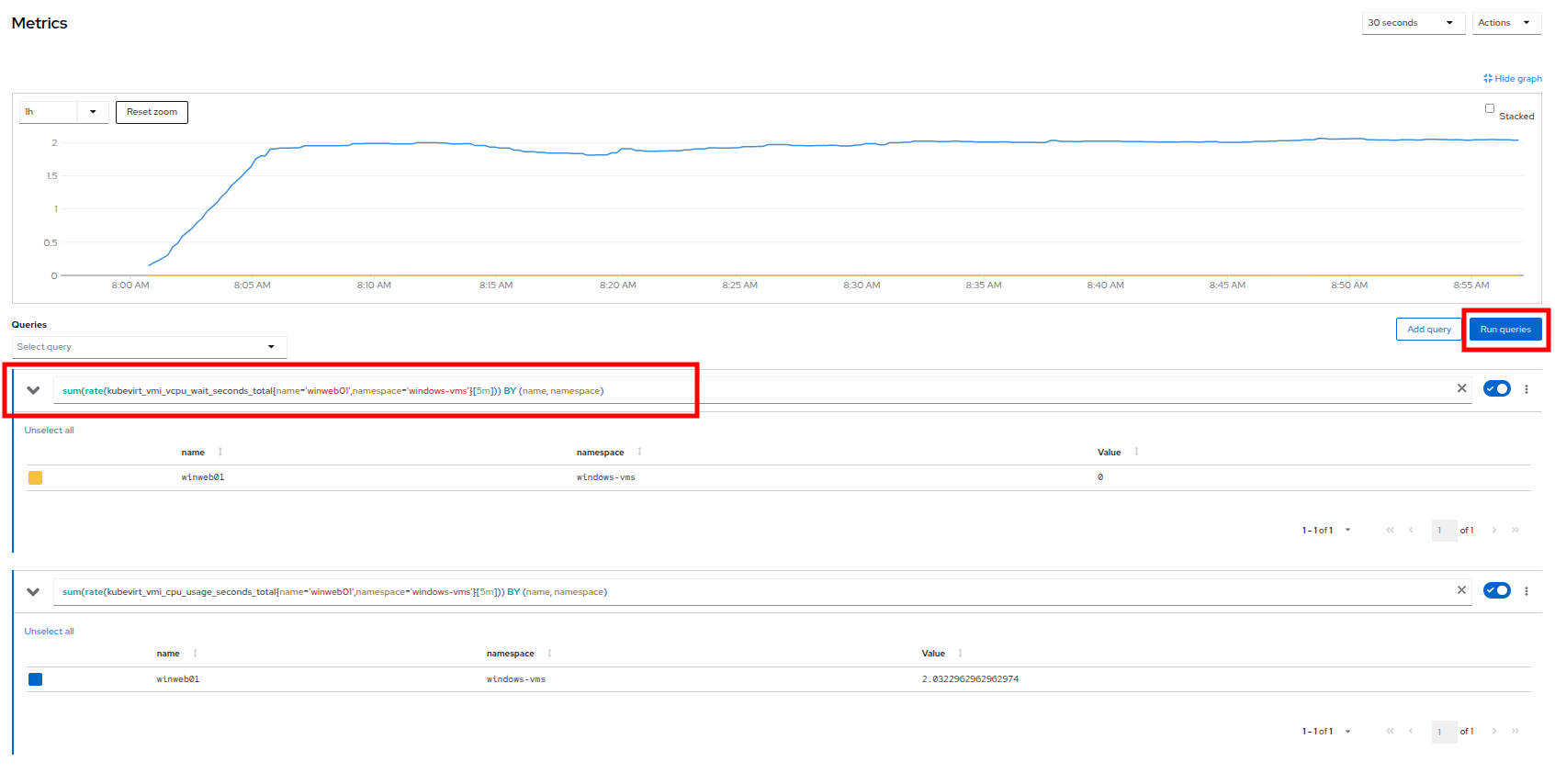

sum(rate(kubevirt_vmi_vcpu_wait_seconds_total{name='winweb01',namespace='windows-vms'}[5m])) BY (name, namespace)

-

Click the Run queries button and see how the graph updates. A new line will appear in the graph, showing that the vCPU on the guest is constantly under load.
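The query pasted above follows a regular pattern: a KubeVirt counter metric, a label selector for the VM name and namespace, a time window, and a sum grouped by those labels. As a minimal sketch, the Python helper below (the function name `vmi_rate_query` is hypothetical and not part of the lab) builds the same string, which can be handy when you want the same graph for several VMs or metrics.

```python
def vmi_rate_query(metric: str, name: str, namespace: str, window: str = "5m") -> str:
    """Build a PromQL query summing the per-second rate of a VMI counter metric.

    The layout mirrors the query used in this lab; only the metric name,
    VM name, namespace, and rate window vary.
    """
    selector = f"{metric}{{name='{name}',namespace='{namespace}'}}"
    return f"sum(rate({selector}[{window}])) BY (name, namespace)"


# Reproduces the IO/wait query from this step.
print(vmi_rate_query("kubevirt_vmi_vcpu_wait_seconds_total", "winweb01", "windows-vms"))
```

The helper only produces text. You still paste the result into the Add Query field and click Run queries as described above.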

Examining Dashboards

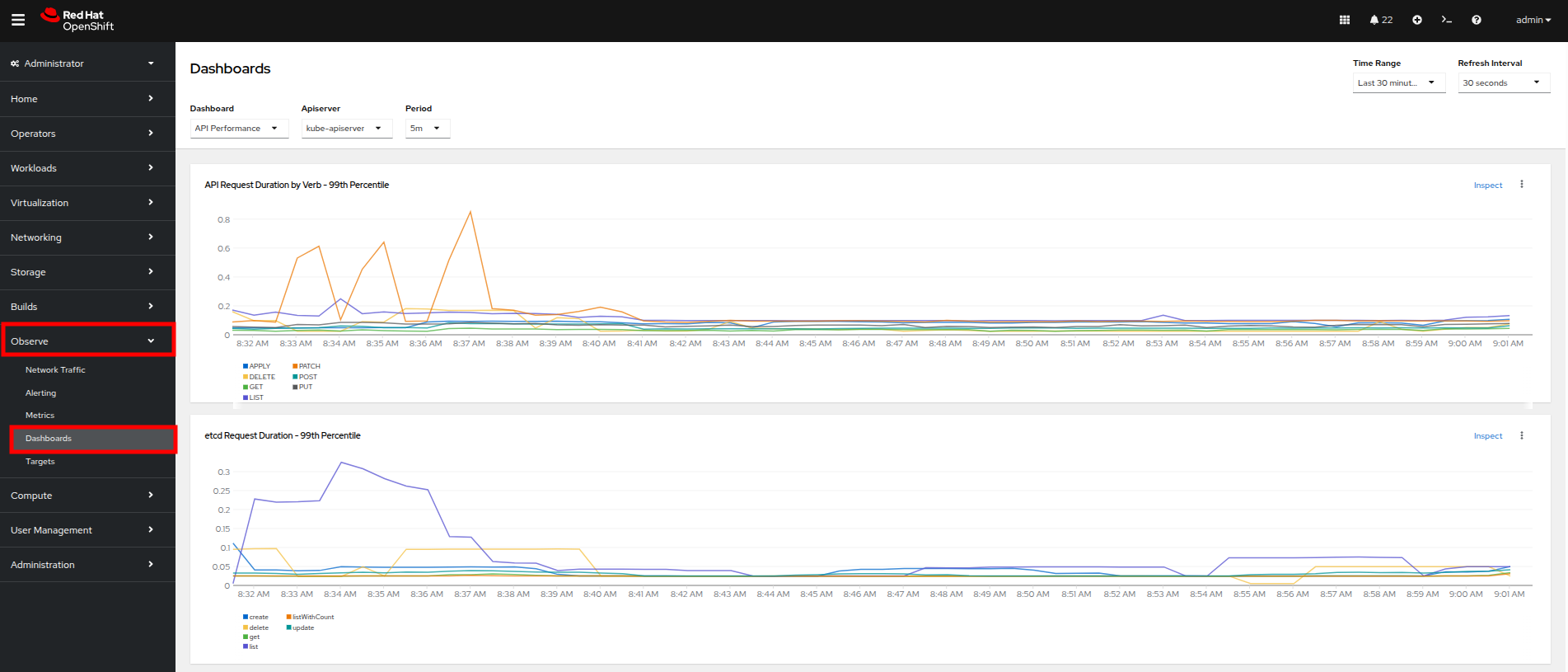

Another powerful feature of OpenShift is being able to use the Cluster Observability Operator to display detailed dashboards of cluster performance. Let's check some of those out now.

-

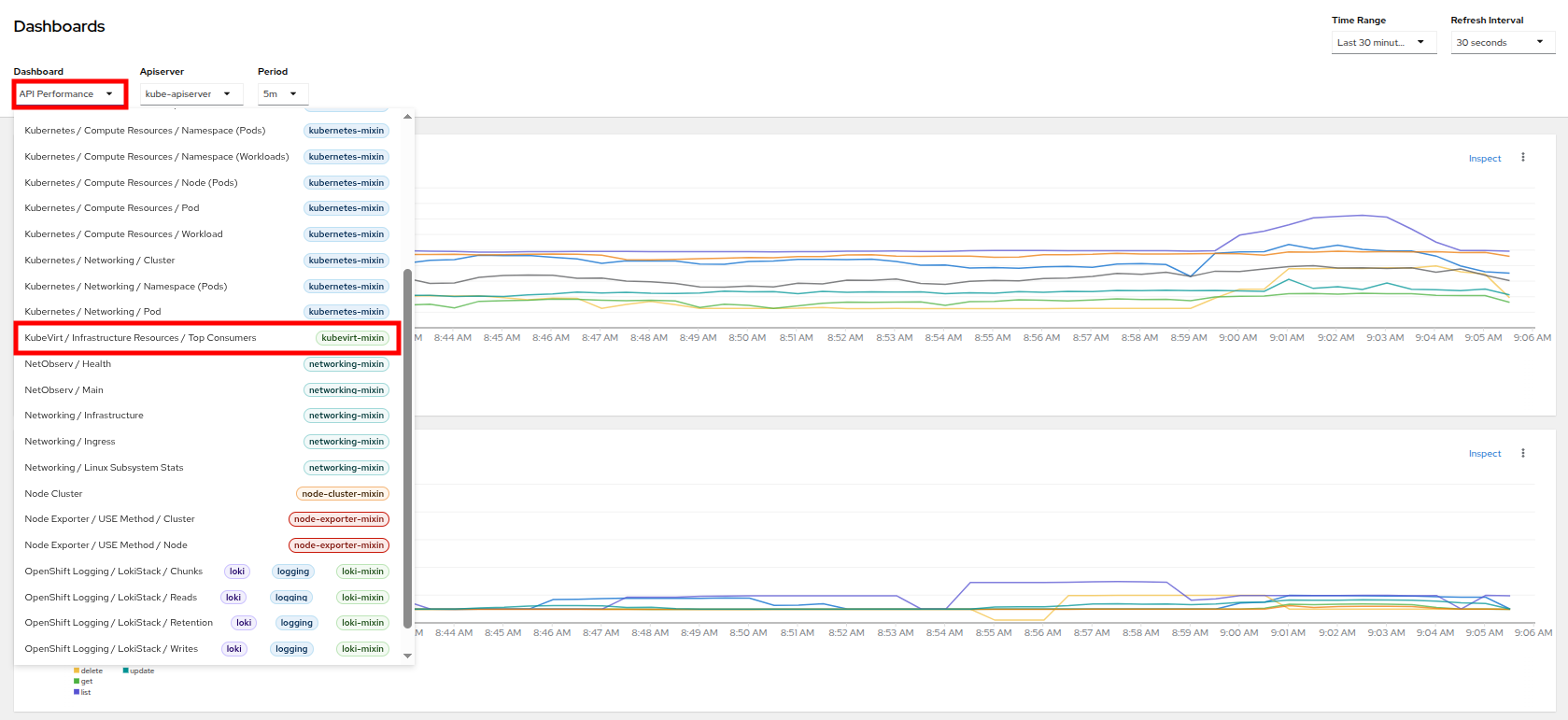

From the left side navigation menu, click on Observe, and then Dashboards.

-

Click on the API Performance dropdown and search for KubeVirt/Infrastructure Resources/Top Consumers.

-

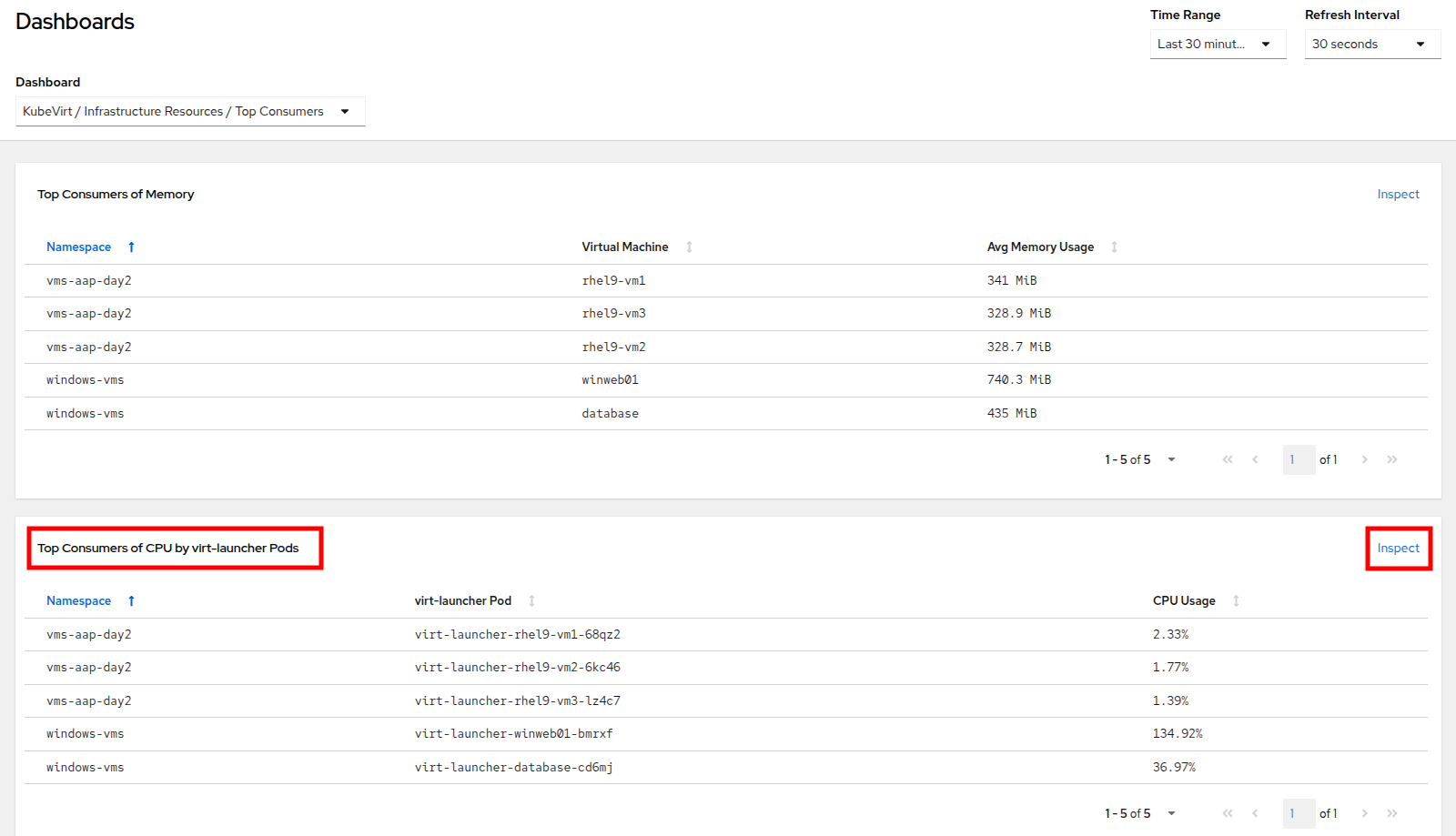

This dashboard will display the top consumers for all of the virtual machines running on your cluster. Look at the Top Consumers of CPU by virt-launcher Pods panel and click the Inspect link in the upper right corner.

-

You can select the VMs you want to see in the graph by checking the boxes next to each VM displayed.

-

Try it now by turning some of the lines off. The associated colored line will disappear from the graph when disabled.

Now that we have completed this section determining how to locate and display alerts, performance metrics, and graphs about our nodes and workloads, we can leverage these skills in the future in order to troubleshoot our own OpenShift Virtualization environments.

Examining Resource Utilization on Virtual Machines

The winweb01, winweb02, and database servers work together to provide a simple web-based application that load-balances web requests between the two web servers to reduce load and increase performance. At this time, only one webserver is up, and it is under high demand. In this lab section we will see how horizontally scaling the webservers can help reduce load on the VMs, and how to diagnose this using metrics and graphs that are native to OpenShift Virtualization.

-

Click on winweb01 which should currently be running. This will bring you to the VirtualMachine details page.

-

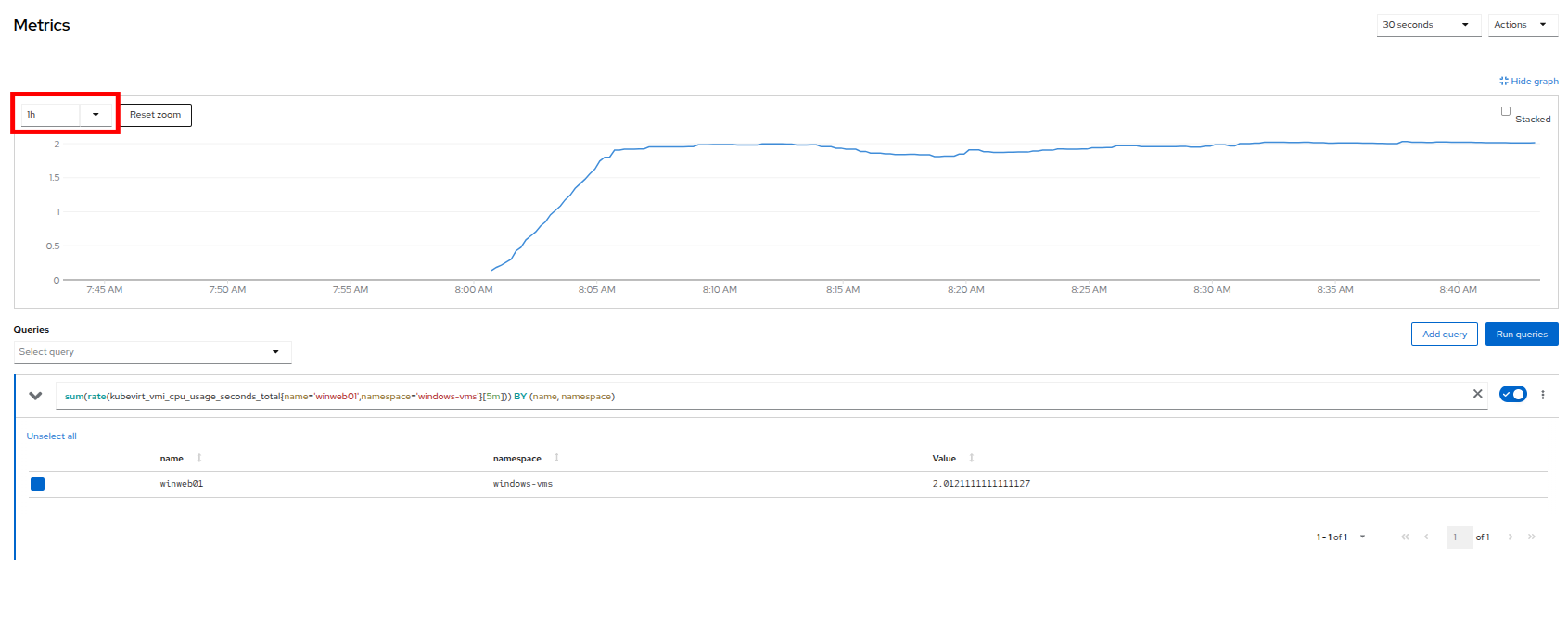

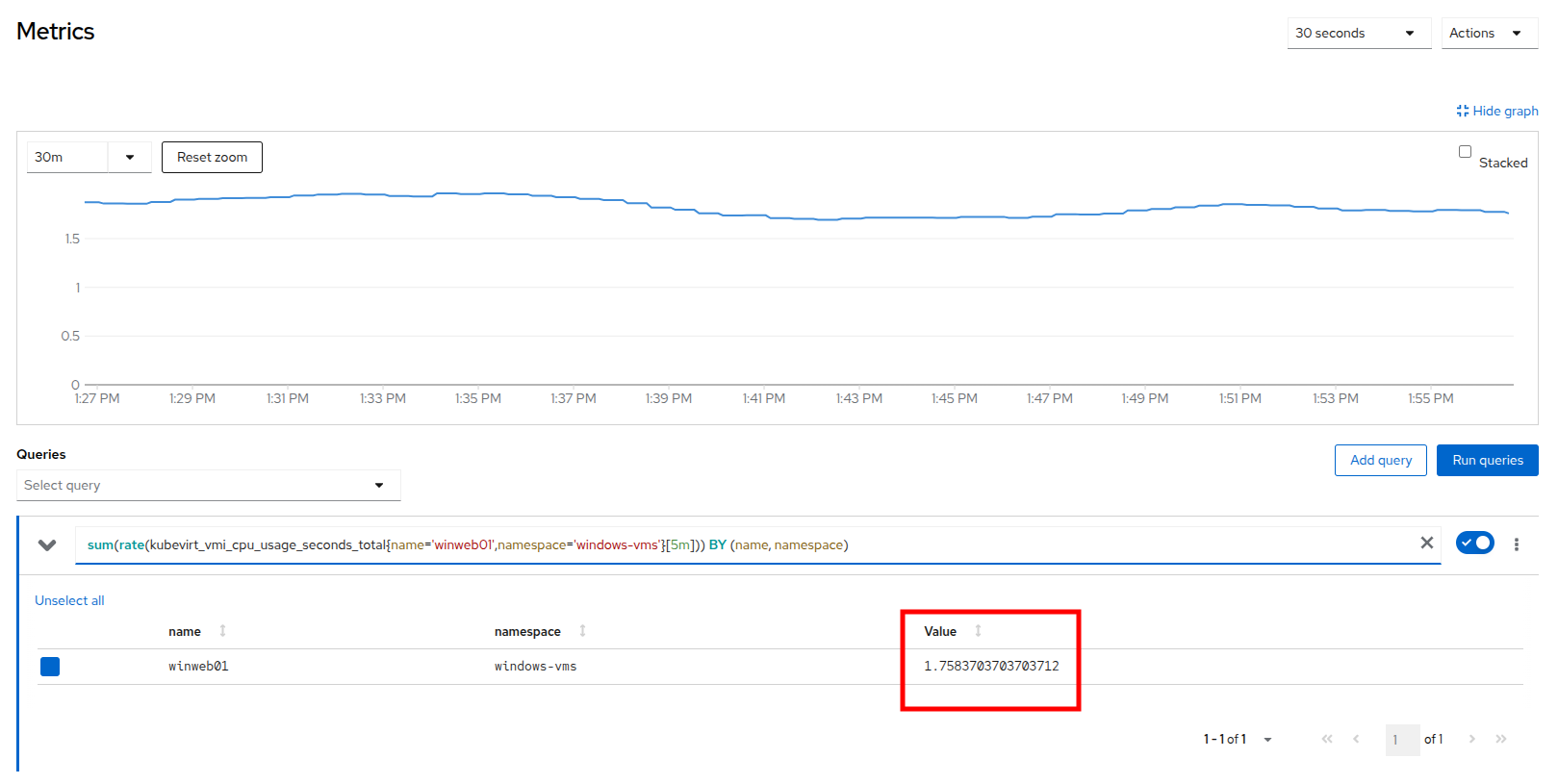

Click on the Metrics tab and take a quick look at the CPU utilization graph. It should be maxed out.

-

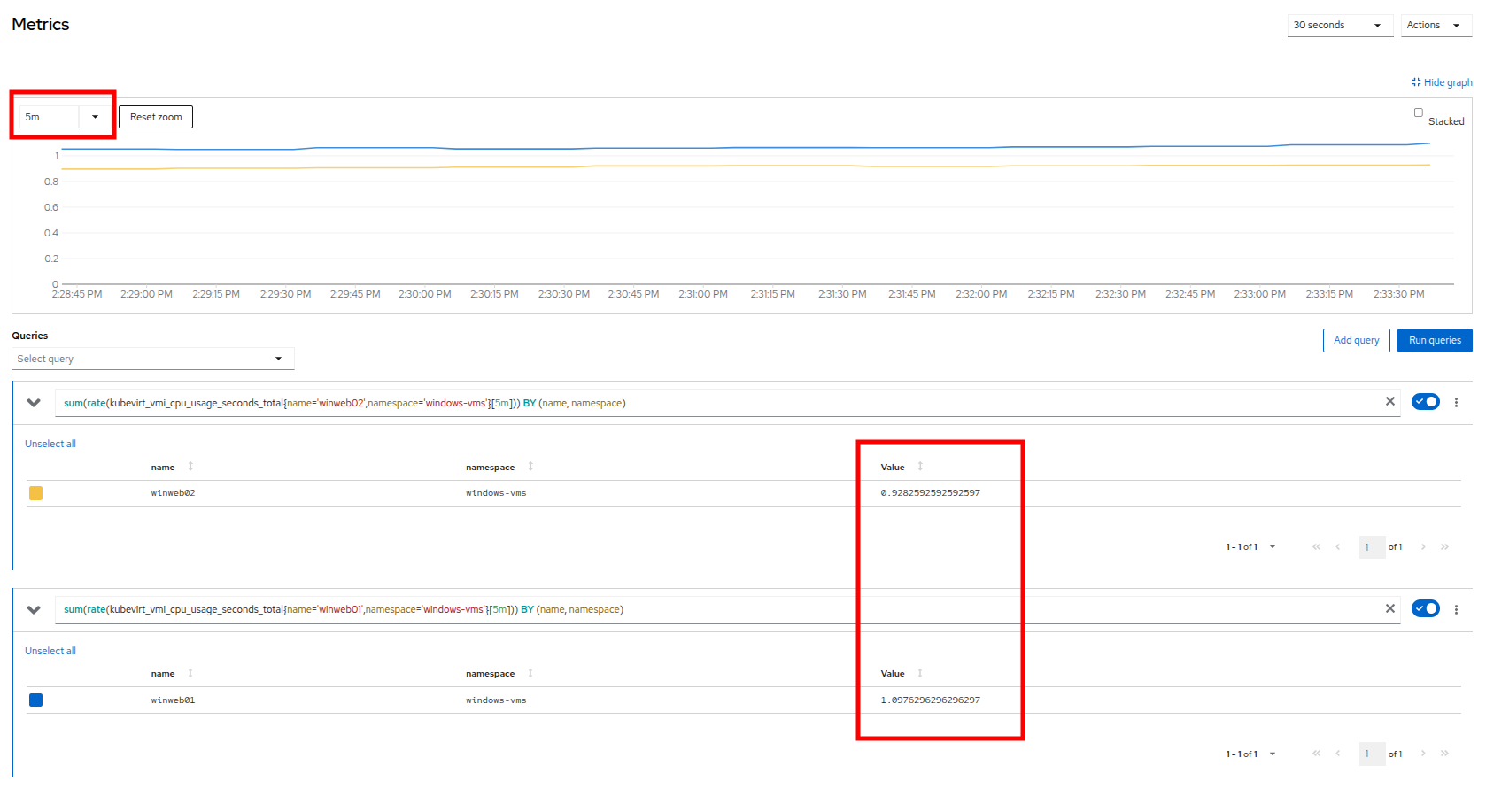

Click on the CPU graph itself to see an expanded version. You will notice that the load on the server is much higher than 1.0, the value that corresponds to 100% CPU utilization, which means that the webserver is severely overloaded at this time.
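To see where a value like 1.0 comes from, it helps to remember that the graph plots the rate of a cumulative CPU-seconds counter. The sketch below is a simplified stand-in for what a rate calculation does (Prometheus's own `rate()` also handles counter resets and extrapolation, which are left out here), and the sample values are made up for illustration. A result of 1.0 means the guest consumed one full second of CPU time per second of wall-clock time.

```python
def cpu_rate(samples):
    """Average per-second increase of a cumulative CPU-seconds counter.

    samples: list of (timestamp_seconds, cpu_seconds_total) tuples, oldest first.
    Simplification: only the first and last samples are used.
    """
    (t0, v0), (t1, v1) = samples[0], samples[-1]
    if t1 <= t0:
        raise ValueError("need at least two samples with increasing timestamps")
    return (v1 - v0) / (t1 - t0)


# Illustrative samples over a 5-minute (300 second) window.
busy = [(0, 1000.0), (300, 1300.0)]   # 300 CPU-seconds used in 300 seconds
quiet = [(0, 1000.0), (300, 1030.0)]  # 30 CPU-seconds used in 300 seconds

print(cpu_rate(busy))   # 1.0
print(cpu_rate(quiet))  # 0.1
```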

Horizontally Scaling VM Resources

-

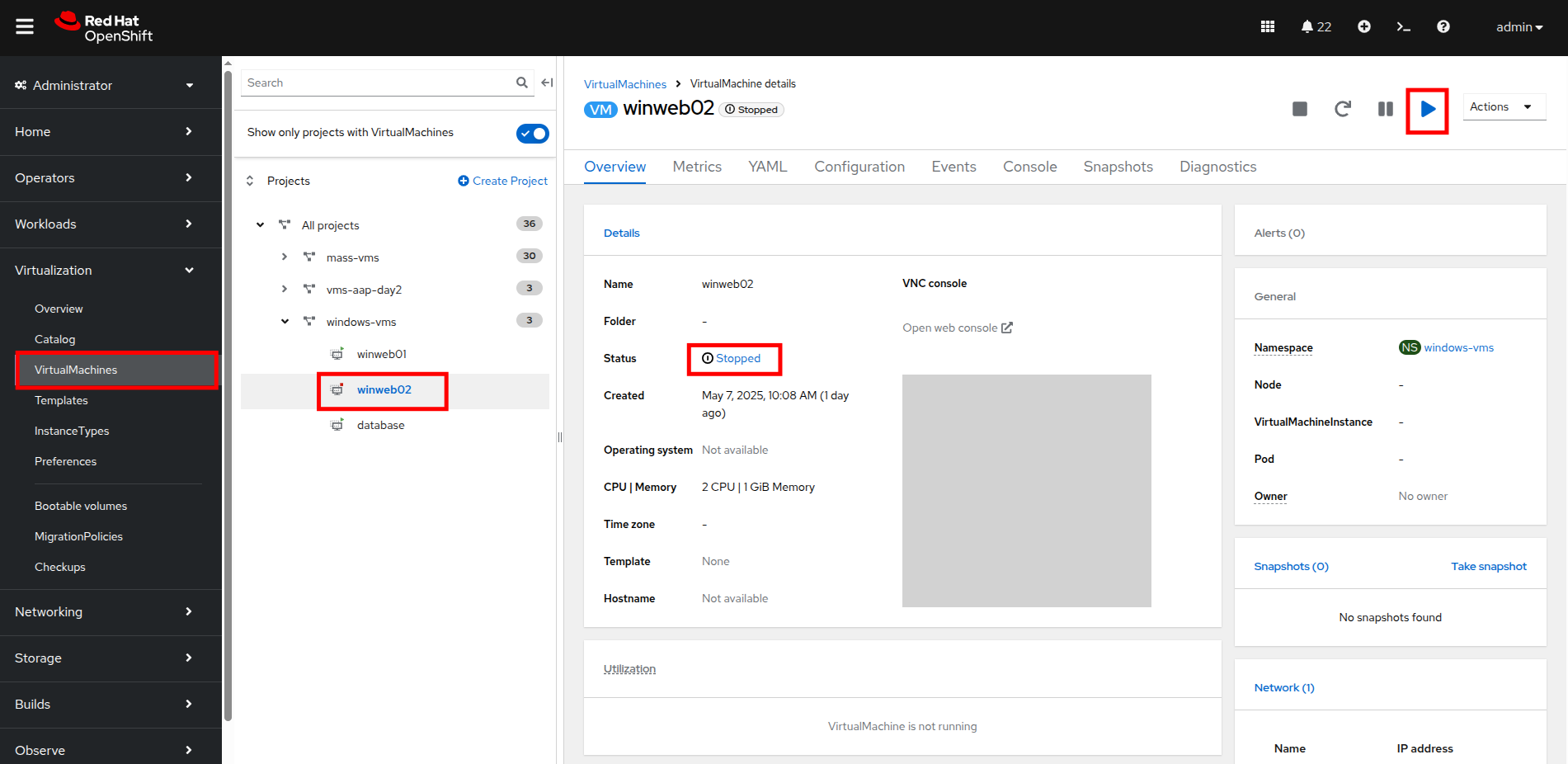

Return to the list of virtual machines by clicking on VirtualMachines in the left side navigation menu, and click on the winweb02 virtual machine. Notice the VM is still in the Stopped state. Use the Play button in the upper right corner to start the virtual machine.

-

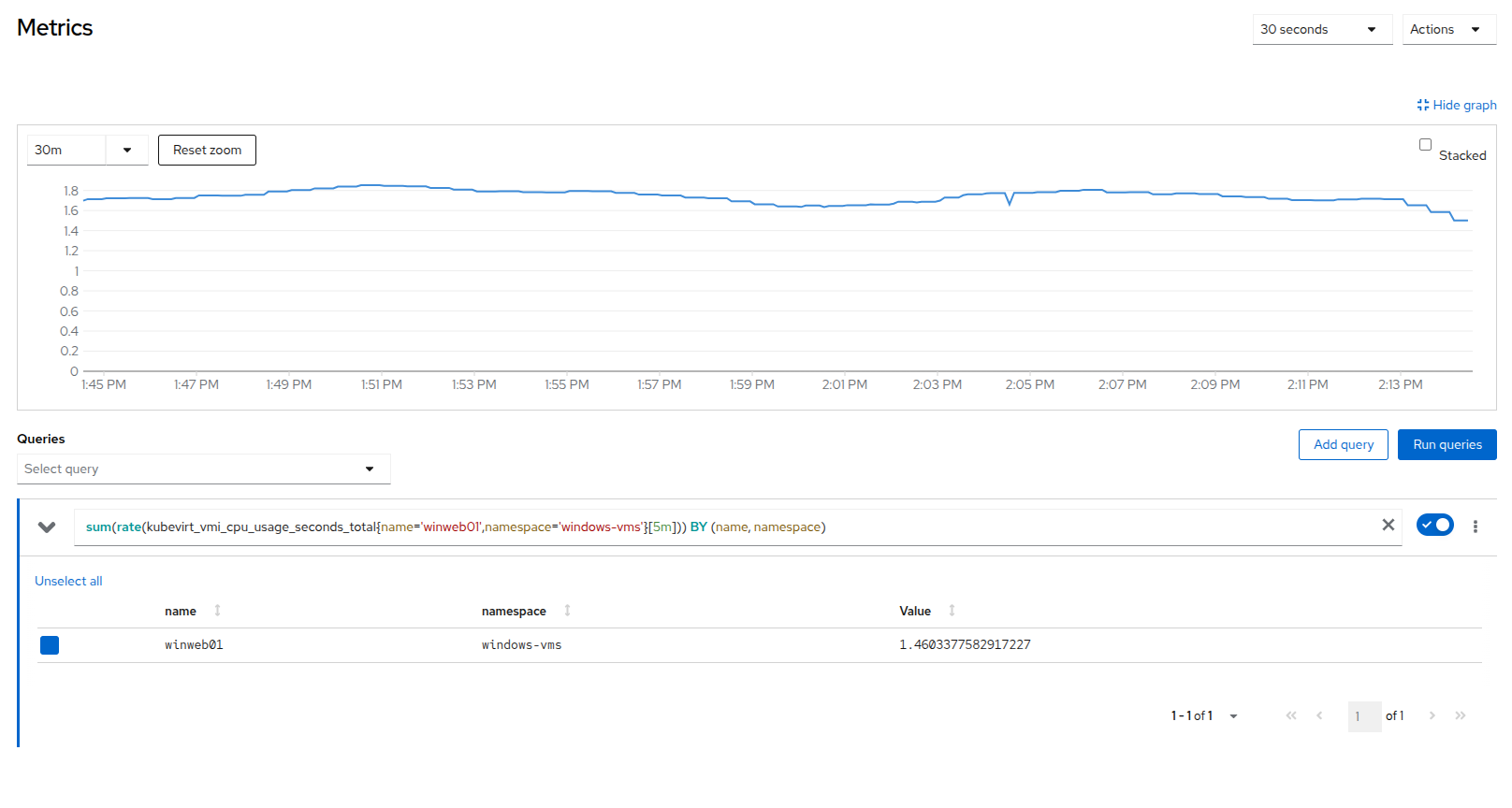

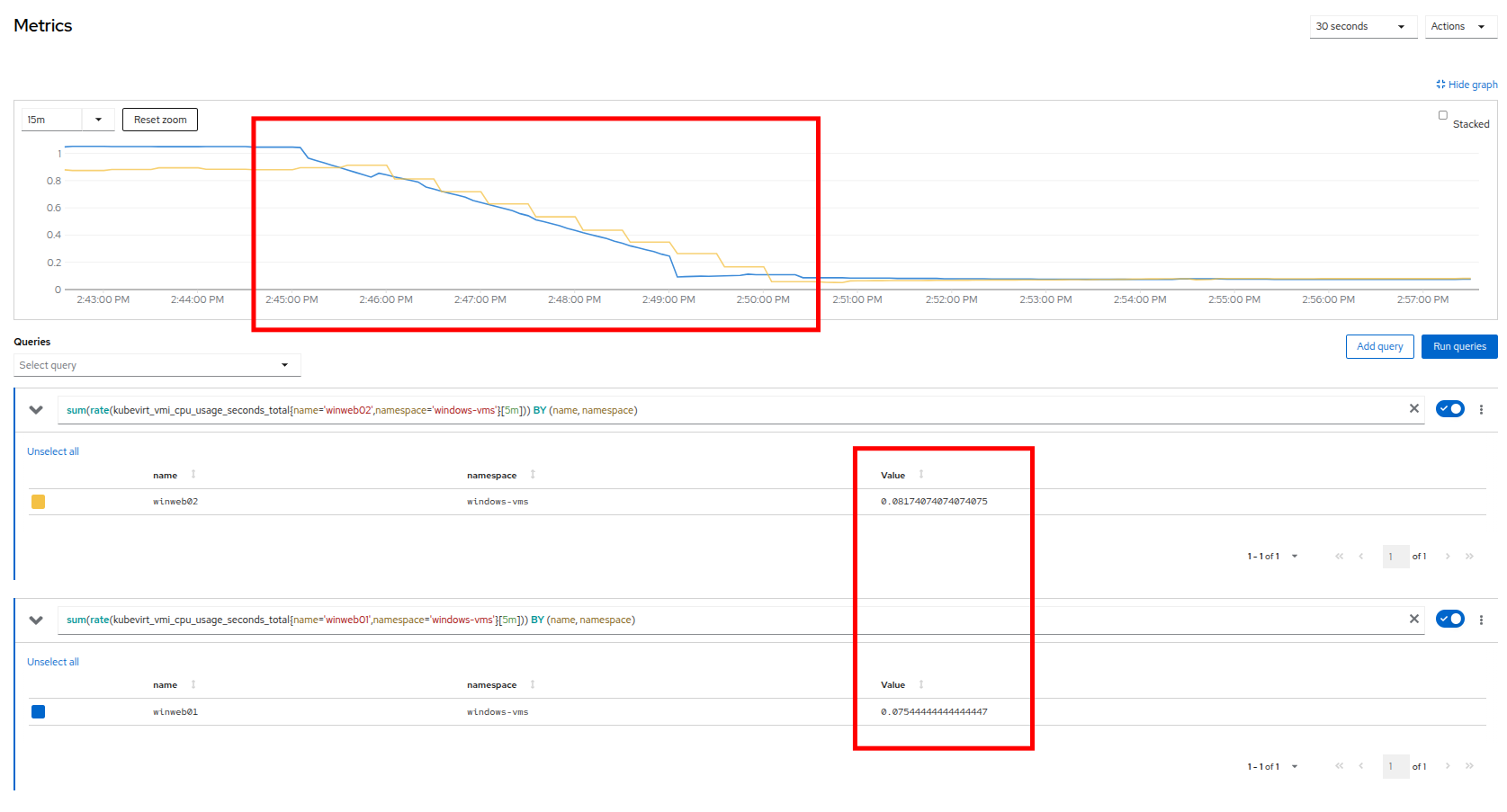

Return to the Metrics tab of the winweb01 virtual machine, and click on its CPU graph again. We should see the load begin to gradually come down.

-

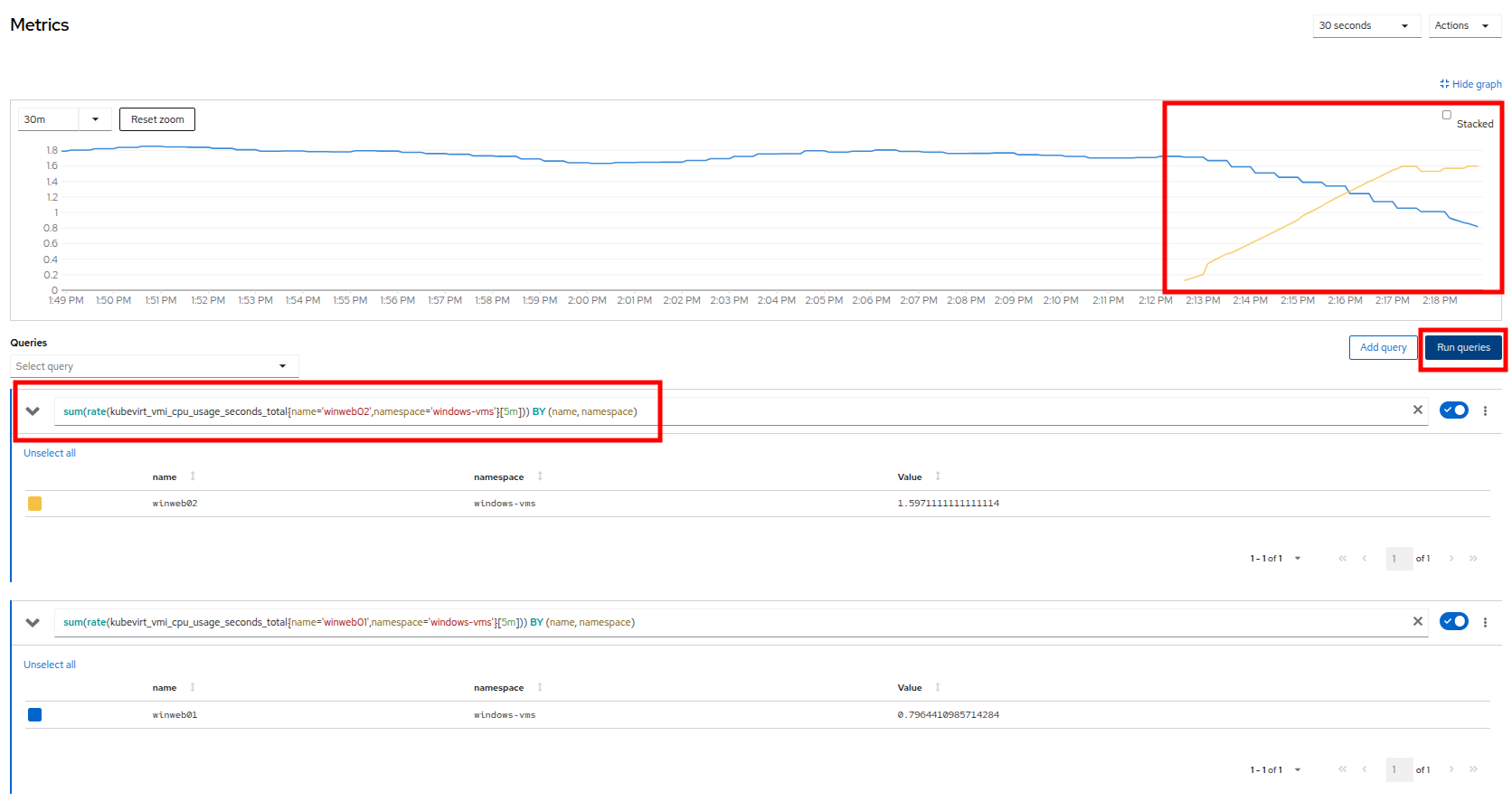

Add a query to also graph the load on winweb02 at the same time by clicking the Add query button, and pasting the following syntax:

sum(rate(kubevirt_vmi_cpu_usage_seconds_total{name='winweb02',namespace='windows-vms'}[5m])) BY (name, namespace)

-

Click the Run queries button and examine the updated graph that appears.

We can see by examining the graphs that winweb02 is now under much more load than winweb01, but over the next 5 minutes or so the load will level out and balance across the two virtual machines.
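The effect of horizontal scaling can be approximated with simple arithmetic. The sketch below assumes the load balancer spreads requests evenly and that CPU load scales linearly with request volume. Both are simplifications, and the demand figure is invented for illustration rather than measured in this lab.

```python
def per_server_load(total_demand: float, servers: int) -> float:
    """Approximate CPU load per web server when demand is split evenly."""
    if servers < 1:
        raise ValueError("at least one server is required")
    return total_demand / servers


total_demand = 1.8  # hypothetical combined CPU demand from the load generator

print(per_server_load(total_demand, 1))  # one web server carries everything
print(per_server_load(total_demand, 2))  # two web servers share the demand
```

With these illustrative numbers, a second server halves the per-server load but still leaves each VM close to saturation.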

Vertically Scaling VM Resources

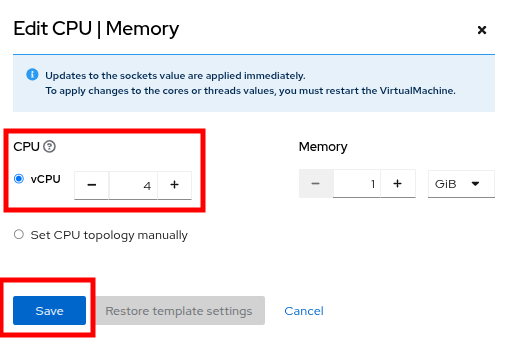

Even with the load evening out on the VMs over a 5 minute interval, we can still see that they are under fairly high load. Without the ability to scale further horizontally, the only option that remains is to scale vertically by adding CPU and memory resources to the VMs. Luckily, as we explored in the previous module, this can be done by hot-plugging these resources, without affecting the workload as it’s currently running.

-

Start by examining the graph on the Metrics page from the previous section. You can set the time range to the last 5 minutes with the dropdown in the upper left corner. Note that the load on the two virtual guests is holding steady near 1.0, which signifies that both guests are still overwhelmed.

-

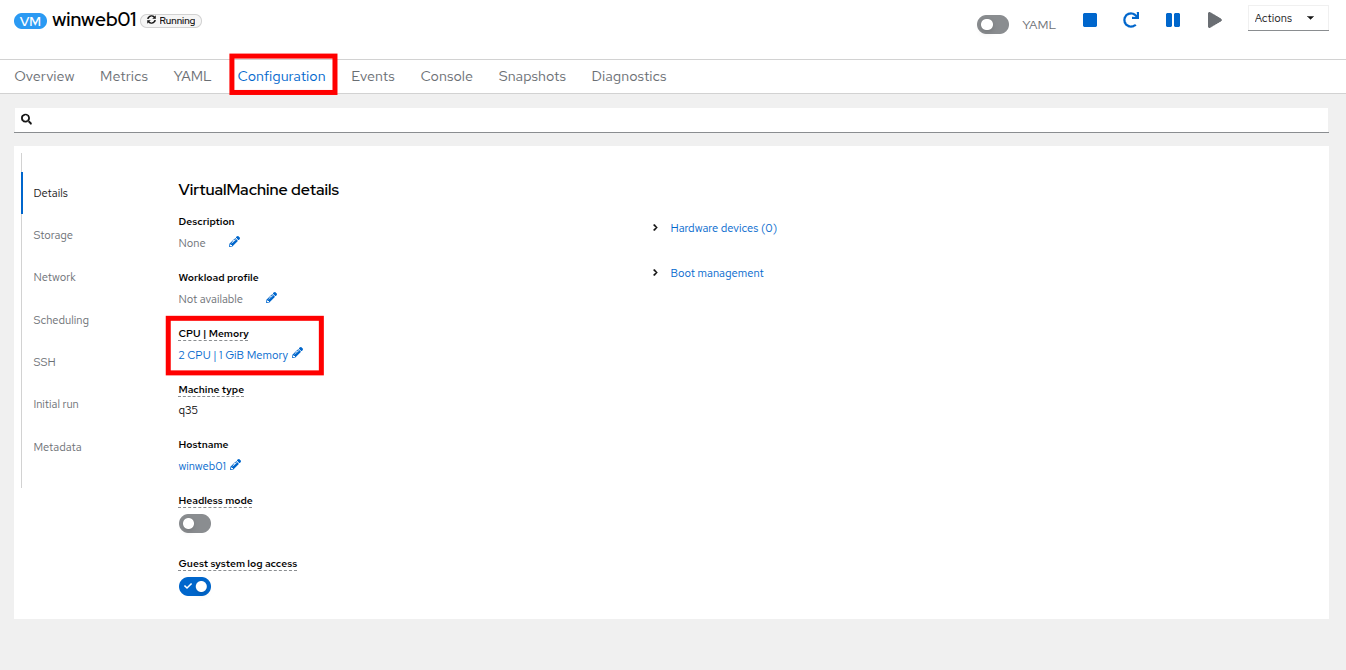

Navigate back to the virtual machine list by clicking on VirtualMachines on the left side navigation menu, and click on winweb01.

-

Click on the Configuration tab for the VM, and under VirtualMachine details find the section for CPU|Memory and click the pencil icon to edit.

-

Increase the vCPUs to 4 and click the Save button.

-

Click back on the Overview tab. You will see that the CPU | Memory section in the details has been updated to the new value, and that the CPU utilization on the guest drops quite quickly.

-

Repeat these steps for winweb02.

-

Once both VMs are upgraded, click on the windows-vms project. You will see that the CPU usage dropped dramatically.

-

Click on winweb01, then click on the Metrics tab and the CPU graph to view how the utilization graph now looks. You can also re-add the query for winweb02 and see that both graphs came down quite rapidly after the resources on each guest were increased, and that the load on each VM is much lower than before.
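The console edit performed in this section corresponds to a change in the VirtualMachine definition. As a hedged sketch, the snippet below builds a merge-patch body that sets the CPU socket count in the VM template. It assumes the vCPU count is expressed through the `sockets` field under `spec.template.spec.domain.cpu`; the console may distribute vCPUs across cores, sockets, and threads differently, so check the YAML of your own VM before relying on this shape. The function name `cpu_patch` is hypothetical, and the sketch only builds the body without sending it to a cluster.

```python
import json


def cpu_patch(sockets: int) -> dict:
    """Merge-patch body that sets the socket count in a VirtualMachine template.

    Assumption: vCPUs are scaled by changing domain.cpu.sockets.
    """
    if sockets < 1:
        raise ValueError("sockets must be at least 1")
    return {"spec": {"template": {"spec": {"domain": {"cpu": {"sockets": sockets}}}}}}


# Body matching the "increase the vCPUs to 4" step, under the assumption above.
print(json.dumps(cpu_patch(4), indent=2))
```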

Discussing Swap/Memory Overcommit

| This section of the lab is just informative for what we may do in a scenario where we find ourselves out of physical cluster resources. Please read the following information. |

Sometimes you cannot add CPU or memory resources to a specific workload because you have exhausted all of your physical resources. By default, OpenShift has an overcommit ratio of 10:1 for CPU; memory in a Kubernetes environment, however, is often a finite resource.
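A short calculation shows what a 10:1 CPU overcommit ratio means in practice. This is a rough sketch that assumes each vCPU is accounted for as one tenth of a physical CPU and ignores CPU reserved for the system and other pods. The node size and VM size are hypothetical.

```python
def schedulable_vcpus(physical_cpus: int, ratio: int = 10) -> int:
    """Upper bound on vCPUs that fit on a node at the given overcommit ratio."""
    return physical_cpus * ratio


def cpu_request_millicores(vcpus: int, ratio: int = 10) -> int:
    """CPU request, in millicores, implied by a VM's vCPU count at that ratio."""
    return vcpus * 1000 // ratio


print(schedulable_vcpus(16))      # 160 vCPUs on a hypothetical 16-CPU node
print(cpu_request_millicores(4))  # 400 millicores for a 4-vCPU VM
```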

When a normal Kubernetes cluster encounters an out of memory scenario due to high workload resource utilization, it begins to kill pods indiscriminately. In a container-based application environment, this is usually mitigated by having multiple replicas of an application behind a load balancer service. The application stays available, served by other replicas, and the killed pod is reassigned to a node with free resources.

This doesn’t work that well for virtual machine workloads which in most cases are not composed of many replicas, and need to be persistently available.

If you have exhausted the physical resources in your cluster the traditional option is to scale the cluster, but many times this is much easier said than done. If you don’t have a spare physical node on standby to scale, and have to order new hardware, you can often be delayed by procurement procedures or supply chain disruptions.

One workaround is to temporarily enable SWAP/Memory Overcommit on your nodes to buy time until the new hardware arrives. This allows the worker nodes to swap, using hard disk space to hold application memory. While writing to hard disk is much slower than writing to system memory, it does preserve workloads until additional resources arrive.

Scaling a Cluster By Adding a Node

The primary recourse when you have run out of physical resources on the cluster is to scale the cluster by adding worker nodes. This allows workloads that are failing or cannot be scheduled to be assigned successfully. This section of the lab is dedicated to just this idea: we will overload our cluster, and then add a new node to allow all VMs to run successfully.

| In this lab environment we are not actually adding an additional physical node. We are simulating the behavior by having a node on standby which is tainted to not allow VM workloads. At the appropriate time we will remove this taint, thus simulating the addition of a new node to our cluster. |

-

In the left side navigation menu, click on Virtualization and then VirtualMachines.

-

Ensure that all VMs in vms-aap-day2 and windows-vms projects are powered on.

-

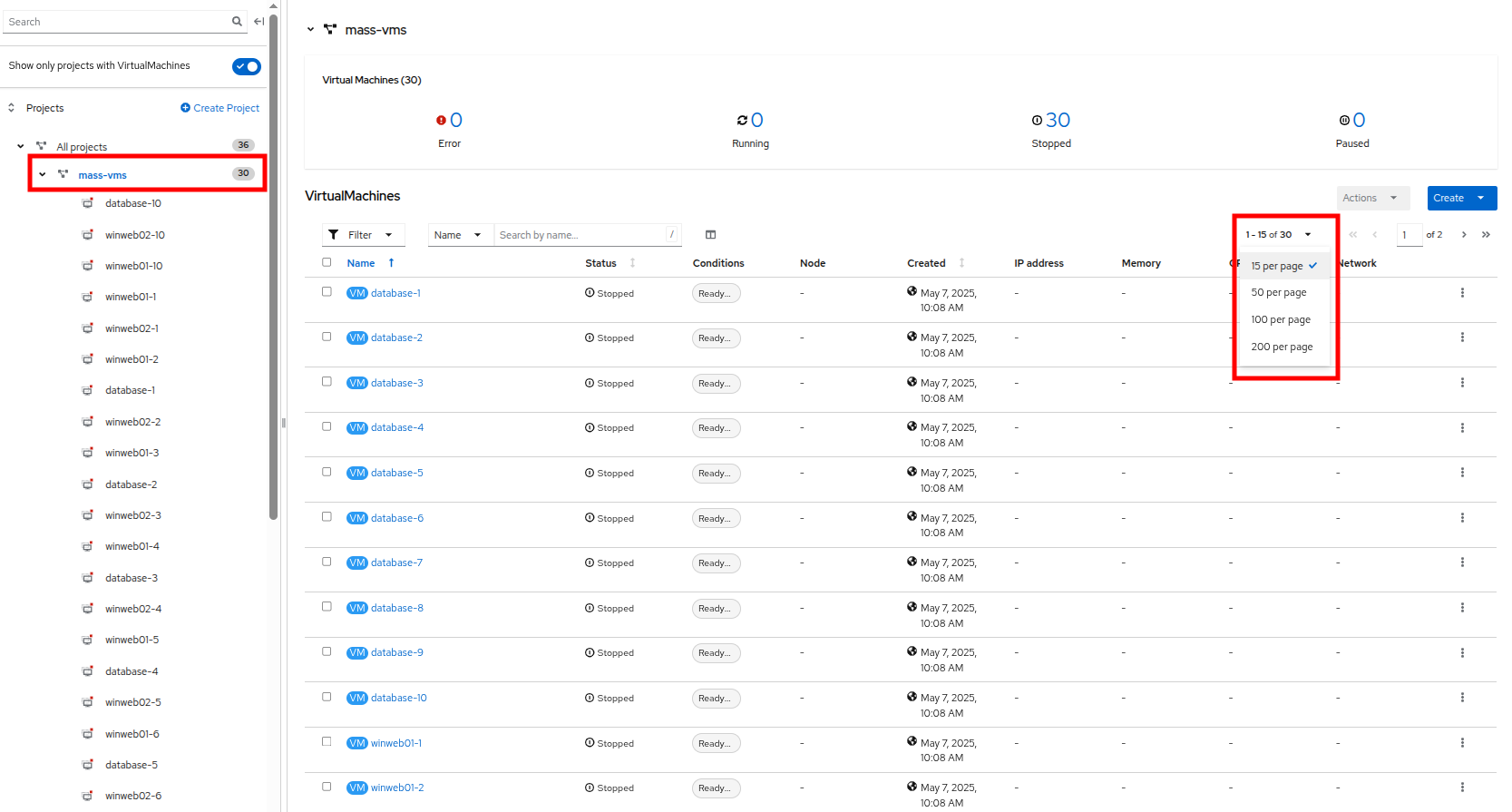

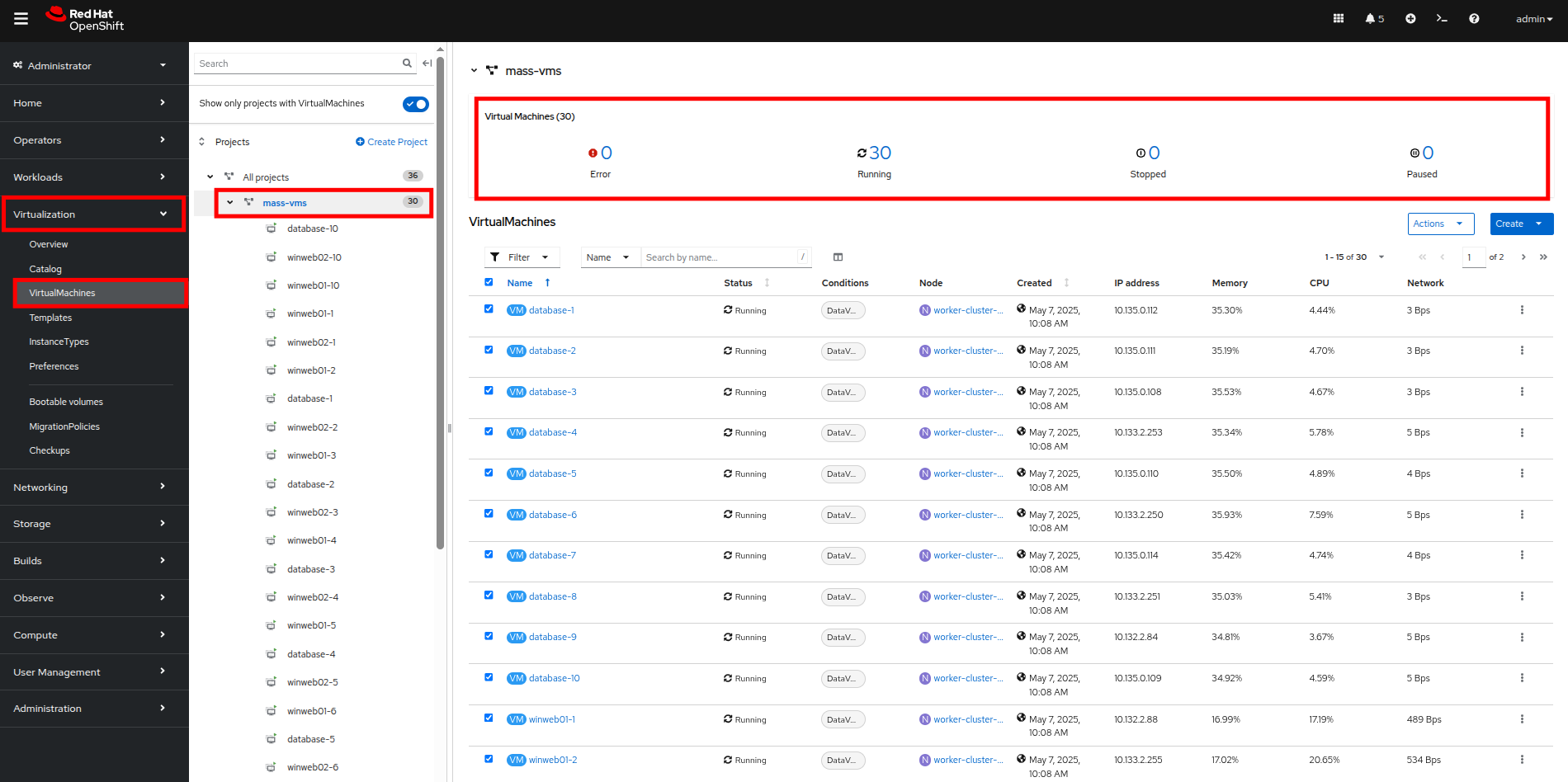

Click on the mass-vm project to list the virtual machines there. Click on 1 - 15 of 30 drop down and change it to 50 per page to display all of the VMs.

-

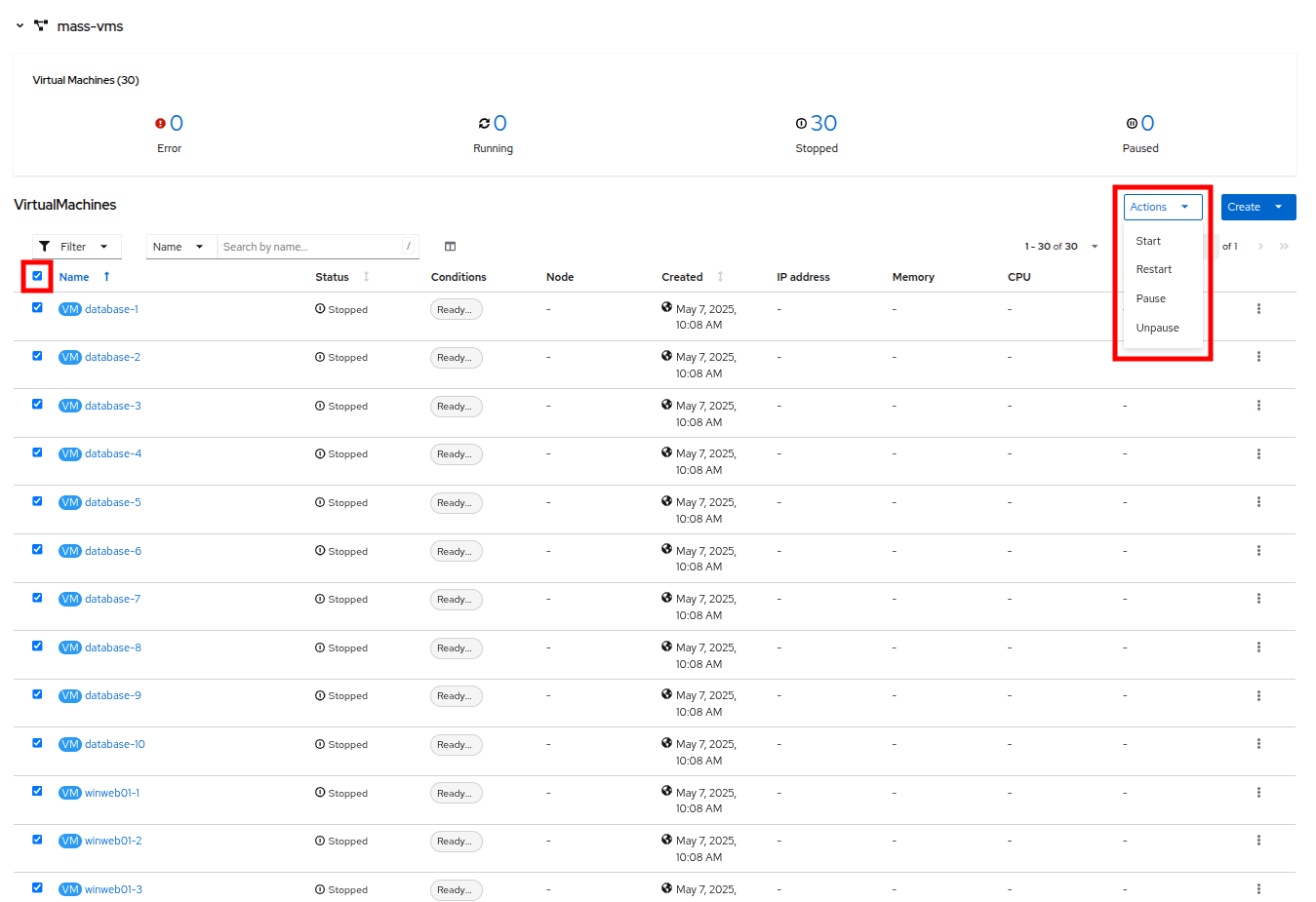

Click on the Check box under the Filter dropdown to select all VMs in the project. Click on the Actions button and select Start from the dropdown menu.

-

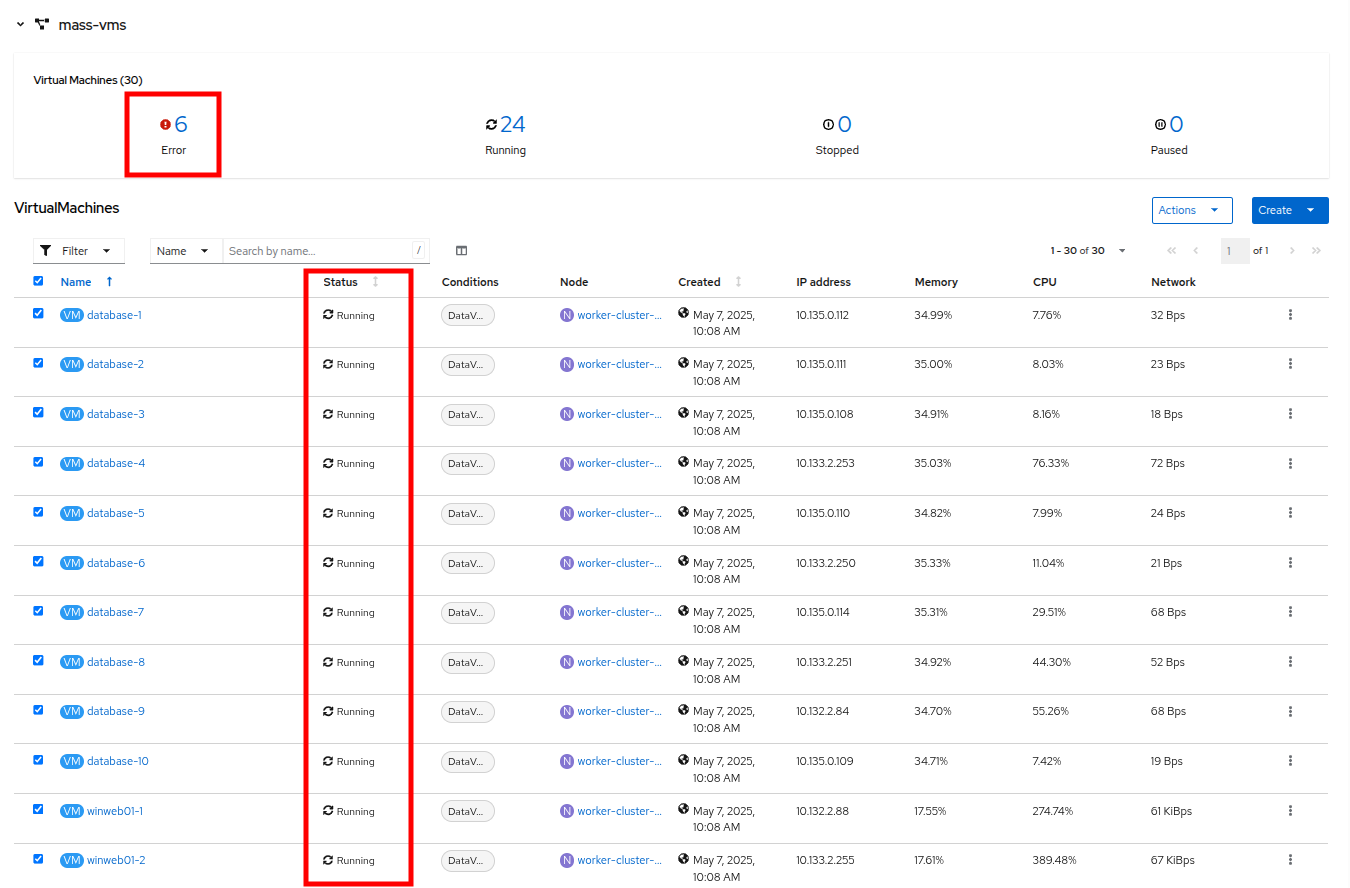

Once all of the VMs attempt to power on, approximately 6-7 VMs should be in an error state.

-

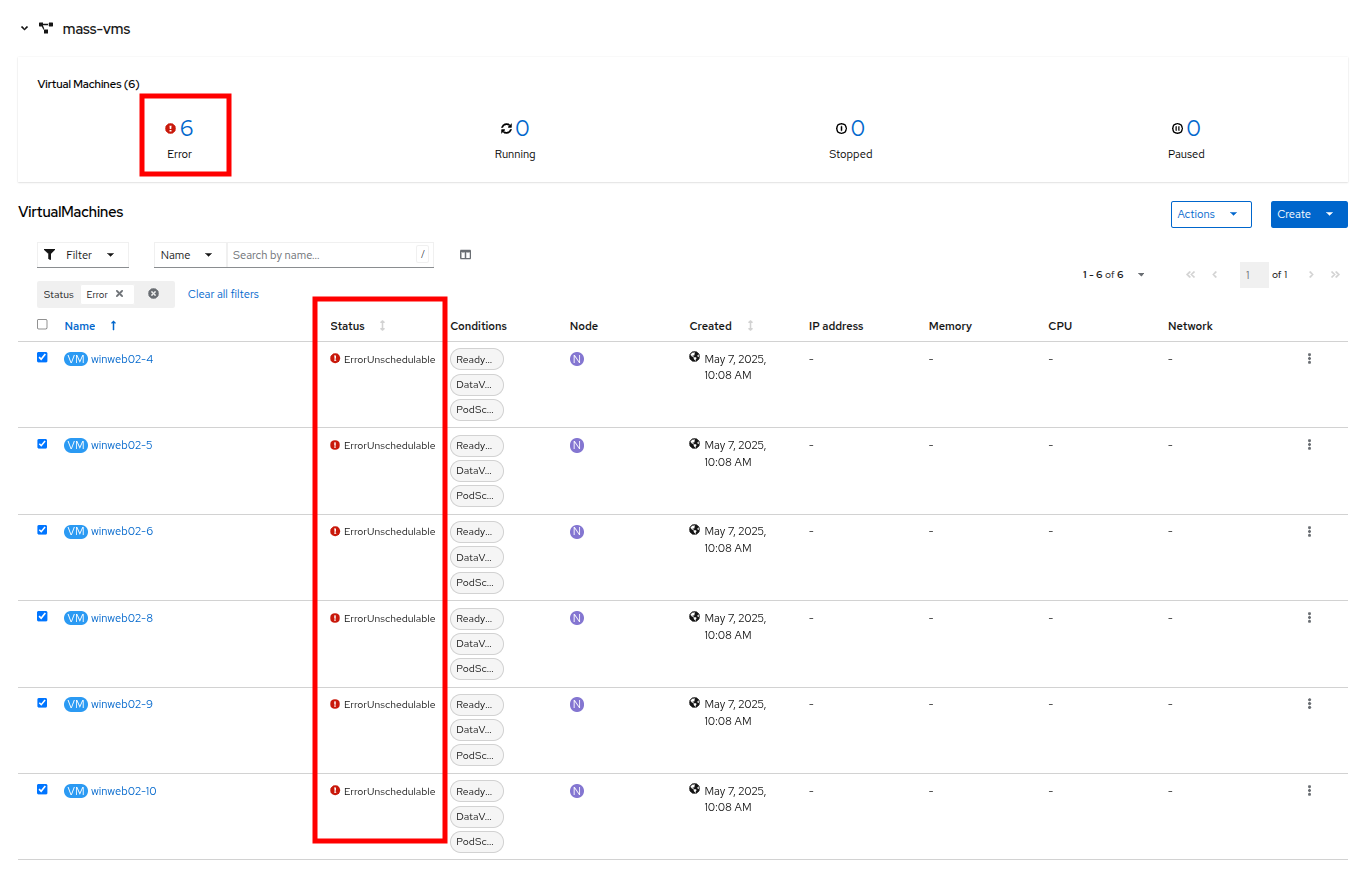

Click on the number of errors to see an explanation for the error state.

-

Each of these VMs will show ErrorUnschedulable in the status column, because the cluster is out of resources to schedule them.

-

In the left side navigation menu, click on Compute then click on Nodes. See that three of the worker nodes (nodes 2-4) have a large number of assigned pods, and a large amount of used memory, while worker node 1 is using much less by comparison.

In an OpenShift environment, the memory available is calculated based on the memory requests submitted by each pod. In this way, the memory a pod needs is guaranteed, even if the pod is not using that amount at the time. This is why each of these worker nodes is considered "full" even though it shows only about 75% utilization when we look.

-

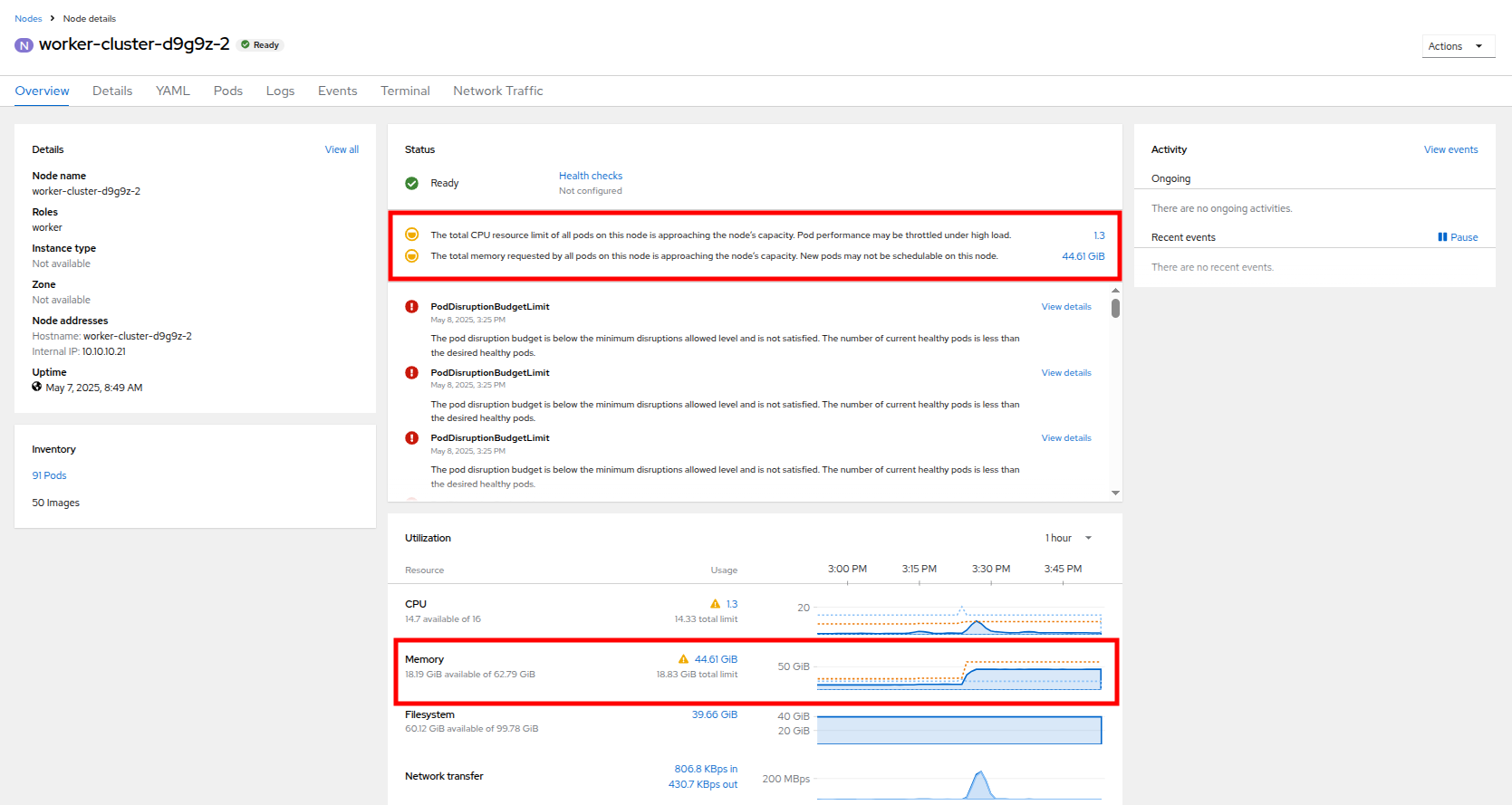

Click on worker node 2, and you will be taken to the Node details page. Notice there are warnings about limited resources available on the node. You can also see the graph of memory utilization for the node, which shows the used memory in blue and the requested amount as an orange dashed line.
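The gap between used and requested memory is what makes a node look only partly busy while still refusing new VMs. The sketch below models that request-based check in simplified form. The real scheduler considers many more factors, and the node name and all figures here are hypothetical, chosen only to mirror the roughly 75% utilization seen in this lab.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_gib: float  # memory the node can hand out to pods
    requested_gib: float    # sum of memory requests of pods already assigned
    used_gib: float         # memory actually in use right now


def fits(node: Node, request_gib: float) -> bool:
    """A pod fits only if its request fits in the memory not yet requested."""
    return node.requested_gib + request_gib <= node.allocatable_gib


node = Node("worker-2", allocatable_gib=64.0, requested_gib=62.0, used_gib=48.0)

print(node.used_gib / node.allocatable_gib)  # 0.75, the node looks 75% utilized
print(fits(node, 4.0))                       # False, requests leave only 2 GiB
```

Actual usage never enters the `fits` check, which is why the blue line on the graph can sit well below the orange dashed line while new VMs remain unschedulable.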

-

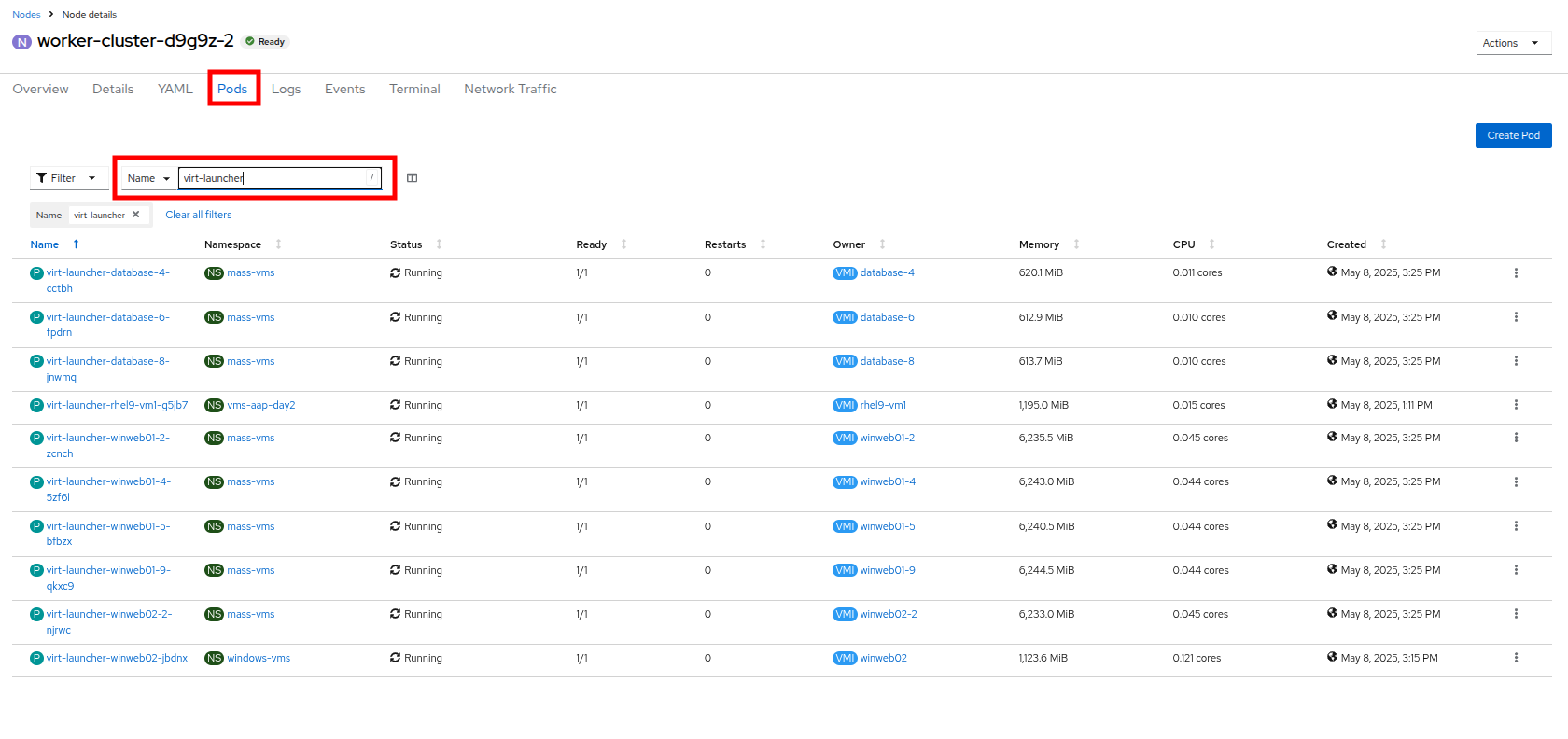

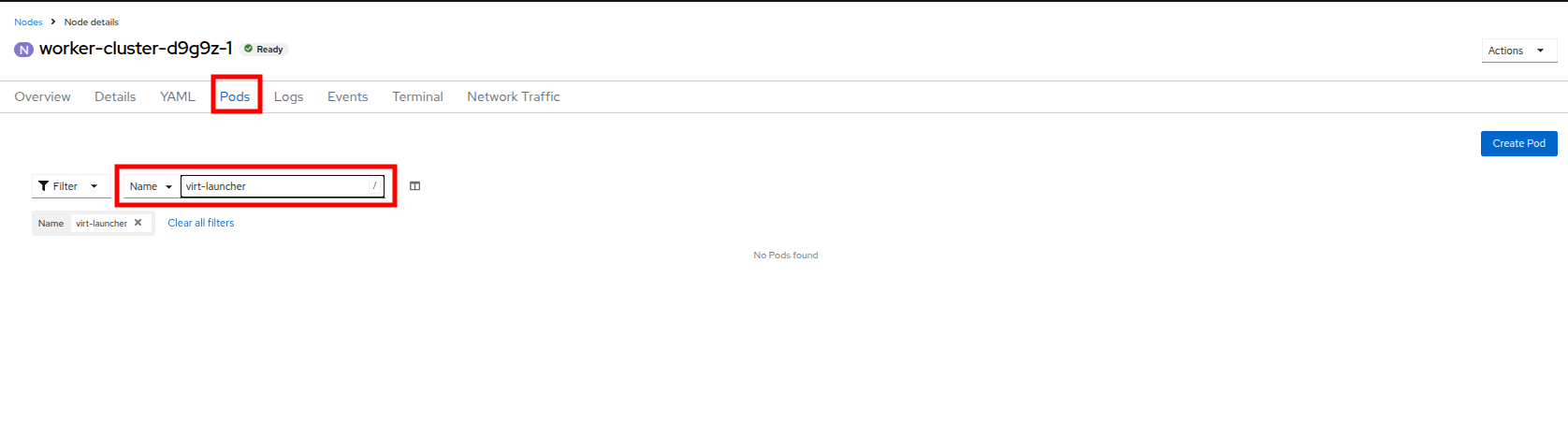

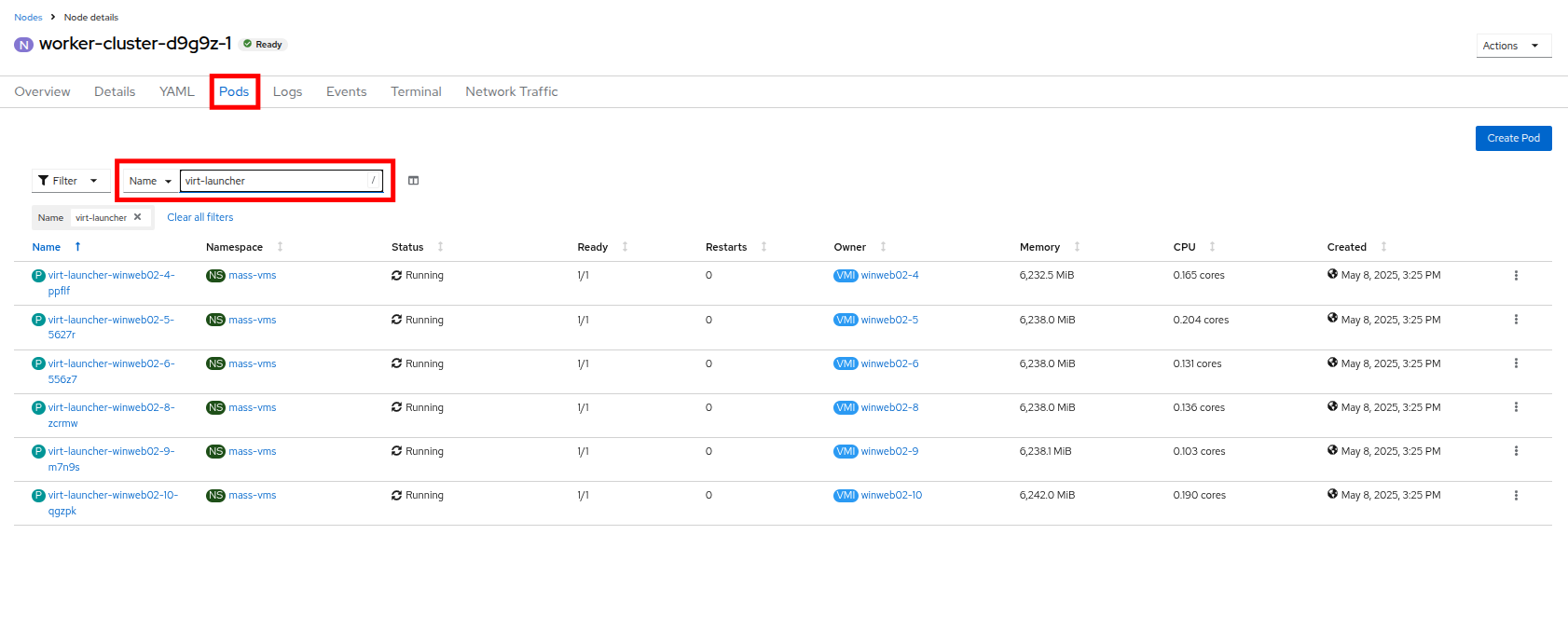

Click on the Pods tab at the top, and in the search bar, type virt-launcher to search for VMs on the node.

-

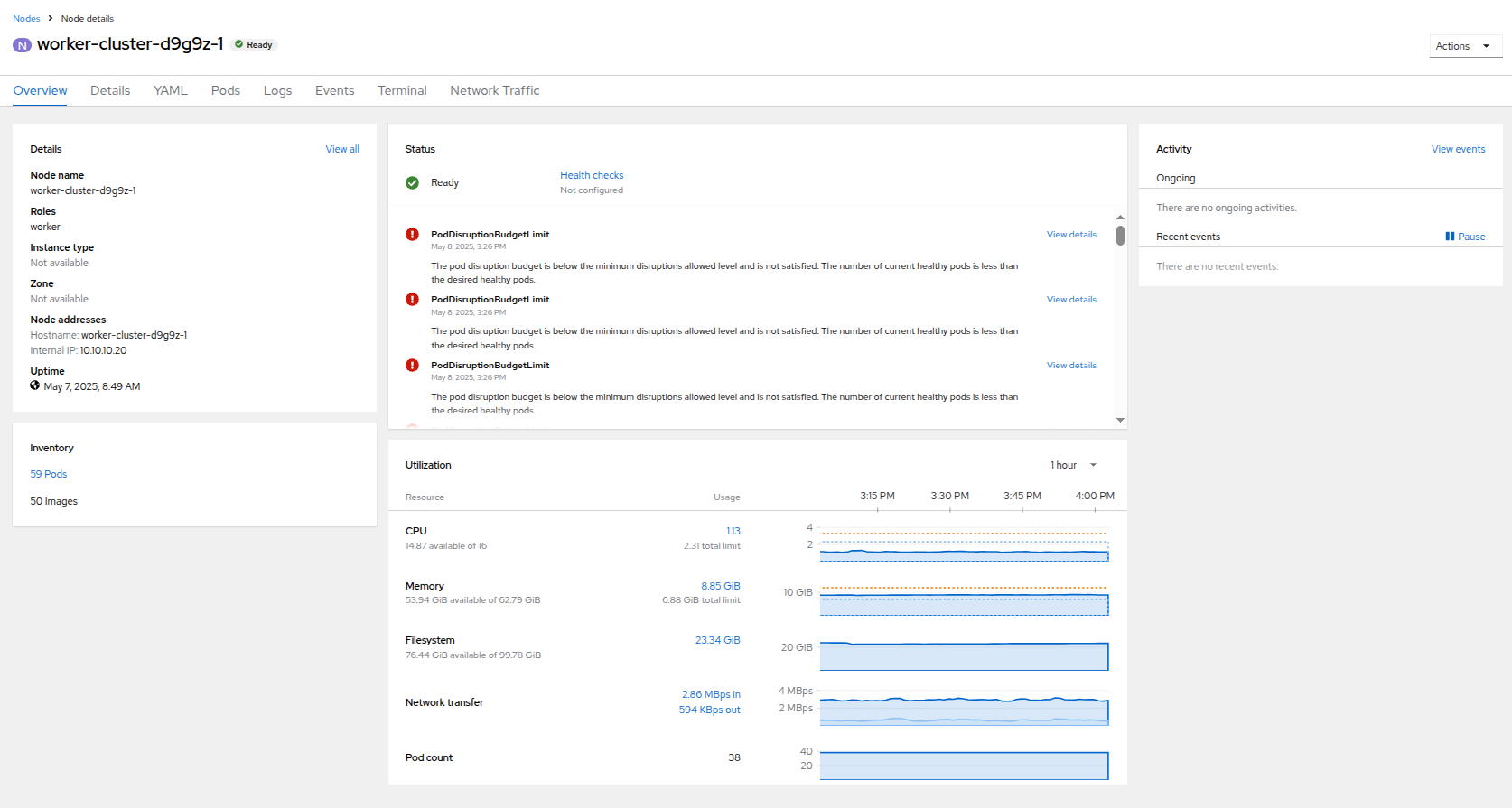

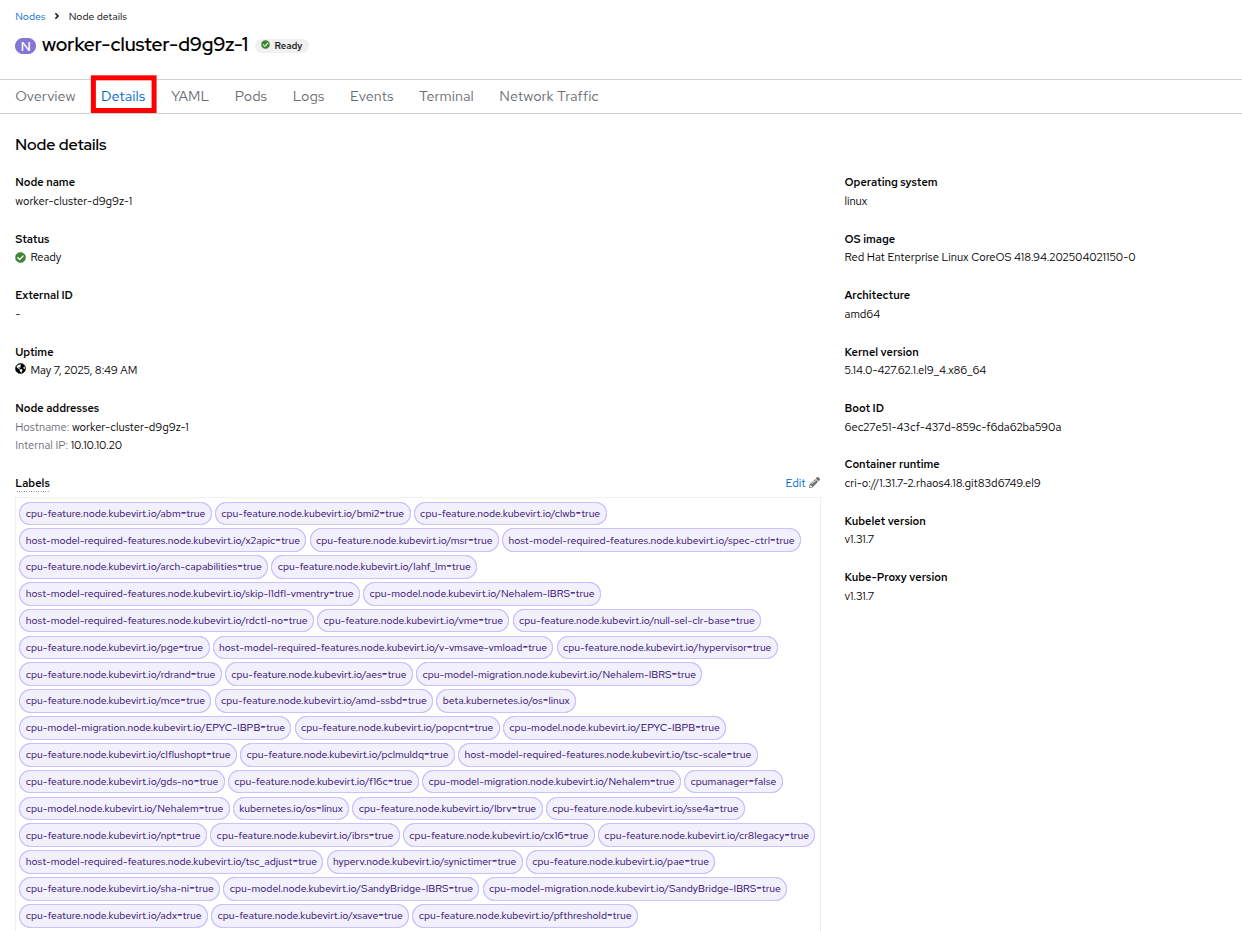

Now, click on Nodes in the left-side navigation menu, and then click on worker node 1, which will bring you to its Node details page. Notice there are no CPU or Memory warnings currently on the node.

-

Click on the Pods tab at the top, and in the search bar, type virt-launcher to search for VMs on the node. Notice that there are currently none.

-

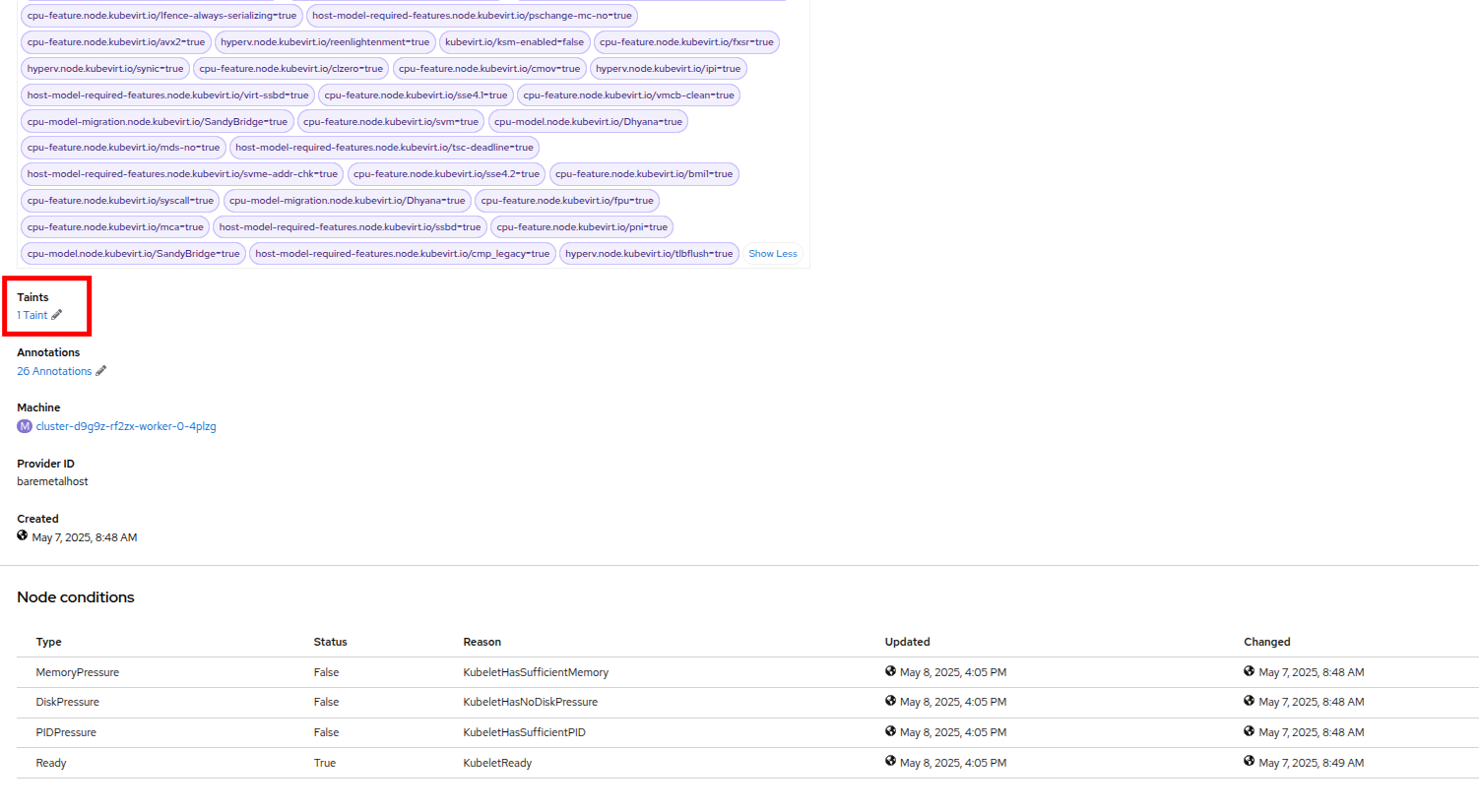

Click on the Details tab, and scroll down until you see the Taints section, where there is one taint defined.

-

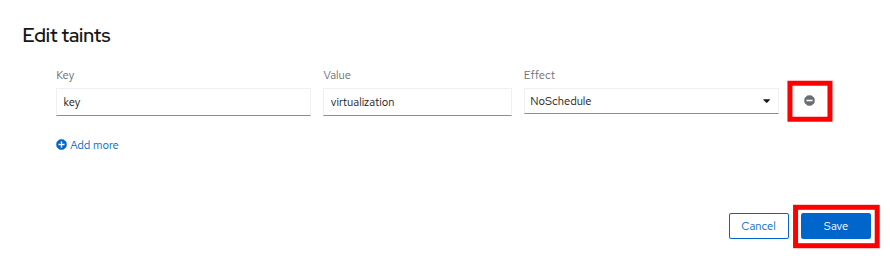

Click on the pencil icon to bring up a box to edit the current Taint on the node. When the box appears, click on the - next to the taint definition to remove it and click the Save button.

-

Once the taint is removed, scroll back to the top, click on the Pods tab again, and type virt-launcher into the search bar once more. You will see that the unschedulable VMs are now being assigned to this node.

-

Return to the list of VMs in the mass-vms project by clicking on Virtualization and then clicking on VirtualMachines in the left side navigation menu to see all of the VMs now running.
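The taint behavior used in this section can be modeled in a few lines. The sketch below is a simplified version of taint and toleration matching, not the scheduler's full logic, and the taint key `example.com/no-vms` is hypothetical since the lab does not name the actual taint. It shows why the VM pods could not land on the standby node until its taint list was emptied.

```python
def is_tolerated(taint: dict, tolerations: list) -> bool:
    """Simplified check: does any toleration match this taint?"""
    for tol in tolerations:
        key_ok = tol.get("key") == taint["key"]
        effect_ok = tol.get("effect") in (None, taint["effect"])
        if tol.get("operator", "Equal") == "Exists":
            value_ok = True
        else:
            value_ok = tol.get("value") == taint.get("value")
        if key_ok and effect_ok and value_ok:
            return True
    return False


def can_schedule(node_taints: list, tolerations: list) -> bool:
    """A pod can be placed only if every scheduling-blocking taint is tolerated."""
    blocking = [t for t in node_taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(is_tolerated(t, tolerations) for t in blocking)


def remove_taint(node_taints: list, key: str) -> list:
    """Return the taint list without the taint that has the given key."""
    return [t for t in node_taints if t.get("key") != key]


# Hypothetical taint standing in for the one defined on worker node 1.
taints = [{"key": "example.com/no-vms", "value": "true", "effect": "NoSchedule"}]

print(can_schedule(taints, []))                                      # False
print(can_schedule(remove_taint(taints, "example.com/no-vms"), []))  # True
```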

Summary

In this module you have worked as an OpenShift Virtualization administrator dealing with a high-load scenario, which you were able to remediate by horizontally and vertically scaling virtual machine resources. You were also able to solve an issue where you had run low on physical cluster resources and were unable to provision new virtual machines, by scaling up your cluster to provide additional resources.