Introduction

Hosted Control Planes

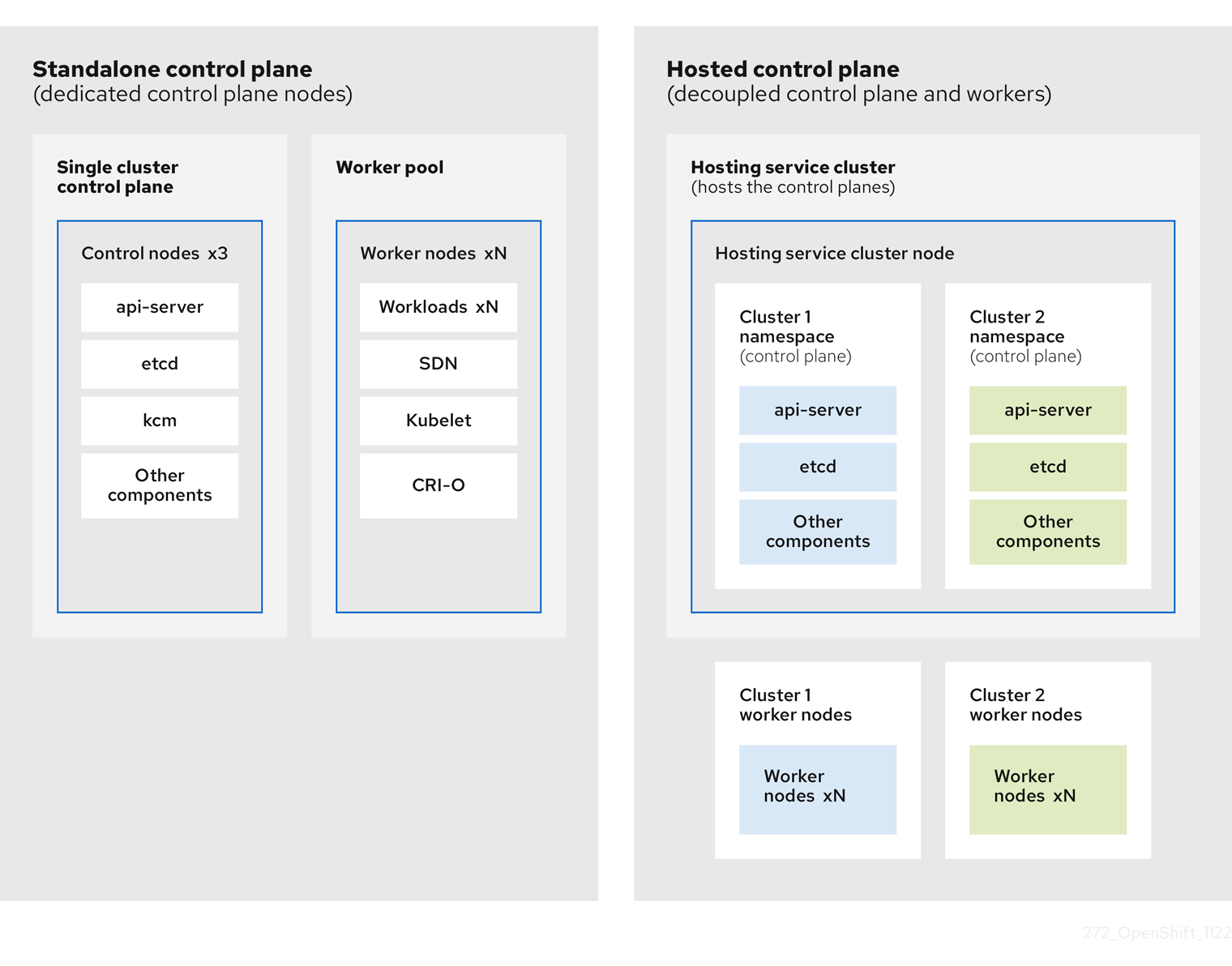

With multicluster engine operator cluster management, you can deploy OpenShift Container Platform clusters by using one of two control plane configurations: standalone or hosted control planes.

- The standalone configuration uses dedicated virtual machines or physical machines to host the OpenShift Container Platform control plane.
- With hosted control planes for OpenShift Container Platform, you create control planes as pods on a hosting cluster without the need for dedicated physical machines for each control plane.

Hosted control planes for OpenShift Container Platform are available on bare metal and Red Hat OpenShift Virtualization, and as a Technology Preview feature on Amazon Web Services (AWS). You can host the control planes on OpenShift Container Platform version 4.14. The hosted control planes feature is enabled by default.

The following table indicates which versions are supported for each platform:

| Platform | Hosting HCP version | Minimum hosted OCP version |
|---|---|---|
| Bare metal | 4.14 | 4.14 |
| Red Hat OpenShift Virtualization | 4.14 | 4.14 |
| AWS (Technology Preview) | 4.11 - 4.14 | 4.14 |
| IBM Z (Technology Preview) | 4.14 | 4.14 |
| IBM Power (Technology Preview) | 4.14 | 4.14 |
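The support table can also be read as a lookup. The following is a minimal sketch, not an official tool: the `SUPPORT_MATRIX` dictionary and the `is_supported` helper are hypothetical names, and the values are transcribed from the table as written. It assumes the hosted-version column is a lower bound, as the "Minimum" header suggests.

```python
# Support matrix transcribed from the table:
# platform -> ((lowest hosting version, highest hosting version), minimum hosted version)
SUPPORT_MATRIX = {
    "Bare metal": (("4.14", "4.14"), "4.14"),
    "Red Hat OpenShift Virtualization": (("4.14", "4.14"), "4.14"),
    "AWS (Technology Preview)": (("4.11", "4.14"), "4.14"),
    "IBM Z (Technology Preview)": (("4.14", "4.14"), "4.14"),
    "IBM Power (Technology Preview)": (("4.14", "4.14"), "4.14"),
}


def parse_version(text):
    """Turn "4.14" (or "4.14.3") into (4, 14) so versions compare numerically."""
    return tuple(int(part) for part in text.split(".")[:2])


def is_supported(platform, hosting_version, hosted_version):
    """Return True if the version pair falls inside the table row for the platform."""
    if platform not in SUPPORT_MATRIX:
        return False
    (low, high), min_hosted = SUPPORT_MATRIX[platform]
    hosting_ok = parse_version(low) <= parse_version(hosting_version) <= parse_version(high)
    # Assumption: the hosted-version column is treated as a lower bound.
    hosted_ok = parse_version(hosted_version) >= parse_version(min_hosted)
    return hosting_ok and hosted_ok
```

Versions are compared as integer tuples rather than strings, because a plain string comparison would rank "4.9" above "4.14".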

The control plane of the hosted cluster runs as pods in a single namespace on the hosting cluster. When OpenShift Container Platform creates this type of hosted cluster, it creates worker nodes that are independent of the control plane.

Hosted control plane clusters offer several advantages:

- Saves cost by removing the need to host dedicated control plane nodes
- Introduces separation between the control plane and the workloads, which improves isolation and reduces configuration errors that can require changes
- Decreases the cluster creation time by removing the requirement for control plane node bootstrapping
- Supports turn-key deployments or fully customized OpenShift Container Platform provisioning

Red Hat Advanced Cluster Management for Kubernetes

Red Hat® Advanced Cluster Management for Kubernetes controls clusters and applications from a single console, with built-in security policies.

Extend the value of Red Hat OpenShift by deploying apps, managing multiple clusters, and enforcing policies across multiple clusters at scale. Red Hat’s solution ensures compliance, monitors usage, and maintains consistency.

OpenShift Virtualization

OpenShift Virtualization is an add-on to OpenShift Container Platform that allows you to run and manage virtual machine workloads alongside container workloads.

OpenShift Virtualization adds new objects into your OpenShift Container Platform cluster by using Kubernetes custom resources to enable virtualization tasks. These tasks include:

- Creating and managing Linux and Windows virtual machines (VMs)
- Running pod and VM workloads alongside each other in a cluster
- Connecting to virtual machines through a variety of consoles and CLI tools
- Importing and cloning existing virtual machines
- Managing network interface controllers and storage disks attached to virtual machines
- Live migrating virtual machines between nodes

An enhanced web console provides a graphical portal to manage these virtualized resources alongside the OpenShift Container Platform cluster containers and infrastructure.

OpenShift Virtualization is designed and tested to work well with Red Hat OpenShift Data Foundation features.

HCP Prerequisites for OpenShift Virtualization

- The OpenShift Container Platform managed cluster must have wildcard DNS routes enabled.
- The OpenShift Container Platform managed cluster must have OpenShift Virtualization, version 4.14 or later, installed on it.
- The OpenShift Container Platform managed cluster must be configured with OVNKubernetes as the default pod network CNI.
- The OpenShift Container Platform managed cluster must have a default storage class.
- You need a valid pull secret file for the `quay.io/openshift-release-dev` repository.
- Before you can provision your cluster, you need to configure a load balancer, such as MetalLB.
- For optimal network performance, use a network maximum transmission unit (MTU) of 9000 or greater on the OpenShift Container Platform cluster that hosts the OpenShift Virtualization virtual machines.
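The prerequisites above can be treated as a checklist. The sketch below is illustrative only: the `ClusterFacts` record and its field names are hypothetical, and it assumes you have already gathered the facts about the cluster by some other means. It does not query a cluster. The MTU item is kept separate because the text presents it as a performance recommendation rather than a hard requirement.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClusterFacts:
    """Hypothetical record of facts gathered about the managed cluster."""
    wildcard_dns_routes: bool
    virtualization_version: Optional[str]  # for example "4.14"; None if not installed
    default_cni: str
    has_default_storage_class: bool
    has_pull_secret: bool
    has_load_balancer: bool
    mtu: int


def _major_minor(version: str):
    """Turn "4.14" into (4, 14) so versions compare numerically."""
    return tuple(int(part) for part in version.split(".")[:2])


def unmet_prerequisites(facts: ClusterFacts) -> List[str]:
    """Return a description of every hard prerequisite that is not met."""
    problems = []
    if not facts.wildcard_dns_routes:
        problems.append("wildcard DNS routes are not enabled")
    if facts.virtualization_version is None:
        problems.append("OpenShift Virtualization is not installed")
    elif _major_minor(facts.virtualization_version) < (4, 14):
        problems.append("OpenShift Virtualization is older than 4.14")
    if facts.default_cni != "OVNKubernetes":
        problems.append("default pod network CNI is not OVNKubernetes")
    if not facts.has_default_storage_class:
        problems.append("no default storage class")
    if not facts.has_pull_secret:
        problems.append("no pull secret for quay.io/openshift-release-dev")
    if not facts.has_load_balancer:
        problems.append("no load balancer (for example, MetalLB) configured")
    return problems


def mtu_recommendation_met(facts: ClusterFacts) -> bool:
    """The text recommends an MTU of 9000 or greater for optimal performance."""
    return facts.mtu >= 9000
```

An empty list from `unmet_prerequisites` means every hard prerequisite in the list is satisfied for the facts supplied.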

MetalLB

As a cluster administrator, you can add the MetalLB Operator to your cluster so that when a service of type LoadBalancer is added to the cluster, MetalLB can add an external IP address for the service. The external IP address is added to the host network for your cluster.

- MetalLB operating in `layer2` mode provides support for failover by utilizing a mechanism similar to IP failover. However, instead of relying on the virtual router redundancy protocol (VRRP) and keepalived, MetalLB leverages a gossip-based protocol to identify instances of node failure. When a node failure is detected, another node assumes the role of the leader node, and a gratuitous ARP message is dispatched to broadcast this change.
- MetalLB operating in `layer3` or border gateway protocol (BGP) mode delegates failure detection to the network. The BGP router or routers that the OpenShift Container Platform nodes have established a connection with will identify any node failure and terminate the routes to that node.

Using MetalLB instead of IP failover is preferable for ensuring high availability of pods and services.
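As an illustration of the layer 2 setup described above, the following sketch builds the two MetalLB custom resources usually involved: an `IPAddressPool` that defines which external addresses MetalLB may assign, and an `L2Advertisement` that announces that pool in layer 2 mode. The pool name, the address range (taken from the documentation-only 192.0.2.0/24 block), and the `metallb-system` namespace are assumptions for the example, not values from this document. Adjust them to match your environment and your MetalLB Operator installation.

```python
import json


def metallb_layer2_manifests(pool_name, address_range, namespace="metallb-system"):
    """Build an IPAddressPool and a matching L2Advertisement, wrapped in a List."""
    pool = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "IPAddressPool",
        "metadata": {"name": pool_name, "namespace": namespace},
        # Addresses that MetalLB may hand out to services of type LoadBalancer.
        "spec": {"addresses": [address_range]},
    }
    advertisement = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "L2Advertisement",
        "metadata": {"name": f"{pool_name}-l2", "namespace": namespace},
        # Announce only the pool defined above.
        "spec": {"ipAddressPools": [pool_name]},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [pool, advertisement]}


if __name__ == "__main__":
    # Hypothetical pool name and address range, for illustration only.
    manifests = metallb_layer2_manifests("example-pool", "192.0.2.10-192.0.2.20")
    print(json.dumps(manifests, indent=2))
```

The script only prints JSON. It does not contact a cluster, so you can review the output before saving it to a file and applying it.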