Overcommit in OpenShift Virtualization

Overcommit occurs when the total virtual resources allocated to VMs exceed the physical resources available on the host, enabling higher workload density by leveraging the fact that VMs rarely use their full allocated capacity simultaneously.

Accessing the OpenShift Cluster

OpenShift Console: {openshift_cluster_console_url}

Log in from the CLI:

    oc login -u {openshift_cluster_admin_username} -p {openshift_cluster_admin_password} --server={openshift_api_server_url}

CPU Overcommit

In OpenShift Virtualization, compute resources assigned to virtual machines (VMs) are backed by either guaranteed CPUs or time-sliced CPU shares.

Guaranteed CPUs, also known as CPU reservation, dedicate CPU cores or threads to a specific workload, making them unavailable to any other workload. Assigning guaranteed CPUs to a VM ensures sole access to a reserved physical CPU. To use guaranteed CPUs, enable dedicated resources for the VM.

Time-sliced CPUs dedicate a slice of time on a shared physical CPU to each workload. You can specify the slice size during VM creation or when the VM is offline. By default, each vCPU receives 100 milliseconds (1/10 of a second) of physical CPU time.

With time-sliced CPUs, the Linux kernel’s Completely Fair Scheduler (CFS) manages how VMs share physical CPU cores. CFS rotates VMs through available cores, giving each VM a proportional slice of CPU time based on its configured CPU requests.

The default CPU overcommit ratio is 10:1. To allow for it, each VM's virt-launcher pod requests 100m of CPU per vCPU, which is 1/10th of a host CPU from a Kubernetes resource and scheduling perspective.

You can change this default 10:1 ratio by setting the cluster CPU Allocation Ratio. The ratio determines the CPU request that each vCPU receives by default, which in turn caps the level of CPU overcommit through Kubernetes request-based scheduling.

Note: Resource assignments are made when the virt-launcher pod is scheduled, so after a ratio change, existing VMs must be live migrated, or stopped and restarted, to pick up the new CPU allocation behavior.
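The relationship between the allocation ratio and a VM's CPU request can be sketched as simple arithmetic. The function name below is hypothetical, and the formula (vCPUs * 1000m / ratio) is an assumption derived from the 100m-per-vCPU default described above; the actual OpenShift Virtualization implementation may round differently.

```shell
# Estimate the CPU request (in millicores) of a virt-launcher pod.
# Assumption: request = vCPUs * 1000m / allocation ratio (integer division).
# $1 = total vCPUs, $2 = CPU allocation ratio
cpu_request_millicores() {
  echo $(( $1 * 1000 / $2 ))
}

cpu_request_millicores 48 10   # default 10:1 ratio -> 4800
cpu_request_millicores 48 4    # hypothetical 4:1 ratio -> 12000
```

A lower ratio raises the per-VM request, so fewer VMs fit on a node from the scheduler's point of view.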

Instructions

- Ensure you are logged in as the admin user to both the OpenShift Console (in your web browser) and the CLI (in the terminal window on the right side of your screen), then continue to the next step.

- To understand CPU overcommit, you must first identify how many physical CPU cores are available on your worker nodes.

-

List all worker nodes in your cluster:

oc get nodes -l node-role.kubernetes.io/worker=OutputNAME STATUS ROLES AGE VERSION worker-cluster-pg8lt-1 Ready worker 4h59m v1.33.5 worker-cluster-pg8lt-2 Ready worker 4h58m v1.33.5 worker-cluster-pg8lt-3 Ready worker 4h58m v1.33.5 -

Show available CPU resources on a specific node:

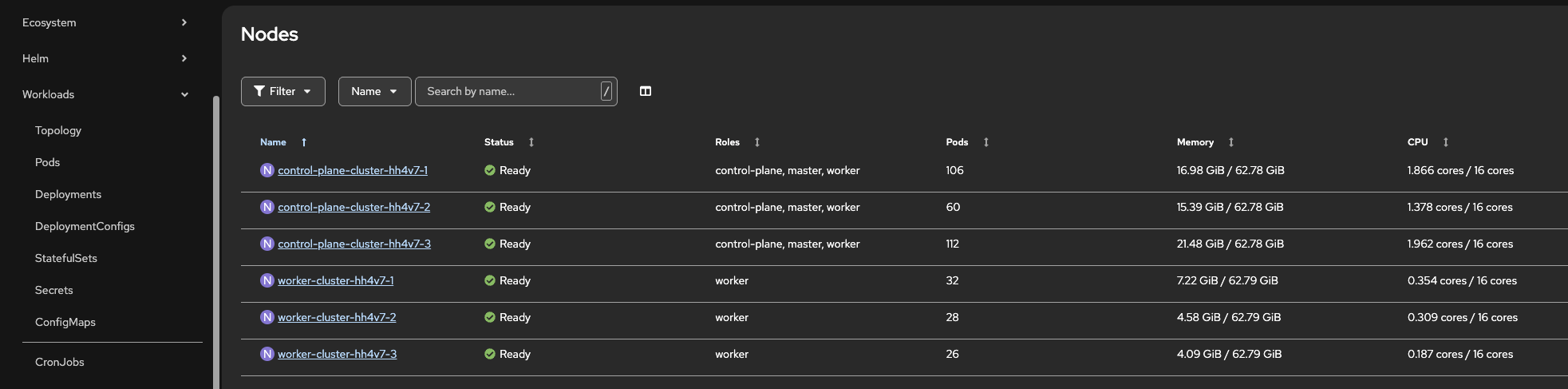

oc describe node <node_name> | grep -A 10 "Capacity:"OutputCapacity: cpu: 16 devices.kubevirt.io/kvm: 1k devices.kubevirt.io/tun: 1k devices.kubevirt.io/vhost-net: 1k ephemeral-storage: 104266732Ki hugepages-1Gi: 0 hugepages-2Mi: 0 memory: 65836964Ki pods: 250The

cpuvalue shows the number of physical CPU cores (or threads if hyperthreading is enabled) available on the node. -

- Alternatively, check CPU allocatable resources (physical CPUs minus system reservations):

    oc get node "node_name" -o jsonpath='{.status.allocatable.cpu}{"\n"}'

  Output:

    15500m

  Using the OpenShift Console: Navigate to Compute → Nodes
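The gap between capacity and allocatable CPU is what the node holds back for system use. The following minimal sketch works that out from the two values shown above. The helper name is hypothetical, and it only handles whole-CPU values ("16") and millicore values ("15500m"), not decimal quantities.

```shell
# Convert a Kubernetes CPU quantity to millicores.
# Handles only whole CPUs ("16") and millicores ("15500m").
to_millicores() {
  case $1 in
    *m) echo "${1%m}" ;;
    *)  echo $(( $1 * 1000 )) ;;
  esac
}

capacity=$(to_millicores 16)          # cpu value under "Capacity:"
allocatable=$(to_millicores 15500m)   # .status.allocatable.cpu
echo "reserved for the system: $(( capacity - allocatable ))m"   # 500m
```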


Viewing VM vCPU Allocations

- List all running VMs and their vCPU counts:

    oc get vms -n over-commit -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,vCPUs:.spec.template.spec.domain.cpu.cores,STATUS:.status.printableStatus

  Output:

    NAMESPACE     NAME              vCPUs   STATUS
    over-commit   overcommit-vm-1   24      Running

- For a specific VM, check the vCPU configuration:

    oc get vm overcommit-vm-1 -n over-commit -o jsonpath='{.spec.template.spec.domain.cpu.cores}{"\n"}'

  Output:

    24

View CPU requests and limits for a running VM:

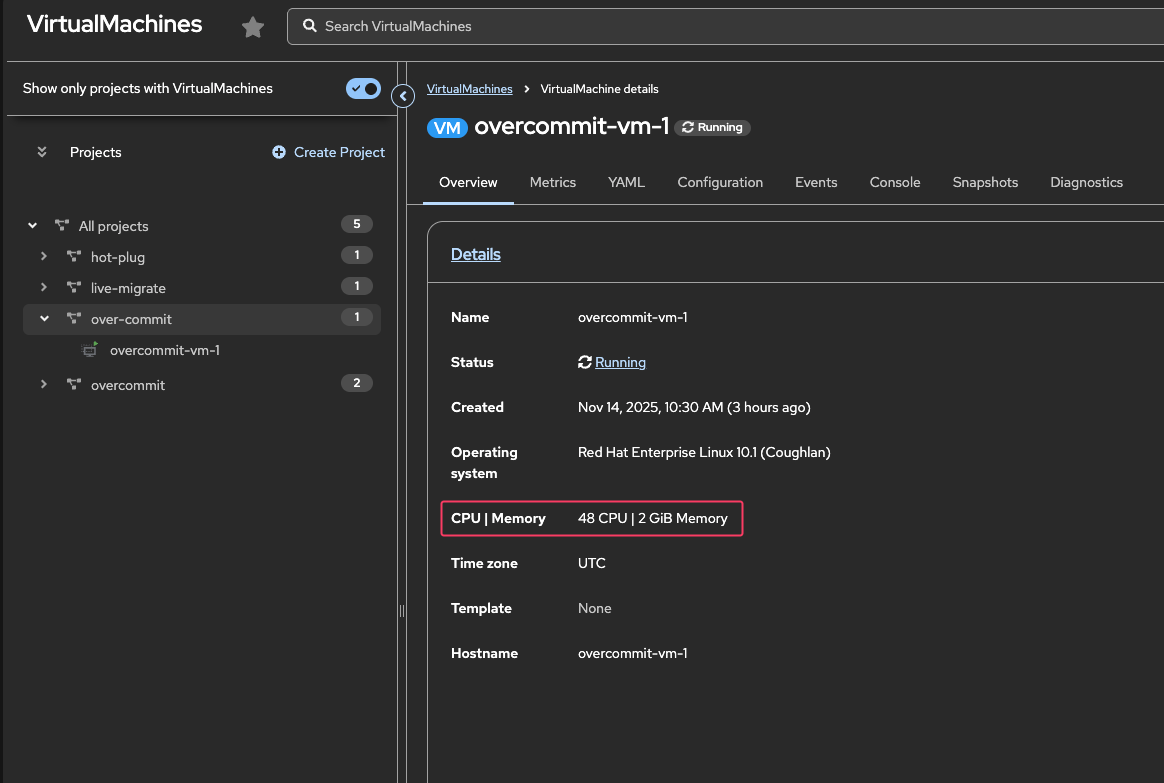

oc get vmi overcommit-vm-1 -n over-commit -o jsonpath='{.spec.domain.cpu}{"\n"}'Output{"cores":24,"maxSockets":8,"model":"host-model","sockets":2,"threads":1}Using the OpenShift Console:

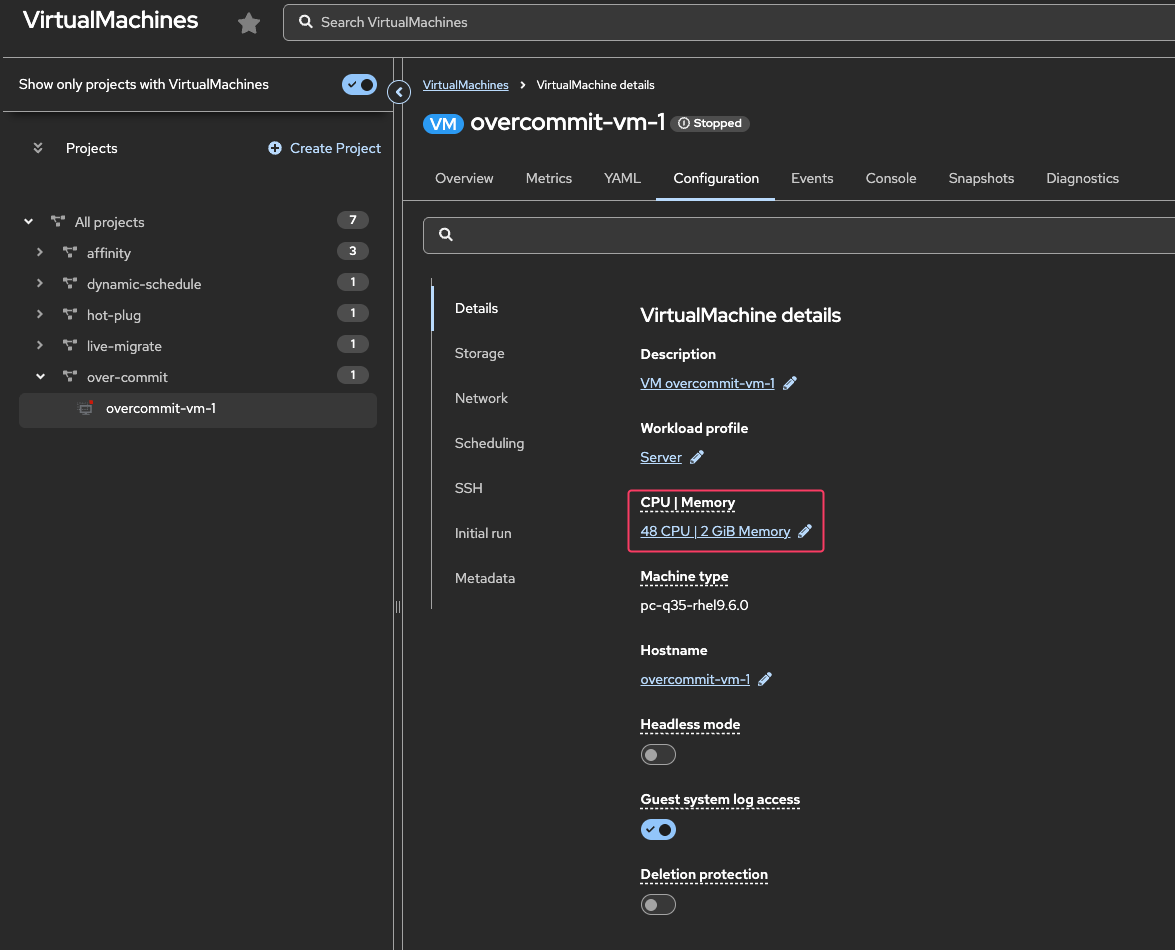

Navigate to Virtualization → Virtual Machines and select the overcommit-vm-1 VM.


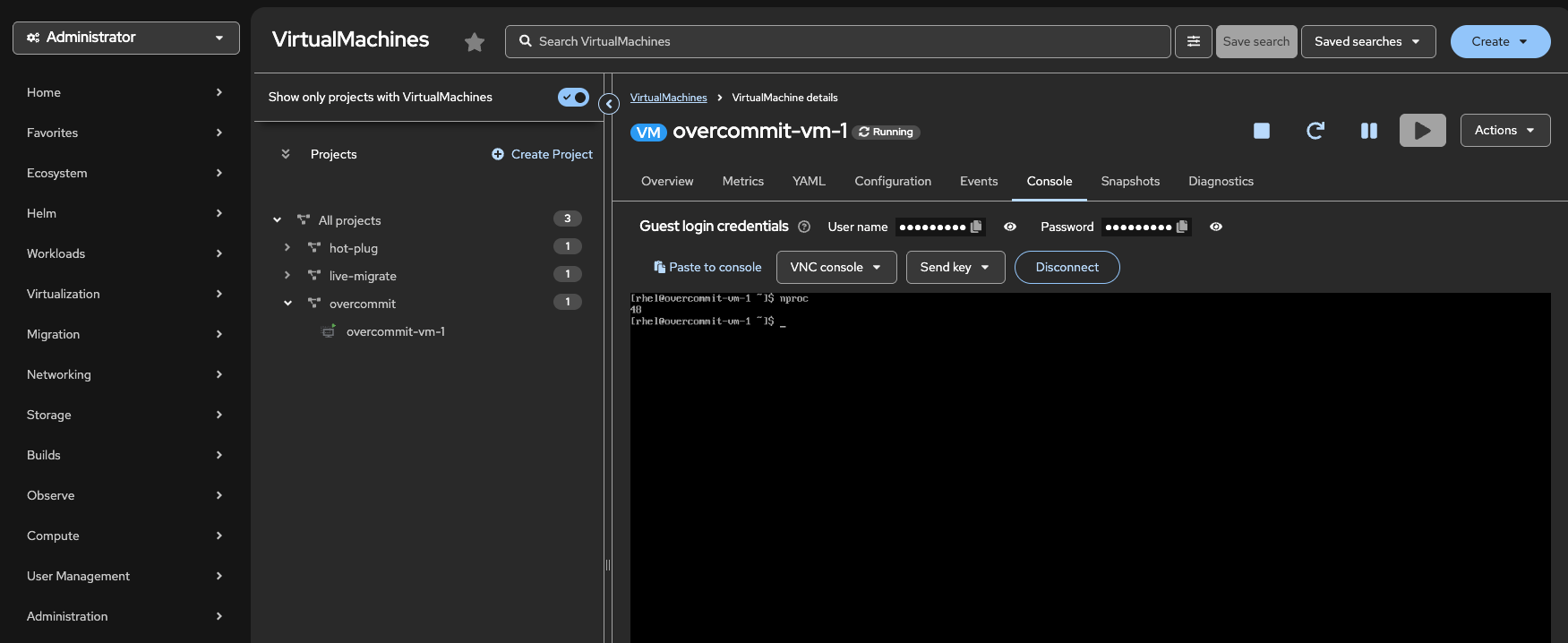

Confirm the number of CPUs inside the guest

From the web console, log in to the VM and run the following command to verify the number of CPUs:

    nproc

Output:

    48
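The guest count of 48 is consistent with the topology reported for the VMI (2 sockets, 24 cores per socket, 1 thread per core). A minimal sketch of that multiplication, using a hypothetical function name:

```shell
# Total vCPUs presented to the guest = sockets * cores * threads.
# $1 = sockets, $2 = cores per socket, $3 = threads per core
guest_cpus() {
  echo $(( $1 * $2 * $3 ))
}

guest_cpus 2 24 1   # topology of overcommit-vm-1 -> 48
```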

Calculating CPU Overcommit Ratio

To calculate the overcommit ratio on a specific node:

- Identify all VMs running on the node. In the following command, replace "node_name" with the actual node name:

    NODE="node_name"   # Replace with the actual node name
    oc get vmi -A -o wide | grep "$NODE"

  Output:

    affinity      pod-anti-affinity-vm   4h19m   Running   10.133.2.11   worker-cluster-pg8lt-3   True   True
    over-commit   overcommit-vm-1        47m     Running   10.133.2.25   worker-cluster-pg8lt-3   True   True

- Sum the vCPUs for all VMs on that node:

    oc get vmi -A -o json | \
      jq -r --arg NODE "$NODE" \
      '.items[] | select(.status.nodeName == $NODE) | { name: .metadata.name, total_vcpus: ((.spec.domain.cpu.sockets // 1) * (.spec.domain.cpu.cores // 1) * (.spec.domain.cpu.threads // 1)) }' | \
      jq -s 'map(.total_vcpus) | add'

  Output:

    48

- Calculate the overcommit ratio:

  Overcommit Ratio = Total vCPUs allocated / Physical CPU cores

  Example:
  - Physical CPU cores: 16 (the cpu capacity reported by oc describe node earlier)
  - Total vCPUs allocated: 48
  - Overcommit ratio: 48 / 16 = 3:1
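The ratio calculation above can be scripted once the two inputs are known. This is a minimal sketch with a hypothetical function name; it uses integer arithmetic and truncates (rather than rounds) to one decimal place.

```shell
# Overcommit ratio = total vCPUs allocated / physical CPU cores.
# $1 = total vCPUs on the node, $2 = physical CPU cores
overcommit_ratio() {
  r=$(( $1 * 10 / $2 ))              # ratio scaled by 10
  echo "$(( r / 10 )).$(( r % 10 )):1"
}

overcommit_ratio 48 16   # values from the example -> 3.0:1
```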


Understanding the Default 10:1 CPU Overcommit Ratio

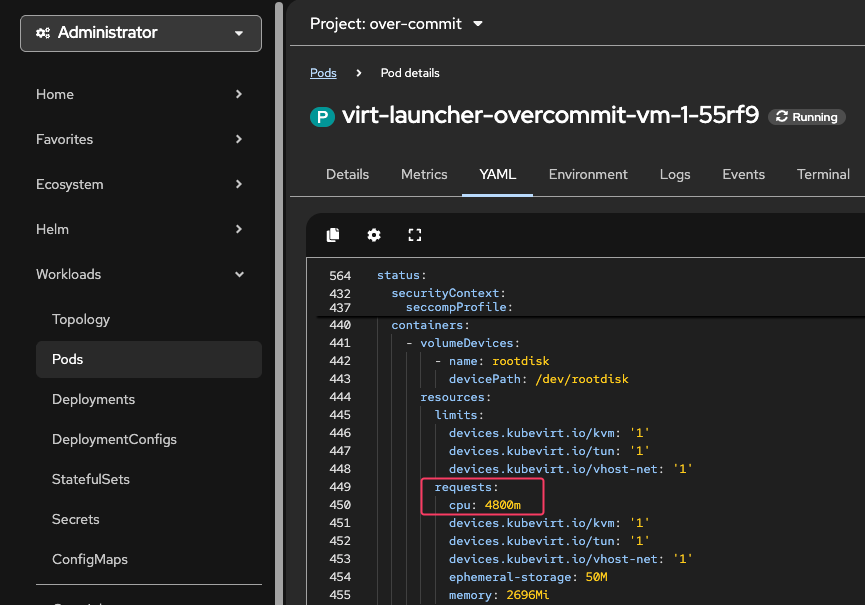

OpenShift Virtualization applies a default 10:1 CPU overcommit ratio when you don't explicitly specify CPU requests. This means that the CPU request on the virt-launcher pod is only 1/10th of the VM's total vCPU count.

-

Check virt-launcher Pod CPU Requests

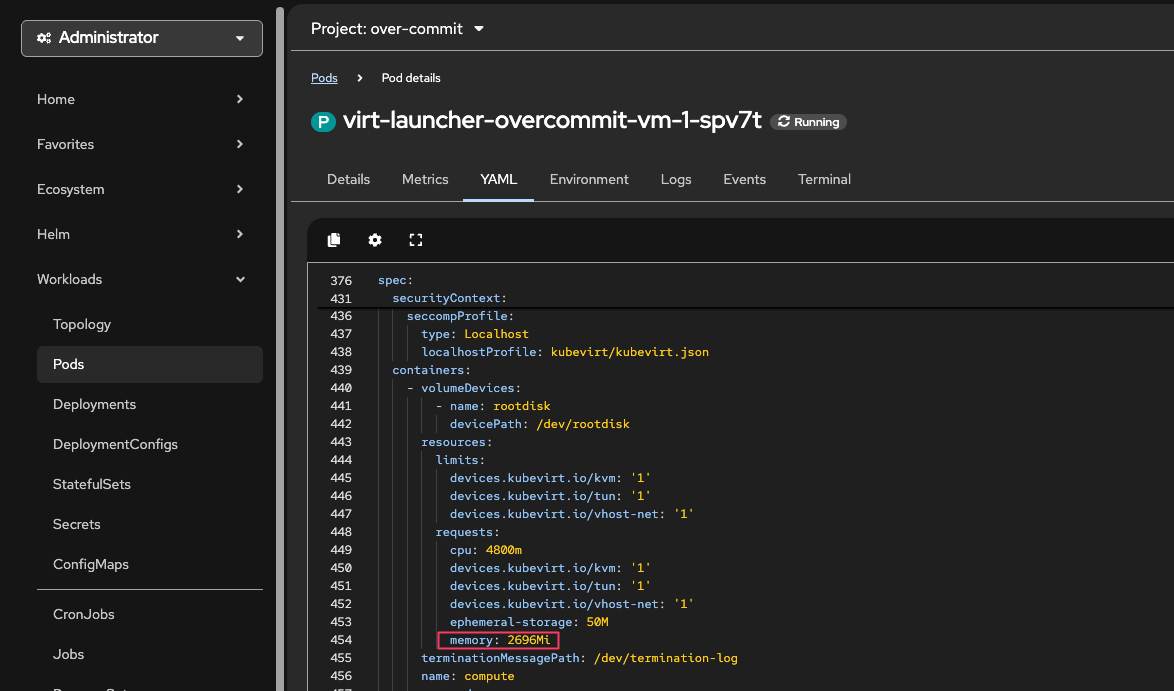

oc get pods -n over-commit -l vm.kubevirt.io/name=overcommit-vm-1OutputNAME READY STATUS RESTARTS AGE virt-launcher-overcommit-vm-1-rtspw 2/2 Running 0 47moc get pod -n over-commit <pod_name> \ -o jsonpath='{.spec.containers[?(@.name=="compute")].resources}{"\n"}' | jqOutput{ "limits": { "devices.kubevirt.io/kvm": "1", "devices.kubevirt.io/tun": "1", "devices.kubevirt.io/vhost-net": "1" }, "requests": { "cpu": "4800m", "devices.kubevirt.io/kvm": "1", "devices.kubevirt.io/tun": "1", "devices.kubevirt.io/vhost-net": "1", "ephemeral-storage": "50M", "memory": "2696Mi" } }Using the OpenShift Console: Navigate to Workloads → Pods and select the virt-launcher pod.

A VM with 48 vCPUs only requests 4800m(0.1 * 48 = 4.8 CPU) by default! -
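To see how the request caps overcommit at scheduling time, the sketch below estimates how many identical 48-vCPU VMs would fit on one node by CPU request alone. It is an illustration only: the function name is hypothetical, it uses the 15500m allocatable value and 4800m request shown earlier, and it ignores memory and the CPU requests of any other pods on the node.

```shell
# Number of identical pods that fit by CPU request alone.
# $1 = node allocatable CPU (millicores), $2 = per-pod CPU request (millicores)
max_vms_by_cpu_request() {
  echo $(( $1 / $2 ))
}

vms=$(max_vms_by_cpu_request 15500 4800)
echo "VMs that fit by CPU request: $vms"                      # 3
echo "vCPUs scheduled: $(( vms * 48 )) on 16 physical CPUs"   # 144
```

Under these assumptions, 144 vCPUs on 16 physical CPUs is a 9:1 ratio, which stays under the configured 10:1 ceiling.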

Memory Overcommit

By design, Kubernetes, and hence OpenShift and OpenShift Virtualization, does not allow use of swap.

Memory oversubscription without swap is hazardous: if the memory required by processes running on a node exceeds the available RAM, processes are killed. That is never desirable, and it is particularly harmful for VMs, because every workload running inside a killed VM goes down with it.

OpenShift Virtualization has built the wasp-agent component to permit controlled use of swap with VMs. Refer to the wasp-agent component documentation for more information.

Note: We won't configure the wasp-agent component with a real swap backend in this lab.

The memoryOvercommitPercentage parameter tells OpenShift Virtualization (CNV) how to scale the memory request made for each VM it creates. When it is set to 100, CNV bases the memory request on the full declared memory size of the VM. When it is set to a higher value, CNV sets the request to less than the memory the VM declares, which allows memory to be overcommitted.

For example, with a VM with 16 GiB configured, if the overcommit percentage is set to its default value of 100, the memory request that CNV will apply to the pod is 16 GiB plus some extra for the overhead of the QEMU process running the VM. If it’s set to 200, the request will be set to 8 GiB plus the overhead.
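The scaling in this example can be sketched as follows. The function name is hypothetical, and the formula (guest memory * 100 / percentage) is an assumption inferred from the two data points above. It deliberately leaves out the QEMU overhead, which is added on top of the scaled value.

```shell
# Scaled guest-memory portion of the pod request, in MiB (overhead excluded).
# Assumption: request = guest memory * 100 / memoryOvercommitPercentage.
# $1 = guest memory in MiB, $2 = memoryOvercommitPercentage
memory_request_mib() {
  echo $(( $1 * 100 / $2 ))
}

memory_request_mib 16384 100   # 16 GiB at 100% -> 16384 (16 GiB)
memory_request_mib 16384 200   # 16 GiB at 200% -> 8192 (8 GiB)
```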

Instructions

- Ensure you are logged in as the admin user to both the OpenShift Console (in your web browser) and the CLI (in the terminal window on the right side of your screen), then continue to the next step.

- Memory requests with the default overcommit percentage

  Check the memory allocated to the VM:

    oc get vm -n over-commit overcommit-vm-1 -o json | jq '.spec.template.spec.domain.memory'

  Output:

    {
      "guest": "2Gi"
    }

- Observe the memory requests for the virt-launcher pod compared to the memory allocated to the VM:

    oc get pod -n over-commit -l vm.kubevirt.io/name=overcommit-vm-1 -o json | jq '.items[0].spec.containers[0].resources.requests.memory'

  Output:

    "2696Mi"

  The virt-launcher pod requests 2696Mi, which is 2048Mi plus some overhead. This overhead is necessary and expected: it ensures the VM has enough resources to run properly.
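The size of that overhead follows from the two values just observed. The helper below is a hypothetical sketch that normalizes the quantity formats seen in this lab (Gi, Mi, or plain bytes) to MiB; it does not cover other Kubernetes suffixes.

```shell
# Convert a memory quantity to MiB.
# Handles only "Gi", "Mi", and plain byte counts.
to_mib() {
  case $1 in
    *Gi) echo $(( ${1%Gi} * 1024 )) ;;
    *Mi) echo "${1%Mi}" ;;
    *)   echo $(( $1 / 1048576 )) ;;
  esac
}

guest=$(to_mib 2Gi)         # memory declared for the VM
request=$(to_mib 2696Mi)    # memory requested by the virt-launcher pod
echo "overhead: $(( request - guest ))Mi"   # 648Mi
```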

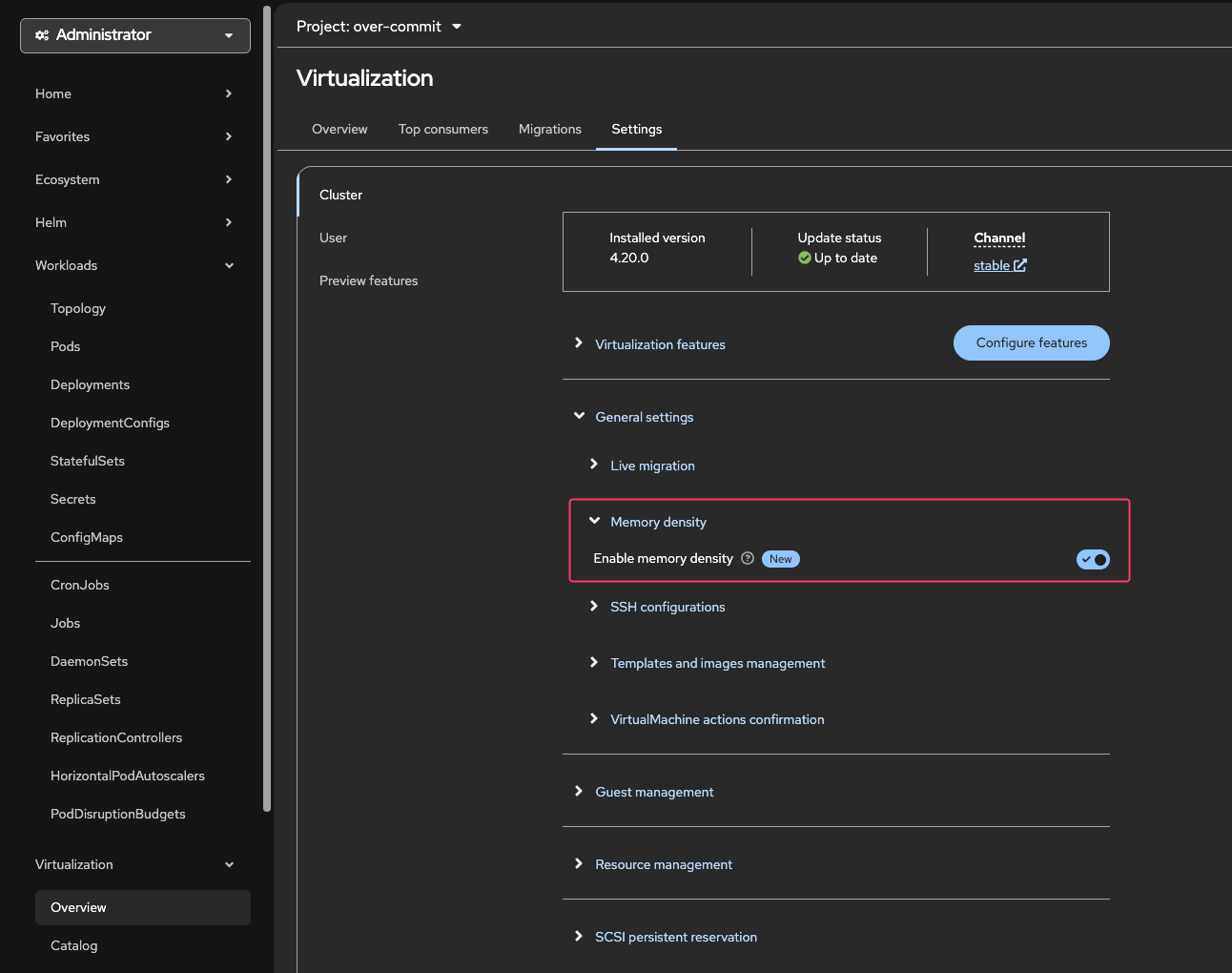

- Enable the memory overcommit feature

  Using the OpenShift Console: Navigate to Virtualization → Overview → Settings → Cluster → General Settings and enable "Memory density".

  You can confirm the setting using the CLI:

    oc get hyperconverged -n openshift-cnv kubevirt-hyperconverged -o json | jq '.spec.higherWorkloadDensity.memoryOvercommitPercentage'

  Output:

    150

  This means that the memory overcommit percentage is 150%, which is a 1.5:1 ratio.

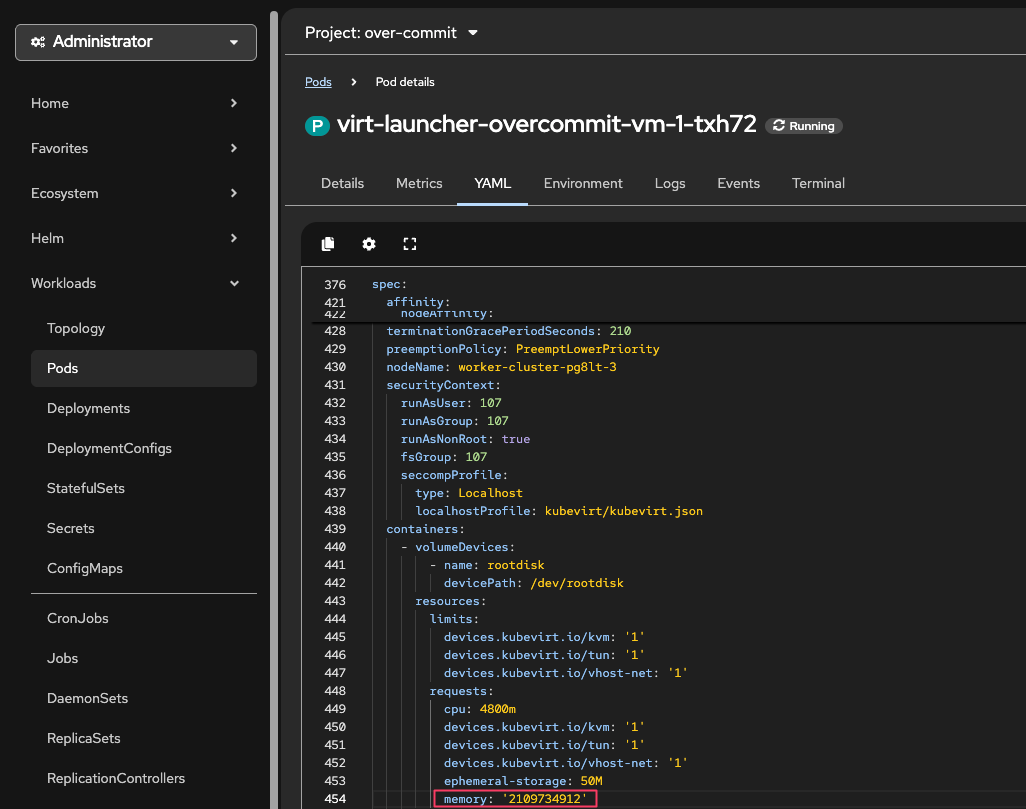

- Observe the memory requests for the virt-launcher pod compared to the memory allocated to the VM.

  Live migrate the VM (or stop and restart it) to apply the new setting, then check the memory request of the virt-launcher pod again with the same oc get pod command.

  The virt-launcher pod is requesting 2109734912 bytes, which is 2012Mi (about 1.96GiB).

  This is less than the 2048Mi of guest memory configured for the VM. At 150%, the request covers only 2048 / 1.5 ≈ 1365Mi of guest memory, plus the overhead.
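The numbers at 150% can be cross-checked with a few lines of arithmetic. This sketch assumes the request is the scaled guest memory plus overhead, as described earlier; the variable names are illustrative and integer division truncates the scaled value.

```shell
request_bytes=2109734912
request_mib=$(( request_bytes / 1048576 ))    # bytes -> MiB
scaled_guest_mib=$(( 2048 * 100 / 150 ))      # 2Gi guest memory at 150%

echo "request: ${request_mib}Mi"                                   # 2012Mi
echo "scaled guest memory: ${scaled_guest_mib}Mi"                  # 1365Mi
echo "implied overhead: $(( request_mib - scaled_guest_mib ))Mi"   # 647Mi
```

The implied overhead is close to the 648Mi seen at the default percentage (2696Mi - 2048Mi), which suggests that only the guest-memory portion of the request is being scaled.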

- Increase the memory overcommit percentage to 200%.

- Patch the hyperconverged custom resource to set the memory overcommit percentage to 200%:

    oc patch hyperconverged -n openshift-cnv kubevirt-hyperconverged --type merge -p '{"spec":{"higherWorkloadDensity":{"memoryOvercommitPercentage":200}}}'

  Output:

    hyperconverged.hco.kubevirt.io/kubevirt-hyperconverged patched

    oc get hyperconverged -n openshift-cnv kubevirt-hyperconverged -o json | jq '.spec.higherWorkloadDensity.memoryOvercommitPercentage'

  Output:

    200

  This means that the memory overcommit percentage is now 200%, which is a 2:1 ratio.

- Live migrate (or stop and restart) the VM to apply the new setting, then observe the memory requests for the virt-launcher pod from the web console or from the CLI:

    oc get pod -n over-commit -l vm.kubevirt.io/name=overcommit-vm-1 -o json | jq '.items[0].spec.containers[0].resources.requests.memory'

  Output:

    "1670Mi"

  The virt-launcher pod is now requesting 1670Mi, which is about 1.6GiB.