Affinity and Anti-Affinity for VM Placement

This lab builds your proficiency in applying Node Affinity, Pod Affinity, and Pod Anti-Affinity to Virtual Machines. It demonstrates how these rules are applied in practical scenarios and reinforces the concepts behind them.

Accessing the OpenShift Cluster

Web console: {openshift_cluster_console_url}

CLI login:

    oc login -u {openshift_cluster_admin_username} -p {openshift_cluster_admin_password} --server={openshift_api_server_url}

API server: {openshift_api_server_url}

Username: {openshift_cluster_admin_username}

Password: {openshift_cluster_admin_password}

Node Affinity

Node Affinity defines rules that guide the scheduler to attract a Virtual Machine Pod to a specific node or group of nodes. These rules rely on matching labels applied to the nodes.

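The core use case for Node Affinity is to place a VM on nodes that have specific hardware features, such as a particular GPU model or a large amount of RAM, by matching the corresponding labels on the node. A required rule restricts the VM to matching nodes only, while a preferred rule (used in this lab) favors matching nodes without making them mandatory.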

This lab will demonstrate how Node Affinity is set up and how it functions.
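Because node affinity matches on node labels, a label selector gives a quick preview of which nodes a rule can target. The sketch below wraps `oc get nodes -l` in a small helper. The function name `nodes_matching` is hypothetical and not part of the lab; `zone=east` is the label applied later in this section.

```shell
# Hypothetical helper (not part of the lab): list the nodes whose labels match
# a selector, i.e. the nodes an affinity rule on that label can target.
nodes_matching() {
  local selector="$1"   # for example: zone=east
  oc get nodes -l "$selector" -o name
}
```

Calling `nodes_matching zone=east` prints one `node/<name>` line per matching node, and nothing on standard output while no node carries the label.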

Instructions

- Ensure you are logged in as the admin user to both the OpenShift Console (in your web browser) and the CLI (in the terminal window on the right side of your screen), then continue to the next step.

- Navigate to the affinity project: Home → Projects → affinity.

-

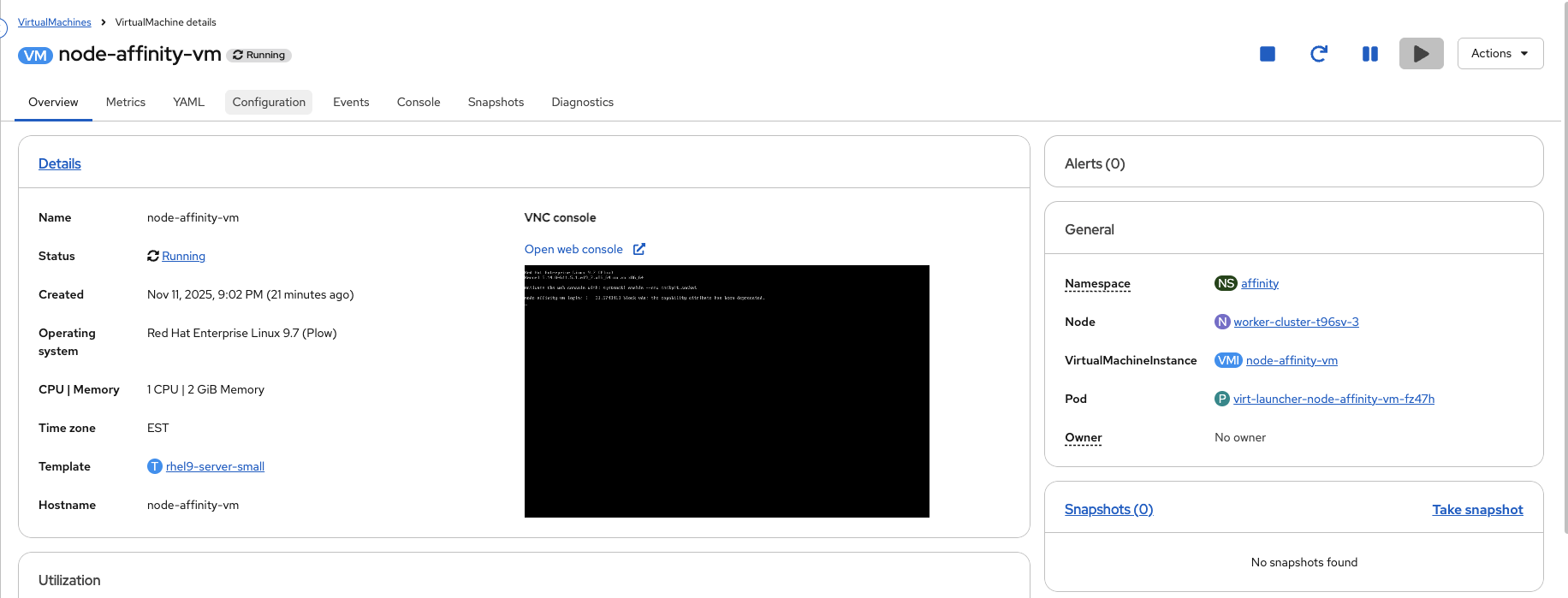

Find out what node the node-affinity-vm is running on. To do this you can look at the vm in the affinity project or you can use the openshift CLI.

Change to affinity projectoc project affinityNow using project "affinity" on server {openshift_api_server_url}.Get VM informationoc get vmi node-affinity-vmNAME AGE PHASE IP NODENAME READY node-affinity-vm 78m Running 10.235.0.25 worker-cluster-k66tl-1 True

- Set the label zone=east on any worker node where node-affinity-vm is not currently running.

  Get the node list:

      oc get nodes

  Output:

      NAME                            STATUS   ROLES                         AGE     VERSION
      control-plane-cluster-k66tl-1   Ready    control-plane,master,worker   4h38m   v1.33.5
      control-plane-cluster-k66tl-2   Ready    control-plane,master,worker   4h23m   v1.33.5
      control-plane-cluster-k66tl-3   Ready    control-plane,master,worker   4h37m   v1.33.5
      worker-cluster-k66tl-1          Ready    worker                        88m     v1.33.5
      worker-cluster-k66tl-2          Ready    worker                        87m     v1.33.5
      worker-cluster-k66tl-3          Ready    worker                        86m     v1.33.5

  Label the node:

      oc label node <worker where node-affinity-vm is not running> zone=east

  Output:

      node/worker-cluster-k66tl-3 labeled

  Alternatively, use the OpenShift Console: go to Compute → Nodes and pick a node where node-affinity-vm is not running. Click the three-dot menu, then click Edit labels. Once you have added the label, click Save.

- Make sure the label was applied.

  Find the node with the assigned label:

      oc get nodes <worker you just labeled> --show-labels | grep -i zone=east

  Alternatively, use the OpenShift Console: go to Compute → Nodes and pick the node you just labeled. Click the three-dot menu, then click Edit labels. Once you have finished reviewing the labels, click Save.

-

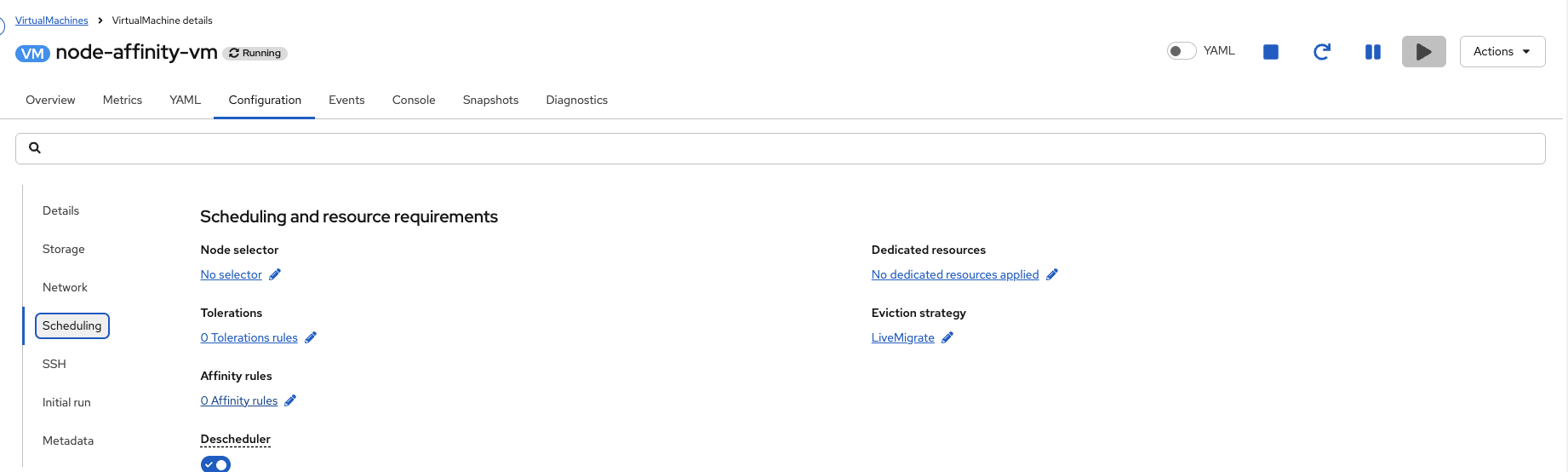

Navigate to Virtualization → Virtual Machines → node-affinity-vm → Configuration

- Navigate to Scheduling → Affinity rules.

- Click Add affinity rule.

-

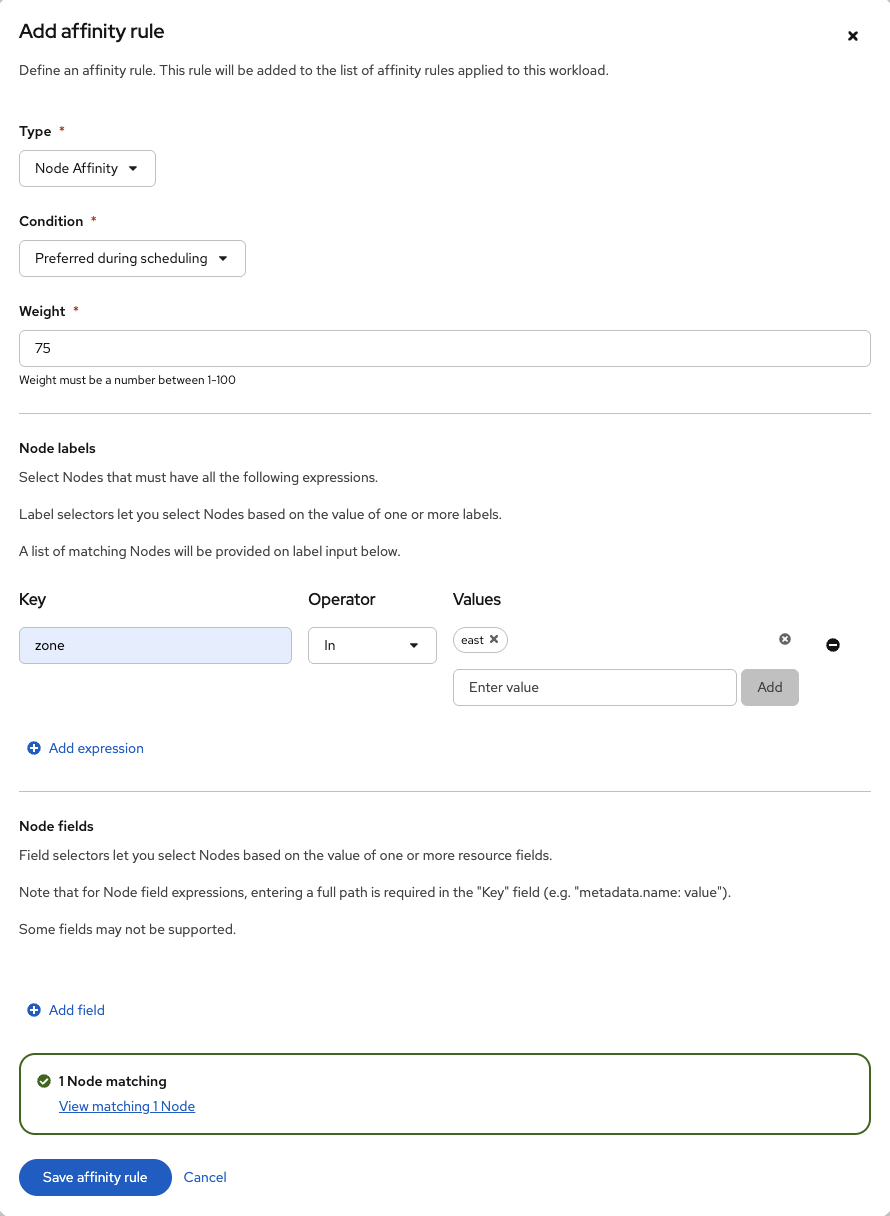

Change the Condition to Preferred during scheduling and set the weight to 75. Then, under Node Labels click Add Expression. Set the Key field to zone and the Values field to east, which is the label applied to the node earler. Your Final Node Affinity rule will look like the picture below. Confirm this is true and click Save affinity rule.

- Click Apply rules.

- Now let’s take a look at the Node Affinity rule on node-affinity-vm. You can view it either in the GUI by navigating to node-affinity-vm → YAML, or by using the CLI.

  View the VM definition:

      oc -n affinity get vm/node-affinity-vm -o jsonpath='{.spec.template.spec.affinity}'

  Output:

      {"nodeAffinity":{"preferredDuringSchedulingIgnoredDuringExecution":[{"preference":{"matchExpressions":[{"key":"zone","operator":"In","values":["east"]}]},"weight":75}]}}

- To make the VM follow the new affinity rule, you could migrate it to the labeled node. However, to show that the rule is working, we will power the VM off completely and then power it back on.

- Click Actions → Stop.

- Once the VM has completely stopped, click Actions → Start.

- The VM’s relocation to the labeled node is visible in the GUI. Alternatively, you can verify the change using the OpenShift CLI.

  Get the VMI information:

      oc get vmi node-affinity-vm

  Output:

      NAME               AGE   PHASE     IP            NODENAME                 READY
      node-affinity-vm   58s   Running   10.233.2.20   worker-cluster-k66tl-3   True
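The same verification can be scripted instead of read off the table. The sketch below is a hypothetical helper, not part of the lab: it reads the node name from the VMI's `.status.nodeName` field and checks that the node appears among the nodes selected by the label. The function name and the default namespace `affinity` are assumptions made for illustration.

```shell
# Hypothetical helper (not part of the lab): succeed when the VMI is running
# on a node that carries the given label, for example zone=east.
vmi_on_labeled_node() {
  local vmi="$1" selector="$2" ns="${3:-affinity}" node
  # The node a VMI is scheduled on is reported in .status.nodeName.
  node="$(oc get vmi "$vmi" -n "$ns" -o jsonpath='{.status.nodeName}')"
  # Compare against the exact "node/<name>" entries selected by the label.
  [ -n "$node" ] && oc get nodes -l "$selector" -o name | grep -qxF "node/${node}"
}
```

For example, `vmi_on_labeled_node node-affinity-vm zone=east && echo "on a zone=east node"`. Because the rule in this lab is preferred rather than required, a failing check does not necessarily mean the rule is misconfigured; the scheduler may simply have chosen another node.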

Pod Affinity

Pod Affinity is a scheduling rule that co-locates a VM Pod and an existing Pod (or another VM Pod) with a specific label onto the same node.

The primary benefit of using pod affinity is to improve performance for dependent VMs or services that require low-latency communication by guaranteeing their placement on the same worker node.

This lab will illustrate the setup and function of Pod Affinity.
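Pod affinity selects on workload labels, so the VM that others should follow needs a label of its own. The instructions below add it interactively with `oc edit`; as a non-interactive alternative, the following sketch applies the same label with `oc patch` and `oc label`. The function name `label_vm_workload` and the default namespace are assumptions made for illustration.

```shell
# Hypothetical helper (not part of the lab): add a label to the VM template,
# so future VMIs carry it, and to the running VMI, so it applies right away.
label_vm_workload() {
  local vm="$1" label_key="$2" label_value="$3" ns="${4:-affinity}"
  # Custom resources such as VirtualMachine need a JSON merge patch.
  oc patch vm "$vm" -n "$ns" --type merge \
    -p "{\"spec\":{\"template\":{\"metadata\":{\"labels\":{\"${label_key}\":\"${label_value}\"}}}}}"
  # Label the running instance as well.
  oc label vmi "$vm" -n "$ns" "${label_key}=${label_value}" --overwrite
}
```

With the names used in this lab, the call would be `label_vm_workload node-affinity-vm app fedora`. The patch targets `spec.template.metadata.labels`, not the VM's top-level `metadata.labels`.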

Instructions

- Ensure you are logged in as the admin user to both the OpenShift Console (in your web browser) and the CLI (in the terminal window on the right side of your screen), then continue to the next step.

- To begin, apply a label to node-affinity-vm so that it can serve as the target of the pod affinity rule. Add the label app: fedora by editing both the running VMI and the VM definition with the OpenShift CLI.

  Modify the VMI:

      oc edit vmi node-affinity-vm -n affinity

  Add the label under metadata.labels:

      apiVersion: kubevirt.io/v1
      kind: VirtualMachineInstance
      metadata:
        annotations:
          kubevirt.io/latest-observed-api-version: v1
          kubevirt.io/storage-observed-api-version: v1
          kubevirt.io/vm-generation: "3"
          vm.kubevirt.io/flavor: small
          vm.kubevirt.io/os: rhel9
          vm.kubevirt.io/workload: server
        creationTimestamp: "2025-11-13T13:25:16Z"
        finalizers:
        - kubevirt.io/virtualMachineControllerFinalize
        - foregroundDeleteVirtualMachine
        generation: 13
        labels:
          app: fedora   # <-- put the label here
          kubevirt.io/domain: node-affinity-vm
          kubevirt.io/nodeName: worker-cluster-t96sv-1
          kubevirt.io/size: small
          network.kubevirt.io/headlessService: headless

  Save and exit the editor:

      :wq!

  Modify the VM:

      oc edit vm node-affinity-vm -n affinity

  Add the label under spec.template.metadata.labels, not under the top-level metadata.labels:

      apiVersion: kubevirt.io/v1
      kind: VirtualMachine
      metadata:
        annotations:
          kubemacpool.io/transaction-timestamp: "2025-11-12T05:17:34.117234412Z"
          kubevirt.io/latest-observed-api-version: v1
          kubevirt.io/storage-observed-api-version: v1
          vm.kubevirt.io/validations: |
            [
              {
                "name": "minimal-required-memory",
                "path": "jsonpath::.spec.domain.memory.guest",
                "rule": "integer",
                "message": "This VM requires more memory.",
                "min": 1610612736
              }
            ]
        creationTimestamp: "2025-11-12T02:02:05Z"
        finalizers:
        - kubevirt.io/virtualMachineControllerFinalize
        generation: 3
        labels:
          app: node-affinity-vm   # <-- do not put the label here
          kubevirt.io/dynamic-credentials-support: "true"
          podaffinity: ""
          vm.kubevirt.io/template: rhel9-server-small
          vm.kubevirt.io/template.namespace: openshift
          vm.kubevirt.io/template.revision: "1"
          vm.kubevirt.io/template.version: v0.34.1
        name: node-affinity-vm
        namespace: affinity
        resourceVersion: "1485819"
        uid: 20136774-16cb-46e5-8cb4-417de1712da6
      spec:
        dataVolumeTemplates:
        - apiVersion: cdi.kubevirt.io/v1beta1
          kind: DataVolume
          metadata:
            creationTimestamp: null
            name: node-affinity-vm
          spec:
            sourceRef:
              kind: DataSource
              name: rhel9
              namespace: openshift-virtualization-os-images
            storage:
              resources:
                requests:
                  storage: 30Gi
        runStrategy: RerunOnFailure
        template:
          metadata:
            annotations:
              vm.kubevirt.io/flavor: small
              vm.kubevirt.io/os: rhel9
              vm.kubevirt.io/workload: server
            creationTimestamp: null
            labels:
              app: fedora   # <-- put the label here
              kubevirt.io/domain: node-affinity-vm
              kubevirt.io/size: small
              network.kubevirt.io/headlessService: headless

  Save and exit the editor:

      :wq!

- Click the pod-affinity-vm virtual machine.

- Check where pod-affinity-vm is running, either in the OpenShift Console or on the command line.

  Get the VMI information:

      oc get vmi pod-affinity-vm

- Click Configuration → Scheduling.

- Click Affinity rules.

- Click Add affinity rule.

-

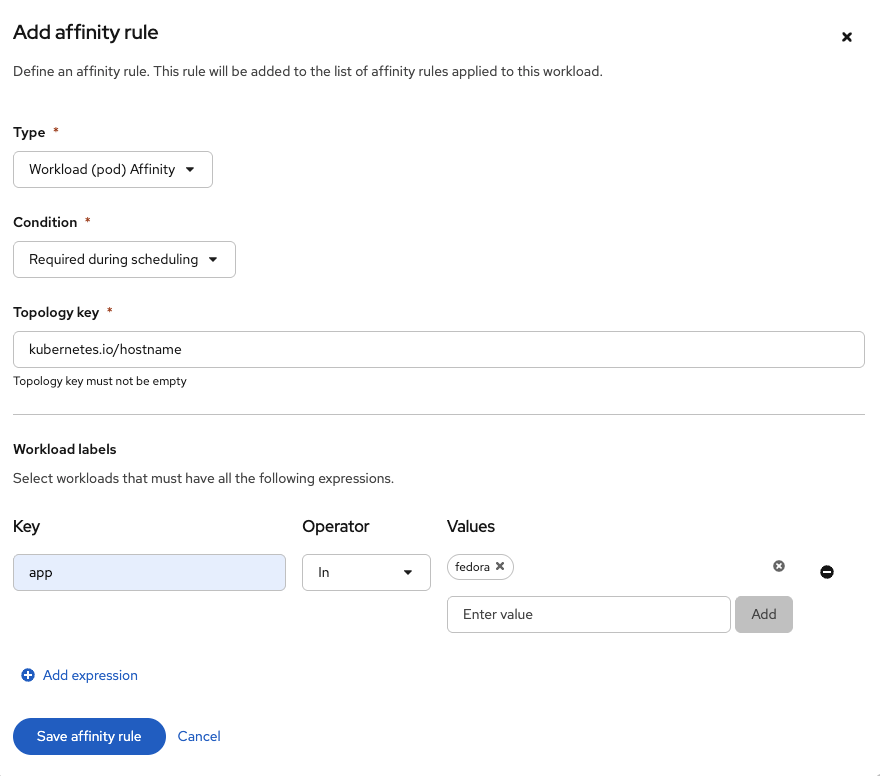

Change the type to Workload (pod) Affinity

- Keep the Condition set to Required during scheduling.

- Leave the Topology key at the default value.

- Click Add expression under Workload labels.

- Now set a label expression so that this VM runs on the same node as node-affinity-vm. Set the Key field to app and the Values field to fedora. Your final Pod Affinity rule should match the accompanying screenshot. Confirm that it does, then click Save affinity rule.

- Click Apply rules.

- Now let’s take a look at the Pod Affinity rule on pod-affinity-vm. You can view it either in the GUI by navigating to pod-affinity-vm → YAML, or by using the CLI.

      oc get vm pod-affinity-vm -o jsonpath='{.spec.template.spec.affinity}'

  Output:

      {"podAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":[{"labelSelector":{"matchExpressions":[{"key":"app","operator":"In","values":["fedora"]}]},"topologyKey":"kubernetes.io/hostname"}]}}

- Check where pod-affinity-vm and node-affinity-vm are running, either in the OpenShift Console or on the command line.

      oc get vmi pod-affinity-vm
      oc get vmi node-affinity-vm

- To make the VM follow the new affinity rule, you would normally migrate it to the node where node-affinity-vm is running. However, to show that the rule is working, we will power the VM off completely and then power it back on.

- On the pod-affinity-vm virtual machine, click Actions → Stop.

- Once the VM has completely stopped, click Actions → Start.

- Check where pod-affinity-vm and node-affinity-vm are running, either in the OpenShift Console or on the command line.

      oc get vmi pod-affinity-vm
      oc get vmi node-affinity-vm

- pod-affinity-vm is now running on the same node as node-affinity-vm because of the pod affinity rule.
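Comparing the two NODENAME columns by eye works, but the comparison can also be scripted. The following sketch is a hypothetical helper (the name `vmis_share_node` and the default namespace are assumptions) that reads `.status.nodeName` from both VMIs and succeeds only when they match.

```shell
# Hypothetical helper (not part of the lab): succeed when two VMIs report the
# same, non-empty node name.
vmis_share_node() {
  local ns="${3:-affinity}" first second
  first="$(oc get vmi "$1" -n "$ns" -o jsonpath='{.status.nodeName}')"
  second="$(oc get vmi "$2" -n "$ns" -o jsonpath='{.status.nodeName}')"
  # An empty name means the VMI is missing or not scheduled yet.
  [ -n "$first" ] && [ "$first" = "$second" ]
}
```

For example, `vmis_share_node pod-affinity-vm node-affinity-vm && echo "co-located"`. The helper fails when either VMI has no node assigned, so run it once both VMs are running again.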

Pod Anti-Affinity

Pod Anti-affinity is a crucial feature for achieving High Availability (HA) in virtualized environments. It functions by instructing the scheduler to prevent the co-location of VM Pods on the same node if they possess a specific label.

The primary benefit of this rule is to enhance application resilience. For instance, by ensuring that VM Pods belonging to the same service (such as a database cluster) are distributed across different nodes, a failure in a single node will not cause an outage for the entire service.

This lab will guide you through setting up and demonstrating the functionality of Pod Anti-Affinity.
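This lab uses a required anti-affinity rule, which leaves a VM unschedulable when no node satisfies it. Where that is too strict, a preferred rule is a softer alternative. The sketch below prints a JSON merge patch for such a rule; the function name `preferred_anti_affinity_patch` and the default weight of 100 are assumptions made for illustration, while the label and topology key match the ones used in this lab.

```shell
# Hypothetical helper (not part of the lab): print a merge patch that adds a
# *preferred* pod anti-affinity rule against pods labeled key=value,
# evaluated per node (topology key kubernetes.io/hostname).
preferred_anti_affinity_patch() {
  local key="$1" value="$2" weight="${3:-100}"
  cat <<EOF
{
  "spec": {
    "template": {
      "spec": {
        "affinity": {
          "podAntiAffinity": {
            "preferredDuringSchedulingIgnoredDuringExecution": [
              {
                "weight": ${weight},
                "podAffinityTerm": {
                  "labelSelector": {
                    "matchExpressions": [
                      { "key": "${key}", "operator": "In", "values": ["${value}"] }
                    ]
                  },
                  "topologyKey": "kubernetes.io/hostname"
                }
              }
            ]
          }
        }
      }
    }
  }
}
EOF
}
```

One way to apply it would be `oc patch vm pod-anti-affinity-vm -n affinity --type merge -p "$(preferred_anti_affinity_patch app fedora)"`. A merge patch adds the preferred rule next to any existing entries under `podAntiAffinity`, so a required rule created in the console would remain in place until it is removed.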

Instructions

- Ensure you are logged in as the admin user to both the OpenShift Console (in your web browser) and the CLI (in the terminal window on the right side of your screen), then continue to the next step.

- We will use pod-anti-affinity-vm and reuse the app: fedora label created in the previous section to build the pod anti-affinity rule.

- Click the pod-anti-affinity-vm virtual machine.

-

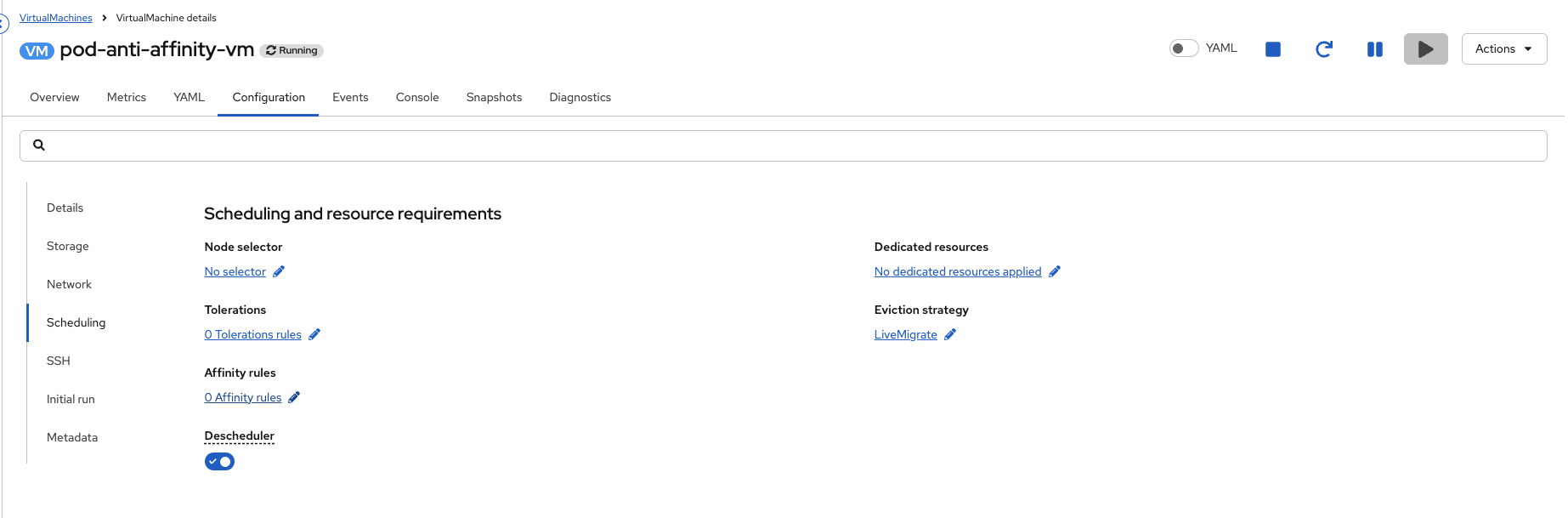

Click Configure → scheduling

- Click Affinity rules.

- Click Add affinity rule.

-

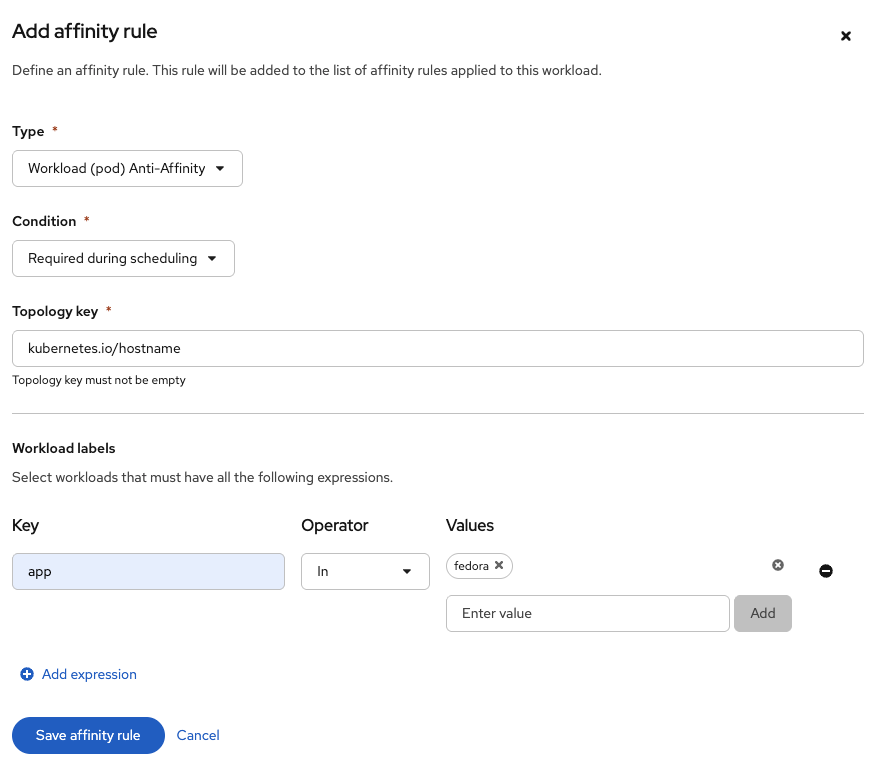

Change the type to Workload (pod) Anti-Affinity

- Keep the Condition set to Required during scheduling.

- Leave the Topology key at the default value.

- Click Add expression under Workload labels.

- Next, set a key and value to establish Pod Anti-Affinity, ensuring this VM runs on a different node than the VM labeled app: fedora (node-affinity-vm) and, because of the earlier pod affinity rule, pod-affinity-vm. Set the Key field to app and the Values field to fedora. The resulting Pod Anti-Affinity rule should match the accompanying screenshot. Verify that the rule is correct, then click Save affinity rule.

- Click Apply rules.

- Now let’s take a look at the Pod Anti-Affinity rule on pod-anti-affinity-vm. You can view it either in the GUI by navigating to pod-anti-affinity-vm → YAML, or by using the CLI.

      oc get vm pod-anti-affinity-vm -o jsonpath='{.spec.template.spec.affinity}'

  Output:

      {"podAntiAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":[{"labelSelector":{"matchExpressions":[{"key":"app","operator":"In","values":["fedora"]}]},"topologyKey":"kubernetes.io/hostname"}]}}

- Check where pod-anti-affinity-vm and node-affinity-vm are running, either in the OpenShift Console or on the command line.

      oc get vmi pod-anti-affinity-vm
      oc get vmi node-affinity-vm

- To make the VM follow the new anti-affinity rule, you would normally migrate it to a node that satisfies the rule. However, to show that the rule is working, we will power the VM off completely and then power it back on.

- On the pod-anti-affinity-vm virtual machine, click Actions → Stop.

- Once the VM has completely stopped, click Actions → Start.

- Check where pod-affinity-vm and pod-anti-affinity-vm are running, either in the OpenShift Console or on the command line.

      oc get vmi pod-anti-affinity-vm
      oc get vmi pod-affinity-vm

- pod-anti-affinity-vm is now running on a different node than pod-affinity-vm. If it was already on a different node before the restart, its placement may be unchanged.
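To review the outcome of all three exercises at once, the VMIs can be listed with their node and `app` label side by side. The sketch below is a hypothetical helper (the name `vmi_placement` and the default namespace are assumptions) built on the `custom-columns` output format of `oc get`.

```shell
# Hypothetical helper (not part of the lab): show each VMI in a namespace
# together with the node it runs on and the value of its "app" label.
vmi_placement() {
  oc get vmi -n "${1:-affinity}" \
    -o custom-columns='NAME:.metadata.name,NODE:.status.nodeName,APP:.metadata.labels.app'
}
```

Running `vmi_placement` after the final restart should show node-affinity-vm and pod-affinity-vm on one node and pod-anti-affinity-vm on another; VMIs without an `app` label show `<none>` in the APP column.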