Introduction

When deploying your ROSA cluster, you can configure many aspects of your worker nodes, but what happens when you need to change your worker nodes after they’ve already been created? These activities include scaling the number of nodes, changing the instance type, adding labels or taints, just to name a few.

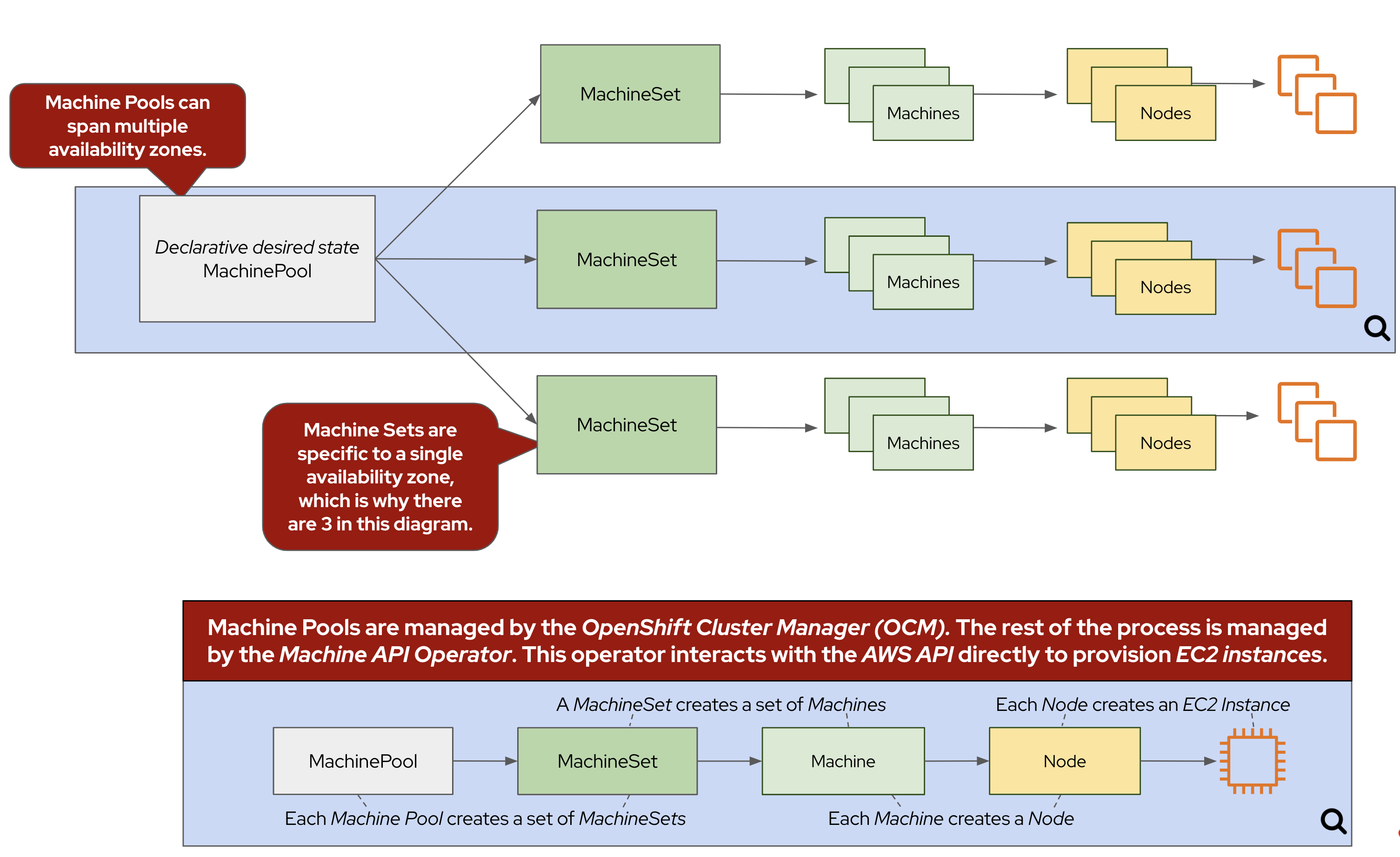

Many of these changes are done using Machine Pools. Machine Pools ensure that a specified number of Machine replicas are running at any given time. Think of a Machine Pool as a "template" for the kinds of Machines that make up the worker nodes of your cluster. If you’d like to learn more, see the Red Hat documentation on worker node management.

Here are some of the advantages of using ROSA Machine Pools to manage the size of your cluster:

- Scalability - A ROSA Machine Pool enables horizontal scaling of your cluster. It can easily add or remove worker nodes to handle changes in workload. This flexibility ensures that your cluster can dynamically scale to meet the needs of your applications.

- High Availability - ROSA Machine Pools support the creation of 3 replicas of workers across different availability zones. This redundancy helps ensure high availability of applications by distributing workloads.

- Infrastructure Diversity - ROSA Machine Pools allow you to provision worker nodes of different instance types. This enables you to leverage the best kind of instance family for different workloads.

- Integration with Cluster Autoscaler - ROSA Machine Pools integrate with the Cluster Autoscaler feature, which automatically adjusts the number of worker nodes based on the current demand. This integration scales the cluster up or down as needed, which helps control costs while maintaining performance.

Review the following diagram to see the relationship between a Machine Pool, Machine Set, Machines, and Nodes.

Scaling worker nodes

Via the CLI

- First, let’s see what Machine Pools already exist in our cluster. To do so, run the following command:

    rosa list machinepools -c rosa-${GUID}

Sample Output

    ID       AUTOSCALING  REPLICAS  INSTANCE TYPE  LABELS  TAINTS  AVAILABILITY ZONE  SUBNET                    DISK SIZE  VERSION  AUTOREPAIR
    workers  No           2/2       m6a.xlarge                     us-east-2a         subnet-068e9a693eeb96757  300 GiB    4.15.43  Yes

- Now, let’s take a look at the nodes inside of the ROSA cluster that have been created according to the instructions provided by the above MachinePool. To do so, run the following command:

    oc get nodes -l "hypershift.openshift.io/nodePool=rosa-${GUID}-workers"

Sample Output

    NAME                                      STATUS  ROLES   AGE   VERSION
    ip-10-0-0-164.us-east-2.compute.internal  Ready   worker  2d2h  v1.28.15+ff493be
    ip-10-0-0-42.us-east-2.compute.internal   Ready   worker  2d2h  v1.28.15+ff493be

For this workshop, we’ve deployed your ROSA cluster with a single machine pool consisting of two worker nodes.

- Now that we know that we have two worker nodes, let’s create a MachinePool to add a new worker node using the ROSA CLI. To do so, run the following command:

    rosa create machinepool -c rosa-${GUID} --replicas 1 --name workshop --instance-type m5.xlarge

Sample Output

    I: Fetching instance types
    I: Machine pool 'workshop' created successfully on cluster 'rosa-6n4s8'
    I: To view all machine pools, run 'rosa list machinepools -c rosa-6n4s8'

This command adds a single m5.xlarge instance to the first AWS availability zone in the region your cluster is deployed in.

- Now, let’s scale up our selected MachinePool from one to two machines. To do so, run the following command:

    rosa update machinepool -c rosa-${GUID} --replicas 2 workshop

Sample Output

    I: Updated machine pool 'workshop' on cluster 'rosa-6n4s8'

- Now that we’ve scaled the new machine pool, we can look at the status of the machine pool using the ROSA command line:

    rosa describe machinepool -c rosa-${GUID} workshop -o json | jq .status

Sample Output

    {
      "current_replicas": 1,
      "kind": "NodePoolStatus",
      "message": "Minimum availability requires 2 replicas, current 1 available"
    }

Note that the number of current_replicas may not *yet* match the desired number of replicas. This is because the machine provisioning process can take some time to complete.

- We can also get the state of our nodes to see the additional machines being provisioned:

    oc get nodes -l "hypershift.openshift.io/nodePool=rosa-${GUID}-workshop" --watch

Sample Output

    NAME                                     STATUS  ROLES   AGE   VERSION
    ip-10-0-0-74.us-east-2.compute.internal  Ready   worker  46s   v1.29.10+67d3387
    ip-10-0-0-91.us-east-2.compute.internal  Ready   worker  4m1s  v1.29.10+67d3387

- Let the above command run until all machines are in the Ready state. This means that they are ready and available to run Pods in the cluster. Press CTRL-C to exit the oc command.

- Now let’s scale the cluster back down to a total of 2 worker nodes by deleting the "workshop" Machine Pool. To do so, run the following command:

    rosa delete machinepool -c rosa-${GUID} workshop --yes

Sample Output

    I: Successfully deleted machine pool 'workshop' from cluster 'rosa-6n4s8'

- You can validate that the MachinePool has been deleted by using the rosa CLI:

    rosa list machinepools -c rosa-${GUID}

Sample Output

    ID       AUTOSCALING  REPLICAS  INSTANCE TYPE  LABELS  TAINTS  AVAILABILITY ZONES  SUBNETS  SPOT INSTANCES
    Default  No           2         m5.xlarge                      us-east-2a                   N/A
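If you would rather script this validation than read the table by eye, a minimal sketch follows. It assumes the first row of the `rosa list machinepools` output is a header and the first column is the machine pool ID, as in the sample output above. The `machinepool_exists` function name is hypothetical and is not part of the ROSA CLI.

```shell
# Hypothetical helper (not part of the ROSA CLI): succeed if a machine pool
# with the given ID appears in the `rosa list machinepools` table.
# Assumes the first row is a header and the first column is the pool ID.
machinepool_exists() {
  # $1 = cluster name, $2 = machine pool ID
  rosa list machinepools -c "$1" |
    awk -v id="$2" 'NR > 1 && $1 == id { found = 1 } END { exit !found }'
}
```

For example, `machinepool_exists "rosa-${GUID}" workshop || echo "workshop machine pool is gone"` should print the message once the deletion has completed.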

Congratulations! You’ve successfully scaled your cluster up and back down to two worker nodes.
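When scripting the scale-up step, the status check can be repeated until the pool catches up instead of re-running it by hand. The sketch below is one possible approach, assuming `rosa` and `jq` are installed and that the JSON contains `.status.current_replicas` as in the sample output earlier. The function names, attempt count, and delay are illustrative choices, not ROSA defaults.

```shell
# Hypothetical helpers (not part of the ROSA CLI).

# Print the current replica count of a machine pool (0 if the field is absent).
current_replicas() {
  # $1 = cluster name, $2 = machine pool ID
  rosa describe machinepool -c "$1" "$2" -o json |
    jq -r '.status.current_replicas // 0'
}

# Poll until the pool reports at least the desired number of replicas.
# Returns 0 on success, 1 if the attempts run out first.
wait_for_replicas() {
  # $1 = cluster, $2 = pool, $3 = desired replicas,
  # $4 = max attempts (default 40), $5 = seconds between attempts (default 30)
  local attempts="${4:-40}"
  local delay="${5:-30}"
  local try=1
  local current
  while [ "$try" -le "$attempts" ]; do
    current="$(current_replicas "$1" "$2")"
    current="${current:-0}"   # treat an empty result (e.g. a failed call) as 0
    echo "attempt ${try}: ${current}/${3} replicas available"
    if [ "$current" -ge "$3" ]; then
      return 0
    fi
    sleep "$delay"
    try=$((try + 1))
  done
  return 1
}
```

With the defaults, `wait_for_replicas "rosa-${GUID}" workshop 2` would return as soon as two replicas are reported, or give up after roughly 20 minutes.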