Deploy and Test the Base Model

Deploy the Base Model with vLLM

Ready to deploy your model? Let’s get started! Follow these steps to bring your model to life in the Data Science Project (userX):


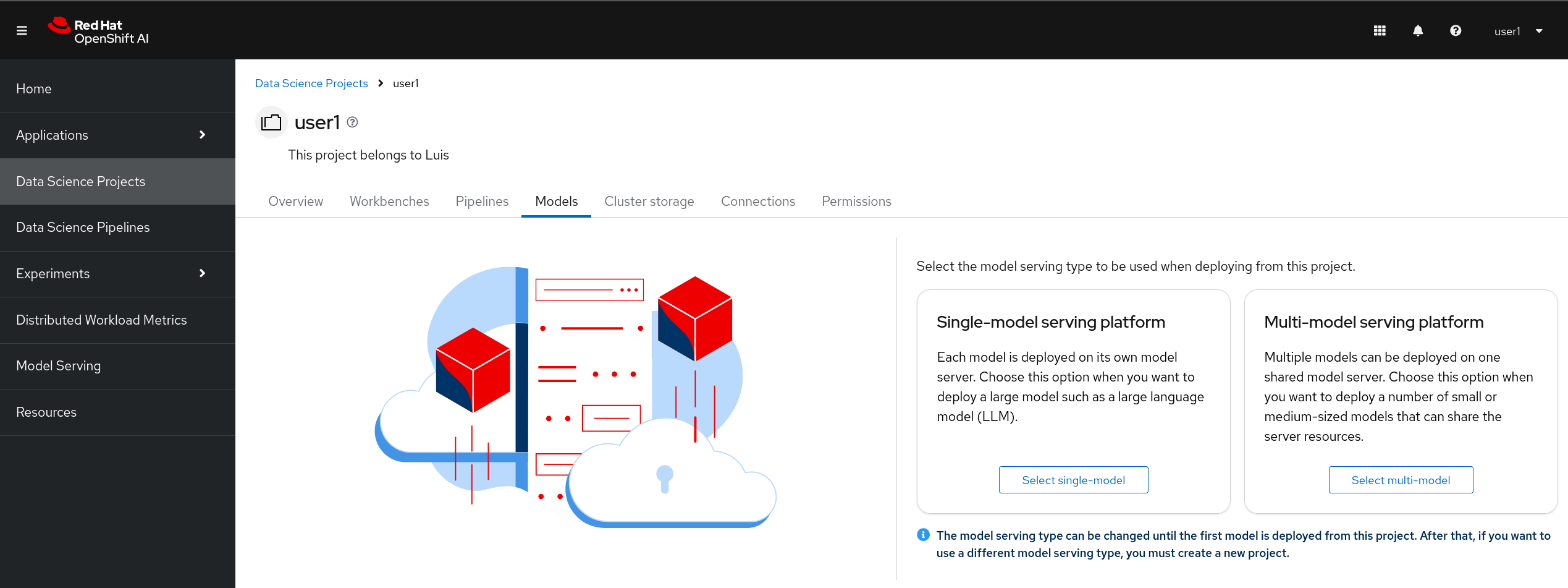

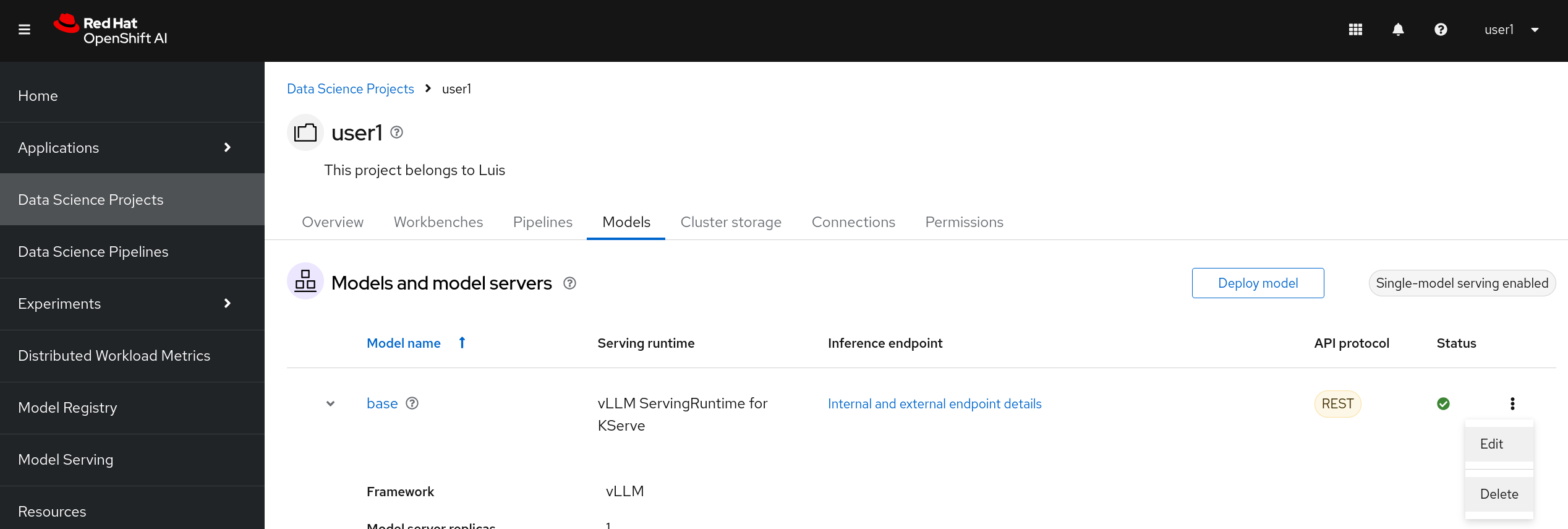

Navigate to Your Project: Head over to the Data Science Project you created and locate the Models section.


Select the Service Platform: Click on Models and choose the Single-model service platform option.


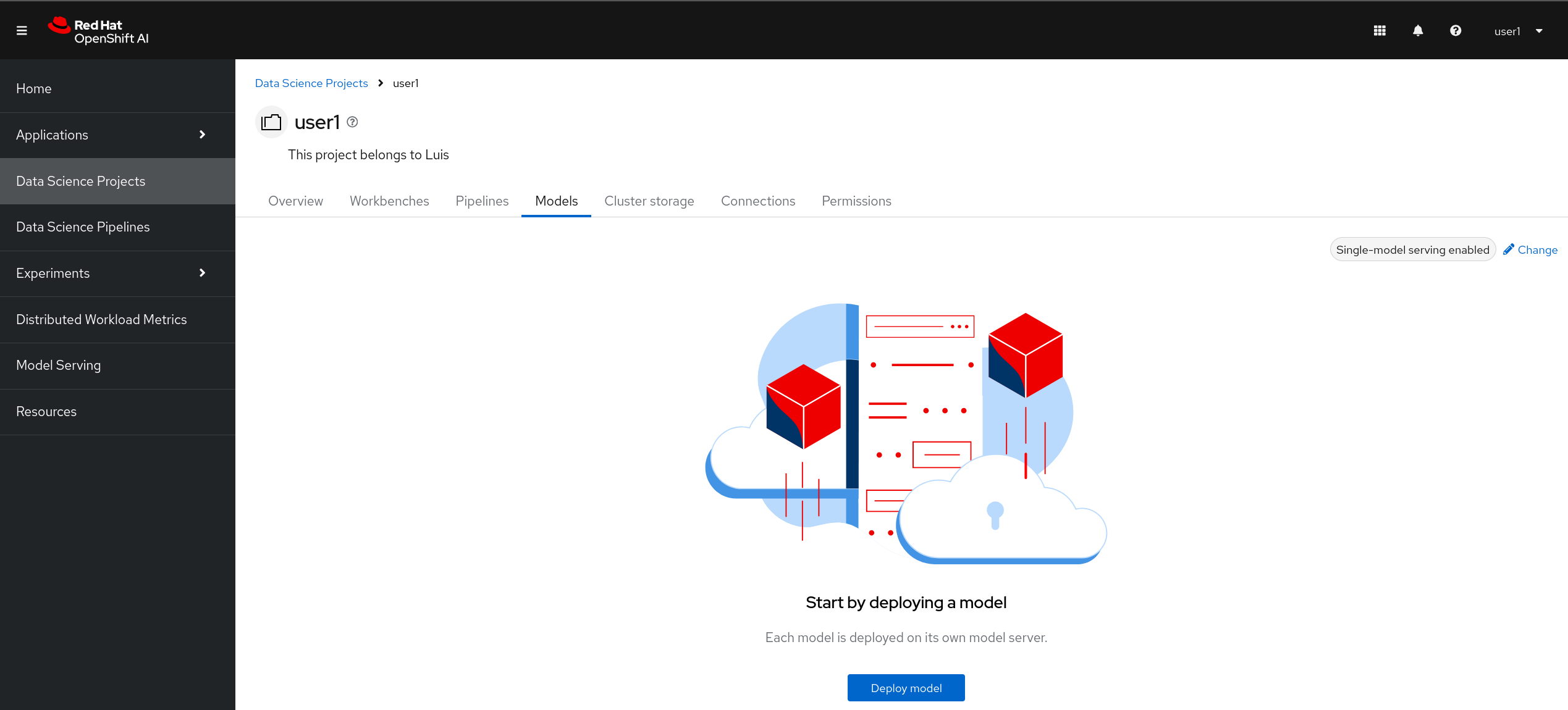

Deploy Your Model: Click on the Deploy model button to start the deployment process.


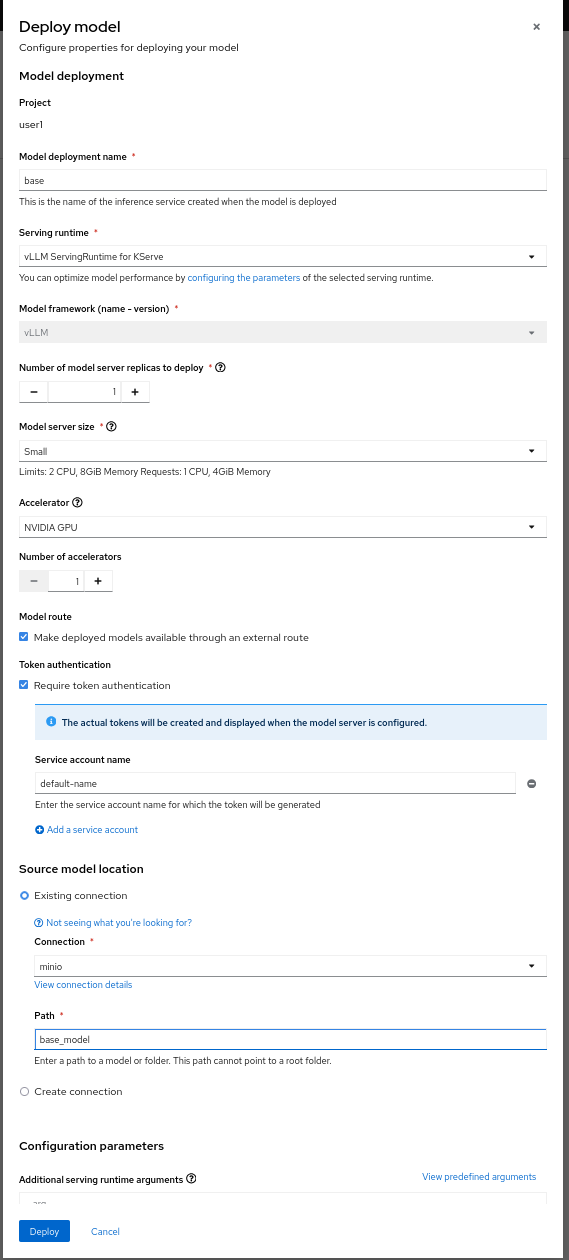

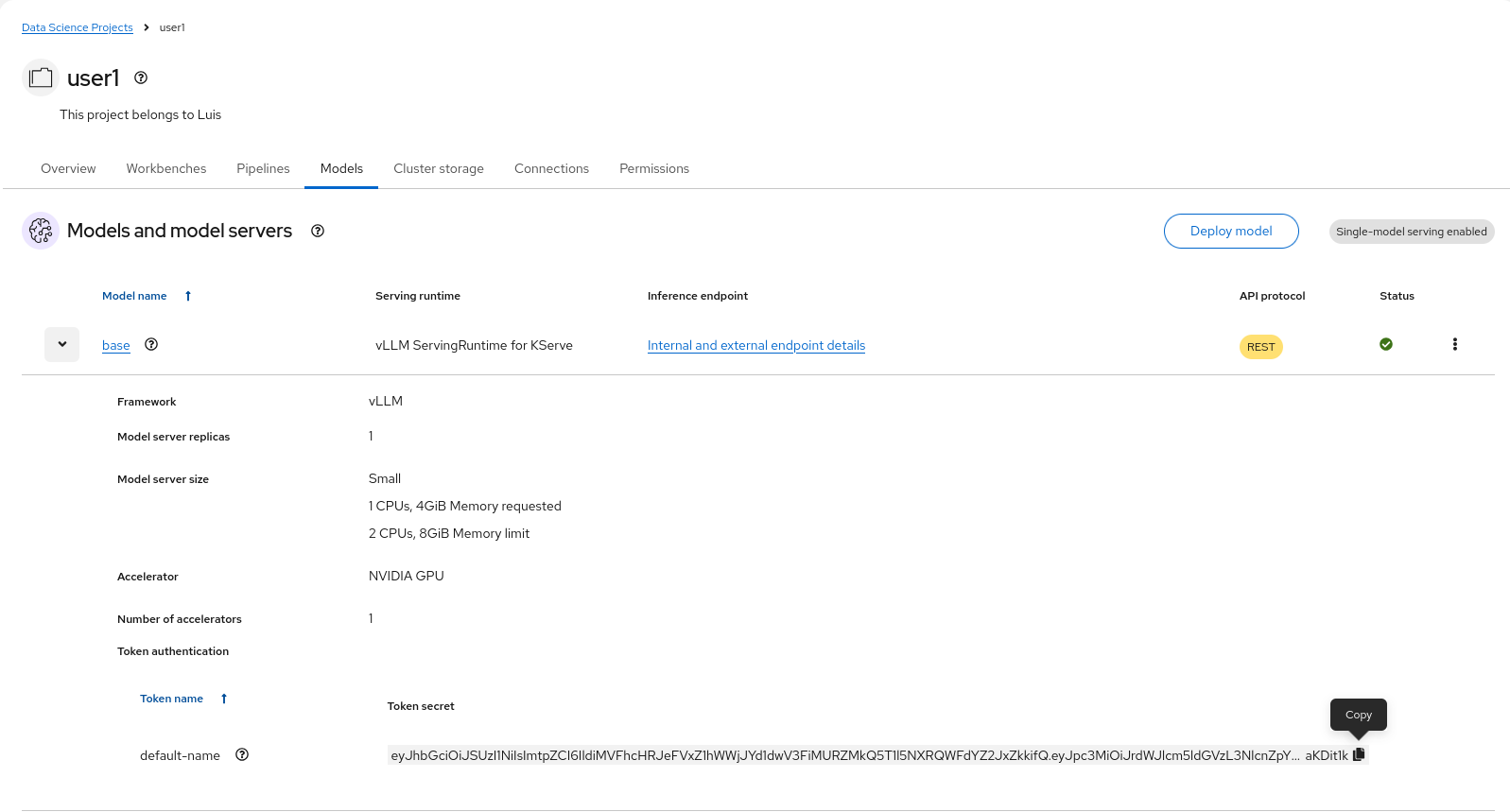

Fill Out the Deployment Form: You’ll need to provide some essential information. Here’s what to enter:

- Name: base
- Serving runtime: vLLM ServingRuntime for KServe
- Model server size: Small
- Accelerator: NVIDIA GPU
- Model route: Select the option to make your model available through an external route.
- Token authentication: Choose Require token authentication and leave the default Service account name.
- Existing connection:

Deploy and Wait: After filling out the form, click Deploy, then wait while your model is being deployed. This might take a while! ☕
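You can also check readiness from code instead of watching the dashboard. The sketch below polls the model until it answers.

It rests on three assumptions:
- vLLM's OpenAI-compatible API is reachable under `/v1` at your model's external endpoint.
- `GET /v1/models` succeeds once the model is loaded.
- The route accepts the model server token as an `Authorization: Bearer` header.

`models_endpoint_ok` and `wait_until_ready` are hypothetical helpers, not part of the lab materials.

```python
import time
import urllib.request
from typing import Callable


def models_endpoint_ok(url: str, token: str, timeout: float = 10.0) -> bool:
    """Return True if GET <url>/v1/models answers successfully.

    Assumptions (not from the lab): vLLM's OpenAI-compatible API is served
    under /v1 on the external route, and the token is sent as a Bearer header.
    """
    req = urllib.request.Request(
        f"{url.rstrip('/')}/v1/models",
        headers={"Authorization": f"Bearer {token}"},
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError (e.g. 503 while the model loads) and socket
        # timeouts are all subclasses of OSError.
        return False


def wait_until_ready(probe: Callable[[], bool], timeout_s: float = 600.0,
                     interval_s: float = 10.0) -> bool:
    """Call `probe` until it returns True or `timeout_s` seconds have passed."""
    deadline = time.monotonic() + timeout_s
    while True:
        if probe():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, `wait_until_ready(lambda: models_endpoint_ok("https://<your-external-endpoint>", "<your-token>"))` checks every 10 seconds for up to 10 minutes. The probe is passed in as a function so the waiting logic stays independent of how "ready" is checked.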

Test the Base Model

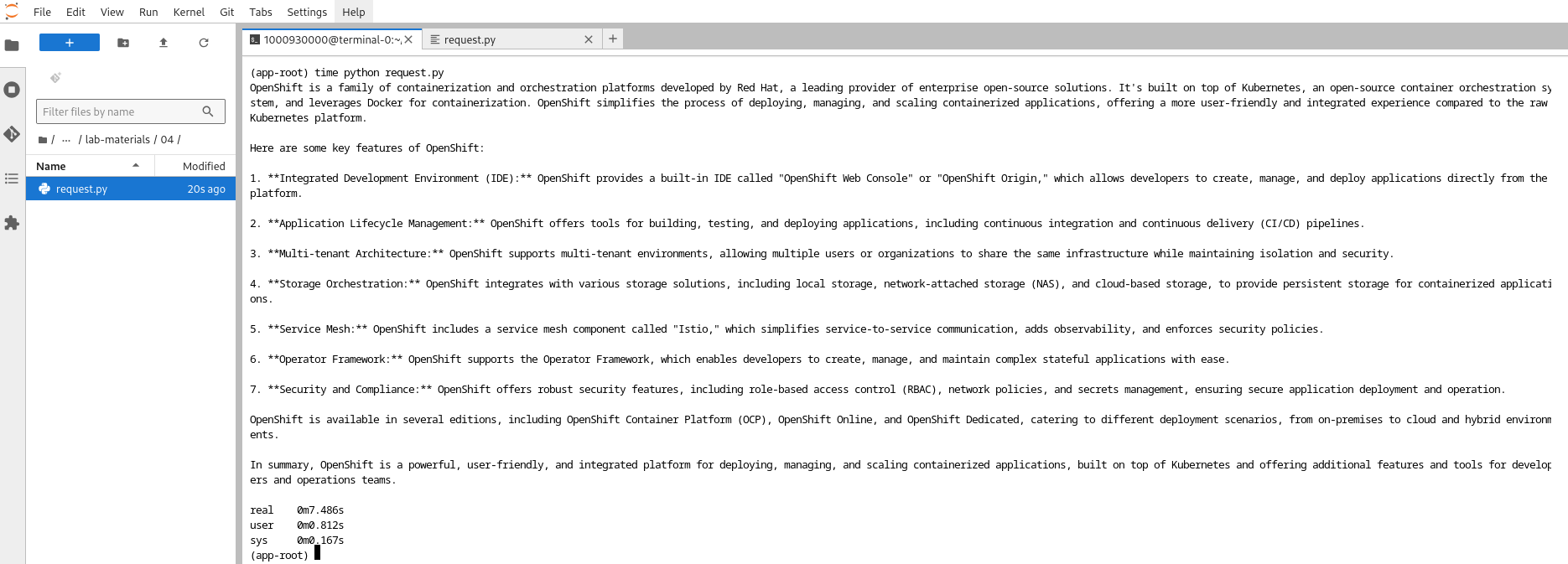

Congratulations on successfully deploying your model! 🎉 Now, it’s time to put it to the test. Get ready to send a request to your model and measure its response time. Let’s dive in!
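Measuring response time boils down to timing a request with a monotonic, high-resolution clock.

The minimal helper below does this. `measure_latency` is a hypothetical name, not part of the lab's `request.py`. It calls any zero-argument function several times and reports the fastest, median, and slowest wall-clock time in seconds.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(send: Callable[[], object], runs: int = 5) -> Dict[str, float]:
    """Call `send` `runs` times and summarize its wall-clock latency in seconds."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        send()  # e.g. one request to the deployed model
        samples.append(time.perf_counter() - start)
    return {
        "min": min(samples),
        "median": statistics.median(samples),
        "max": max(samples),
    }
```

Pass in a function that sends exactly one request to your model. Repeating the call and looking at the median, rather than a single measurement, makes the result less sensitive to one-off network delays.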

Workbench Setup

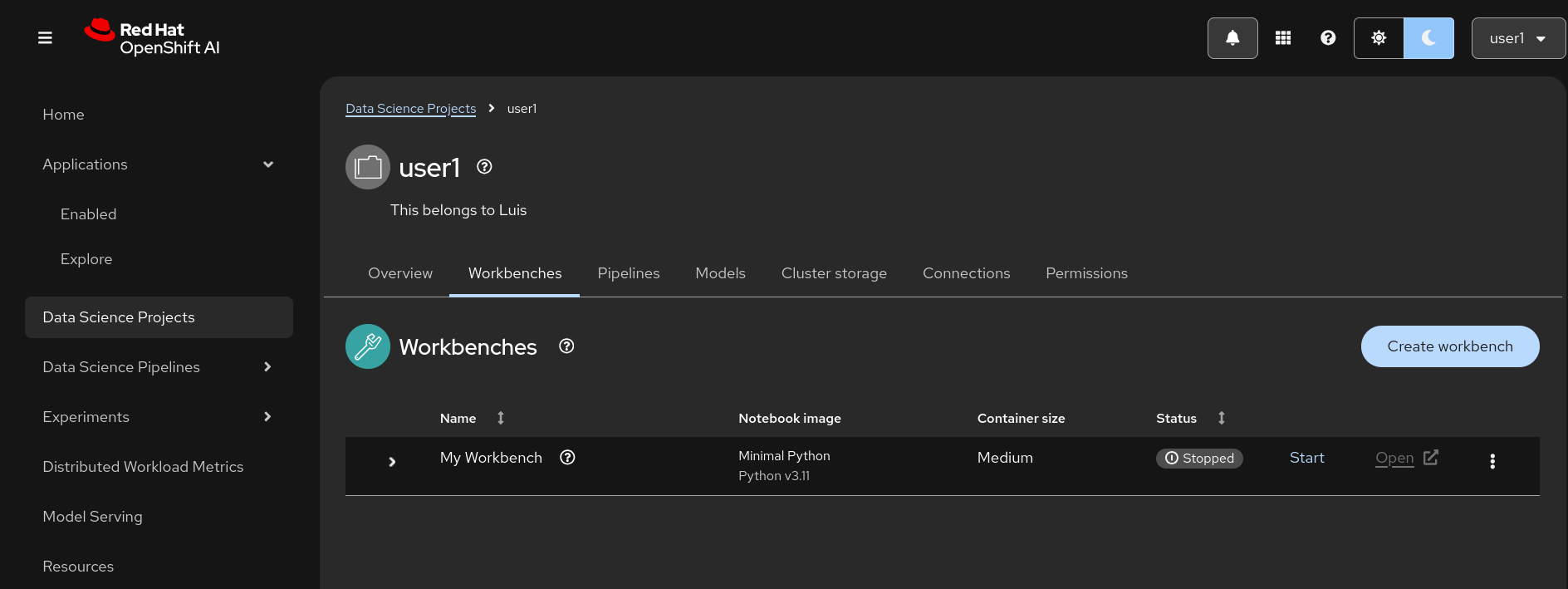

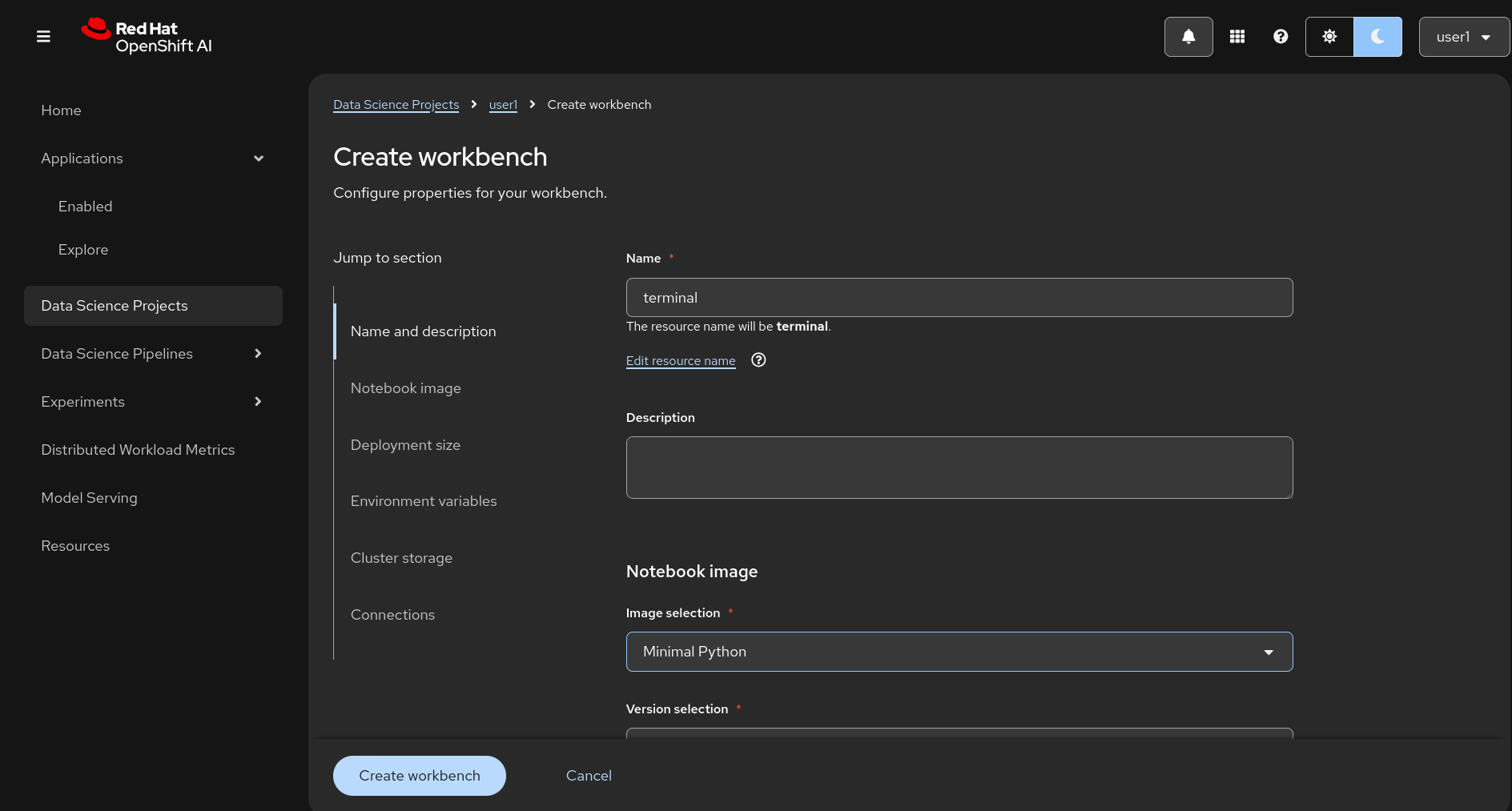

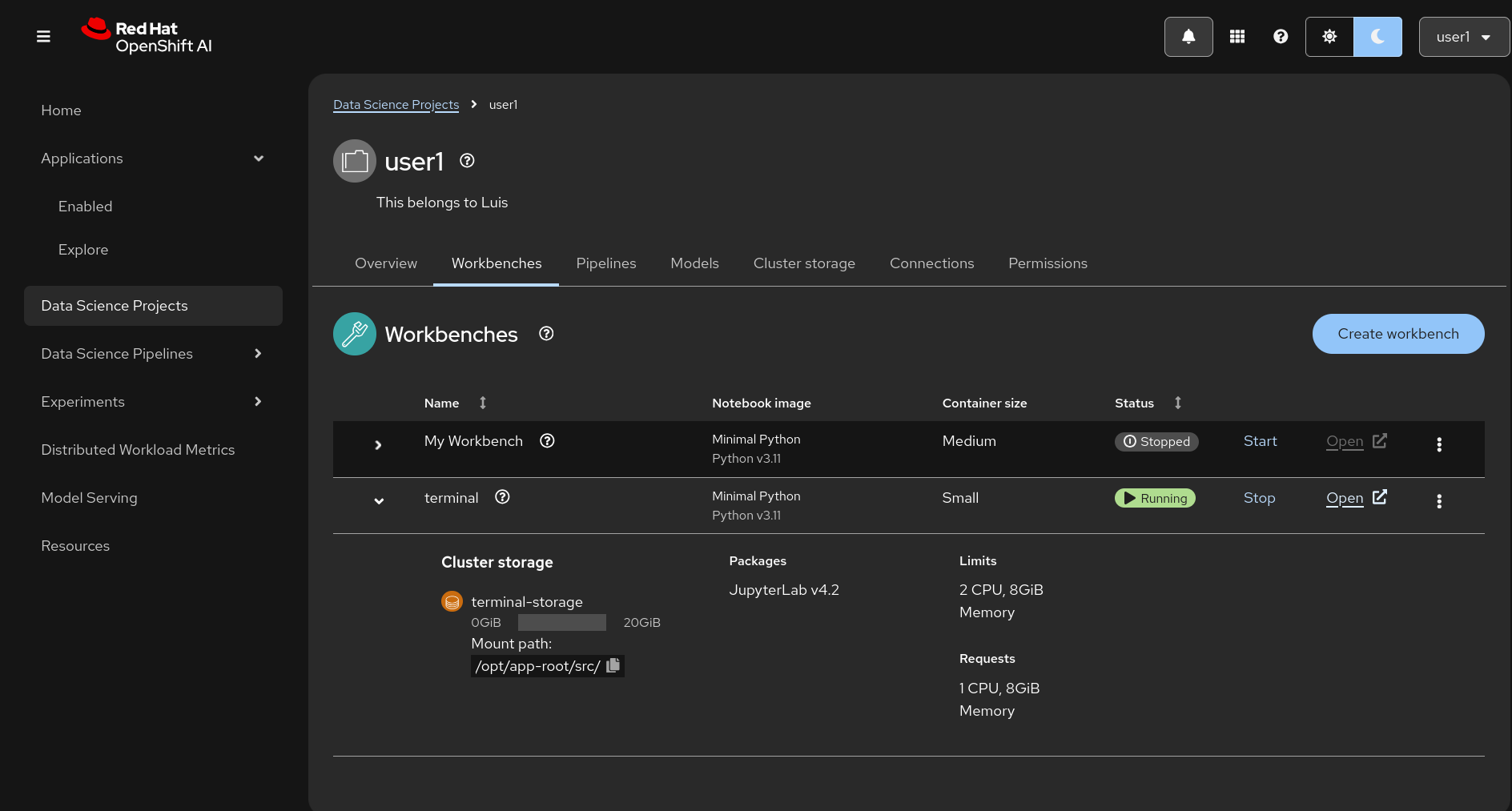

As in Section 2.3, go to Data Science Projects, select your previously created project (userX), and:


- Click Create a workbench to create a new workbench named terminal. This time, do not attach a GPU; the Small size should be enough.

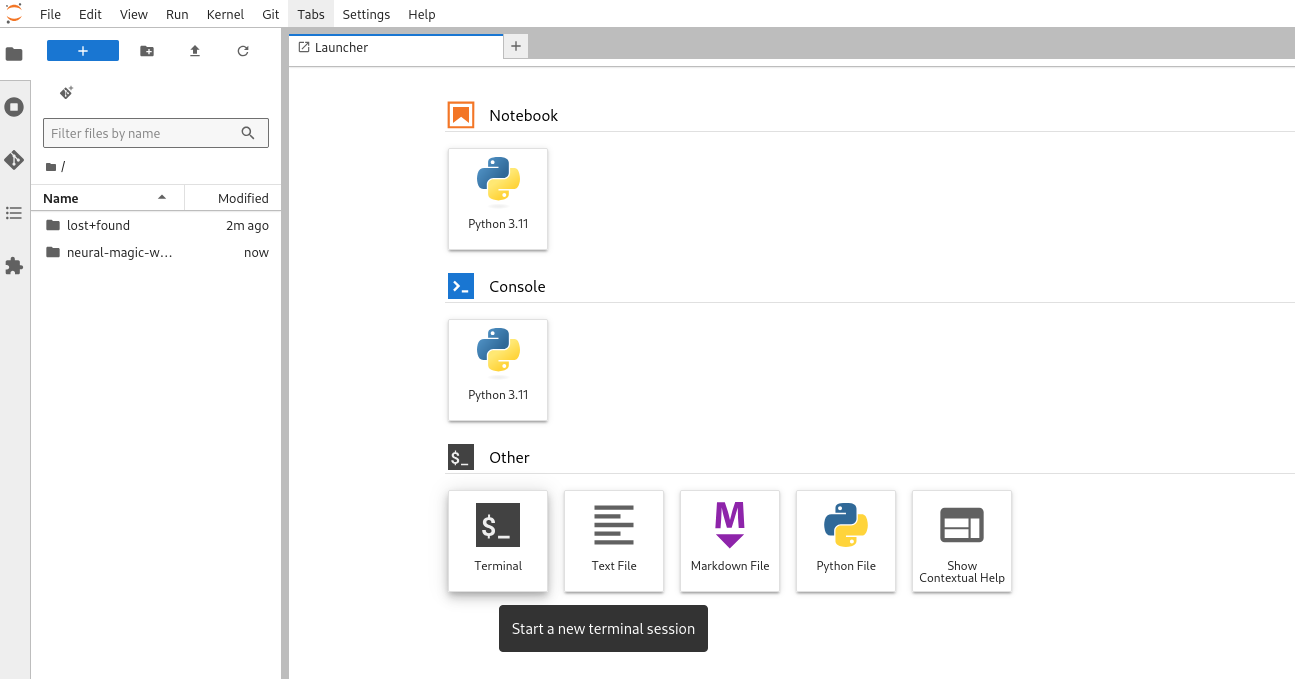

- Open the terminal workbench and create a terminal inside it.

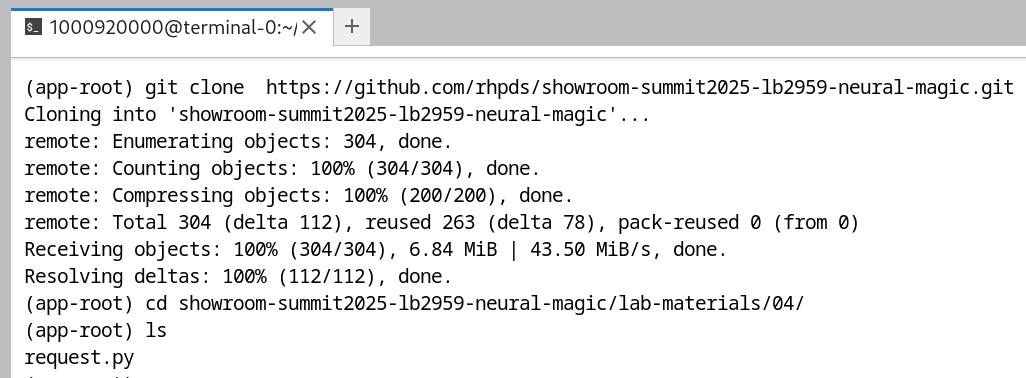

- Clone the repository https://github.com/rhpds/showroom-summit2025-lb2959-neural-magic.git and go to the showroom-summit2025-lb2959-neural-magic/lab-materials/04 folder.

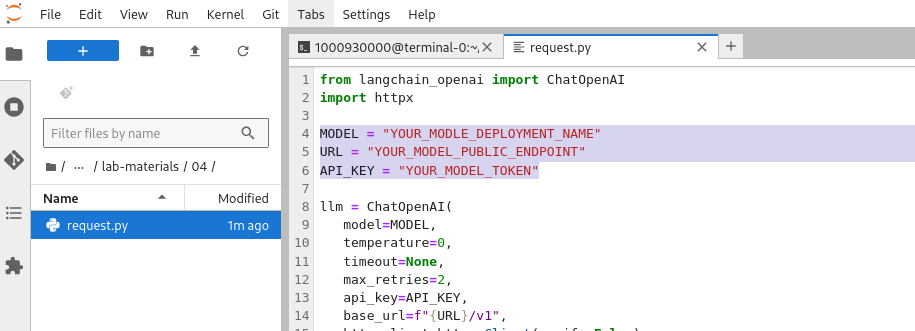

Update Your Variables: Open the request.py file and update the following variables to match your setup:

```python
MODEL = "your-model-name"  # Replace with your model name
URL = "your-api-url"  # Replace with your API endpoint
API_KEY = "your-api-key"  # Replace with your API key
```
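To show what these three values are used for, here is a minimal sketch that builds, but does not send, the HTTP request a client would make.

It assumes two things:
- vLLM's OpenAI-compatible chat endpoint is served at `<URL>/v1/chat/completions`.
- The token is passed in an `Authorization: Bearer` header.

`build_chat_request` is a hypothetical helper, and the lab's `request.py` may build its request differently.

```python
import json
import urllib.request


def build_chat_request(model: str, url: str, api_key: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-compatible chat endpoint.

    Assumptions: the API lives under /v1 on the external route, and the
    model server token is accepted as a Bearer token.
    """
    body = {
        "model": model,  # MODEL: the deployment name, e.g. "base"
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{url.rstrip('/')}/v1/chat/completions",  # URL: external endpoint
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # API_KEY: the token
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To actually send the request, pass the result to `urllib.request.urlopen`. In the OpenAI response format, the reply text sits in `choices[0].message.content` of the returned JSON.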

To fill in these variables, use the information from your deployed model:


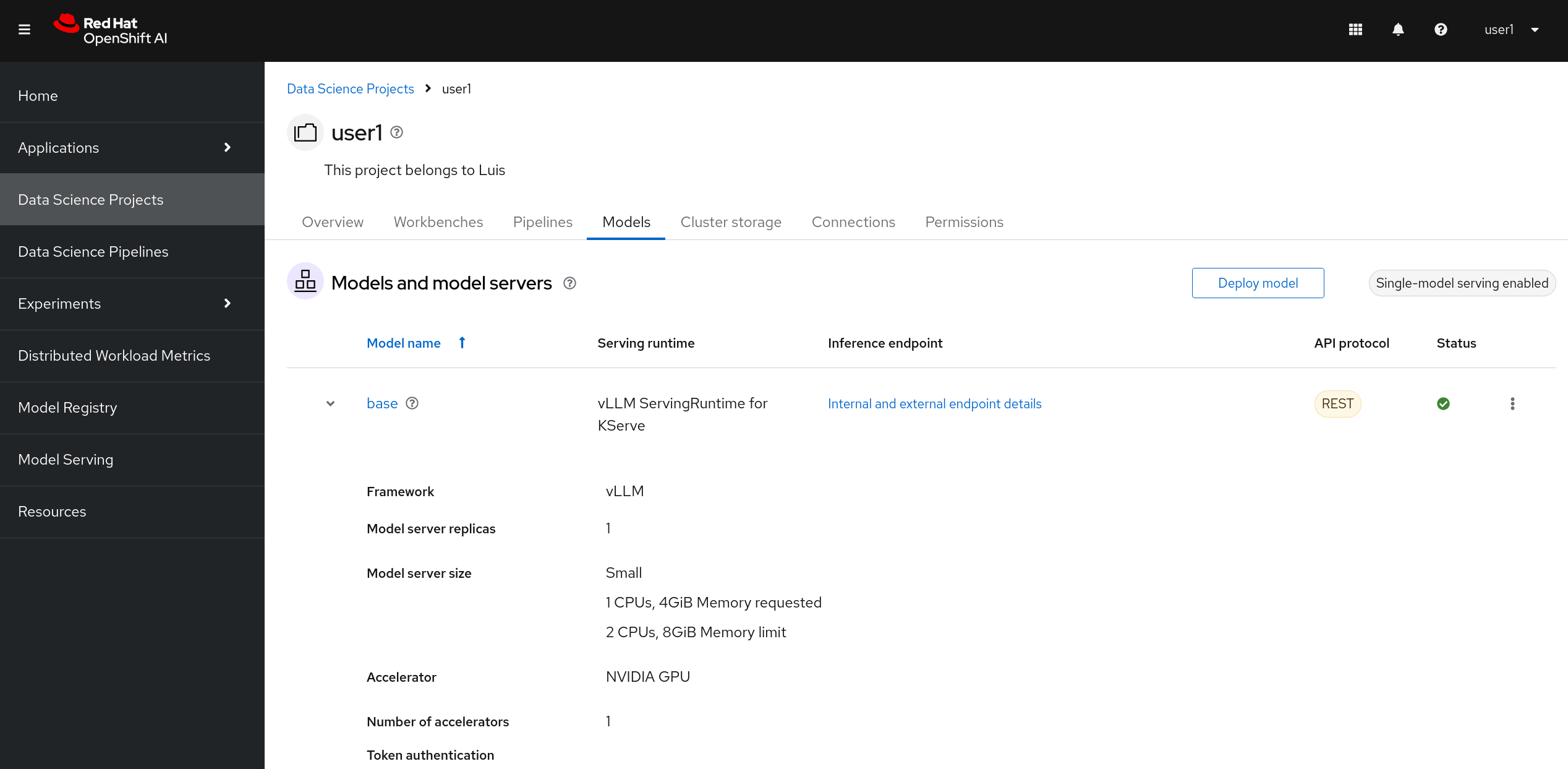

- Set MODEL to the name of your model (base).

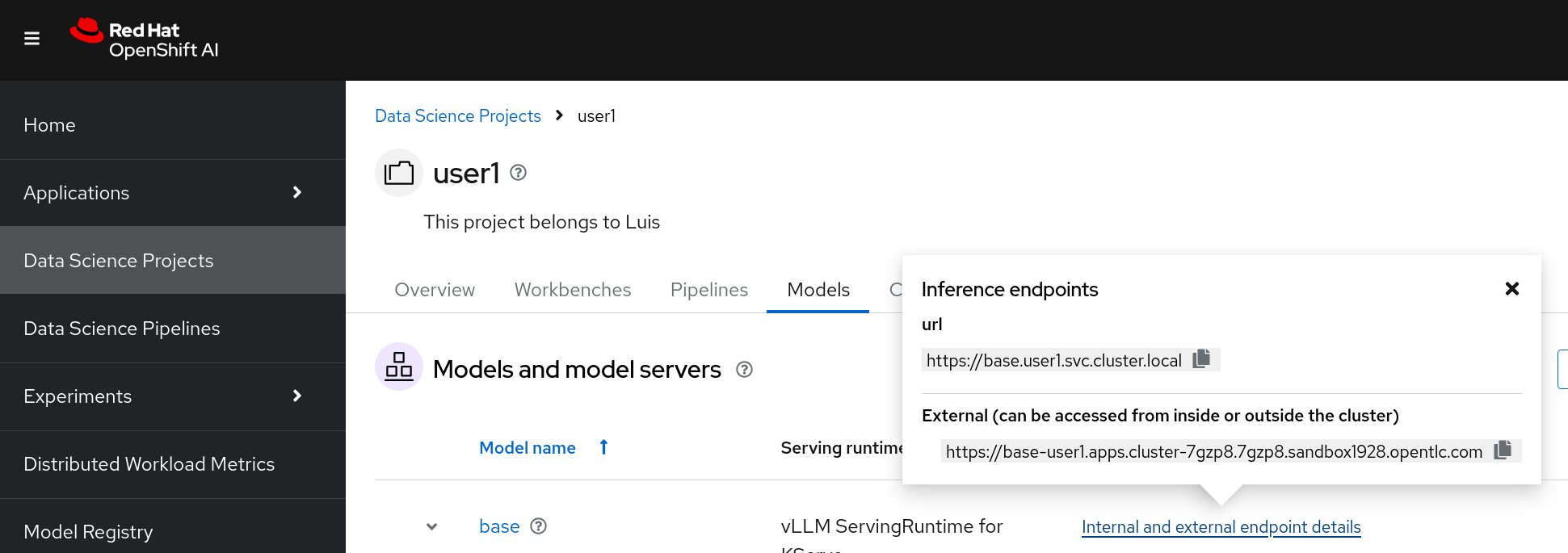

- For the URL, check the internal and external endpoint details of your deployed model and use the external endpoint.

- Copy the model server token and use it as the API_KEY.
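Before running anything, a quick sanity check can catch a forgotten placeholder or an internal endpoint pasted by mistake.

This is a hypothetical helper built on two assumptions you should adjust if your cluster differs:
- The external route uses `https://`.
- Internal endpoints contain `svc.cluster.local` in their host name.

```python
from typing import List
from urllib.parse import urlparse

# Placeholder values shipped in request.py.
PLACEHOLDERS = {"your-model-name", "your-api-url", "your-api-key"}


def check_settings(model: str, url: str, api_key: str) -> List[str]:
    """Return a list of likely problems with the three settings (empty if none)."""
    problems: List[str] = []
    for name, value in (("MODEL", model), ("URL", url), ("API_KEY", api_key)):
        if not value.strip() or value in PLACEHOLDERS:
            problems.append(f"{name} still has its placeholder value")
    parsed = urlparse(url)
    # Assumption: the external route is served over https.
    if parsed.scheme != "https" or not parsed.netloc:
        problems.append("URL should be the external https:// endpoint")
    # Assumption: internal service hostnames end in svc.cluster.local.
    if "svc.cluster.local" in parsed.netloc:
        problems.append("URL looks like the internal endpoint; use the external one")
    return problems
```

Call `check_settings(MODEL, URL, API_KEY)` after editing `request.py`. An empty list means nothing obvious is wrong.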

Install the Required Dependency: Go back to the created terminal and install the necessary package to interact with your model:

```shell
pip install langchain_openai
```
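`langchain_openai` can talk to any OpenAI-compatible server, which is what vLLM exposes. Below is a minimal sketch of pointing its `ChatOpenAI` client at your deployment.

It assumes two things:
- The OpenAI API is served under `<URL>/v1`.
- Your model ships a chat template, so chat requests work.

`build_llm` and `openai_base_url` are hypothetical helpers, not the contents of the lab's `request.py`.

```python
def openai_base_url(url: str) -> str:
    """Append /v1 to the endpoint unless it is already there (assumed API prefix)."""
    url = url.rstrip("/")
    return url if url.endswith("/v1") else f"{url}/v1"


def build_llm(model: str, url: str, api_key: str):
    """Return a LangChain chat client aimed at the vLLM OpenAI-compatible server."""
    # Imported here so this file loads even before the pip install above.
    from langchain_openai import ChatOpenAI

    return ChatOpenAI(
        model=model,                   # e.g. "base"
        base_url=openai_base_url(url),  # external endpoint + /v1
        api_key=api_key,               # the model server token
        temperature=0,                 # deterministic-leaning output for comparisons
    )
```

With the variables from `request.py`, `build_llm(MODEL, URL, API_KEY).invoke("What is vLLM?").content` would return the model's answer as a string.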