Continuous Integration and Pipelines

In this lab you will learn about pipelines and how to configure a pipeline in OpenShift so that it will take care of the application lifecycle.

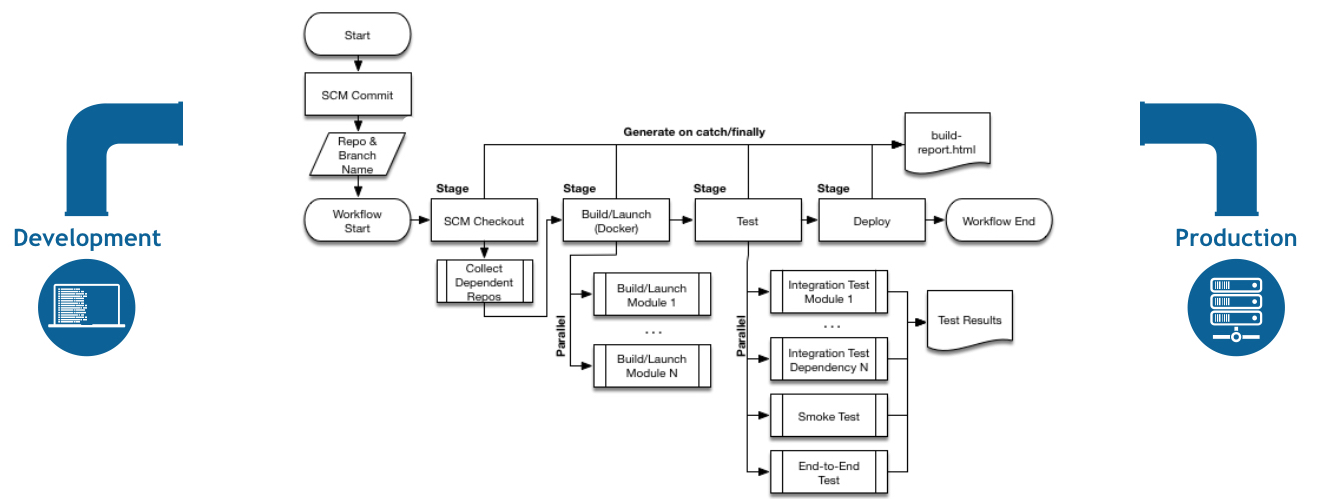

A continuous delivery (CD) pipeline is an automated expression of your process for getting software from version control right through to your users and customers. Every change to your software (committed in source control) goes through a complex process on its way to being released. This process involves building the software in a reliable and repeatable manner, as well as progressing the built software (called a "build") through multiple stages of testing and deployment.

OpenShift Pipelines is a cloud-native continuous integration and delivery (CI/CD) solution for building pipelines with Tekton. Tekton is a flexible, Kubernetes-native, open-source CI/CD framework that automates deployments across multiple platforms (Kubernetes, serverless, VMs, etc.) by abstracting away the underlying details.

Understanding Tekton

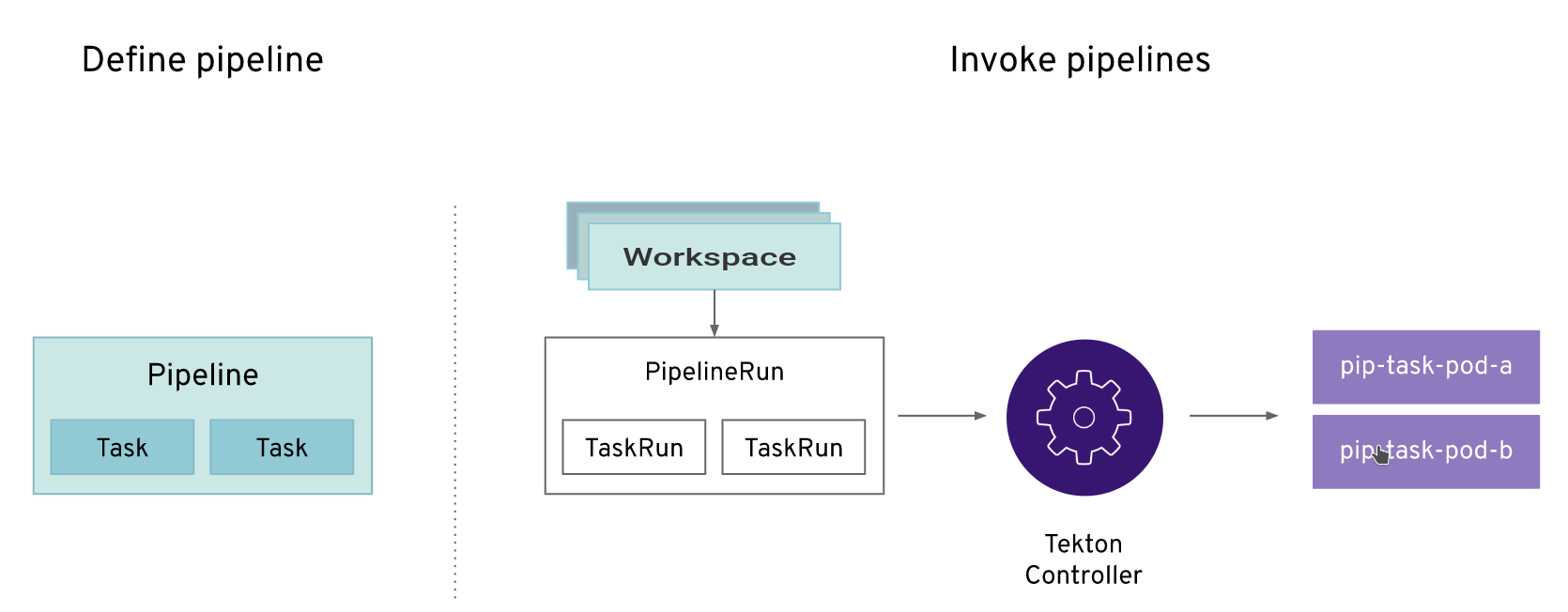

Tekton defines a number of Kubernetes custom resources as building blocks in order to standardize pipeline concepts and provide a terminology that is consistent across CI/CD solutions.

The custom resources needed to define a pipeline are listed below:

- Task: a reusable, loosely coupled set of steps that perform a specific task (e.g. building a container image)
- Pipeline: the definition of the pipeline and the Tasks that it should perform
- TaskRun: the execution and result of running an instance of a Task
- PipelineRun: the execution and result of running an instance of a Pipeline, which includes a number of TaskRuns
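To make the relationship between a Task and a TaskRun more concrete, here is a minimal sketch. The Task name say-hello, the GREETING parameter, and the container image are hypothetical choices for illustration only and are not used elsewhere in this lab.

```yaml
# Hypothetical Task: a single step that prints a greeting.
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: say-hello            # assumed name, not part of this lab
spec:
  params:
    - name: GREETING         # assumed parameter
      type: string
      default: hello
  steps:
    - name: echo
      image: registry.access.redhat.com/ubi9/ubi-minimal   # assumed image
      script: |
        #!/bin/sh
        echo "$(params.GREETING) from Tekton"
---
# A TaskRun executes one instance of the Task above.
# generateName requires "oc create -f" rather than "oc apply -f".
apiVersion: tekton.dev/v1
kind: TaskRun
metadata:
  generateName: say-hello-run-
spec:
  taskRef:
    name: say-hello
  params:
    - name: GREETING
      value: hi
```

A Pipeline references Tasks in the same way a TaskRun does, and a PipelineRun plays the same role for a Pipeline that the TaskRun plays here for the Task.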

In short, in order to create a pipeline, one does the following:

- Create custom or install existing reusable Tasks
- Create a Pipeline and PipelineResources to define your application’s delivery pipeline
- Create a PersistentVolumeClaim to provide the volume/filesystem for pipeline execution or provide a VolumeClaimTemplate which creates a PersistentVolumeClaim
- Create a PipelineRun to instantiate and invoke the pipeline
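As an illustration of the last two steps, the sketch below shows a PipelineRun that asks Tekton to create a PersistentVolumeClaim on the fly through a VolumeClaimTemplate. The Pipeline name example-pipeline and the workspace name shared-data are hypothetical; the 1Gi size and ReadWriteOnce access mode are assumptions chosen for the example.

```yaml
apiVersion: tekton.dev/v1
kind: PipelineRun
metadata:
  generateName: example-pipeline-run-   # assumed prefix; use "oc create -f"
spec:
  pipelineRef:
    name: example-pipeline              # hypothetical Pipeline
  workspaces:
    - name: shared-data                 # hypothetical workspace declared by that Pipeline
      volumeClaimTemplate:              # a new PVC is created for this run
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
```

With a VolumeClaimTemplate each run gets its own volume, whereas referencing an existing PersistentVolumeClaim reuses the same volume across runs.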

For further details on pipeline concepts, refer to the Tekton documentation that provides an excellent guide for understanding various parameters and attributes available for defining pipelines.

Exercise: Create Your Pipeline

Because pipelines can promote applications between the different stages of the delivery cycle, Tekton, the Continuous Integration server that executes our pipelines, is deployed in a project with a Continuous Integration role. Pipelines executed in this project have permission to interact with all the projects that model the different stages of our delivery cycle.

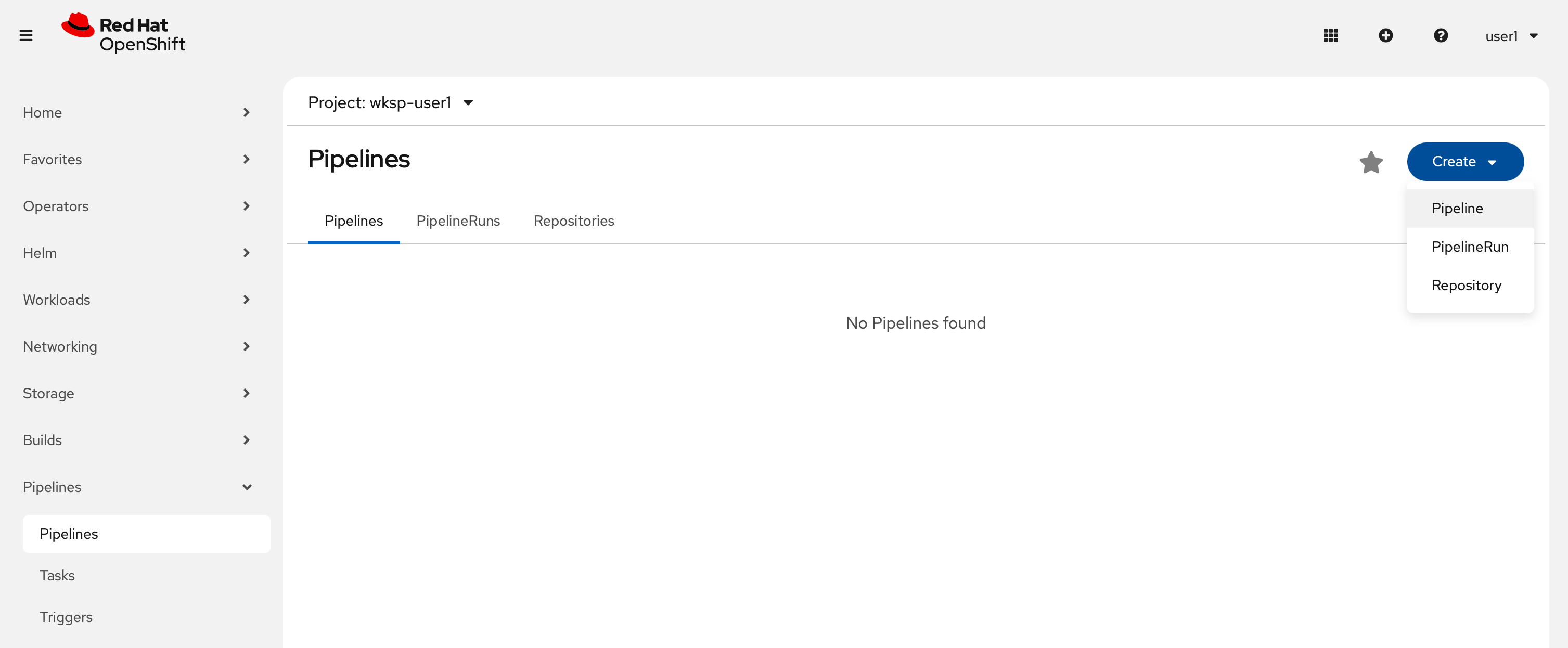

Let’s now create a Tekton pipeline for the Nationalparks backend. In the Developer Perspective, click Pipelines → Pipelines in the left navigation, then click Create → Pipeline.

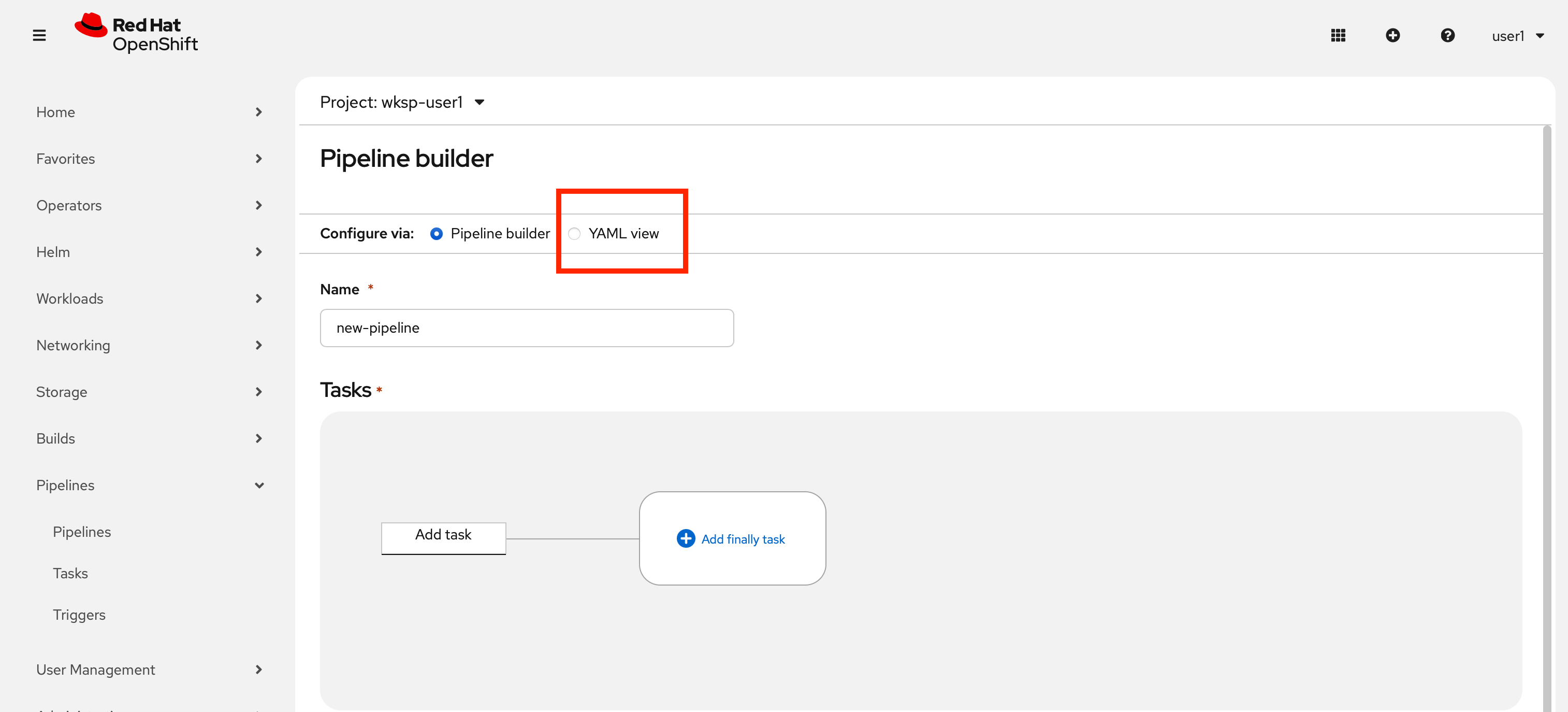

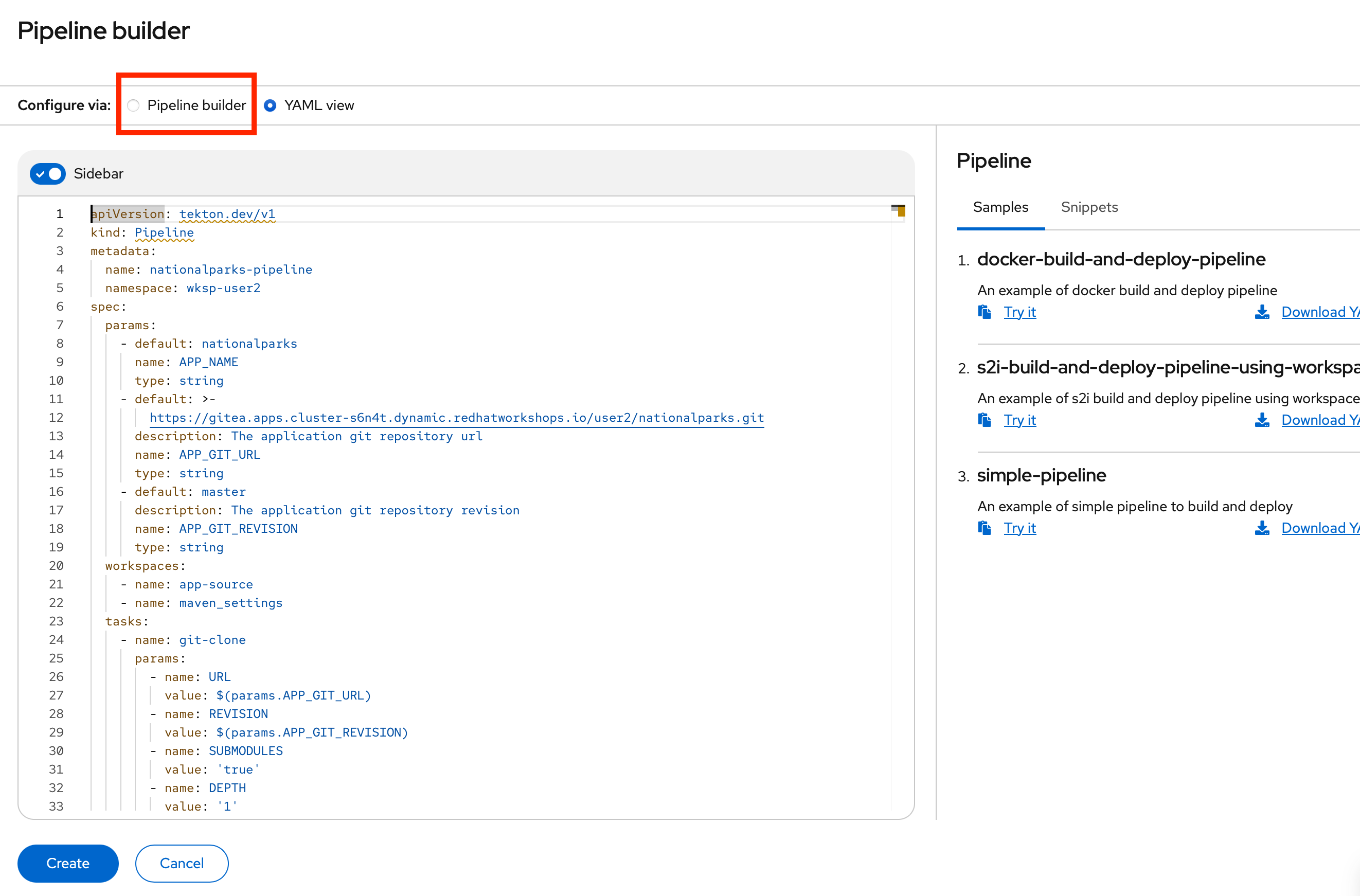

Here we can view the interactive Pipeline builder. We can add tasks to the pipeline by clicking on the Add task button. We can also add parameters to the pipeline by clicking on the created tasks. To save time here, we’ll use the YAML view to create the pipeline.

Now, depending on which language you are using, you’ll need to create the appropriate pipeline:

Java

Copy the following Tekton Pipeline and paste it into the YAML text area. This pipeline clones the source code from our local Git server (Gitea), builds and tests the application, builds a container image, and deploys it on OpenShift.

apiVersion: tekton.dev/v1

kind: Pipeline

metadata:

name: nationalparks-pipeline

spec:

params:

- default: nationalparks

name: APP_NAME

type: string

- default: https://gitea.apps.cluster.example.com/userX/nationalparks.git

description: The application git repository url

name: APP_GIT_URL

type: string

- default: master

description: The application git repository revision

name: APP_GIT_REVISION

type: string

tasks:

- name: git-clone

params:

- name: URL

value: $(params.APP_GIT_URL)

- name: REVISION

value: $(params.APP_GIT_REVISION)

- name: SUBMODULES

value: 'true'

- name: DEPTH

value: '1'

- name: SSL_VERIFY

value: 'true'

- name: DELETE_EXISTING

value: 'true'

- name: VERBOSE

value: 'true'

taskRef:

kind: Task

name: git-clone

workspaces:

- name: output

workspace: app-source

- name: build-and-test

params:

- name: MAVEN_IMAGE

value: maven:3.8.3-openjdk-11

- name: GOALS

value:

- package

- name: PROXY_PROTOCOL

value: http

runAfter:

- git-clone

taskRef:

kind: Task

name: maven

workspaces:

- name: source

workspace: app-source

- name: maven_settings

workspace: maven-settings

- name: build-image

params:

- name: IMAGE

value: image-registry.openshift-image-registry.svc:5000/$(context.pipelineRun.namespace)/$(params.APP_NAME):latest

- name: BUILDER_IMAGE

value: registry.redhat.io/rhel8/buildah:latest

- name: STORAGE_DRIVER

value: vfs

- name: DOCKERFILE

value: ./Dockerfile

- name: CONTEXT

value: .

- name: TLSVERIFY

value: 'true'

- name: FORMAT

value: oci

runAfter:

- build-and-test

taskRef:

kind: Task

name: buildah

workspaces:

- name: source

workspace: app-source

- name: redeploy

params:

- name: SCRIPT

value: oc rollout restart deployment/$(params.APP_NAME)

runAfter:

- build-image

taskRef:

kind: Task

name: openshift-client

workspaces:

- name: app-source

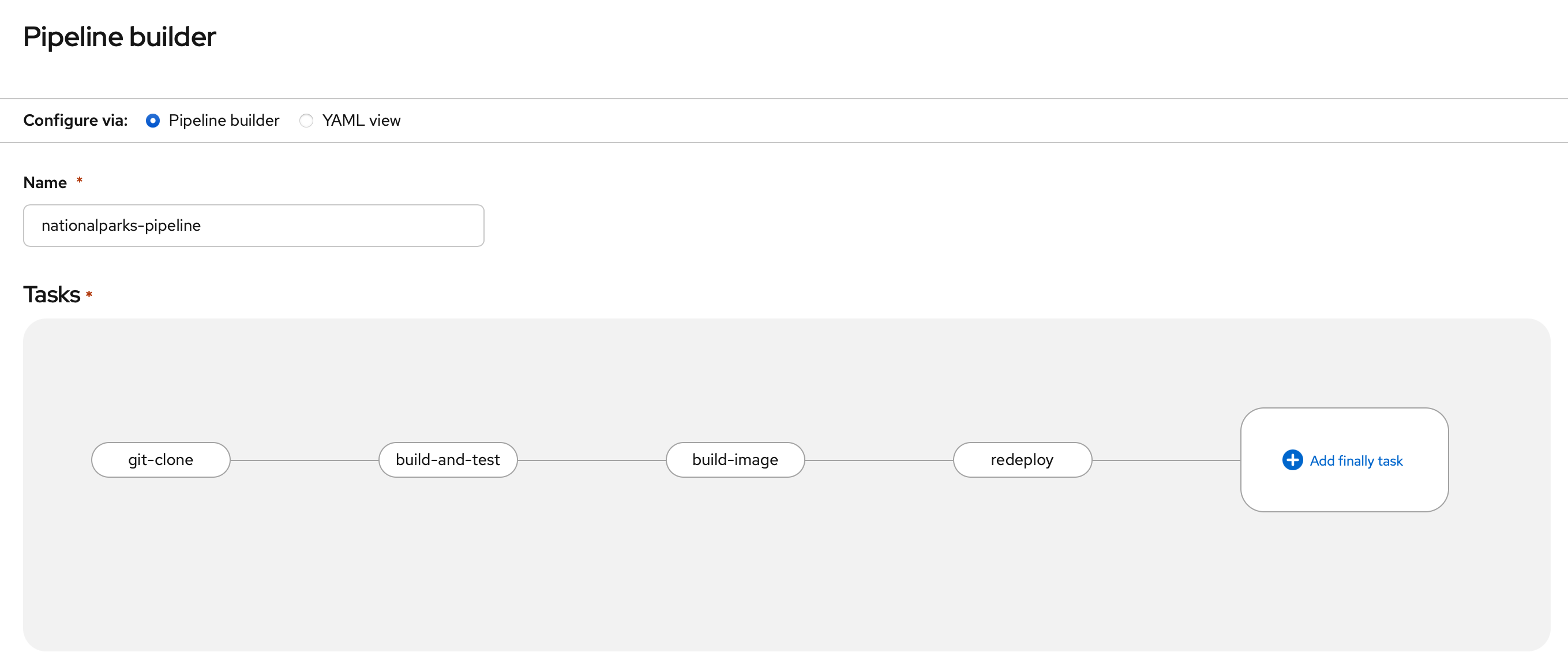

- name: maven-settingsNow, let’s head back to the Pipeline builder view to see it visually.

This pipeline has 4 Tasks defined:

- git-clone: a Task that clones our source repository for nationalparks and stores it in the Workspace app-source, which is backed by the PVC app-source-pvc that we create in the next exercise
- build-and-test: builds and tests our Java application using the maven Task
- build-image: a buildah Task that builds an image using a binary file as input in OpenShift, in our case the JAR artifact generated in the previous task
- redeploy: uses the openshift-client Task to deploy the created image on OpenShift using the Deployment named nationalparks that we created in the previous lab

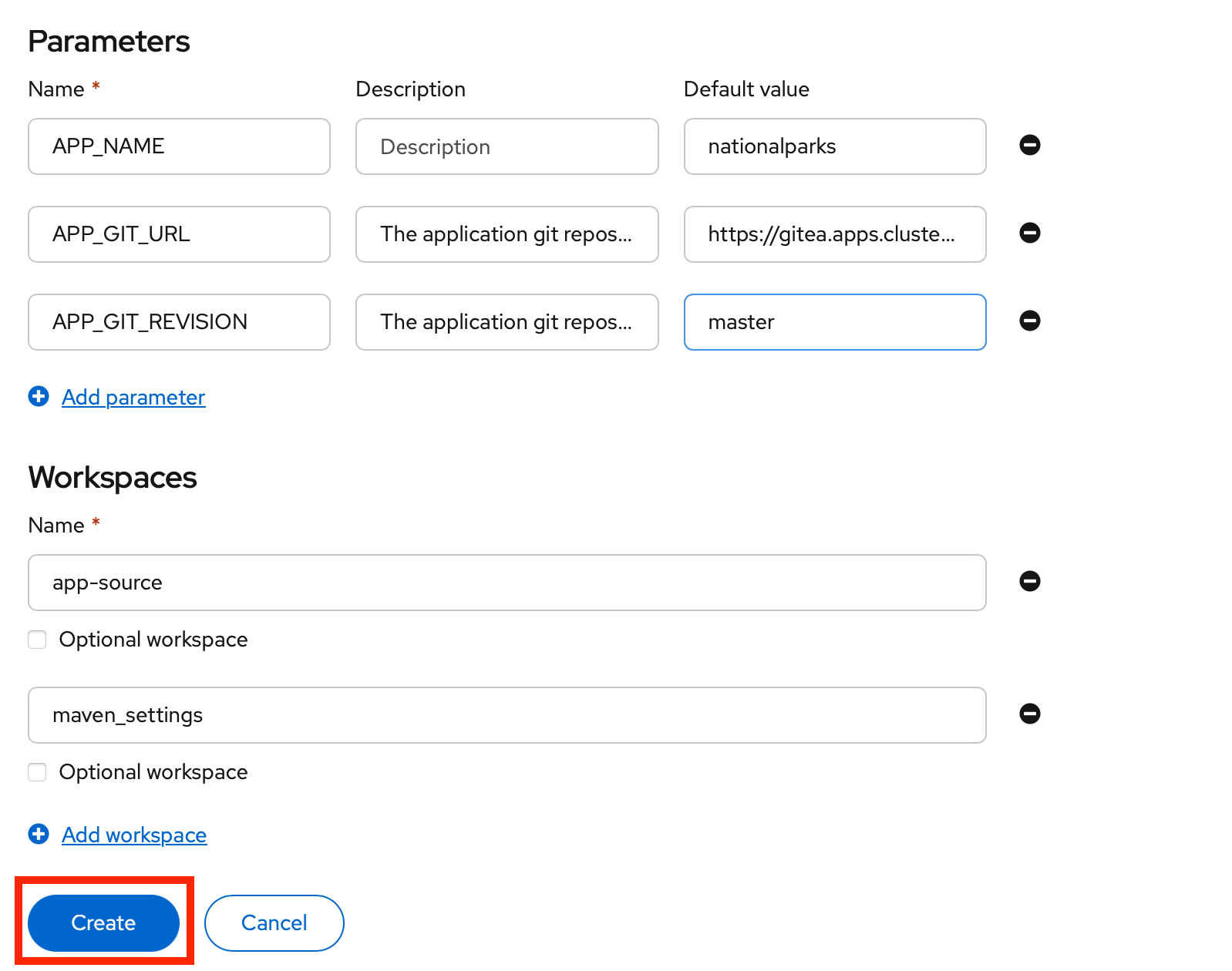

The Pipeline is parametric, with default values already preconfigured.

It is using two Workspaces:

- app-source: linked to the PersistentVolumeClaim app-source-pvc that we will create next. It stores the source code and artifacts shared between the different Tasks
- maven-settings: an EmptyDir volume for the maven cache; this can also be replaced with a PVC to make subsequent Maven builds faster

Finally, make sure to click the Create button to create the Pipeline.

Exercise: Add Storage for your Pipeline

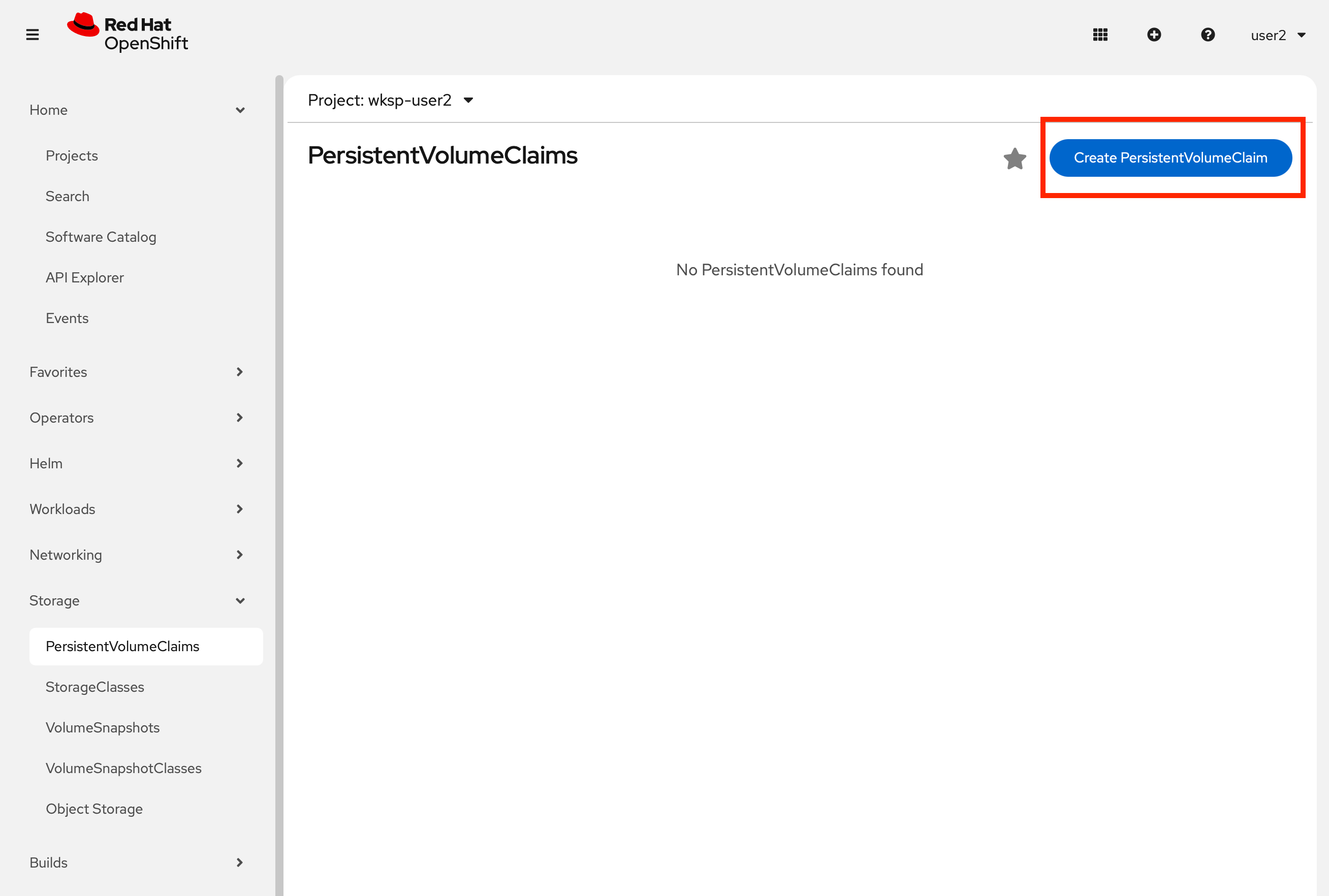

OpenShift manages storage with Persistent Volumes, which are attached to the Pods running our applications through Persistent Volume Claim requests, and it lets you manage them easily from the Web Console. From the left menu, go to Storage → Persistent Volume Claims.

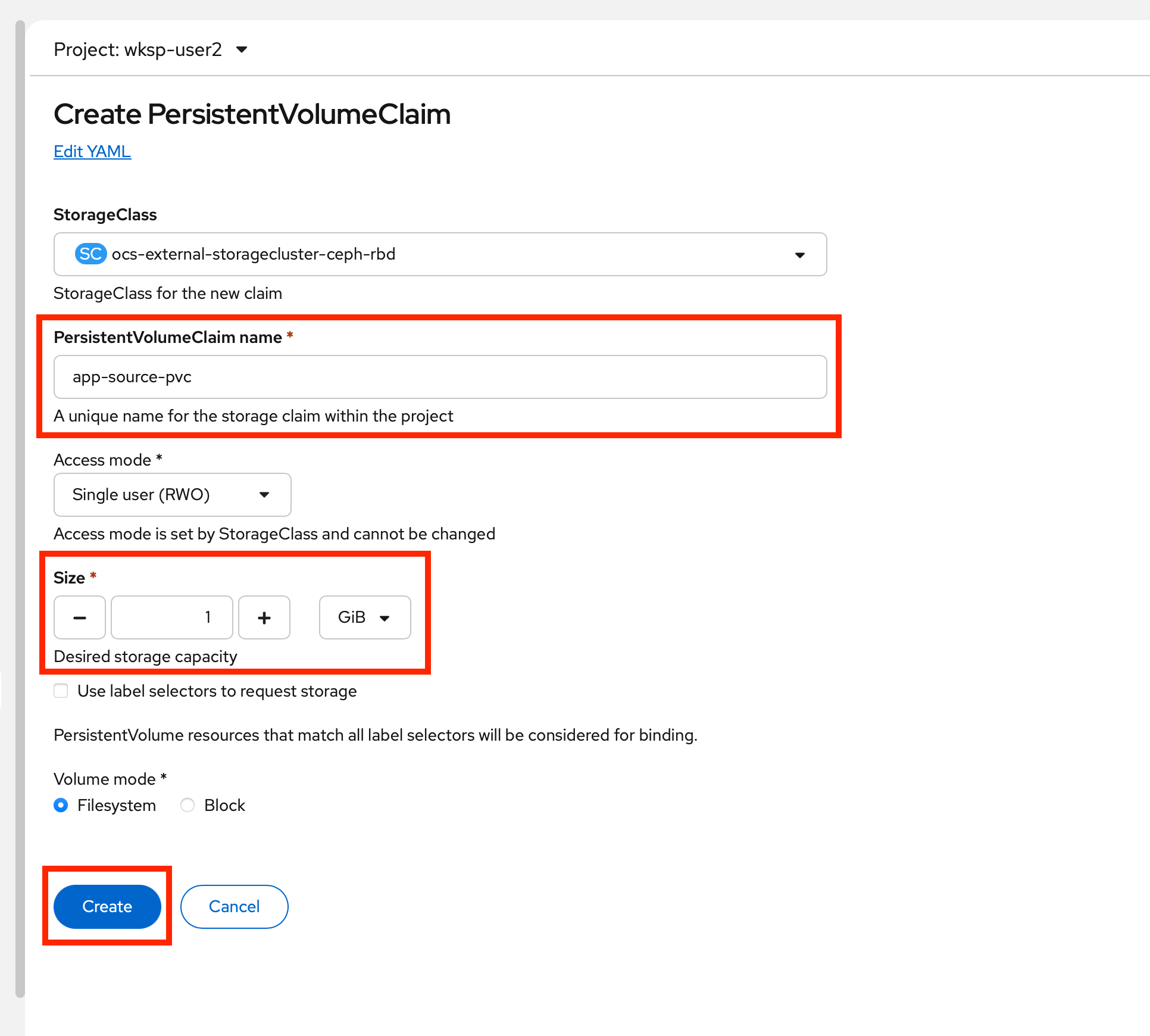

In the top-right corner, click the Create Persistent Volume Claim button.

In the Persistent Volume Claim name field, enter app-source-pvc.

In the Size section, enter 1, since we are going to create a 1 GiB Persistent Volume for our Pipeline with the Single User (RWO) access mode.

Leave all other default settings, and click Create.

Note: The Storage Class is the type of storage available in the cluster.
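For reference, the console form above corresponds roughly to the following PersistentVolumeClaim manifest. This is a sketch that assumes the cluster's default Storage Class is used, which is why storageClassName is left out.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-source-pvc
spec:
  accessModes:
    - ReadWriteOnce        # "Single User (RWO)" in the Web Console
  resources:
    requests:
      storage: 1Gi
  # storageClassName is omitted on purpose (assumption): the cluster default is used
```

If you prefer the command line, a manifest like this can be saved to a file and created with oc apply -f in the project where the Pipeline lives.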

Run the Pipeline

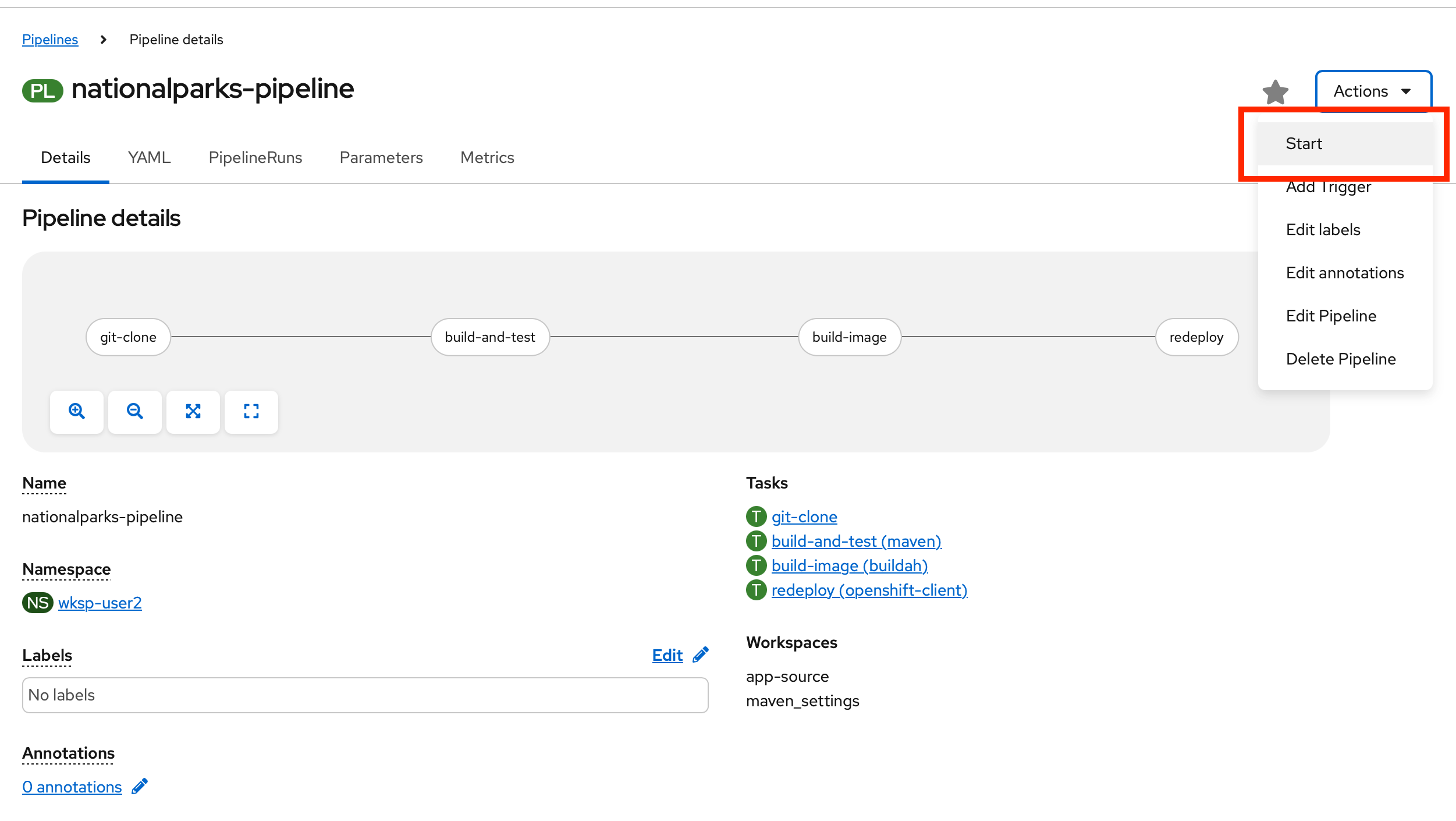

We can now start the Pipeline from the Web Console. In the left-side menu, click Pipelines → Pipelines, then click nationalparks-pipeline. From the Actions list in the top-right corner, click Start.

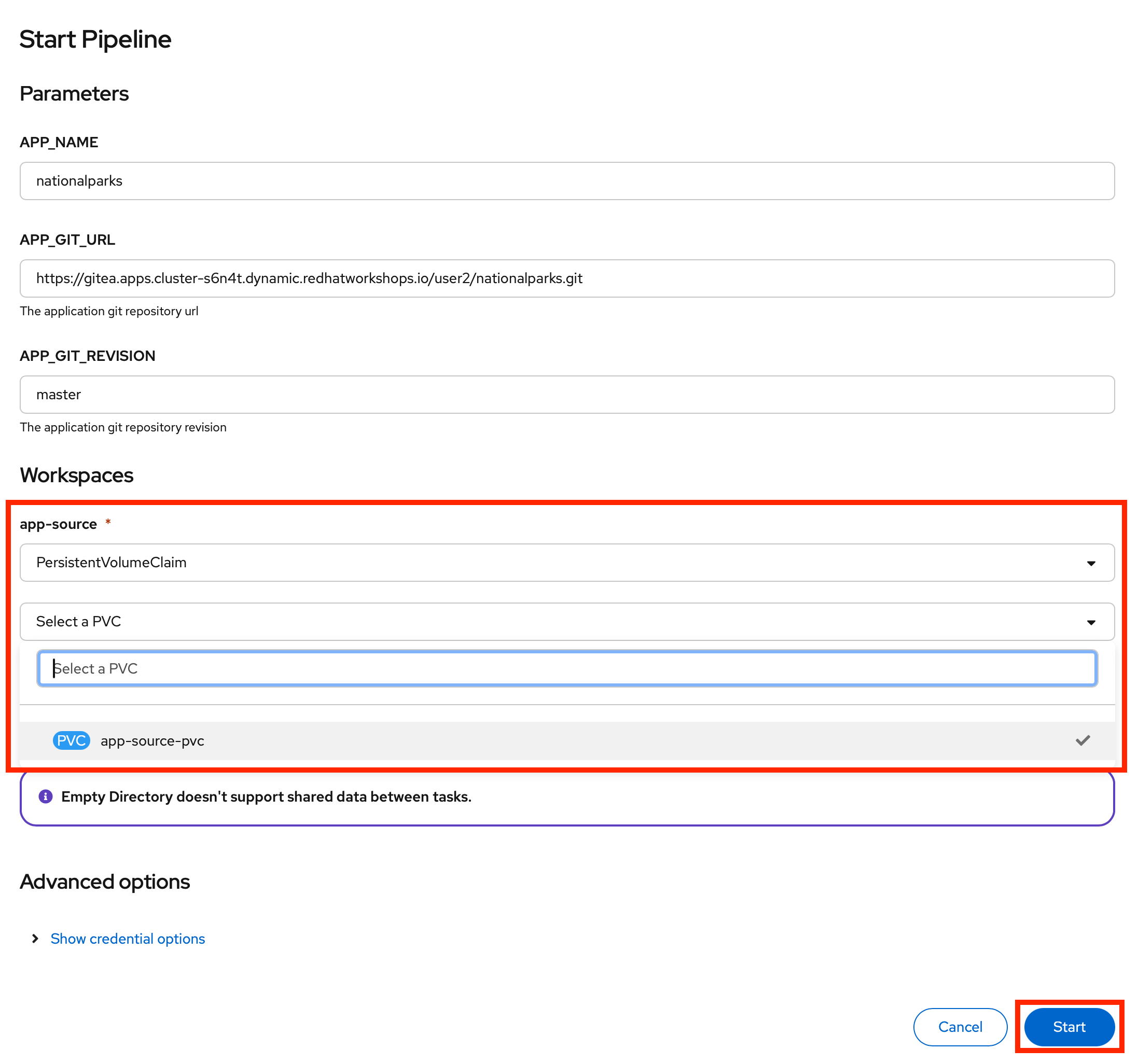

You will be prompted for the Pipeline parameters, which are pre-filled with their default values. The appropriate values for your environment are already preconfigured.

In Workspaces → app-source, select PersistentVolumeClaim from the list, and then select app-source-pvc. This is the shared volume used by the Tasks in your Pipeline; it contains the source code and compiled artifacts.

In Workspaces → maven-settings, leave it as Empty Directory.

Click on Start to run your Pipeline.
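The same run can also be expressed declaratively. The following is a sketch of a PipelineRun that is roughly equivalent to the form you just submitted, using the Pipeline, workspace, and PVC names from this lab; the generateName prefix is a hypothetical choice, and the explicit parameter simply repeats the Pipeline default.

```yaml
apiVersion: tekton.dev/v1
kind: PipelineRun
metadata:
  generateName: nationalparks-pipeline-run-   # assumed prefix; use "oc create -f"
spec:
  pipelineRef:
    name: nationalparks-pipeline
  params:
    # Optional: parameters left out fall back to the defaults in the Pipeline.
    - name: APP_GIT_REVISION
      value: master
  workspaces:
    - name: app-source
      persistentVolumeClaim:
        claimName: app-source-pvc   # the PVC created in the previous exercise
    - name: maven-settings
      emptyDir: {}                  # "Empty Directory" in the Web Console
```

Each time a manifest like this is created, a new PipelineRun with a generated name appears under the Pipeline Runs tab.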

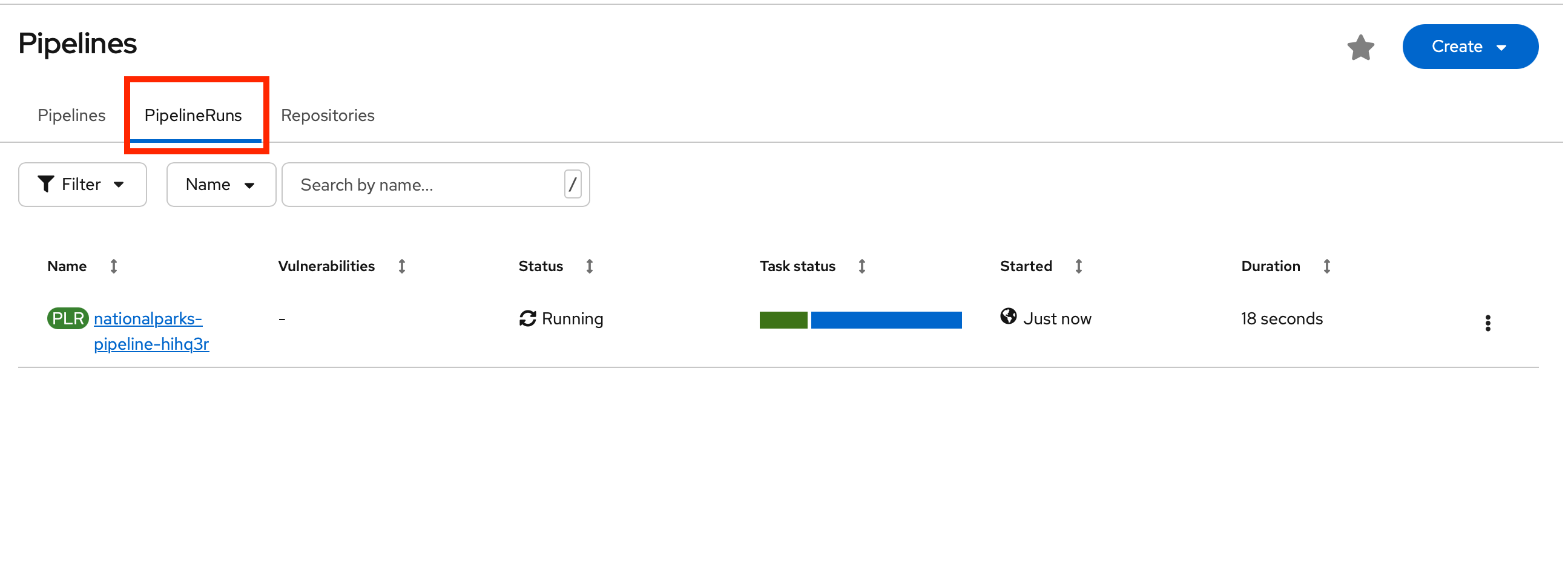

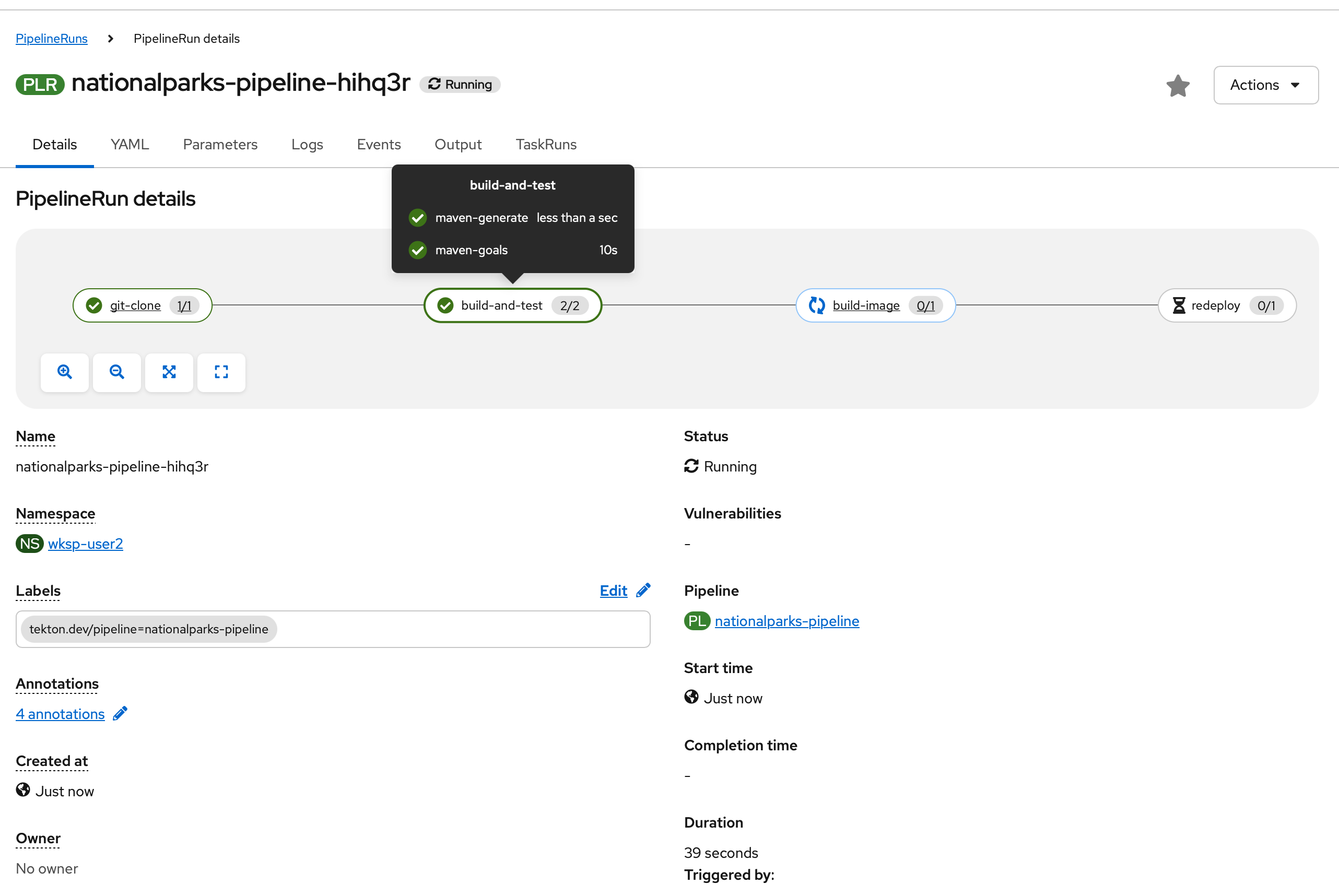

You can easily follow the Pipeline execution from the Web Console. In the left-side menu, click Pipelines → Pipelines, then click nationalparks-pipeline. Switch to the Pipeline Runs tab to watch all the steps in progress:

Then click on the PipelineRun:

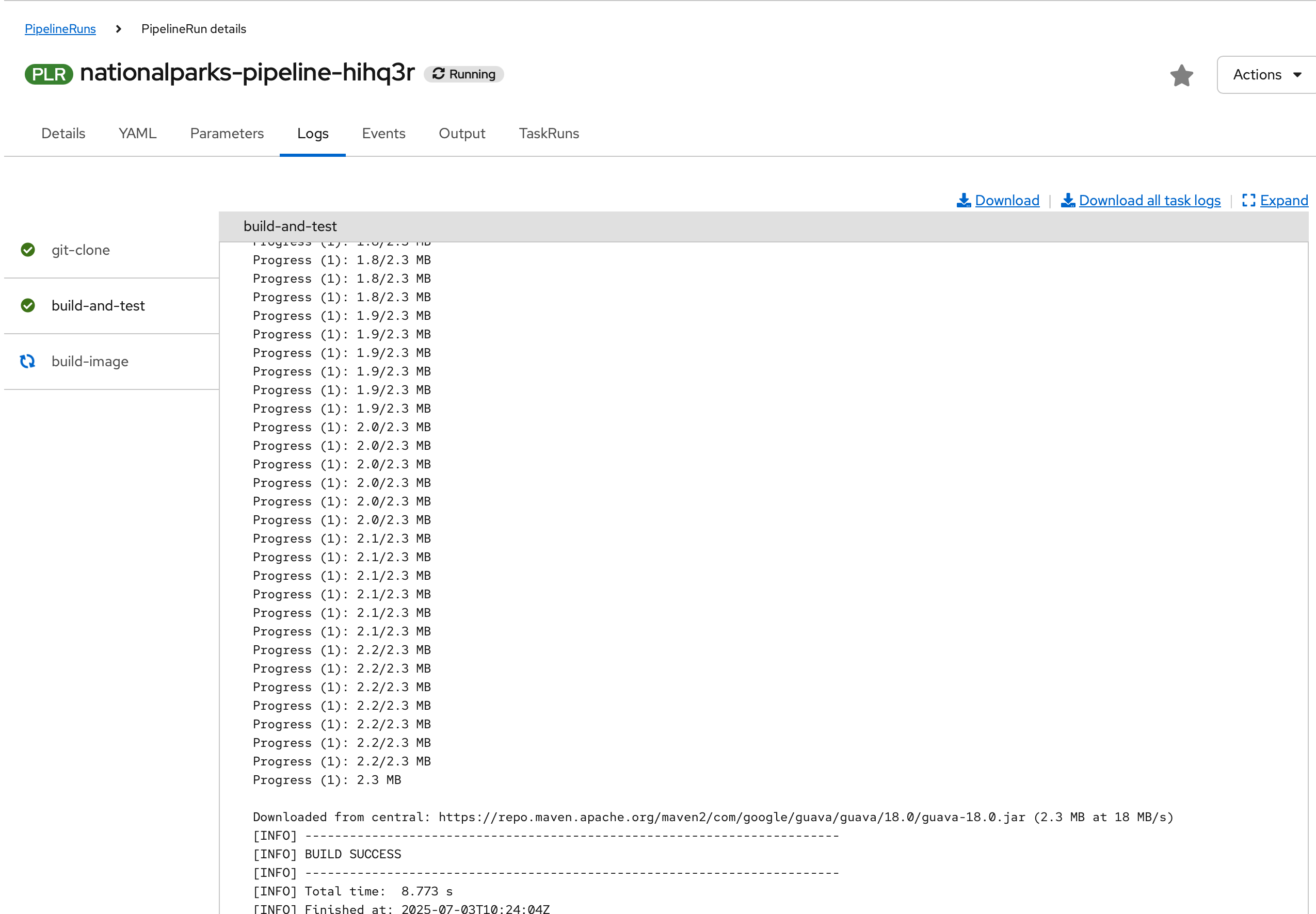

Then click on the running Task to check its logs:

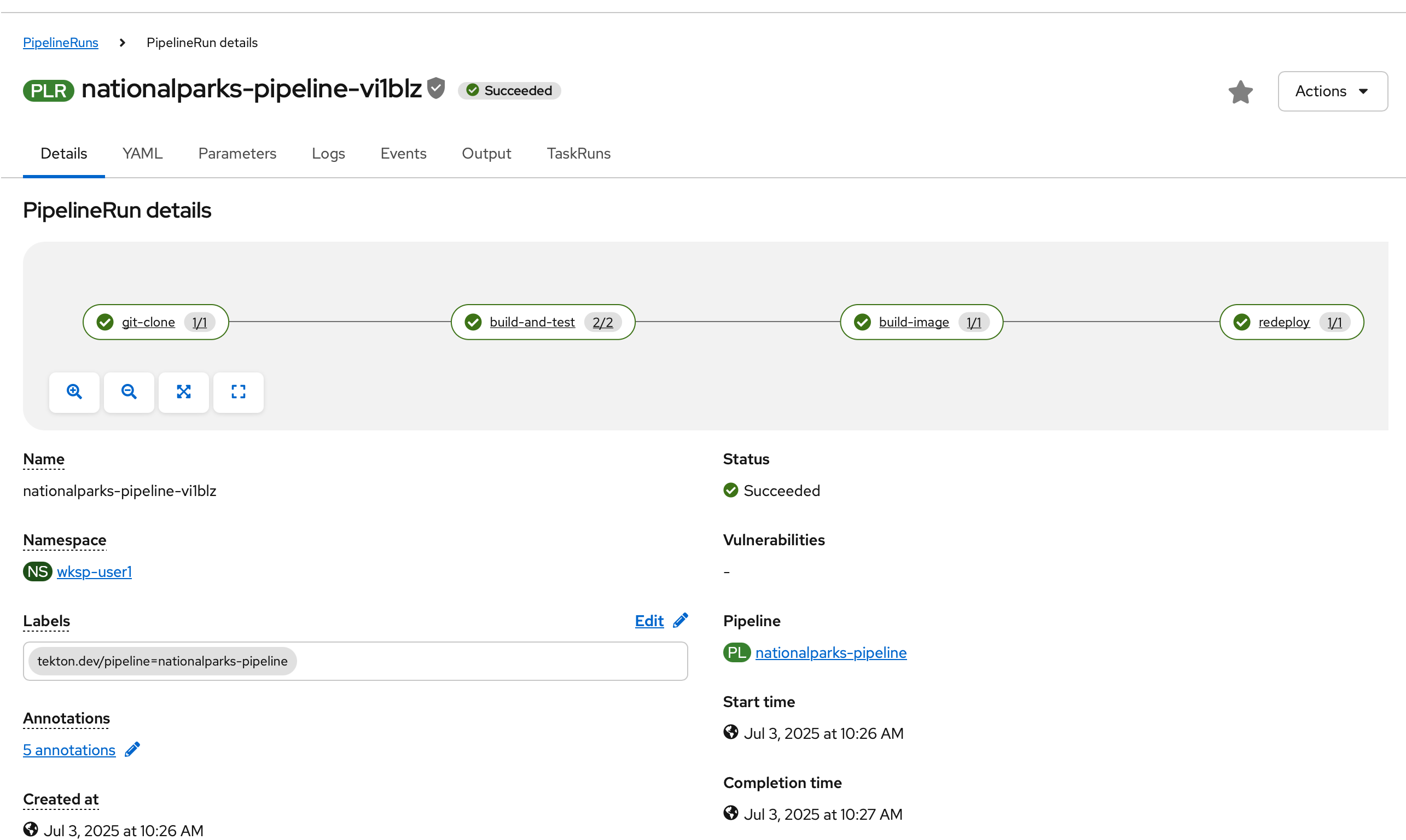

Verify that the PipelineRun has completed successfully: