Scaling Apps

Background: Deployments and ReplicaSets

While Services provide routing and load balancing for Pods, which may go in and out of existence, ReplicaSets (RS) and ReplicationControllers (RC) are used to specify and ensure the desired number of Pods (replicas) are running. For example, if you want your application server to always run 3 Pods (instances), a ReplicaSet is needed. Without an RS, any Pods that are killed or exit are not automatically restarted. ReplicaSets and ReplicationControllers provide self-healing in OpenShift. Deployments control ReplicaSets, while ReplicationControllers are controlled by DeploymentConfigs.

From the deployments documentation:

Similar to a replication controller, a ReplicaSet is a native Kubernetes API object that ensures a specified number of pod replicas are running at any given time. The difference between a replica set and a replication controller is that a replica set supports set-based selector requirements whereas a replication controller only supports equality-based selector requirements.
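The two selector styles in the quoted passage can be tried from the CLI, since the `-l` flag of `oc get` accepts both forms. This is a minimal sketch, assuming your Pods carry an `app=parksmap` label; the `nationalparks` value is a hypothetical second application used only to show the set syntax.

```shell
# Equality-based: the label must have exactly this value
EQUALITY_SELECTOR='app=parksmap'
# Set-based: the label may have any value in the set
# ("nationalparks" is a hypothetical second app, not part of this lab step)
SET_SELECTOR='app in (parksmap,nationalparks)'

# Only query the cluster when the oc client is installed
if command -v oc >/dev/null 2>&1; then
  oc get pods -l "$EQUALITY_SELECTOR"
  oc get pods -l "$SET_SELECTOR"
fi
```

A ReplicationController can only express the first form in its selector, while a ReplicaSet can express either.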

In Kubernetes, a Deployment defines how something should be deployed. In almost all cases, you will use Pod, Service, ReplicaSet, and Deployment resources together. OpenShift will create all of them for you when you deploy container images using the web console or CLI.

There are some advanced scenarios where you might want some Pods and a ReplicaSet without a Deployment or a Service. If you are interested in these cases, ask your instructor after the workshop.

Exercise: Exploring Deployment-related Objects

Now that you know the background of ReplicaSets and Deployments, explore how they work together. Take a look at the Deployment that was created when you instructed OpenShift to deploy the parksmap image:

oc get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

parksmap 1/1 1 1 20m

To get more details, look into the ReplicaSet (RS):

oc get rs

NAME DESIRED CURRENT READY AGE

parksmap-65c4f8b676 1 1 1 21m

This output shows that you currently expect one Pod to be deployed (Desired), and you have one Pod actually deployed (Current). By changing the desired number, you can tell OpenShift that you want more or fewer Pods.

| OpenShift’s HorizontalPodAutoscaler (HPA) can monitor the CPU and memory usage of a set of Pods and update the replica count of the resource that owns them (a Deployment in this lab), adding instances to keep the application stable under load. You can learn more about Horizontal Pod Autoscalers in the documentation. |
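As a rough illustration of what an autoscaler definition looks like, the sketch below previews one for parksmap without creating it. The thresholds are hypothetical values chosen for the example, and CPU-based autoscaling generally also requires CPU requests on the Pods, which this lab does not set up. It is not part of the exercise.

```shell
# Hypothetical thresholds for illustration; tune them for a real workload
MIN_PODS=1
MAX_PODS=3
CPU_TARGET=80

# Only query the cluster when the oc client is installed
if command -v oc >/dev/null 2>&1; then
  # --dry-run=client prints the HorizontalPodAutoscaler instead of creating it
  oc autoscale deployment/parksmap \
    --min="$MIN_PODS" --max="$MAX_PODS" --cpu-percent="$CPU_TARGET" \
    --dry-run=client -o yaml
fi
```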

Exercise: Scaling the Application

In this exercise, you will scale the parksmap application up to 2 instances. You can do this with the oc scale command or by incrementing the Desired Count in the OpenShift web console. Choose the method you prefer.

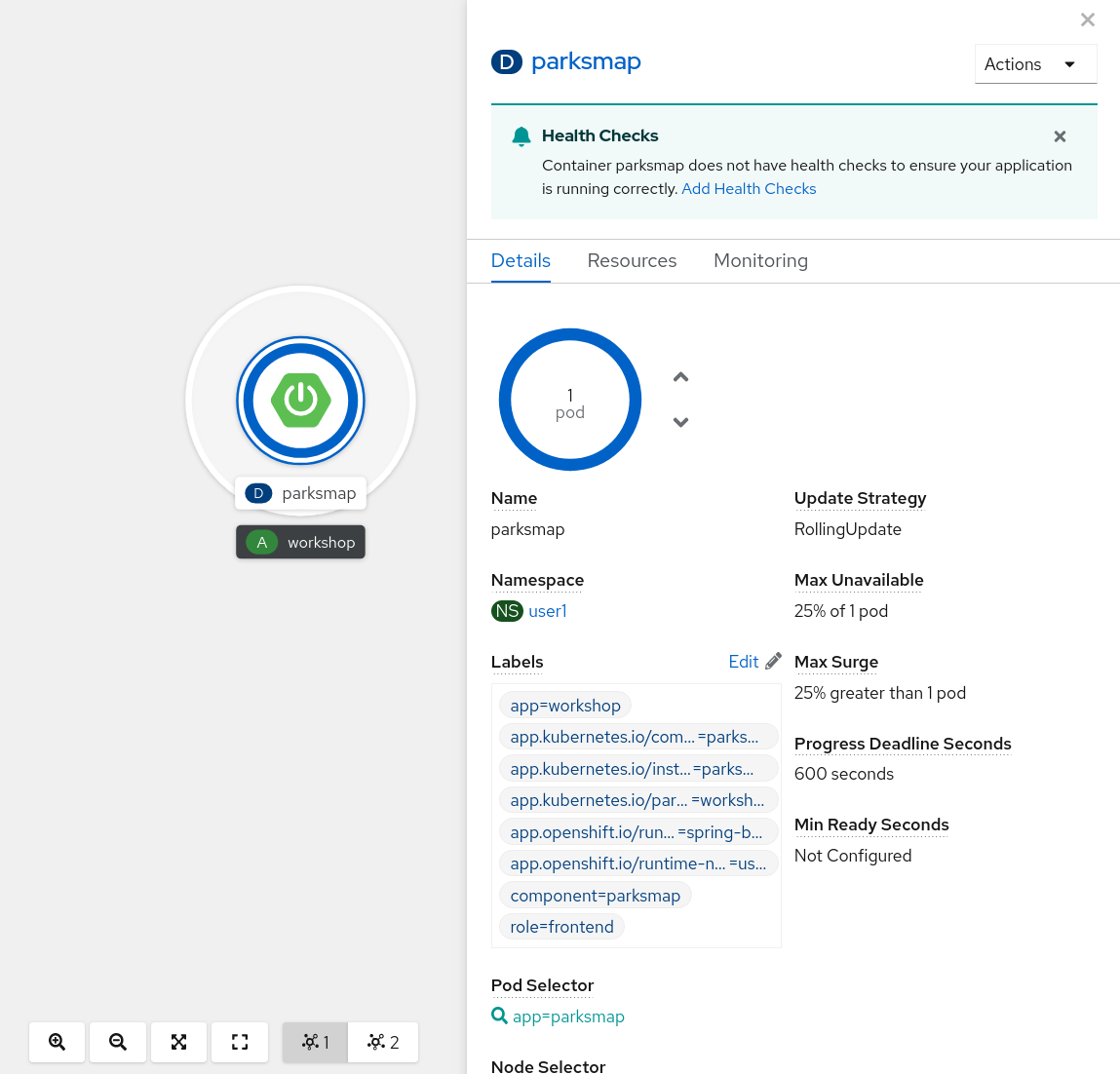

oc scale --replicas=2 deployment/parksmap

Alternatively, you can scale up to two Pods in the Developer Perspective. From the Topology view, click the parksmap Deployment and select the Details tab:

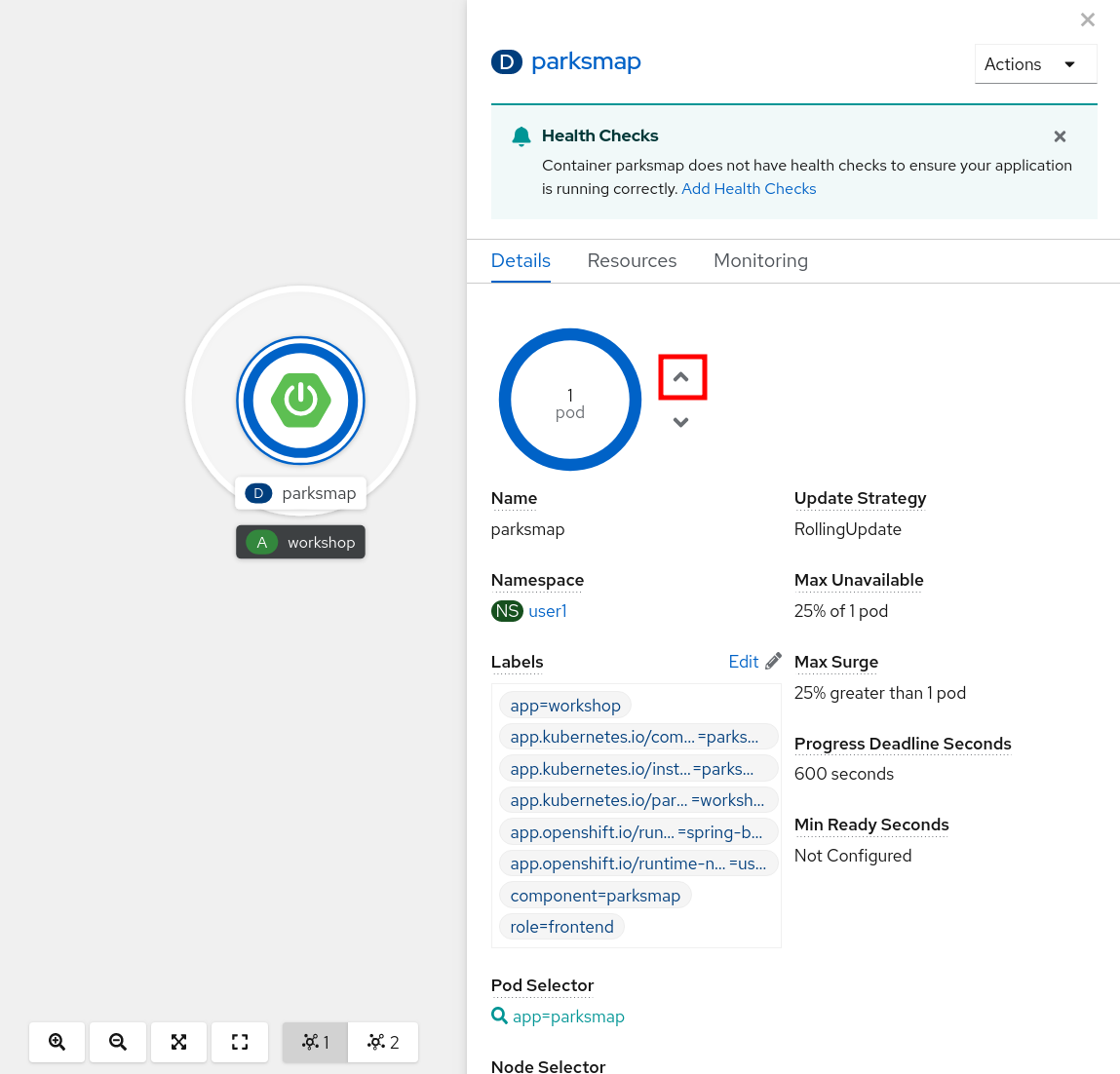

Next, click the ^ icon next to the Pod visualization to scale up to 2 pods.

To verify that you changed the number of replicas, issue the following command:

oc get rs

NAME DESIRED CURRENT READY AGE

parksmap-65c4f8b676 2 2 2 23m

You can see that you now have 2 replicas. Verify the number of Pods with the oc get pods command:

oc get pods

NAME READY STATUS RESTARTS AGE

parksmap-65c4f8b676-fxcrq 1/1 Running 0 92s

parksmap-65c4f8b676-k5gkk 1/1 Running 0 24m

Lastly, verify that the Service accurately reflects two endpoints:

oc describe svc parksmap

You will see output resembling the following. Notice that the Service now targets two Endpoints:

Name: parksmap

Namespace: user1

Labels: app=workshop

app.kubernetes.io/component=parksmap

app.kubernetes.io/instance=parksmap

app.kubernetes.io/part-of=workshop

component=parksmap

role=frontend

Annotations: openshift.io/generated-by: OpenShiftWebConsole

Selector: app=parksmap,deploymentconfig=parksmap

Type: ClusterIP

IP: 172.30.22.209

Port: 8080-tcp 8080/TCP

TargetPort: 8080/TCP

Endpoints: 10.128.2.90:8080,10.131.0.40:8080

Session Affinity: None

Events: <none>

Another way to look at a Service's endpoints is with the following:

oc get endpoints parksmap

You will see output resembling the following example:

NAME ENDPOINTS AGE

parksmap 10.128.2.90:8080,10.131.0.40:8080 45m

Your IP addresses will likely be different, as each Pod receives a unique IP within the OpenShift environment. The endpoint list is a quick way to see how many Pods are behind a Service.
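If you would rather get a number than count addresses by eye, the sketch below shows one possible approach. The `count_endpoints` helper is hypothetical (it is not an `oc` feature); it simply counts the `address:port` pairs in a comma-separated list such as the ENDPOINTS column above.

```shell
# count_endpoints: print how many address:port pairs a comma-separated list holds
# (hypothetical helper, defined here only for illustration)
count_endpoints() {
  printf '%s\n' "$1" | tr ',' '\n' | grep -c ':'
}

# Sample value copied from the example output above
count_endpoints "10.128.2.90:8080,10.131.0.40:8080"

# Against a live cluster: list the Pod IPs behind the Service and count them
if command -v oc >/dev/null 2>&1; then
  ips=$(oc get endpoints parksmap -o jsonpath='{.subsets[*].addresses[*].ip}')
  echo "$ips" | wc -w
fi
```

The jsonpath query reads only ready addresses, so the count should match the number of Pods that are currently able to receive traffic.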

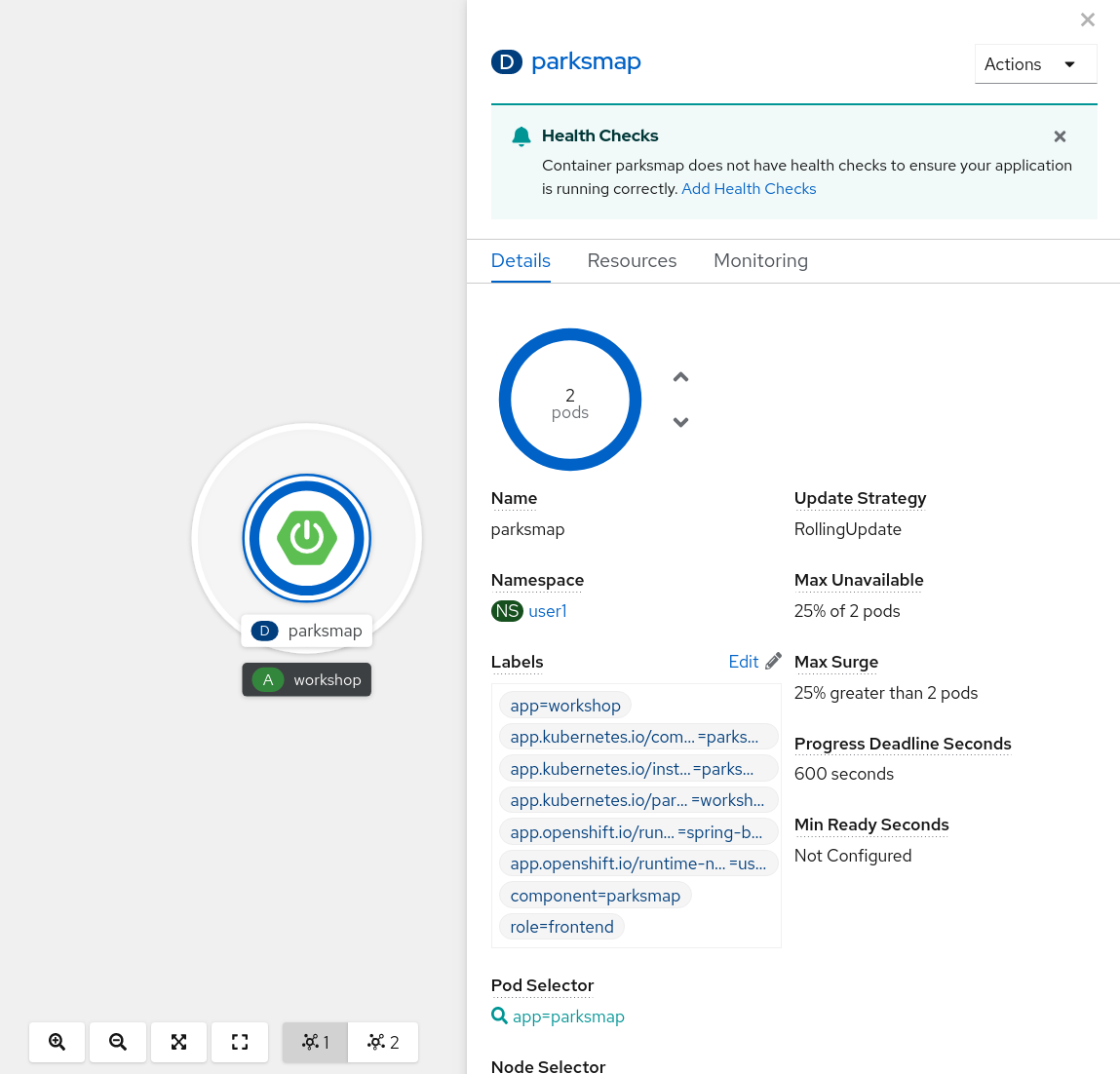

You can also see that both Pods are running in the Developer Perspective:

Overall, that’s how simple it is to scale an application. Application scaling can happen extremely quickly because OpenShift is launching new instances of an existing image, especially if that image is already cached on the node that the new Pod is scheduled on.

Application "Self Healing"

Because OpenShift’s RSs are constantly monitoring to see that the desired number of Pods are running, you might expect OpenShift to "fix" the situation if the desired replica count doesn’t match the current number.

Since you have two Pods running right now, you can see what happens if you "accidentally" kill one. Run the oc get pods command again, and choose a Pod name. Then, do the following:

oc delete pod parksmap-65c4f8b676-k5gkk && oc get pods

pod "parksmap-65c4f8b676-k5gkk" deleted

NAME READY STATUS RESTARTS AGE

parksmap-65c4f8b676-bjz5g 0/1 ContainerCreating 0 9s

parksmap-65c4f8b676-fxcrq 1/1 Running 0 4m48s

Did you notice anything? One Pod has been deleted, but a new Pod is already being created in its place.
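To watch the recovery finish, you could poll the Deployment until the ready count catches up with the desired count. The following is a minimal sketch, not part of the official lab steps; the `settled` helper, the 30-attempt limit, and the 2-second pause are all assumptions made for the example.

```shell
# settled DESIRED READY: succeed when the ready count equals the desired count.
# An empty READY (the field is omitted while no Pod is ready) is treated as 0.
settled() {
  [ -n "$1" ] && [ "$1" = "${2:-0}" ]
}

# Only poll the cluster when the oc client is installed
if command -v oc >/dev/null 2>&1; then
  tries=0
  while [ "$tries" -lt 30 ]; do
    desired=$(oc get deployment parksmap -o jsonpath='{.spec.replicas}')
    ready=$(oc get deployment parksmap -o jsonpath='{.status.readyReplicas}')
    if settled "$desired" "$ready"; then
      echo "parksmap is back to $desired ready replicas"
      break
    fi
    tries=$((tries + 1))
    sleep 2
  done
fi
```

The comparison inside `settled` is the same desired-versus-current check that the ReplicaSet controller performs continuously on your behalf.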

Additionally, OpenShift provides rudimentary capabilities around checking the liveness and/or readiness of application instances. If the basic checks are insufficient, OpenShift also allows you to run a command inside the container in order to perform the check. That command could be a script that uses any installed language.
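For a sense of how such checks are declared, the sketch below previews two probes with `oc set probe`. The `/` path, the 5-second delay, and the `echo ok` command are placeholders chosen for illustration, not documented parksmap health endpoints. Both commands use `--dry-run=client`, so they print the resulting object rather than changing your Deployment.

```shell
# Port taken from the Service output earlier in this lab
PROBE_PORT=8080

# Only query the cluster when the oc client is installed
if command -v oc >/dev/null 2>&1; then
  # HTTP readiness check; the "/" path is an assumption
  oc set probe deployment/parksmap --readiness \
    --get-url="http://:${PROBE_PORT}/" --initial-delay-seconds=5 \
    --dry-run=client -o yaml

  # Command-based liveness check: healthy as long as the command exits 0
  oc set probe deployment/parksmap --liveness \
    --dry-run=client -o yaml -- echo ok
fi
```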

Based on these health checks, if OpenShift decided that your parksmap application instance wasn’t healthy, it would delete the instance and then create a new one in its place, always ensuring that the desired number of replicas was in place.

More information on probing applications is available in the Application Health section of the documentation and later in this guide.

Exercise: Scale Down

Before we continue, go ahead and scale your application down to a single instance. Feel free to do this using whatever method you like.
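If you prefer the CLI, one way to do this mirrors the earlier scale-up command. The sketch assumes the Deployment is still named parksmap.

```shell
TARGET_REPLICAS=1

# Only run against the cluster when the oc client is installed
if command -v oc >/dev/null 2>&1; then
  oc scale --replicas="$TARGET_REPLICAS" deployment/parksmap
  # Print the desired replica count to confirm the change was recorded
  oc get deployment parksmap -o jsonpath='{.spec.replicas}{"\n"}'
fi
```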

| Failure to scale down to 1 Pod will cause unexpected behavior in later sections. This is due to how the application has been coded, and not related to OpenShift. |